
The future is here. In this future, machines comprehend the world around them much as people do. Computers can drive automobiles, help diagnose diseases, and make accurate forecasts.

This may seem like science fiction, but deep learning models are making it a reality.

These sophisticated algorithms are revealing the secrets of artificial intelligence, allowing computers to self-learn and develop. In this post, we’ll delve into the realm of deep learning models.

We will also investigate the enormous potential they have for changing our lives. Prepare to learn about cutting-edge technology that is shaping humanity’s future.

What Exactly Are Deep Learning Models?

Have you ever played a game in which you have to identify the differences between two images?

It is fun, but it can also be tough, right? Imagine being able to teach a computer to play that game and win almost every time. Deep learning models can accomplish just that!

Deep learning models are like super-smart machines that can examine a large number of images and determine what they have in common. They accomplish this by breaking each image down into smaller parts and studying those parts.

They then apply what they’ve learned to identify patterns and make predictions about fresh images they’ve never seen before.

Deep learning models are artificial neural networks that can learn and extract complicated patterns and features from massive datasets. These models are made up of several layers of interconnected nodes, or neurons, that process and transform incoming data to generate an output.

Deep learning models are particularly well-suited to jobs requiring great accuracy and precision, such as image identification, speech recognition, natural language processing, and robotics.

They’ve been utilized in everything from self-driving cars to medical diagnostics, recommender systems, and predictive analytics.

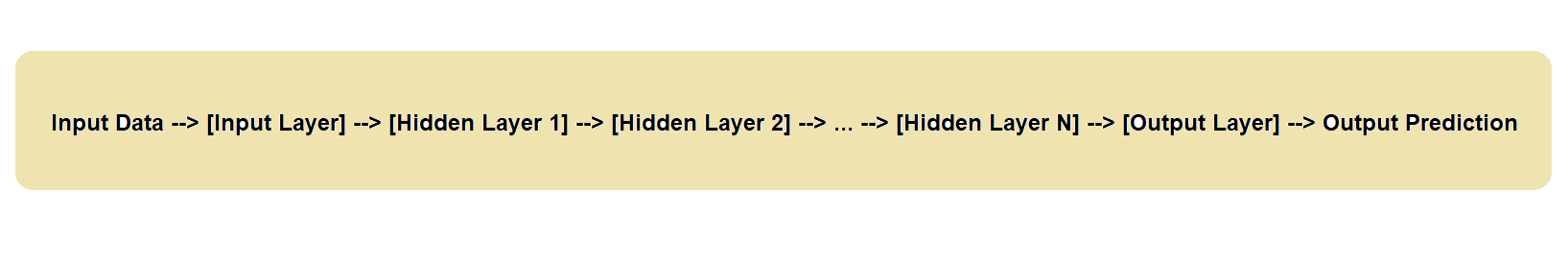

Here’s a simplified version of the visualization to illustrate data flow in a deep learning model.

The input data flows into the model’s input layer, which then passes the data through a number of hidden layers before providing an output prediction.

Each hidden layer performs a series of mathematical operations on the data it receives and passes the result to the next layer. The last layer produces the final prediction.
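The data flow described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the layer sizes and all weight values are hypothetical and hand-picked, whereas a real model learns them from data.

```python
import math

def relu(values):
    """Apply the ReLU activation to each value in a list."""
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum of the inputs plus a bias per neuron."""
    return [
        sum(w * x for w, x in zip(neuron_weights, inputs)) + b
        for neuron_weights, b in zip(weights, biases)
    ]

def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    exps = [math.exp(v - max(values)) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical weights: 3 inputs -> 2 hidden neurons -> 2 output classes.
HIDDEN_W = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]]
HIDDEN_B = [0.0, 0.1]
OUTPUT_W = [[1.0, -1.0], [-1.0, 1.0]]
OUTPUT_B = [0.0, 0.0]

def forward(inputs):
    """Pass the input through the hidden layer, then through the output layer."""
    hidden = relu(dense(inputs, HIDDEN_W, HIDDEN_B))
    return softmax(dense(hidden, OUTPUT_W, OUTPUT_B))

probabilities = forward([1.0, 2.0, 3.0])
print(probabilities)  # one probability per output class
```

Deeper models repeat the same pattern with more hidden layers between the input and the output.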

Now, let’s look at the main types of deep learning models and how we can use them in everyday life.

1. Convolutional Neural Networks (CNNs)

CNNs are a type of deep learning model that has transformed the field of computer vision. They are used to classify images, recognize objects, and segment images. The structure and function of the human visual cortex informed the design of CNNs.

How Do They Work?

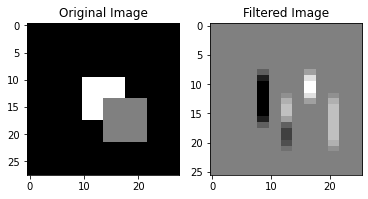

A CNN is made up of a number of convolutional layers, pooling layers, and fully connected layers. The input is an image, and the output is a prediction of the class label of the image.

A CNN’s convolutional layers build feature maps by sliding a set of filters over the input image and computing a dot product between each filter and the image patch beneath it. The pooling layers reduce the size of each feature map by downsampling it.

Finally, the feature map is used by the fully connected layers to predict the image’s class label.
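To make the convolution and pooling steps concrete, here is a small sketch in plain Python. The 5x5 image and the 2x2 edge filter are made-up examples, and the code assumes no padding and a stride of 1. In a trained CNN the filter values are learned rather than chosen by hand.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image and take a dot product at each position."""
    k = len(kernel)
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(k) for j in range(k))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def max_pool(feature_map, size=2):
    """Downsample by keeping the largest value in each non-overlapping window."""
    return [
        [
            max(feature_map[r + i][c + j] for i in range(size) for j in range(size))
            for c in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for r in range(0, len(feature_map) - size + 1, size)
    ]

# A 5x5 image with a vertical edge: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hypothetical 2x2 filter that responds to dark-to-bright transitions.
edge_filter = [[-1, 1], [-1, 1]]

feature_map = convolve2d(image, edge_filter)  # 4x4 map, strongest at the edge
pooled = max_pool(feature_map)                # 2x2 map after downsampling

print(feature_map)  # [[0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0]]
print(pooled)       # [[2, 0], [2, 0]]
```

The feature map lights up only in the column where the edge sits, and pooling keeps that signal while shrinking the map.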

Why are CNNs Important?

CNNs are essential because they can learn to detect patterns and features in images that people find difficult to notice. Using large datasets, CNNs can be trained to recognize features like edges, corners, and textures. After learning these features, a CNN can use them to identify objects in new photos. CNNs have demonstrated state-of-the-art performance on a variety of image recognition tasks.

Where Do We Use CNNs?

Healthcare, the auto industry, and retail are just a few sectors that employ CNNs. In the healthcare industry, they can be beneficial for illness diagnosis, medication development, and medical image analysis.

In the automobile sector, they help with lane detection, object detection, and autonomous driving. They are also widely used in retail for visual search, image-based product recommendation, and inventory control.

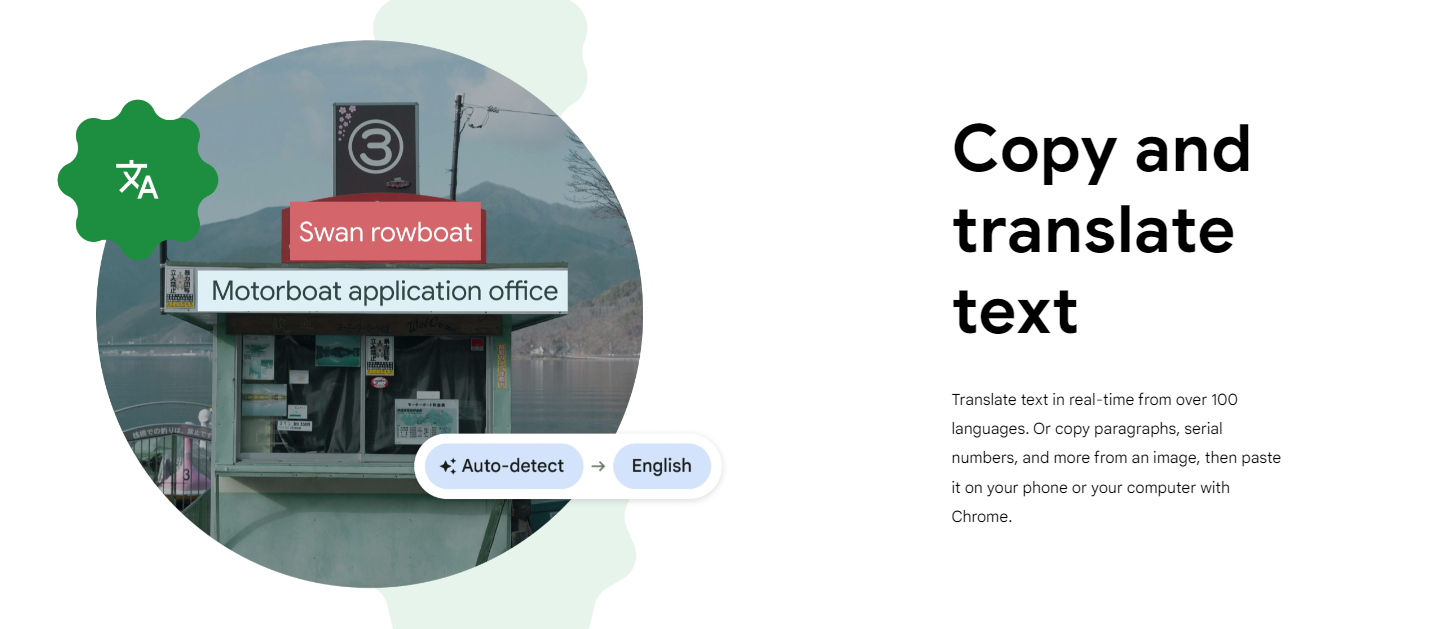

For example, Google employs CNNs in a variety of applications, including Google Lens, a popular image recognition tool. The app uses CNNs to analyze photographs and give users information.

Google Lens, for instance, can recognize things in an image and offer details about them, such as the type of flower.

It can also extract text from a picture and translate it into multiple languages. Google Lens is able to give users useful information because CNNs help it accurately identify items and extract features from photos.

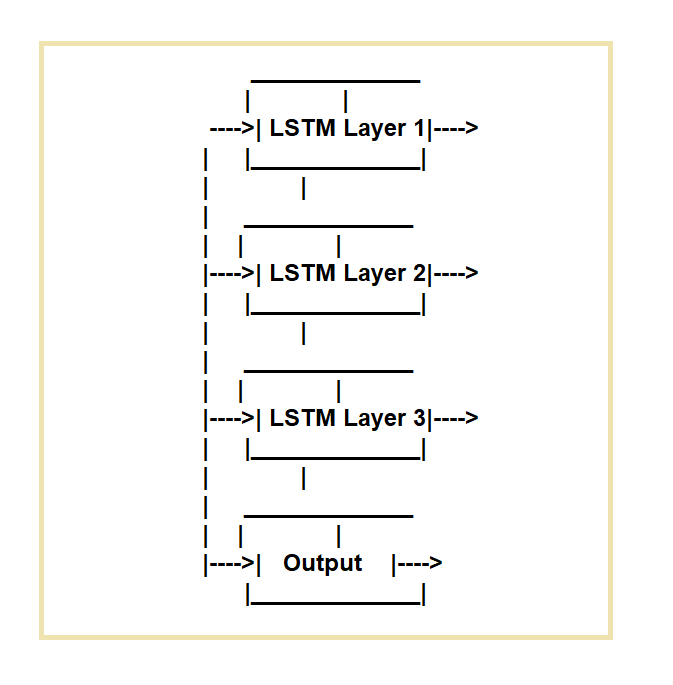

2. Long Short-Term Memory (LSTM) networks

Long Short-Term Memory (LSTM) networks were designed to address the shortcomings of regular recurrent neural networks (RNNs). LSTM networks are well suited to tasks that require processing sequences of data over time.

They function by employing a dedicated memory cell and three gating mechanisms that regulate the flow of information into and out of the cell: the input gate, the forget gate, and the output gate.

The input gate regulates the flow of data into the memory cell, the forget gate regulates the deletion of data from the cell, and the output gate regulates the flow of data out of the cell.
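The interplay of the three gates is easier to follow in code. The sketch below runs a single-unit LSTM cell with scalar inputs in plain Python. The weight values are hypothetical, and a real LSTM works with vectors and learns its weights during training.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def lstm_step(x, h_prev, c_prev, params):
    """One time step of a single-unit LSTM cell with scalar input and state."""
    def gate(name, activation):
        # Every gate looks at the current input and the previous hidden state.
        w_x, w_h, b = params[name]
        return activation(w_x * x + w_h * h_prev + b)

    i = gate("input", sigmoid)         # how much new information to write
    f = gate("forget", sigmoid)        # how much of the old cell state to keep
    o = gate("output", sigmoid)        # how much of the cell state to expose
    g = gate("candidate", math.tanh)   # the new information itself

    c = f * c_prev + i * g             # update the memory cell
    h = o * math.tanh(c)               # hidden state passed to the next step
    return h, c

# Hypothetical (w_x, w_h, bias) values per gate.
params = {
    "input": (0.5, 0.1, 0.0),
    "forget": (0.2, 0.1, 1.0),
    "output": (0.3, 0.1, 0.0),
    "candidate": (0.8, 0.2, 0.0),
}

h, c = 0.0, 0.0
for x in [1.0, 0.5, -0.5]:             # process a short sequence step by step
    h, c = lstm_step(x, h, c, params)
print(h, c)
```

Because the forget gate multiplies the previous cell state, a value close to 1 lets information survive across many time steps, which is what gives the network its long-term memory.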

What is their Significance?

LSTM networks are useful because they can successfully represent and forecast data sequences with long-term relationships. They can record and retain information about previous inputs, allowing them to make more accurate predictions about future inputs.

Speech recognition, handwriting recognition, natural language processing, and picture captioning are just a few of the applications that have made use of LSTM networks.

Where Do We Use LSTM Networks?

Many software and technology applications employ LSTM networks, including speech recognition systems, natural language processing tools like sentiment analysis, machine translation systems, and text and image generation systems.

They’ve also been utilized in the creation of self-driving cars and robots, as well as in the finance industry to detect fraud and anticipate stock market movements.

3. Generative Adversarial Networks (GANs)

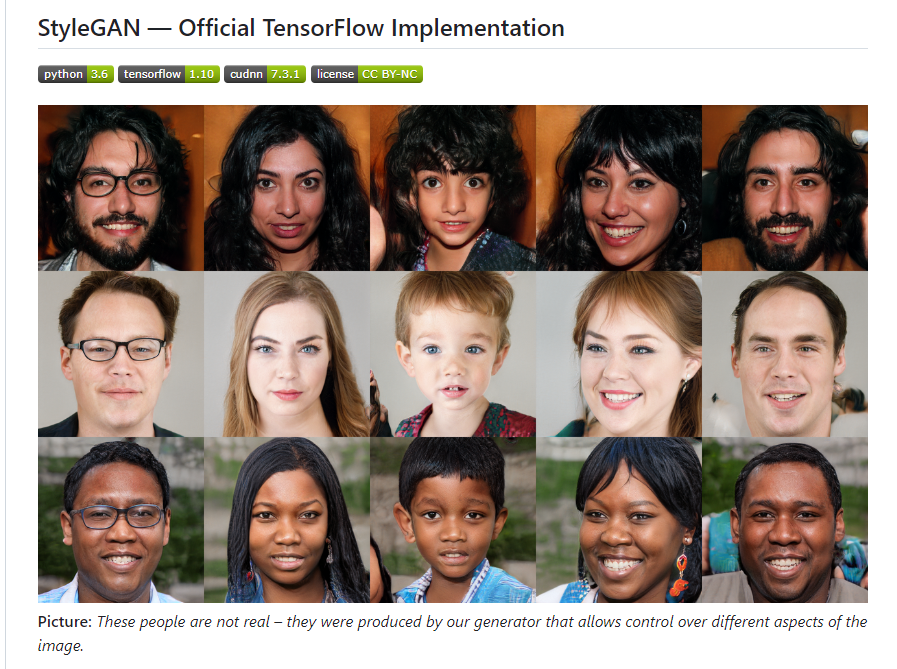

GANs are a deep learning technique used to generate new data samples that are similar to a given dataset. GANs are made up of two neural networks: a generator that learns to produce new samples and a discriminator that learns to distinguish between genuine and generated samples.

These two networks are trained together in an adversarial fashion until the generator can produce samples that are hard to distinguish from real ones.
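One way to picture the competition between the two networks is through their loss functions. The sketch below assumes the standard binary cross-entropy formulation, with the commonly used non-saturating loss for the network that produces samples (the generator). The scores are made-up outputs of the network that judges samples (the discriminator), not results from a trained model.

```python
import math

def discriminator_loss(real_scores, fake_scores):
    """Binary cross-entropy: push real samples toward 1 and generated samples toward 0."""
    real_term = sum(-math.log(s) for s in real_scores) / len(real_scores)
    fake_term = sum(-math.log(1.0 - s) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term

def generator_loss(fake_scores):
    """Non-saturating loss: low when generated samples are scored close to 1."""
    return sum(-math.log(s) for s in fake_scores) / len(fake_scores)

# Hypothetical discriminator outputs (probability that a sample is real).
real_scores = [0.9, 0.8]
early_fake_scores = [0.1, 0.2]   # early training: generated samples are easy to spot
late_fake_scores = [0.5, 0.5]    # later: the discriminator can only guess

print(generator_loss(early_fake_scores), generator_loss(late_fake_scores))
print(discriminator_loss(real_scores, early_fake_scores),
      discriminator_loss(real_scores, late_fake_scores))
```

As the generated samples improve, the generator’s loss falls while the discriminator’s loss rises, which is the tug-of-war that drives training.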

Why Do We Use GANs?

GANs are significant because of their capacity to produce high-quality synthetic data that may be utilized for a variety of applications, including picture and video production, text generation, and even music generation.

GANs have also been used for data augmentation, which is the generation of synthetic data to supplement real-world data and improve the performance of machine-learning models.

Furthermore, by creating synthetic data that can be used to train models and simulate experiments, GANs have the potential to transform sectors such as medicine and drug development.

Applications of GANs

GANs can supplement datasets, create new images or videos, and even generate synthetic data for scientific simulations. They have the potential to be employed in applications ranging from entertainment to medicine.

NVIDIA’s StyleGAN2, for example, has been used to create high-quality synthetic images of faces and artwork.

4. Deep Belief Networks (DBNs)

Deep Belief Networks (DBNs) are artificial intelligence systems that can learn to spot patterns in data. They accomplish this by passing the data through a stack of layers, with each layer learning a more abstract representation than the one below it.

DBNs can learn from data without being told what it represents, that is, without labels (this is referred to as “unsupervised learning”). This makes them extremely valuable for detecting patterns in data that a person would find difficult or impossible to discern.

What Makes DBNs Significant?

DBNs are significant because of their capacity to learn hierarchical data representations. These representations can be utilized for a variety of applications like classification, anomaly detection, and dimensionality reduction.

A significant benefit of DBNs is their capacity for unsupervised pre-training, which can improve the performance of deep learning models when only a small amount of labeled data is available.

What Are the Applications of DBNs?

One of the most significant applications is object detection, in which DBNs are used to recognize certain types of objects such as airplanes, birds, and humans. They are also used for image generation and classification, motion detection in videos, and natural language understanding for speech processing.

Furthermore, DBNs have been applied to datasets for estimating human poses. DBNs are a useful tool for a variety of industries, including healthcare, banking, and technology.

5. Deep Reinforcement Learning Networks (DRLs)

Deep Reinforcement Learning Networks (DRLs) integrate deep neural networks with reinforcement learning techniques to allow agents to learn in a complicated environment via trial and error.

DRLs are used to teach agents how to optimize a reward signal by interacting with their surroundings and learning from their mistakes.
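The trial-and-error loop can be illustrated with a deliberately simplified example. The sketch below uses tabular Q-learning on a made-up five-cell corridor. It is not a deep network. In deep reinforcement learning, a neural network takes the place of the table so that the agent can cope with large or continuous state spaces. The environment, rewards, and hyperparameters are all assumptions chosen for illustration.

```python
import random

N_STATES = 5          # corridor cells 0..4; reaching cell 4 ends the episode
ACTIONS = (-1, +1)    # move left or move right
ALPHA, GAMMA = 0.5, 0.9

def step(state, action):
    """Environment: move within the corridor; reward 1 only on reaching the last cell."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward

def train(episodes=500, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # q[state][action_index]
    for _ in range(episodes):
        state = 0
        for _ in range(100):                    # cap the episode length
            a = rng.randrange(2)                # explore by acting at random
            next_state, reward = step(state, ACTIONS[a])
            # Q-learning update: move the estimate toward
            # reward + discounted value of the best next action.
            target = reward + GAMMA * max(q[next_state])
            q[state][a] += ALPHA * (target - q[state][a])
            state = next_state
            if state == N_STATES - 1:
                break
    return q

q_table = train()
# Greedy policy: the best-valued action in each non-terminal cell.
policy = [ACTIONS[row.index(max(row))] for row in q_table[:-1]]
print(policy)
```

After enough episodes, the greedy policy moves right in every cell, even though the agent was never told where the reward is.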

What Makes Them Remarkable?

They’ve been used effectively in a variety of applications, including gaming, robotics, and autonomous driving. DRLs are important because they can learn directly from raw sensory input, allowing agents to make decisions based on their interactions with the environment.

Important Applications

DRLs are employed in real-world settings because they can handle complex decision-making problems.

Several prominent software platforms support DRL research and development, including OpenAI’s Gym, Unity’s ML-Agents, and DeepMind Lab. AlphaGo, built by Google’s DeepMind, for example, employs DRL to play the board game Go at a world-champion level.

Another use of DRL is in robotics, where it is used to control the movements of robotic arms to execute tasks such as gripping things or stacking blocks. DRLs have many uses and are a useful tool for training agents to learn and make decisions in complicated settings.

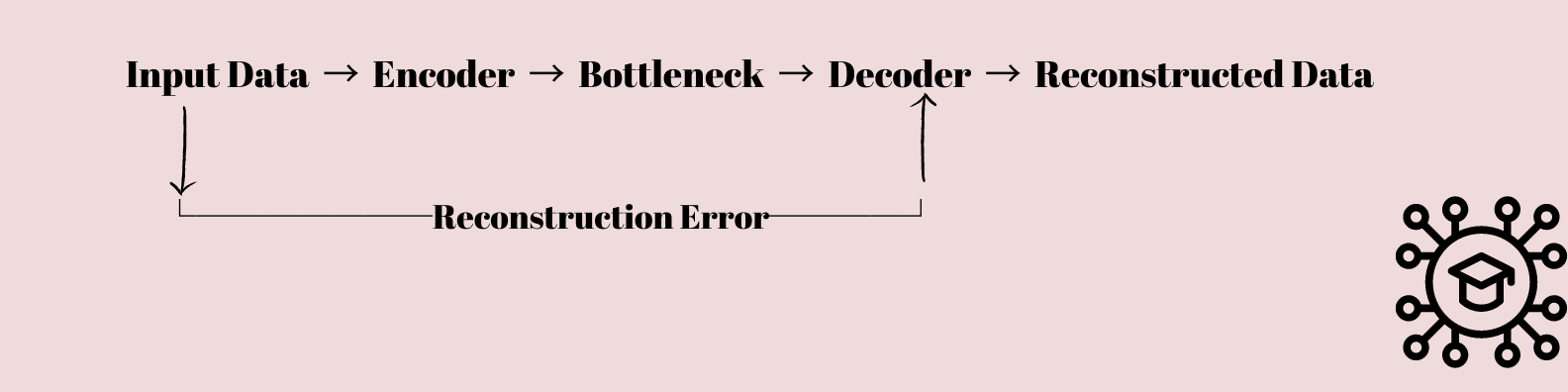

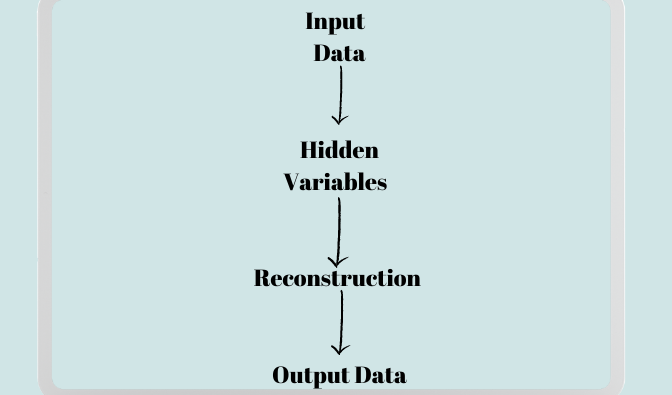

6. Autoencoders

Autoencoders are an interesting type of neural network that has caught the interest of both scholars and data scientists. They are fundamentally designed to learn how to compress and restore data.

The input data is fed through a succession of layers that gradually lower the dimensionality of the data until it is compressed into a bottleneck layer with fewer nodes than the input and output layers.

This compressed representation is then used to recreate the original input data using a sequence of layers that gradually raise the data’s dimensionality back to its original shape.
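The compress-then-restore idea can be shown with a toy example. The sketch below is a tiny linear autoencoder with a two-node bottleneck. Its weights are hand-picked for illustration, whereas a real autoencoder learns its weights by minimizing the reconstruction error on training data.

```python
def linear_layer(inputs, weights):
    """Multiply an input vector by a weight matrix (one row of weights per output node)."""
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]

# Hypothetical, hand-picked weights.
ENCODER = [[0.5, 0.5, 0.0, 0.0],   # 4 inputs -> 2 bottleneck nodes
           [0.0, 0.0, 0.5, 0.5]]
DECODER = [[1.0, 0.0],             # 2 bottleneck nodes -> 4 outputs
           [1.0, 0.0],
           [0.0, 1.0],
           [0.0, 1.0]]

def encode(x):
    return linear_layer(x, ENCODER)

def decode(code):
    return linear_layer(code, DECODER)

def reconstruction_error(x):
    """Mean squared error between the input and its reconstruction."""
    restored = decode(encode(x))
    return sum((a - b) ** 2 for a, b in zip(x, restored)) / len(x)

redundant = [3.0, 3.0, 7.0, 7.0]   # only two independent values
irregular = [3.0, 5.0, 7.0, 7.0]   # breaks the pattern the weights expect

print(encode(redundant))                # [3.0, 7.0]
print(reconstruction_error(redundant))  # 0.0
print(reconstruction_error(irregular))  # 0.5
```

Inputs that follow the expected pattern pass through the bottleneck without loss, while inputs that deviate from it come back with a larger reconstruction error.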

Why is It Important?

Autoencoders are a crucial component of deep learning because they make feature extraction and data reduction possible.

They are able to identify the key elements of the incoming data and translate them into a compressed form that can then be applied to other tasks like classification, clustering, or the creation of new data.

Where Do We Use Autoencoders?

Anomaly detection, natural language processing, and computer vision are just a few of the disciplines where autoencoders are used. Autoencoders, for instance, can be used for image compression, image denoising, and picture synthesis in computer vision.

In natural language processing, we can use autoencoders for tasks like text generation, text classification, and text summarization. In anomaly detection, they can flag data that deviates from the norm, because unusual inputs tend to be reconstructed poorly.

7. Capsule Networks

Capsule Networks are a newer deep learning architecture that was proposed as an alternative to Convolutional Neural Networks (CNNs).

Capsule Networks are based on the notion of grouping neurons into units called capsules. Each capsule is responsible for recognizing the presence of a certain item in an image and encoding its attributes, such as orientation and position, into its output vector. Capsule Networks can therefore handle spatial relationships and changes in viewpoint better than CNNs.
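A small sketch can show how a capsule’s output vector carries information. It assumes the "squash" nonlinearity from the original dynamic-routing formulation of Capsule Networks, in which the vector’s length acts like the probability that the item is present and its direction encodes the item’s attributes. The input vectors are made up for illustration.

```python
import math

def squash(vector):
    """Shrink a capsule's raw output so its length lies in [0, 1), keeping its direction."""
    squared_norm = sum(v * v for v in vector)
    if squared_norm == 0.0:
        return [0.0 for _ in vector]
    scale = squared_norm / (1.0 + squared_norm) / math.sqrt(squared_norm)
    return [scale * v for v in vector]

def length(vector):
    return math.sqrt(sum(v * v for v in vector))

weak = squash([0.1, 0.0])     # short raw vector: item probably absent
strong = squash([6.0, 8.0])   # long raw vector: item probably present

print(length(weak))    # close to 0
print(length(strong))  # close to 1
```

The direction of the vector is unchanged by the squash step, so the attribute information survives while the length is normalized.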

Why Do We Choose Capsule Networks over CNNs?

Capsule Networks are useful because they address the difficulty CNNs have in capturing hierarchical relationships between objects in a picture. CNNs can recognize objects of various sizes but struggle to grasp how these objects relate to one another.

Capsule Networks, on the other hand, can learn to recognize things and their pieces, as well as how they are placed spatially in an image, making them a viable contender for computer vision applications.

Areas of Applications

Capsule Networks have already demonstrated promising results in a variety of applications, including image classification, object identification, and picture segmentation.

They’ve been used to distinguish objects in medical images, recognize people in videos, and even create 3D models out of 2D images.

To increase their performance, Capsule Networks have been combined with other deep learning architectures such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs). Capsule Networks are predicted to play an increasingly vital role in enhancing computer vision technologies as the science of deep learning evolves.

For example, NiBabel is a well-known Python library for reading and writing neuroimaging file formats. It can be used to load medical images that a segmentation model, such as a Capsule Network, then processes.

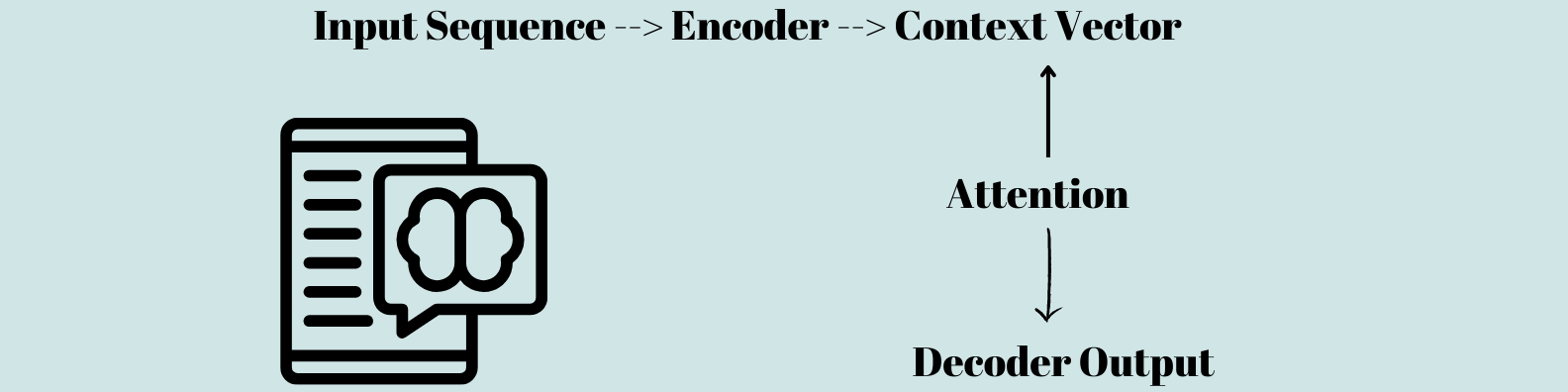

8. Attention-based models

Attention-based models, which rely on so-called attention mechanisms, are deep learning models that aim to increase the accuracy of machine learning systems. They work by concentrating on the most relevant parts of the incoming data, resulting in more efficient and effective processing.

In natural language processing tasks such as machine translation and sentiment analysis, attention mechanisms have proven to be quite successful.

What is Their Significance?

Attention-based models are useful because they enable more effective and efficient processing of complicated data.

Many traditional neural networks treat all input data as equally important, which can lead to slower processing and decreased accuracy. Attention mechanisms concentrate on the crucial parts of the input data, allowing for faster and more accurate predictions.
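The idea of weighting inputs by relevance can be sketched with scaled dot-product attention for a single query. This formulation is an assumption, since it is only one common way to implement attention, and the key, value, and query vectors are invented for illustration.

```python
import math

def softmax(scores):
    exps = [math.exp(s - max(scores)) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)   # how much focus each input receives
    dim = len(values[0])
    context = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return weights, context

# Three hypothetical input items; the query is most similar to the second key.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, -1.0]]
values = [[10.0], [20.0], [30.0]]
query = [0.0, 4.0]

weights, context = attend(query, keys, values)
print(weights)  # the second weight dominates
print(context)  # close to the second value, 20.0
```

The output is a weighted average of the values, so inputs that match the query contribute the most and irrelevant inputs are largely ignored.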

Areas of Usage

In the field of artificial intelligence, attention mechanisms have a broad range of applications, including natural language processing, picture and audio recognition, and even driverless vehicles.

Attention methods, for example, can be used to improve machine translation in natural language processing by allowing the system to focus on certain words or phrases that are essential to the context.

Attention methods in autonomous cars can be employed to assist the system in focusing on certain items or challenges in its surroundings.

9. Transformer Networks

Transformer networks are deep learning models that analyze and produce data sequences. They process all elements of the input sequence together rather than strictly one at a time, and they produce an output sequence of the same or a different length.

Transformer networks, unlike standard sequence-to-sequence models, do not process sequences using recurrent neural networks (RNNs). Instead, they employ self-attention processes to learn the links between the sequence’s pieces.
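Self-attention can be sketched in plain Python as follows. As a simplifying assumption, the queries, keys, and values here are the raw input vectors, whereas a real transformer first applies learned projections and also adds positional information. The token embeddings are invented for illustration.

```python
import math

def softmax(scores):
    exps = [math.exp(s - max(scores)) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(sequence):
    """Each position attends to every position in the same sequence."""
    scale = math.sqrt(len(sequence[0]))
    outputs = []
    for query in sequence:   # positions are independent, so they can run in parallel
        weights = softmax(
            [sum(q * k for q, k in zip(query, key)) / scale for key in sequence]
        )
        outputs.append([
            sum(w * vec[d] for w, vec in zip(weights, sequence))
            for d in range(len(query))
        ])
    return outputs

# Hypothetical 2-dimensional embeddings for a three-token sequence.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
mixed = self_attention(tokens)
print(mixed)
```

Each output vector blends information from the whole sequence, and no position has to wait for the previous one to be processed, which is the key difference from an RNN.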

What Is the Importance of Transformer Networks?

Transformer networks have grown in popularity in recent years as a result of their strong performance in natural language processing tasks.

They are especially well-suited for text generation tasks such as language translation, text summarization, and dialogue generation.

Because they do not process a sequence step by step, transformer networks can be parallelized during training much more easily than RNN-based models, making them a preferred choice for large-scale applications.

Where Can You Find Transformer Networks?

Transformer networks are widely employed in a broad range of applications, most notably natural language processing.

The GPT (Generative Pre-trained Transformer) series is a prominent family of transformer-based models that has been used for tasks such as language translation, text summarization, and chatbot development.

BERT (Bidirectional Encoder Representations from Transformers) is another common transformer-based model that has been utilized for natural language comprehension applications such as question answering and sentiment analysis.

Implementations of both GPT and BERT are widely available in PyTorch, an open-source deep learning framework that is popular for developing transformer-based models. The original BERT release, however, was built with TensorFlow.

10. Restricted Boltzmann Machines (RBMs)

Restricted Boltzmann Machines (RBMs) are a sort of unsupervised neural network that learns in a generative manner. Because of their capacity to learn and extract essential characteristics from high-dimensional data, they have been widely employed in the fields of machine learning and deep learning.

RBMs are made up of two layers, visible and hidden. Every neuron in one layer is connected to the neurons in the other layer by weighted edges, but there are no connections within a layer. RBMs are designed to learn a probability distribution that describes the input data.

What are Restricted Boltzmann Machines?

RBMs employ a generative learning strategy. In RBMs, the visible layer represents the input data, while the hidden layer encodes the input data’s features. The weights between the visible and hidden layers indicate the strength of their connections.

RBMs adjust the weights and biases between the layers during training using a technique known as contrastive divergence. Contrastive divergence is an unsupervised learning strategy that approximately maximizes the likelihood of the training data under the model.
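A single contrastive divergence step can be sketched in plain Python as follows. This is a minimal CD-1 illustration for binary units, not a tuned implementation. The layer sizes, learning rate, initial weights, and training pattern are all assumptions.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def hidden_probs(visible, weights, hidden_bias):
    """P(h_j = 1 | v) for every hidden unit."""
    return [
        sigmoid(hidden_bias[j] + sum(visible[i] * weights[i][j] for i in range(len(visible))))
        for j in range(len(hidden_bias))
    ]

def visible_probs(hidden, weights, visible_bias):
    """P(v_i = 1 | h) for every visible unit."""
    return [
        sigmoid(visible_bias[i] + sum(hidden[j] * weights[i][j] for j in range(len(hidden))))
        for i in range(len(visible_bias))
    ]

def cd1_update(v0, weights, visible_bias, hidden_bias, rng, lr=0.1):
    """One contrastive divergence (CD-1) step on a single binary training vector."""
    # Positive phase: hidden activity driven by the data.
    h0_probs = hidden_probs(v0, weights, hidden_bias)
    h0 = [1.0 if rng.random() < p else 0.0 for p in h0_probs]
    # Negative phase: reconstruct the visible layer, then recompute the hidden layer.
    v1 = visible_probs(h0, weights, visible_bias)
    h1_probs = hidden_probs(v1, weights, hidden_bias)
    # Move toward the data statistics and away from the reconstruction statistics.
    for i in range(len(v0)):
        for j in range(len(h0_probs)):
            weights[i][j] += lr * (v0[i] * h0_probs[j] - v1[i] * h1_probs[j])
        visible_bias[i] += lr * (v0[i] - v1[i])
    for j in range(len(h0_probs)):
        hidden_bias[j] += lr * (h0_probs[j] - h1_probs[j])

rng = random.Random(0)
n_visible, n_hidden = 4, 2
weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_hidden)] for _ in range(n_visible)]
visible_bias = [0.0] * n_visible
hidden_bias = [0.0] * n_hidden

pattern = [1.0, 1.0, 0.0, 0.0]   # hypothetical binary training vector
for _ in range(200):
    cd1_update(pattern, weights, visible_bias, hidden_bias, rng)

reconstruction = visible_probs(
    hidden_probs(pattern, weights, hidden_bias), weights, visible_bias
)
print(reconstruction)
```

After repeated updates, the reconstruction assigns high probability to the units that were on in the training pattern and low probability to the units that were off.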

What is the significance of Restricted Boltzmann Machines?

RBMs are significant in machine learning and deep learning because they can learn and extract relevant characteristics from large amounts of data.

They’re very effective for picture and speech recognition, and they’ve been employed in a variety of applications like recommender systems, anomaly detection, and dimensionality reduction. RBMs can find patterns in vast datasets, resulting in superior predictions and insights.

Where may Restricted Boltzmann Machines be used?

Applications for RBMs include dimensionality reduction, anomaly detection, and recommendation systems. RBMs are particularly helpful for sentiment analysis and topic modeling in the context of natural language processing.

Deep belief networks, a kind of neural network used for speech and image recognition, are also built from RBMs. RBMs can be implemented with tools such as the Deep Belief Network Toolbox, TensorFlow, and Theano.

Wrap Up

Deep learning models are becoming more and more crucial in a variety of fields, including speech recognition, natural language processing, and computer vision.

Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) have shown great promise and are extensively used in many applications. However, all deep learning models have their advantages and disadvantages.

Researchers are also still looking into Restricted Boltzmann Machines (RBMs) and other varieties of deep learning models because they too have special advantages.

As the field of deep learning continues to advance, new and creative models are expected to emerge to handle harder problems.
