
Advanced analytics and machine learning programs are propelled by data, but access to that data can be difficult for academics due to challenges with privacy and business procedures.

Synthetic data, which can be shared and used in ways that real data cannot, is a promising new direction. However, this strategy is not without risks or disadvantages, so it’s crucial that businesses carefully consider where and how they use their resources.

In the current era of AI, we can also state that data is the new oil, but only a select few are sitting on a gusher. Therefore, a lot of people are producing their own fuel, which is both affordable and efficient. It is known as synthetic data.

In this post, we’ll take a detailed look at synthetic data—why you should use it, how to produce it, what makes it different from actual data, what use cases it can serve, and much more.

So, what is Synthetic Data?

When genuine data sets are inadequate in terms of quality, number, or diversity, synthetic data can be used to train AI models in place of real historical data.

When existing data doesn’t satisfy business requirements or has privacy risks when utilized to develop machine learning models, test software, or the like, synthetic data can be a significant tool for corporate AI efforts.

Simply put, synthetic data is frequently used in place of real data. More precisely, it is data that has been artificially generated and labeled by simulations or computer algorithms.

Synthetic data is information that has been created by a computer program artificially rather than as a result of actual occurrences. Companies can add synthetic data to their training data to cover all usage and edge situations, reduce the cost of data gathering, or satisfy privacy regulations.

Artificial data is now more accessible than ever thanks to improvements in processing power and data storage methods like the cloud. Synthetic data improves the creation of AI solutions that are more beneficial for all end-users, and that is undoubtedly a good development.

Why is synthetic data important, and why should you use it?

When training AI models, developers frequently need huge datasets with precise labeling. Neural networks tend to perform more accurately when they are trained on more varied data.

However, collecting and labeling these massive datasets, which can contain thousands or even millions of items, can be prohibitively expensive and time-consuming. Synthetic data can greatly reduce the cost of producing training data. For instance, a training image that costs $5 when purchased from a data labeling provider might cost only $0.05 if generated artificially.

Synthetic data can alleviate privacy concerns related to potentially sensitive data generated from the actual world while also reducing expenses.

It can also help reduce bias compared with real data, which may not accurately reflect the full range of facts about the real world. By including unusual events that represent plausible possibilities but are hard to obtain from real data, synthetic data can offer greater diversity.

Synthetic data could be a fantastic fit for your project for the reasons listed below:

1. The robustness of the model

Synthetic data gives your models access to more varied data without your having to collect it. You can train a face model on variants of the same person with different haircuts, facial hair, glasses, and head poses, and vary skin tone, ethnic traits, bone structure, freckles, and other characteristics to generate unique faces and make the model more robust.

2. Edge cases are taken into account

Machine learning algorithms generally work best with balanced datasets. Consider face recognition: where darker-skinned faces were underrepresented in the training data, companies that generated synthetic data of darker-skinned faces to fill the gaps (and some did just this) could improve the accuracy of their models and produce fairer ones. With the help of synthetic data, teams can cover all use cases, including edge cases where data is scarce or nonexistent.
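One simple way to fill a gap in an underrepresented class is to interpolate between real examples of that class. The sketch below is a simplified, SMOTE-style illustration and not a method the article prescribes; the function name `oversample_minority` and the two-feature toy rows are assumptions made up for the example.

```python
import random

def oversample_minority(minority, n_new, seed=0):
    """Create n_new synthetic points by interpolating between random pairs
    of real minority-class points (a simplified, SMOTE-style idea)."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        a, b = rng.sample(minority, 2)   # two distinct real points
        t = rng.random()                 # interpolation weight in [0, 1)
        synthetic.append(tuple(x + t * (y - x) for x, y in zip(a, b)))
    return synthetic

# Hypothetical two-feature dataset: 6 majority rows, 3 minority rows.
majority = [(1.0, 2.0), (1.2, 1.9), (0.8, 2.2), (1.1, 2.1), (0.9, 1.8), (1.3, 2.0)]
minority = [(4.0, 5.0), (4.5, 5.5), (5.0, 4.8)]

# Generate just enough synthetic rows to match the majority class size.
new_rows = oversample_minority(minority, len(majority) - len(minority))
balanced_minority = minority + new_rows
print(len(majority), len(balanced_minority))
```

Because every new point lies between two real ones, this approach stays inside the range of the observed data; it will not invent edge cases that were never observed at all.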

3. It can be obtained more quickly than “actual” data

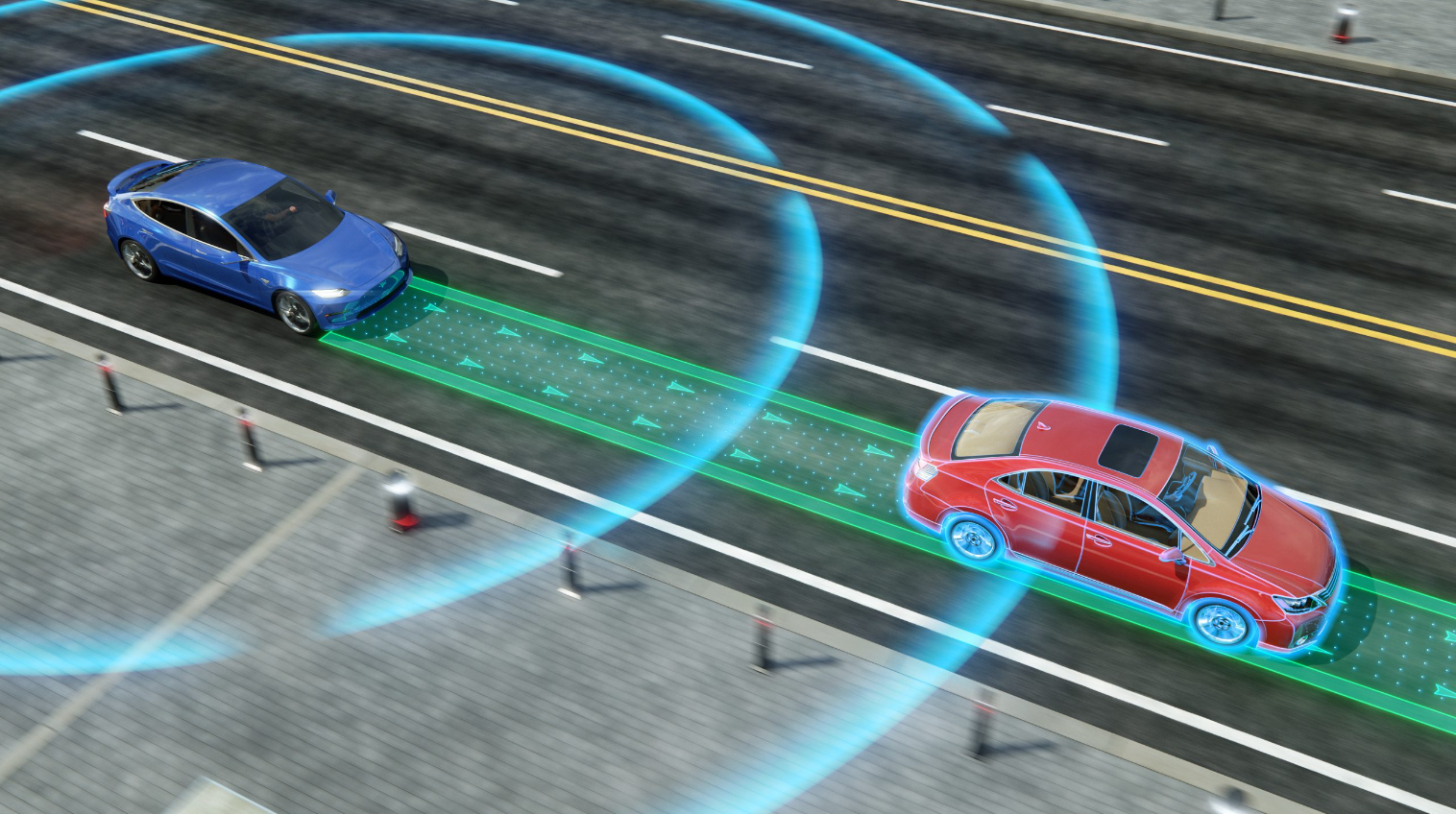

Teams can generate vast amounts of synthetic data quickly. This is especially useful when the real-life data depends on sporadic events. When gathering data for a self-driving car, for instance, teams may find it difficult to get enough real-world data on severe road conditions because such conditions are rare. To speed up the laborious annotation process, data scientists can set up algorithms that automatically label the synthetic data as it is generated.
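To make the automatic-labeling idea concrete, here is a minimal sketch in which the label is known simply because the generator chose the condition before producing the features. The feature names, value ranges, and the `make_labeled_sample` helper are all hypothetical and not drawn from any real driving dataset.

```python
import random

def make_labeled_sample(rng, condition):
    """Simulate one hypothetical road-scene record; the label is known
    because we chose the condition before generating the features."""
    # Assumed, made-up feature ranges per condition
    # (visibility in metres, road friction coefficient).
    ranges = {
        "clear": ((800, 2000), (0.7, 0.9)),
        "heavy_rain": ((100, 500), (0.4, 0.6)),
        "ice": ((300, 1500), (0.05, 0.2)),
    }
    (v_lo, v_hi), (f_lo, f_hi) = ranges[condition]
    return {
        "visibility_m": rng.uniform(v_lo, v_hi),
        "friction": rng.uniform(f_lo, f_hi),
        "label": condition,   # the annotation comes for free
    }

rng = random.Random(42)
# Give rare conditions as many rows as common ones, which is hard to do on real roads.
conditions = ["clear"] * 100 + ["heavy_rain"] * 100 + ["ice"] * 100
dataset = [make_labeled_sample(rng, c) for c in conditions]
print(len(dataset), dataset[0]["label"])
```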

4. It protects user privacy

Depending on the industry and the kind of data, companies may face security challenges when handling sensitive data. In healthcare, for instance, patient data frequently includes protected health information (PHI), which must be handled with the utmost security.

Because synthetic data doesn’t include information about actual people, privacy issues are lessened. Consider using synthetic data as an alternative if your team has to adhere to certain data privacy laws.

Real data Vs Synthetic data

In the real world, real data is obtained or measured. When someone uses a smartphone, laptop, or computer, wears a wristwatch, accesses a website, or makes an online transaction, this type of data is generated instantly.

Additionally, surveys (online and offline) can be used to collect real data. Synthetic data, by contrast, is produced in digital environments. Although it is not derived from any real-world events, it is created in a way that mimics real data in its fundamental properties.

The idea of using synthetic data as a substitute for real data is very promising, since it can provide the training data that machine learning models require. But it’s not certain that synthetic data can solve every issue that arises in the real world.

Use cases

Synthetic data is useful for a variety of commercial purposes, including model training, model validation, and testing of new products. We’ll list a few of the sectors that have led the way in its application to machine learning:

1. Healthcare

Given the sensitivity of its data, the healthcare sector is well-suited for the use of synthetic data. Synthetic data can be used by teams to record the physiologies of every sort of patient that might exist, thus assisting in the quicker and more accurate diagnosis of illnesses.

Google’s melanoma detection model is an intriguing illustration of this, since it incorporates synthetic data of people with darker skin tones (an area of clinical data that is regrettably underrepresented) so that the model can work effectively for all skin types.

2. Automobiles

Simulators are frequently used by companies creating self-driving automobiles to evaluate performance. When the weather is harsh, for example, gathering real road data might be risky or difficult.

To rely on live tests with actual automobiles on the roads is generally not a good idea since there are just too many variables to take into account in all of the different driving situations.

3. Portability of Data

Organizations need trustworthy and secure ways to share their training data with others. Another intriguing application of synthetic data is hiding personally identifiable information (PII) before a dataset is made public. Synthetic data used this way, to exchange scientific research datasets, medical data, sociological data, and other data that could contain PII, is referred to as privacy-preserving synthetic data.

4. Security

Organizations are more secure thanks to synthetic data. Regarding our face recognition example again, you may be familiar with the phrase “deep fakes,” which describes fabricated photos or videos. Deep fakes can be produced by businesses to test their own facial recognition and security systems. Synthetic data is also used in video surveillance to train models more quickly and at a cheaper cost.

Synthetic Data and Machine Learning

To build a solid and trustworthy model, machine learning algorithms need a significant amount of data to be processed. In the absence of synthetic data, producing such a large volume of data would be challenging.

Synthetic data can be especially significant in domains like computer vision and image processing, where having synthetic data early on makes model development easier. A notable development in image generation is the Generative Adversarial Network (GAN), which usually consists of two networks: a generator and a discriminator.

The generator network tries to produce synthetic images that look as much like real-world images as possible, while the discriminator network tries to tell the real images from the fake ones.

GANs belong to the neural network family of machine learning models, and both networks learn continuously during training by updating their weights in response to each other.

When creating synthetic data, you can change the environment and the type of data as needed to improve the model’s performance. Accurate labels are easy to obtain for synthetic data because they are known at generation time, whereas accurately labeling real-world data can be extremely expensive.

How can you generate synthetic data?

The approaches used to create a synthetic data collection are as follows:

Based on the statistical distribution

The strategy here is to draw numbers from a distribution, or to study the statistical distributions of the real data and then create synthetic data that looks comparable. Real data may be completely absent in some circumstances.

A data scientist who has a deep grasp of the statistical distribution of the real data can generate a dataset by drawing a random sample from that distribution. The normal, exponential, chi-square, and lognormal distributions are just a few examples of probability distributions that can be used for this.

The data scientist’s level of experience with the situation will have a significant impact on the trained model’s accuracy.
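As a rough sketch of distribution-based generation, the snippet below draws synthetic columns from three of the distributions mentioned above using only Python’s standard `random` module. The column names and every parameter value (mean age 40, average wait of 5 minutes, and so on) are assumptions chosen for illustration; in practice they would come from the data scientist’s knowledge of the domain.

```python
import random
import statistics

rng = random.Random(7)   # fixed seed so the run is repeatable
n = 10_000

# Assumed, illustrative parameters; not taken from any real dataset.
ages = [rng.gauss(40, 12) for _ in range(n)]                  # normal(mean=40, sd=12)
wait_minutes = [rng.expovariate(1 / 5) for _ in range(n)]     # exponential, mean 5
incomes = [rng.lognormvariate(10.5, 0.6) for _ in range(n)]   # lognormal

print(round(statistics.mean(ages), 1), round(statistics.mean(wait_minutes), 1))
```

Each column here is sampled independently, so any correlation between columns in the real data is lost; preserving it needs a joint model rather than separate marginals.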

Based on a model

This technique builds a model that explains the observed behavior and then uses that model to generate random data. In essence, this involves fitting a known distribution to the real data. Businesses can then use the Monte Carlo method to generate synthetic data from the fitted model.

Distributions can also be fitted using machine learning models such as decision trees. Data scientists must check the predictions carefully, though, because decision trees tend to overfit when they are allowed to grow deep.
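The fit-then-sample idea can be sketched in a few lines with the standard library’s `statistics.NormalDist`. The ten "real" values below are made up for the example, and choosing a normal distribution is itself an assumption; a real project would first check which distribution actually fits the data.

```python
import statistics

# Hypothetical "real" measurements (e.g. transaction amounts); made up for illustration.
real = [102.5, 98.1, 110.3, 95.7, 101.2, 99.8, 105.4, 97.3, 103.9, 100.6]

# Step 1: fit a model to the observed data (here: a normal distribution).
model = statistics.NormalDist.from_samples(real)

# Step 2: Monte Carlo sampling from the fitted model to produce synthetic rows.
synthetic = model.samples(1000, seed=1)

print(round(model.mean, 2), round(statistics.mean(synthetic), 2))
```

The synthetic sample can be as large as needed, but it can never contain more information about the real process than the ten fitted observations did.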

With deep learning

Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs) are two deep learning approaches to creating synthetic data. VAEs are unsupervised machine learning models.

They are made up of an encoder, which compresses the original data into a compact representation, and a decoder, which reconstructs the data from that representation. The basic objective of a VAE is to keep the output as close to the input as possible. GANs, by contrast, consist of two opposing (adversarial) neural networks.

The first network, the generator, is in charge of producing synthetic data. The second network, the discriminator, compares the generated data with real data and tries to identify which samples are fake. When the discriminator catches a fake, that result is fed back to the generator.

The generator then adjusts the next batch of data it sends to the discriminator. Over time, the discriminator gets better at spotting fake data, and the generator gets better at producing data that passes as real. This kind of model is frequently used in the financial sector for fraud detection as well as in the healthcare sector for medical imaging.
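The generator and discriminator loop described above can be illustrated with a deliberately tiny, one-dimensional toy written in plain Python. Everything in it is an assumption made for the sketch: the "real" data is drawn from a normal distribution with mean 4, the generator is a single linear function, the discriminator is a single logistic unit, and the gradients are written out by hand. Real GANs use deep networks and a framework with automatic differentiation, and this toy makes no claim about converging.

```python
import math
import random

def sigmoid(s):
    # Numerically stable logistic function.
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)

def train_toy_gan(steps=2000, lr=0.01, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0      # generator: G(z) = a*z + b
    w, c = 0.1, 0.0      # discriminator: D(x) = sigmoid(w*x + c)
    for _ in range(steps):
        x_real = rng.gauss(4.0, 1.0)    # assumed "real" data: N(4, 1)
        z = rng.gauss(0.0, 1.0)         # random noise fed to the generator
        x_fake = a * z + b

        # Discriminator step: minimise -log D(real) - log(1 - D(fake)).
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        grad_w = -(1 - d_real) * x_real + d_fake * x_fake
        grad_c = -(1 - d_real) + d_fake
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: minimise -log D(fake), using the updated
        # discriminator's verdict as the feedback signal.
        d_fake = sigmoid(w * x_fake + c)
        grad_a = -(1 - d_fake) * w * z
        grad_b = -(1 - d_fake) * w
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b, w, c

a, b, w, c = train_toy_gan()
print("generator offset b:", round(b, 2))
```

The two updates alternate on every step, which is the adversarial part: each network’s loss depends on the other network’s current parameters.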

Data augmentation is a different method that data scientists use to produce more data. It should not be confused with synthetic data, though. Simply put, data augmentation is the act of adding modified copies of existing records to a real dataset.

One example is creating several pictures from a single image by adjusting its orientation, brightness, magnification, and more. Sometimes the real dataset is used with only the personal information removed. This is data anonymization, and a dataset produced this way is likewise not considered synthetic data.
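The image augmentation described above can be sketched on a tiny grayscale image stored as a list of pixel rows. The helper names and the 2×3 sample image are made up for the example; real pipelines would normally use an image library rather than nested lists.

```python
def flip_horizontal(img):
    """Mirror each row of a grayscale image stored as a list of rows."""
    return [row[::-1] for row in img]

def adjust_brightness(img, delta):
    """Add delta to every pixel, clamped to the 0-255 range."""
    return [[max(0, min(255, p + delta)) for p in row] for row in img]

def rotate_90(img):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]

# Hypothetical 2x3 grayscale image.
image = [
    [0, 50, 100],
    [150, 200, 250],
]

# Three new training examples derived from one real image.
augmented = [flip_horizontal(image), adjust_brightness(image, 30), rotate_90(image)]
print(len(augmented))
```

Every output is a transformation of a real record, which is why augmentation is treated separately from synthetic data generation.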

Challenges & limitations of Synthetic data

Although synthetic data has various benefits that can assist firms with data science activities, it also has certain limitations:

- The data’s dependability: It is common knowledge that every machine learning/deep learning model is only as good as the data it is fed. The quality of synthetic data in this context is strongly related to the quality of the input data and the model used to produce the data. It is critical to ensure that no biases exist in the source data, as these can be very clearly mirrored in the synthetic data. Furthermore, before making any forecasts, the data quality should be confirmed and verified.

- Requires knowledge, effort, and time: While creating synthetic data could be simpler and less expensive than creating genuine data, it does need some knowledge, time, and effort.

- Replicating anomalies: A perfect replica of real-world data is not possible; synthetic data can only approximate it. Therefore, some outliers that exist in real data may not be covered by synthetic data, even though such anomalies can matter more than typical data points.

- Controlling the production and ensuring quality: Synthetic data is intended to replicate real-world data, so manual verification becomes essential. For complex datasets generated automatically by algorithms, it is essential to verify the accuracy of the data before using it in machine learning or deep learning models.

- User acceptance: As synthetic data is a novel concept, not everyone will be ready to trust forecasts made with it. To increase user acceptance, it is first necessary to raise awareness of the usefulness of synthetic data.
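One way to make the quality check in the list above less manual is to compare the distribution of a synthetic column with the real one. The sketch below computes a two-sample Kolmogorov-Smirnov statistic by hand; it is one possible check among many, and the data, the `ks_statistic` helper, and the shifted "bad" generator are all assumptions invented for the illustration.

```python
import bisect
import random

def ks_statistic(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between
    the two empirical cumulative distribution functions (0 = identical)."""
    a, b = sorted(sample_a), sorted(sample_b)
    gap = 0.0
    for x in a + b:
        cdf_a = bisect.bisect_right(a, x) / len(a)   # share of a that is <= x
        cdf_b = bisect.bisect_right(b, x) / len(b)   # share of b that is <= x
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap

rng = random.Random(3)
real = [rng.gauss(50, 10) for _ in range(2000)]          # stand-in for real data
good_synth = [rng.gauss(50, 10) for _ in range(2000)]    # same distribution
bad_synth = [rng.gauss(60, 10) for _ in range(2000)]     # mean shifted by 10

print(ks_statistic(real, good_synth) < ks_statistic(real, bad_synth))
```

A small statistic only says that one column looks similar in aggregate; it does not show that rare outliers or relationships between columns were preserved, so it complements human review rather than replacing it.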

Future

The use of synthetic data has increased dramatically in the past decade. While it saves companies time and money, it is not without its drawbacks. It can lack the outliers that occur naturally in real data and are critical for accuracy in some models.

It’s also worth noting that the quality of synthetic data often depends on the input data used to create it. Biases in the input data can quickly spread into the synthetic data, so the importance of choosing high-quality data as a starting point can’t be overstated.

Finally, it needs further output control, including comparing the synthetic data with human-annotated real data to verify that discrepancies are not introduced. Despite these obstacles, synthetic data remains a promising field.

It helps us to create novel AI solutions even when real-world data is unavailable. Most significantly, it enables enterprises to build products that are more inclusive and indicative of their end consumers’ diversity.

In the data-driven future, synthetic data is set to help data scientists perform novel and creative tasks that would be challenging to complete with real-world data alone.

Conclusion

In certain cases, synthetic data can alleviate a data deficit or a lack of relevant data inside a business or organization. We also looked at which strategies can aid in the generation of synthetic data and who can profit from it.

We also discussed some of the difficulties that come with synthetic data. For commercial decision-making, real data will always be preferred. However, realistic synthetic data is the next best option when real raw data is not available for analysis.

However, it must be remembered that producing synthetic data requires data scientists with a solid grasp of data modeling. A thorough understanding of the real data and its context is also essential. This helps ensure that the generated data is as accurate as possible.
