Every Machine Learning project relies on a good dataset: it is this data that allows you to train and validate your ML model. So, a big part of the work in an ML project is finding the right dataset for your needs. However, it is not always possible to find one that fits your goals, as many datasets that look promising turn out not to be useful in the end.

Downloading countless datasets before you arrive at the ideal one can be a daunting waste of time. With that in mind, we have gathered some options that can help you develop your ML project. Note that some are intended for personal rather than commercial use, so treat them as a way to gain experience in the ML universe.

Basics of Datasets

Before we list the datasets, we should define some terms. Artificial Intelligence projects, especially Machine Learning ones, require a large amount of data to train the algorithm. This data is gathered into a dataset, which is what the algorithm learns from.

With this data, the algorithm is trained and then tested, and it becomes able to find patterns, establish relationships, and make decisions autonomously. Without training, a Machine Learning algorithm cannot make useful predictions. Therefore, the better the training data, the better the model will perform. And for a dataset to be useful to the project, quantity alone is not enough: the data must also be properly classified.
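To make the distinction between training and testing concrete, here is a minimal sketch in plain Python of holding out part of a dataset for testing. The function name `split_dataset`, the 80/20 ratio, and the fixed seed are illustrative assumptions, not part of any particular ML library.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_dataset(
    rows: Sequence[T], test_fraction: float = 0.2, seed: int = 42
) -> Tuple[List[T], List[T]]:
    """Shuffle a copy of the rows and split them into (train, test) lists."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(rows)                  # copy so the caller's data is untouched
    random.Random(seed).shuffle(shuffled)  # seeded RNG makes the split reproducible
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


if __name__ == "__main__":
    train, test = split_dataset(list(range(10)))
    print(len(train), len(test))  # 8 2
```

The model only ever learns from `train`; `test` is kept aside so its performance can be measured on examples it has never seen.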

Ideally, the data should be well labeled. Consider chatbots: feeding them large amounts of text is important, but that text must also be carefully analyzed and annotated so that the resulting algorithm can understand, for example, when the user is speaking in slang. Only then will the virtual assistant be able to answer according to what the user actually asked.

Datasets can be generated from surveys, user purchase data, reviews left on services, and in many other ways that gather useful information organized in rows and columns, such as in a CSV file.
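As an illustration of this rows-and-columns layout, the snippet below parses a small CSV with Python's standard `csv` module. The column names and values are made up for the example and do not come from any real dataset.

```python
import csv
import io

# Hypothetical survey responses; in practice you would open a file instead.
raw = """respondent_id,age,rating
1,34,5
2,27,3
3,45,4
"""

reader = csv.DictReader(io.StringIO(raw))  # the first line becomes the column names
rows = list(reader)                        # each row is a dict keyed by column name

print(reader.fieldnames)                     # ['respondent_id', 'age', 'rating']
print(len(rows))                             # 3
ratings = [int(r["rating"]) for r in rows]   # CSV values are read as strings, so convert
print(sum(ratings) / len(ratings))           # 4.0
```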

Before you set out in search of the perfect dataset, it is important to know the purpose of your project, especially if it belongs to a specific area such as weather, finance, or health. This will determine where you should look for your dataset.

Datasets for ML

Chatbot training

An effective chatbot requires a massive amount of training data in order to quickly solve user inquiries without human intervention. However, the primary bottleneck in chatbot development is obtaining realistic, task-oriented dialog data to train these Machine Learning-based systems.

A conversational dataset gathers data in a question-and-answer format, which makes it ideal for training chatbots that give automated answers to an audience. Without this data, the chatbot will struggle to resolve inquiries or answer questions on its own.
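A minimal sketch of how question-answer pairs can drive a very simple retrieval chatbot: it returns the stored answer whose question shares the most words with the user's input. The pairs, the function names, and the word-overlap scoring are illustrative assumptions; chatbots trained on the datasets below typically use far more sophisticated models.

```python
import re
from typing import List, Optional, Set, Tuple

# Hypothetical question-answer pairs, in the format a conversational dataset provides.
QA_PAIRS: List[Tuple[str, str]] = [
    ("What are your opening hours?", "We are open from 9am to 6pm, Monday to Friday."),
    ("How can I reset my password?", "Click 'Forgot password' on the login page."),
    ("Do you ship internationally?", "Yes, we ship to most countries."),
]


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and keep the set of word tokens."""
    return set(re.findall(r"\w+", text.lower()))


def answer(query: str, pairs: List[Tuple[str, str]] = QA_PAIRS) -> Optional[str]:
    """Return the answer whose question overlaps most with the query, or None."""
    query_words = tokenize(query)
    best_score, best_answer = 0, None
    for question, reply in pairs:
        score = len(query_words & tokenize(question))  # number of shared words
        if score > best_score:
            best_score, best_answer = score, reply
    return best_answer  # None means no stored question shares a word with the query


if __name__ == "__main__":
    print(answer("how do I reset my password"))
```

The quality of such a bot depends entirely on how many realistic question-answer pairs it has, which is why large conversational datasets matter.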

Using these datasets, businesses can create a tool that answers customers quickly, 24/7, at a significantly lower cost than a team of people doing customer support.

1. Question-Answer Dataset

This dataset provides a set of Wikipedia articles, questions about them, and the corresponding manually generated answers. It was collected between 2008 and 2010 for use in academic research.

2. Language Data

Language Data is a database managed by Yahoo with information generated from some of the company's services, such as Yahoo! Answers, which served as an open community where users posted questions and answers.

3. WikiQA

The WikiQA corpus also consists of a set of questions and answers. The questions come from Bing, while the answers come from a Wikipedia page that may contain the answer to the original question.

In total, there are more than 3,000 questions and a set of 29,258 sentences in the dataset, of which about 1,400 have been categorized as answers to a corresponding question.

Government data

Government datasets often include demographic data, which is a great input for projects aimed at understanding social trends, creating public policies, and improving society. They can also be useful for political campaigns, targeted advertising, or market analysis.

These datasets typically contain anonymized data, so models can be trained on the records while reducing the risk of violating personal privacy.

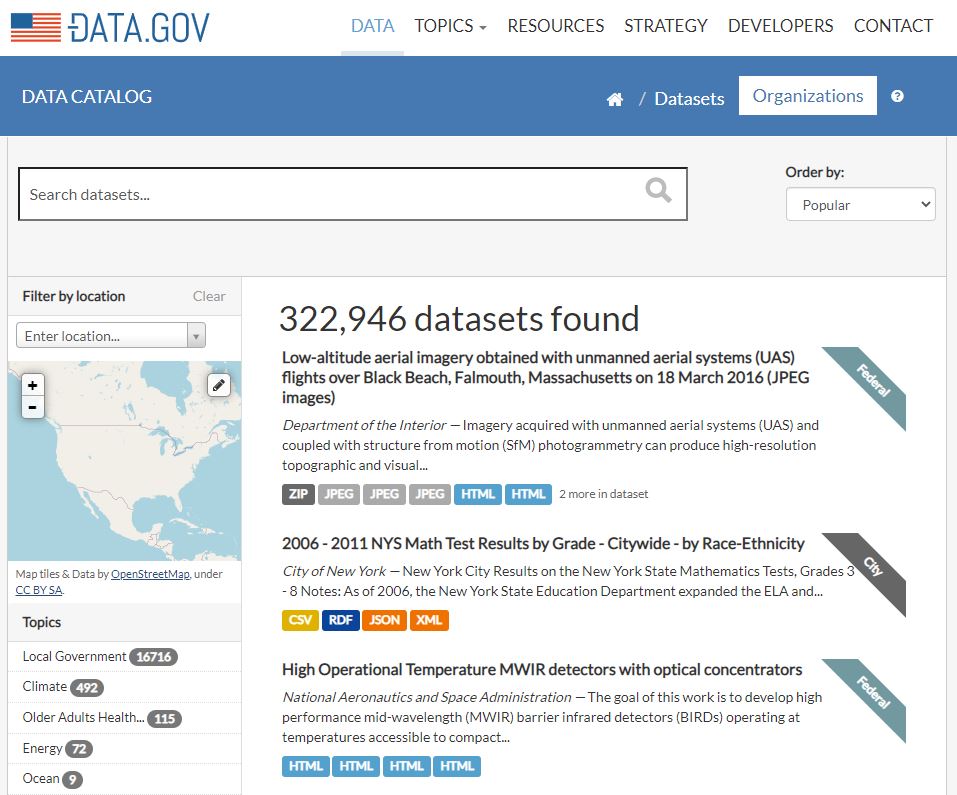

4. Data.gov

Launched in 2009, Data.gov is the U.S. government's open data portal. Its catalog is impressive: more than 218,000 datasets that can be filtered by format, tags, type, and topic.

5. EU Open Data Portal

The EU Open Data Portal provides access to open data published by institutions of the European Union, which can be used for both commercial and non-commercial purposes. More than 15,500 datasets are available, covering topics such as health, energy, environment, culture, and education.

Health data

In the wake of the ongoing health crisis worldwide, datasets generated by health organizations are essential to developing effective solutions to save lives. These datasets can help identify the risk factors, work out disease transmission patterns, and speed up diagnosis.

These datasets include health records, patient demographics, disease prevalence, medication usage, nutritional values, and much more.

6. Global Health Observatory

The Global Health Observatory is an initiative of the World Health Organization (WHO). It provides public data on different areas of health, organized by theme, such as health systems, tobacco control, maternal health, and HIV/AIDS. Data on COVID-19 is also available.

7. CORD-19

CORD-19 is a corpus of academic publications about COVID-19 and related coronaviruses. It is an open dataset intended to help generate new insights on the disease.

Economics data

Financial datasets usually contain a huge amount of information, since this kind of data has often been collected over long periods. They are ideal for making economic forecasts or identifying investment trends.

With the right financial datasets, a Machine Learning model might be able to predict the behavior of a given asset. That is why the financial sector is investing heavily in effective ML models: anything that predicts even reasonably well has the potential to generate millions of dollars. Machine Learning is also being used to predict the behavior of citizens, which is changing the way policymakers do their jobs.

8. International Monetary Fund

The IMF dataset holds a range of economic and financial indicators, member country statistics, and data on loans and exchange rates.

9. World Bank

The World Bank's repository contains datasets with economic information on countries around the world. There are more than 17,000 datasets, divided by continent.

Product and services reviews

Sentiment analysis is now applied in many fields, helping companies understand what their clients and customers think. It is increasingly used for social media monitoring, brand monitoring, voice of the customer (VoC) programs, customer service, and market research.

Sentiment analysis uses NLP (natural language processing) methods and algorithms that are rule-based, rely on Machine Learning techniques that learn from datasets, or combine the two in a hybrid approach.
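To illustrate the rule-based end of that spectrum, here is a minimal lexicon-based scorer: it counts positive and negative words and flips a word's polarity when it directly follows a negation. The word lists, function names, and one-word negation rule are toy assumptions; a practical system would use a curated lexicon or a model trained on review datasets such as those listed in this section.

```python
import re

# Toy sentiment lexicon (illustrative only, not a real resource).
POSITIVE = {"good", "great", "excellent", "love", "amazing"}
NEGATIVE = {"bad", "terrible", "awful", "hate", "boring"}
NEGATIONS = {"not", "never", "no"}


def sentiment_score(text: str) -> int:
    """Add +1 per positive word and -1 per negative word; a preceding negation flips the sign."""
    words = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, word in enumerate(words):
        polarity = 1 if word in POSITIVE else -1 if word in NEGATIVE else 0
        if polarity and i > 0 and words[i - 1] in NEGATIONS:
            polarity = -polarity  # "not good" counts as negative
        score += polarity
    return score


def label(text: str) -> str:
    """Map the numeric score to a sentiment label."""
    score = sentiment_score(text)
    return "positive" if score > 0 else "negative" if score < 0 else "neutral"


if __name__ == "__main__":
    for review in ["Great movie, I love it", "Not good, pretty boring", "It was a film"]:
        print(review, "->", label(review))
```

Rules like these are easy to read but brittle (sarcasm, context, domain vocabulary), which is why Machine Learning approaches trained on large labeled datasets are so common.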

Sentiment analysis requires specialized data in large quantities. The most challenging part of the training process is not finding large amounts of data but finding relevant datasets, which must cover a wide range of sentiment analysis applications and use cases.

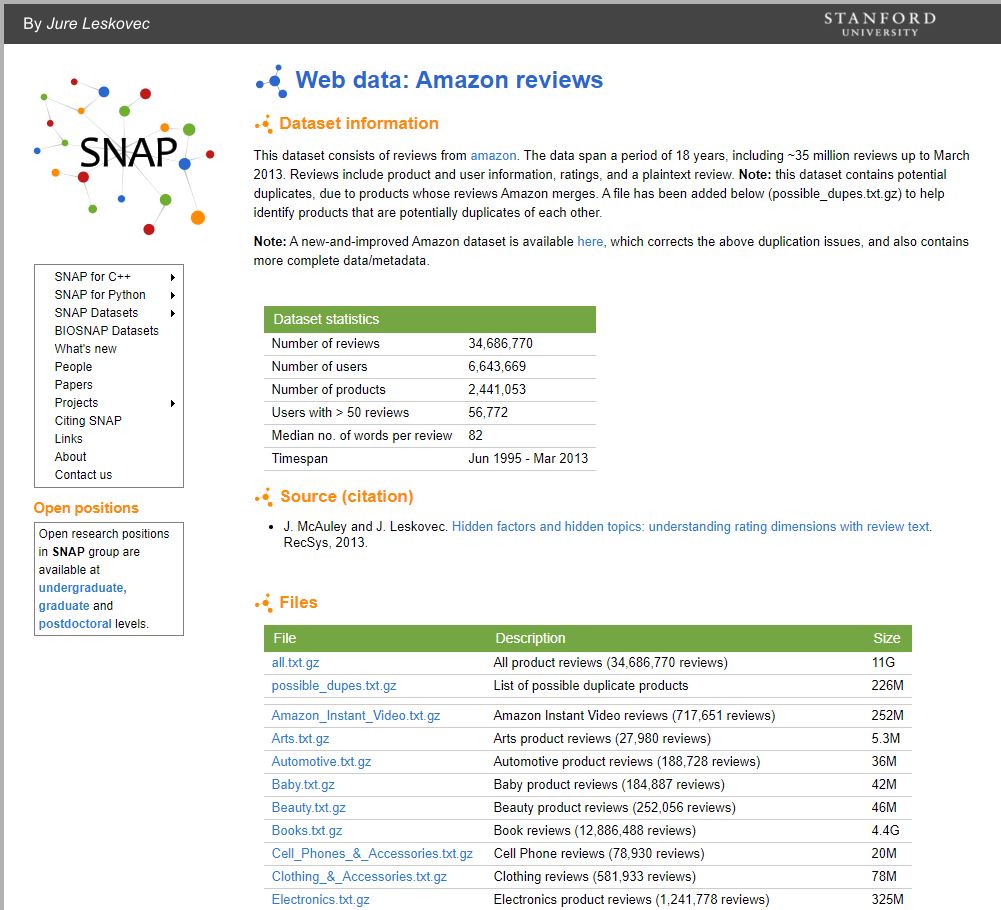

10. Amazon Reviews

This dataset contains about 35 million Amazon reviews collected over a period of 18 years, including product, user, and review information.

11. Yelp Reviews

Yelp also offers a dataset based on information gathered from its service. It includes over 8 million reviews, 1 million tips, and almost 1.5 million business attributes, such as opening hours and availability.

12. IMDB Reviews

This dataset contains 25,000 movie reviews for training and another 25,000 for testing, taken from IMDb, the movie rating website. It also includes additional unlabeled data.

Datasets for the first steps in ML

13. Wine Quality Dataset

This dataset provides information on the red and white variants of Vinho Verde wine, produced in northern Portugal. The goal is to model wine quality based on physicochemical tests, which makes it a good choice for those who want to practice building a prediction system.

14. Titanic Dataset

This dataset contains data on 887 real Titanic passengers, with columns recording whether they survived, their age, passenger class, gender, and the fare they paid. It was part of a challenge launched by the Kaggle platform, whose aim was to create a model that could predict which passengers survived the sinking of the Titanic.
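A common first step with this kind of dataset is a simple rule-based baseline that later models must beat. The sketch below predicts survival from the passenger's sex alone and measures its accuracy; the column names and the five rows are invented for illustration and are not taken from the actual Kaggle files.

```python
from typing import Dict, List

# Made-up rows in a Titanic-like layout (NOT real passenger records).
passengers: List[Dict[str, object]] = [
    {"sex": "female", "pclass": 1, "survived": 1},
    {"sex": "male", "pclass": 3, "survived": 0},
    {"sex": "female", "pclass": 3, "survived": 0},
    {"sex": "male", "pclass": 1, "survived": 1},
    {"sex": "male", "pclass": 2, "survived": 0},
]


def predict(passenger: Dict[str, object]) -> int:
    """Baseline rule: predict that female passengers survived and male passengers did not."""
    return 1 if passenger["sex"] == "female" else 0


def accuracy(rows: List[Dict[str, object]]) -> float:
    """Fraction of rows where the baseline prediction matches the 'survived' column."""
    correct = sum(predict(r) == r["survived"] for r in rows)
    return correct / len(rows)


print(f"baseline accuracy: {accuracy(passengers):.2f}")  # 3 of 5 toy rows correct
```

Any trained model should be compared against a baseline like this; if it cannot beat a one-line rule, the features or training setup need another look.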

Platforms for Finding Other Datasets

If you want to go further and find your own dataset, the best way is to browse through the most famous repositories of the Machine Learning universe:

Kaggle

Kaggle, a subsidiary of Google LLC, is an online community of data scientists and Machine Learning professionals. Kaggle allows users to find and publish datasets, explore and build models in a web-based data science environment, work with other data scientists and Machine Learning engineers, and enter competitions to solve data science challenges.

Kaggle started in 2010 by offering Machine Learning competitions and now also offers a public data platform, a cloud-based workbench for data science, and Artificial Intelligence education.

Dataset Search

Dataset Search is a search engine from Google that helps researchers locate online data that is freely available for use. Across the web, there are millions of datasets about nearly any subject that interests you.

If you’re looking to buy a puppy, you could find datasets compiling complaints of puppy buyers or studies on puppy cognition. Or if you like skiing, you could find data on the revenue of ski resorts or injury rates and participation numbers. Dataset Search has indexed almost 25 million of these datasets, giving you a single place to search for datasets and find links to where the data is.

UCI Machine Learning Repository

The UCI Machine Learning Repository is a collection of databases, domain theories, and data generators that are used by the Machine Learning community for the empirical analysis of Machine Learning algorithms. The archive was created as an FTP archive in 1987 by David Aha and fellow graduate students at UC Irvine.

Since then, it has been widely used by students, educators, and researchers all over the world as a primary source of ML datasets. As an indication of its impact, the archive has been cited over 1,000 times, making it one of the top 100 most cited "papers" in all of computer science.

Quandl

Quandl is a platform that provides its users with economic, financial, and alternative datasets. Users can download free data, buy paid data or sell data to Quandl. It can be a useful tool for the development of trading algorithms, for instance.

Conclusion

By exploring these resources, you are likely to find great inputs for your projects. Be sure to choose the dataset best suited to your specific needs, and always keep in mind that quantity is not enough: quality matters too. The dataset is the foundation of any Machine Learning project, and building on quality data is essential to avoid reaching faulty conclusions.