Diffusion models have taken the world by storm with the release of DALL-E 2, Google’s Imagen, Stable Diffusion, and Midjourney, sparking innovation and stretching the bounds of machine learning.

These models can produce an almost unlimited variety of images from text prompts, including photorealistic, magical, futuristic, and, of course, cute images.

These capabilities reimagine what it means for humans to interface with silicon, giving us the ability to make practically any picture we can envision.

As these models develop or the next generative paradigm takes over, humans will be able to produce images, films, and other immersive experiences with only a thought.

In this post, we will discuss what diffusion models are, what Stable Diffusion is and how it works, and then walk through a short Stable Diffusion inpainting tutorial.

What is a diffusion model?

Machine learning models that can create new data resembling their training data are referred to as generative models. Diffusion models are one family of generative models; others include flow-based models, variational autoencoders, and generative adversarial networks (GANs).

Each can generate pictures of excellent quality. Diffusion models work by first damaging the training data with gradually added noise and then learning to recover the data by reversing this noise-adding process. To put it another way, diffusion models are able to create coherent pictures out of noise.
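The noise-adding half of this process can be sketched in a few lines. This is a minimal illustration, not the training code of any particular model: the linear noise schedule, its start and end values, and the names `make_alpha_bars` and `add_noise` are assumptions chosen for the example, and a four-number list stands in for an image.

```python
import math
import random

def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Fraction of the original signal that survives after each step.

    Assumes a linear schedule of per-step noise amounts (betas).
    """
    alpha_bars = []
    running = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, alpha_bar, rng):
    """Jump directly to a noisy version of x0 at noise level alpha_bar."""
    signal = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    return [signal * v + noise_scale * rng.gauss(0.0, 1.0) for v in x0]

rng = random.Random(0)
alpha_bars = make_alpha_bars(1000)
pixels = [0.9, -0.3, 0.5, 0.1]  # a tiny stand-in for an image
slightly_noisy = add_noise(pixels, alpha_bars[10], rng)  # still close to the image
mostly_noise = add_noise(pixels, alpha_bars[-1], rng)    # almost pure noise
```

Early steps keep almost all of the original signal, while by the last step nearly nothing of it is left, which is why generation can later start from pure noise.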

In other words, during training noise is added to pictures and the model learns to remove it. To produce realistic visuals, the trained model then applies this denoising procedure, step by step, to random noise generated from a seed.
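To make the denoising loop concrete, here is a toy sketch of step-by-step sampling on a single number instead of an image. Everything in it is an assumption made for illustration: the DDPM-style update rule, the linear schedule, and especially `oracle_noise`, which replaces the trained neural network with an exact formula that only works because the pretend "dataset" contains one value, `TARGET`.

```python
import math
import random

def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise amounts for an assumed linear schedule."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + step * t for t in range(num_steps)]

def sample(predict_noise, num_steps=1000, seed=0):
    """DDPM-style sampling for a single scalar value."""
    rng = random.Random(seed)
    betas = linear_betas(num_steps)
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)

    x = rng.gauss(0.0, 1.0)  # start from pure noise
    for t in reversed(range(num_steps)):
        eps = predict_noise(x, alpha_bars[t])
        # Remove the share of noise attributed to this step.
        x = (x - betas[t] / math.sqrt(1.0 - alpha_bars[t]) * eps) / math.sqrt(1.0 - betas[t])
        if t > 0:
            # Every step except the last re-injects a little fresh noise.
            x += math.sqrt(betas[t]) * rng.gauss(0.0, 1.0)
    return x

TARGET = 0.7  # hypothetical "dataset" that contains a single value

def oracle_noise(x_t, alpha_bar):
    """Exact noise prediction when every training example equals TARGET."""
    return (x_t - math.sqrt(alpha_bar) * TARGET) / math.sqrt(1.0 - alpha_bar)

result = sample(oracle_noise)
```

With a perfect noise predictor the loop lands on the data value no matter which seed it starts from. A real model only approximates this predictor, and its data is a whole distribution of images rather than one number.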

By conditioning the image generation process on a prompt, these models can be combined with text-to-image guidance to generate an almost limitless number of images from text alone. The denoising can be steered by text embeddings, such as those from CLIP, to give strong text-to-image capabilities.
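One widely used way to apply this kind of guidance is classifier-free guidance, which this post does not go into. The sketch below assumes that technique: the model predicts the noise twice, once with an empty prompt and once with the text prompt, and the two predictions are blended. The function name `guide` and all numbers are made up for illustration.

```python
def guide(eps_uncond, eps_text, guidance_scale):
    """Move the noise prediction away from 'no prompt' and toward the prompt."""
    return [u + guidance_scale * (c - u) for u, c in zip(eps_uncond, eps_text)]

eps_uncond = [0.10, -0.20, 0.05]  # prediction with an empty prompt (made-up numbers)
eps_text = [0.30, -0.10, 0.00]    # prediction with the text prompt (made-up numbers)
guided = guide(eps_uncond, eps_text, guidance_scale=7.5)
```

A scale of 0 ignores the prompt, a scale of 1 uses the text-conditioned prediction as is, and larger values follow the prompt more strongly at the cost of variety.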

Diffusion models can perform a variety of tasks, including image creation, image denoising, inpainting, and outpainting, and variants such as bit diffusion extend them to discrete data.

Now, what is Stable Diffusion?

Stable Diffusion is a machine learning model provided by Stability AI that generates images from text prompts.

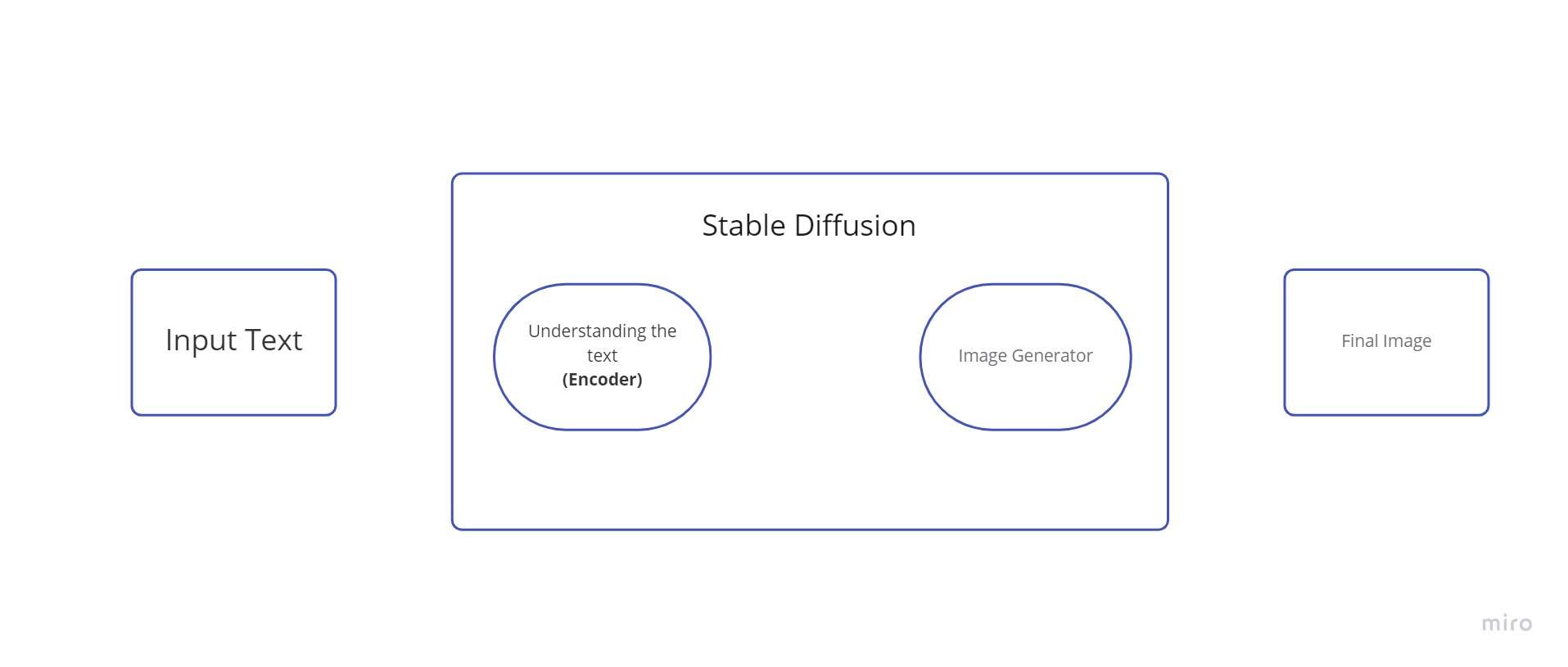

Components of Stable Diffusion

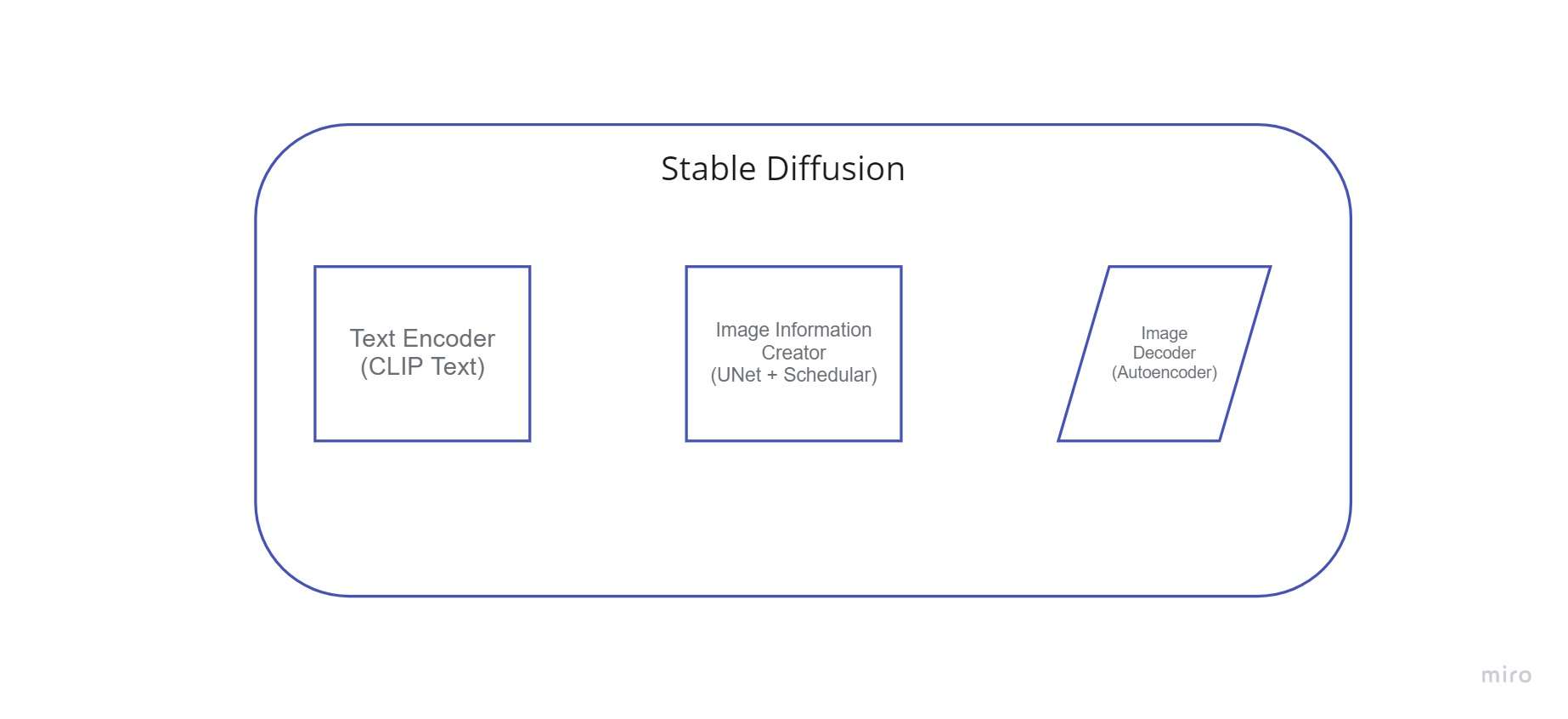

Stable Diffusion is not a single model but a system composed of several components and concepts. When we look under the hood, the first thing we see is a text-understanding component that converts the text into a numeric representation that captures its concepts.

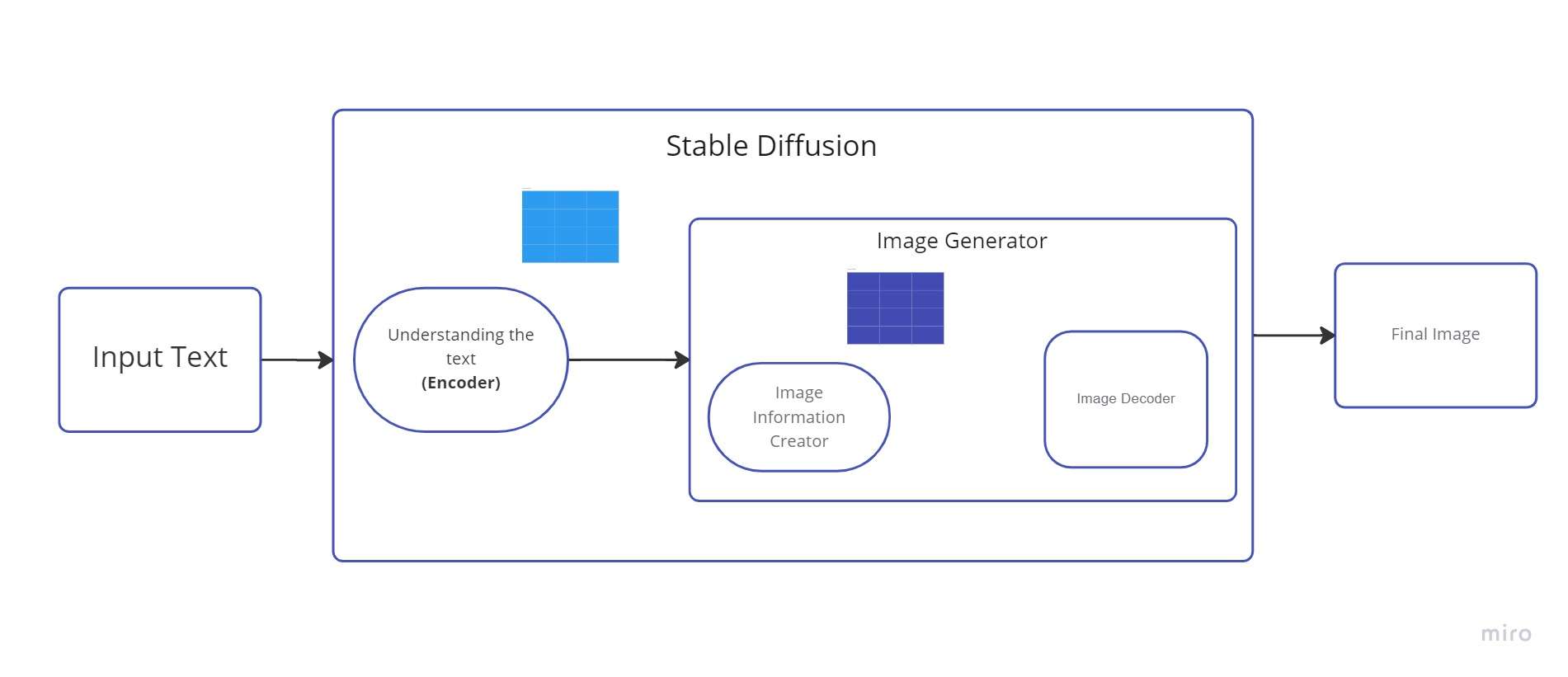

This text encoder is a Transformer language model (technically, the text encoder of a CLIP model). It takes the input text and generates a list of numbers (a vector) for each word/token in the text. That data is then supplied to the image generator, which is itself made up of several components.
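The idea of "one vector per token" can be shown with a toy lookup table. This is only a sketch of the output shape, not of how CLIP works: a real text encoder splits text into subword tokens and runs them through Transformer layers so each vector also reflects the surrounding words. The vocabulary, the 8-number vector size, and the function names are all hypothetical.

```python
import random

EMBED_DIM = 8  # toy size; real encoders use hundreds of numbers per token

def build_embedding_table(vocab, dim=EMBED_DIM, seed=0):
    """Assign each known word a fixed vector of floats."""
    rng = random.Random(seed)
    return {word: [rng.uniform(-1.0, 1.0) for _ in range(dim)] for word in vocab}

def encode(prompt, table):
    """Return one vector of floats per known token in the prompt."""
    tokens = prompt.lower().split()
    return [table[tok] for tok in tokens if tok in table]

vocab = ["a", "bowl", "of", "red", "apples", "lemons"]  # hypothetical vocabulary
table = build_embedding_table(vocab)
vectors = encode("A bowl of red apples", table)  # 5 tokens -> 5 vectors
```

The image generator never sees the words themselves, only these lists of numbers.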

The image generator works in two stages:

1. Image Information Creator

This is the key component of Stable Diffusion. It is where most of the performance gain over earlier models comes from.

This component runs for several steps to produce image information. It operates entirely within the image information space (or latent space).

Because of this, it is faster than earlier diffusion models that operated in pixel space. Technically speaking, this component is composed of a UNet neural network and a scheduling algorithm.
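A bit of arithmetic shows why working in latent space saves so much effort. The shapes below are assumptions not stated in this post: a 512×512 RGB image and a 64×64 latent with 4 channels, which are the figures commonly cited for the first Stable Diffusion releases.

```python
def num_values(height, width, channels):
    """How many numbers are needed to store an array of this shape."""
    return height * width * channels

pixel_values = num_values(512, 512, 3)  # RGB image in pixel space
latent_values = num_values(64, 64, 4)   # assumed latent shape
ratio = pixel_values / latent_values

print(pixel_values, latent_values, ratio)  # 786432 16384 48.0
```

Under these assumptions, every denoising step handles 48 times fewer numbers than it would in pixel space.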

The process that takes place in this component is what is referred to as “diffusion”. The information is processed step by step, and the result is ultimately turned into a high-quality image by the next component, the image decoder.

2. Image Decoder

Using the information it receives from the image information creator, the image decoder paints the final picture. It runs only once, at the end of the process, to produce the finished pixel image.
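The way the components fit together can be sketched with stand-ins that do no real work and only count how often they are called. The names `CountingStub` and `generate`, the placeholder strings, and the default of 50 steps are assumptions for illustration, not part of any library.

```python
class CountingStub:
    """Stand-in for a real component; it only records how often it is called."""

    def __init__(self, name):
        self.name = name
        self.calls = 0

    def __call__(self, *args):
        self.calls += 1
        return f"<{self.name} output>"

def generate(prompt, text_encoder, unet_step, decoder, num_steps=50):
    text_vectors = text_encoder(prompt)  # text -> numeric representation
    latents = "<random latents>"         # start from noise in latent space
    for _ in range(num_steps):           # diffusion: refine step by step
        latents = unet_step(latents, text_vectors)
    return decoder(latents)              # latents -> pixels, exactly once

text_encoder = CountingStub("text encoder")
unet_step = CountingStub("UNet + scheduler")
decoder = CountingStub("image decoder")
image = generate("three apples on a table", text_encoder, unet_step, decoder)
```

After the call, the text encoder and the decoder have each run once, while the UNet step has run once per diffusion step.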

Stable Diffusion Inpainting tutorial

Inpainting is the technique of filling in missing or damaged areas of an image, and Stable Diffusion inpainting uses Stable Diffusion to do the filling. The goal is for the result to look natural enough that a viewer cannot tell the image has been restored.

This technique is frequently used to remove unwanted objects from an image or to restore damaged areas of old photographs. Stable Diffusion inpainting is a relatively recent approach that is yielding promising results.
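The role of the mask can be shown on a tiny grid of numbers. This sketch covers only the final bookkeeping, meaning which pixels are kept and which are replaced; it is not how the model produces the new content, since a real inpainting model also looks at the surrounding image while denoising so that the fill blends in. The function name `composite` and all values are made up for the example.

```python
def composite(original, generated, mask):
    """Keep original values where mask is 0, use generated values where mask is 1."""
    return [
        [g if m else o for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]

original = [[10, 10, 10], [10, 99, 10], [10, 10, 10]]   # 99 marks a "damaged" pixel
generated = [[11, 12, 13], [14, 15, 16], [17, 18, 19]]  # hypothetical model output
mask = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]                # 1 = region to repaint
result = composite(original, generated, mask)
# result == [[10, 10, 10], [10, 15, 10], [10, 10, 10]]
```

Only the masked pixel changes, and everything outside the mask is taken from the original picture.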

If you want to try inpainting with Stable Diffusion, the steps below will get you started exploring inpainting and modifying existing photos:

- Go to Hugging Face Stable Diffusion Inpainting

- Upload your own image

- Erase the portion of your image that needs to be replaced.

- Enter your prompt (what you want to add in place of what you are removing)

- Select “run”
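The same steps can also be run from a script instead of the web page. The sketch below assumes the Hugging Face `diffusers` library together with `torch` and `Pillow`, none of which this post covers. The helper name `inpaint`, the file names, and the checkpoint ID are placeholders: you would need to supply the ID of an inpainting checkpoint from the Hugging Face Hub yourself.

```python
def inpaint(model_id, image_path, mask_path, prompt, device="cuda"):
    """Repaint the white area of the mask according to the prompt."""
    # Heavy third-party imports are kept local so this file loads without them.
    import torch
    from diffusers import StableDiffusionInpaintPipeline
    from PIL import Image

    # Half precision is only used on the GPU.
    dtype = torch.float16 if device == "cuda" else torch.float32
    pipe = StableDiffusionInpaintPipeline.from_pretrained(model_id, torch_dtype=dtype)
    pipe = pipe.to(device)

    # "Upload your own image" and "erase the portion to be replaced":
    image = Image.open(image_path).convert("RGB").resize((512, 512))
    mask = Image.open(mask_path).convert("L").resize((512, 512))  # white = replace

    # "Enter your prompt" and "run":
    result = pipe(prompt=prompt, image=image, mask_image=mask)
    return result.images[0]

# Example call (the checkpoint ID and file names are placeholders):
# inpaint("<inpainting-checkpoint-id>", "lemons.png", "lemons_mask.png",
#         "three red apples").save("apples.png")
```

The mask is a black-and-white image of the same size as the photo, where white marks the area to replace. This plays the role of the erased region in the web demo.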

In the video up top, we upload a picture of three lemons and swap them out for apples. I recommend trying it out with your own photographs and prompts.

Conclusion

In general, Stable Diffusion inpainting is an excellent method for producing fake images or videos that appear to be extremely real. As the technology advances, it will get harder and harder to distinguish between authentic and fabricated content.
