
OpenAI’s new and improved image-generation AI offers better comprehension and can produce higher-resolution images. You may have recently come across some strange and amusing images circulating on the internet.

A Shiba Inu dressed in a beret and a black turtleneck. A sea otter in the style of Dutch painter Vermeer’s “Girl with a Pearl Earring.” A cup of soup that looks like a woolly monster.

These images were not created by a human artist.

Instead, they were created by DALL-E 2, a new AI system that can convert text descriptions into images.

Simply describe what you want to see, and the AI will create it for you, in vivid detail, at high quality, and sometimes with genuine inventiveness. In this post, we’ll take a deep look at OpenAI’s latest research project, DALL-E 2: how it works, what it can be used for, and more. Let’s get started.

So, what exactly is DALL-E 2?

DALL-E 2 is a “generative model,” a type of machine learning algorithm that generates complicated output rather than performing prediction or classification tasks on input data.

You give DALL-E 2 a written description, and it creates a picture that corresponds to it. By combining concepts, attributes, and styles, DALL-E 2 can produce original, realistic images and art from a simple natural-language description.

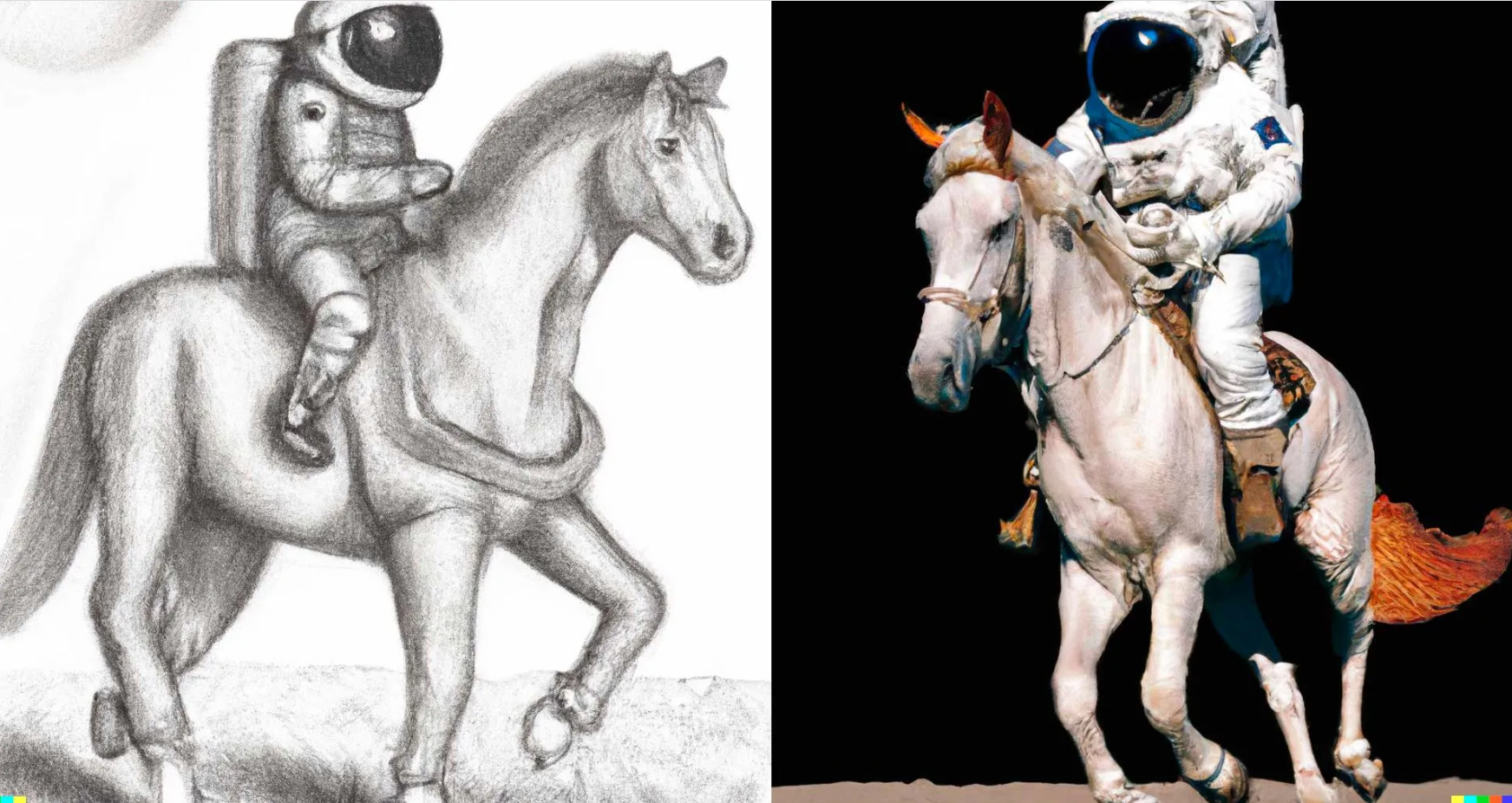

DALL-E 2, the latest version, is said to be more versatile: it can generate pictures from captions at higher resolutions and in a wider range of creative styles. For instance, the pictures below (from the DALL-E 2 blog post) were generated from the prompt “An astronaut riding a horse.”

One prompt ends with “like a pencil sketch,” while the other ends with “in a photorealistic manner.”

It can also edit existing photographs with astonishing precision: you can add or remove elements while it takes colors, reflections, and shadows into account, preserving the original image’s look.

How does it work?

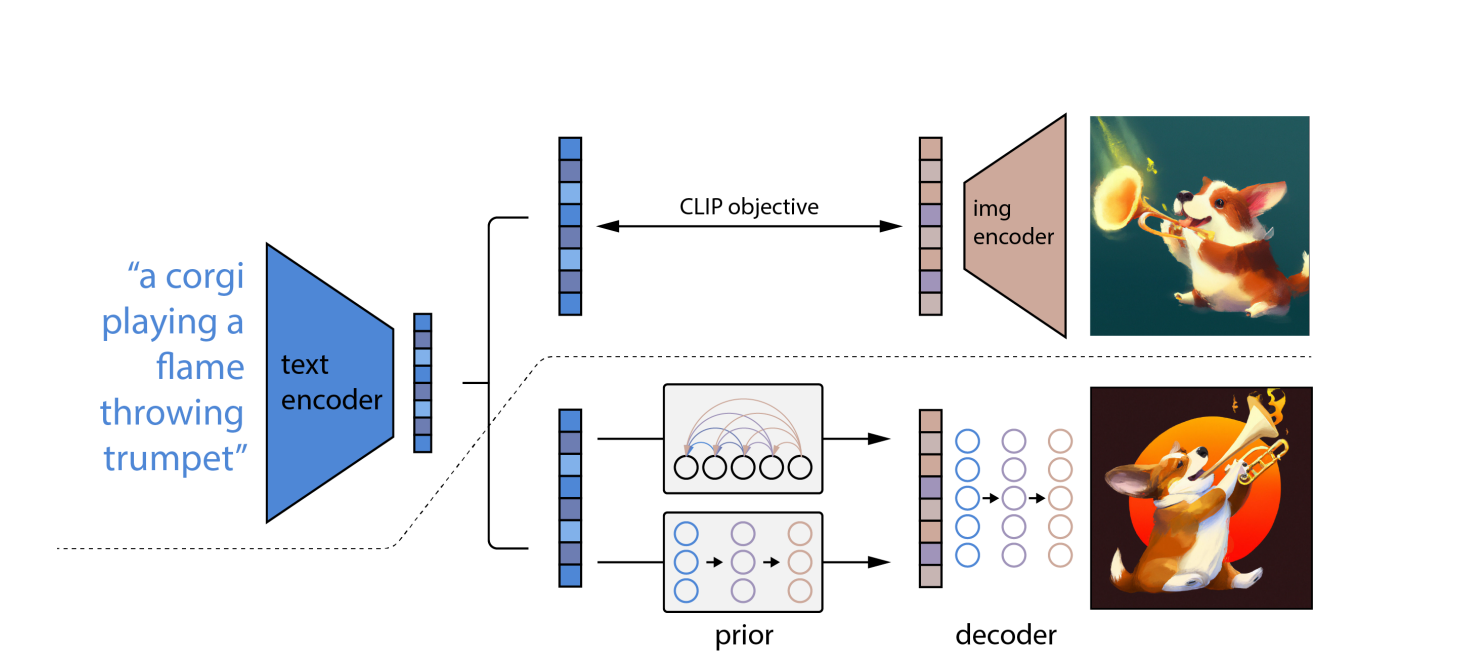

DALL-E 2 combines CLIP and diffusion models, two sophisticated deep learning techniques developed in recent years. At its core, though, it rests on the same idea as all other deep neural networks: representation learning. CLIP (Contrastive Language-Image Pre-training) trains two neural networks simultaneously on images and their captions.

One network learns visual representations of the image, while the other learns representations of the text. During training, both networks adjust their parameters so that matching images and captions produce similar embeddings, while mismatched pairs are pushed apart.
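To make that training objective concrete, here is a minimal Python sketch of a CLIP-style symmetric contrastive loss. It assumes the two encoders have already turned a batch of images and their captions into embedding vectors (the toy vectors below stand in for real encoder outputs), and the temperature of 0.07 is an illustrative value. For each image, its own caption is treated as the correct “class” among all captions in the batch, and vice versa.

```python
import math

def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cross_entropy(logits, target):
    # Numerically stable -log softmax(logits)[target]
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[target]

def clip_contrastive_loss(image_embs, text_embs, temperature=0.07):
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j]: scaled cosine similarity between image i and caption j
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
               for j in range(n)] for i in range(n)]
    # Image-to-text: row i should pick caption i
    loss_i2t = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    # Text-to-image: column j should pick image j
    loss_t2i = sum(cross_entropy([logits[i][j] for i in range(n)], j)
                   for j in range(n)) / n
    return (loss_i2t + loss_t2i) / 2

images = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
aligned = [[0.9, 0.1, 0.0], [0.1, 0.9, 0.0], [0.0, 0.1, 0.9]]
shuffled = [aligned[1], aligned[2], aligned[0]]  # captions no longer match
print(clip_contrastive_loss(images, aligned) < clip_contrastive_loss(images, shuffled))  # True
```

Shuffling the captions so they no longer line up with their images yields a much larger loss, which is the signal that pulls matching pairs together during training.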

“Diffusion,” a type of generative model that learns to create images by gradually adding noise to its training samples and then learning to remove it, is the other machine learning technique used in DALL-E 2. Diffusion models resemble autoencoders in that they transform input data into a representation and then use that representation to reconstruct the original data.
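The “noising” half of this process has a simple closed form. The Python sketch below assumes the standard DDPM formulation: each step t has a small noise variance beta_t, and the noisy image at step t is sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps, where abar_t is the running product of (1 - beta_t) and eps is Gaussian noise. The linear schedule (1e-4 to 0.02 over 1,000 steps) follows the DDPM paper and is only an assumption here; DALL-E 2’s own settings may differ.

```python
import math
import random

def linear_beta_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_t, rising linearly (DDPM's values, assumed here)."""
    return [beta_start + (beta_end - beta_start) * t / (T - 1) for t in range(T)]

def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t: the signal variance left."""
    alpha_bar, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        alpha_bar.append(prod)
    return alpha_bar

def add_noise(x0, t, alpha_bar, rng):
    """Jump straight to step t: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    ab = alpha_bar[t]
    x_t = [math.sqrt(ab) * x + math.sqrt(1.0 - ab) * e for x, e in zip(x0, eps)]
    return x_t, eps

rng = random.Random(0)
alpha_bar = cumulative_alphas(linear_beta_schedule())
pixels = [0.2, -0.5, 0.9]  # a toy "image" with three pixel values
barely_noisy, _ = add_noise(pixels, 10, alpha_bar, rng)   # still close to the original
mostly_noise, _ = add_noise(pixels, 999, alpha_bar, rng)  # almost pure Gaussian noise
```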

DALL-E 2 puts these two techniques together in two steps. First, using CLIP, OpenAI’s model that connects text descriptions with images, it translates the written prompt into an intermediate representation that captures the key properties an image should have to match that prompt (according to CLIP).

Second, DALL-E 2 uses a diffusion model to generate an image that matches this CLIP representation.
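These two steps can be pictured as a chain of functions. The sketch below is a hypothetical outline, not OpenAI’s code: `text_encoder`, `prior`, and `decoder` are placeholder callables. In OpenAI’s research paper on DALL-E 2, the component that maps the CLIP text embedding to a CLIP image embedding (the “intermediate representation”) is called the prior; the sketch leaves out details such as the upsampling stages.

```python
from typing import Callable, List

Embedding = List[float]
Image = List[float]  # flattened pixel values, kept abstract here

def generate_image(
    prompt: str,
    text_encoder: Callable[[str], Embedding],  # hypothetical CLIP text encoder
    prior: Callable[[Embedding], Embedding],   # hypothetical text-to-image-embedding prior
    decoder: Callable[[Embedding], Image],     # hypothetical diffusion decoder
) -> Image:
    # Step 1a: encode the prompt into CLIP's shared text-image space
    text_embedding = text_encoder(prompt)
    # Step 1b: predict the CLIP image embedding a matching picture should have
    image_embedding = prior(text_embedding)
    # Step 2: a diffusion model turns that embedding into pixels
    return decoder(image_embedding)

# Toy stand-ins, only to show how data flows through the stages
toy_image = generate_image(
    "an astronaut riding a horse",
    text_encoder=lambda s: [float(len(s.split()))],
    prior=lambda e: [2.0 * x for x in e],
    decoder=lambda e: [x + 1.0 for x in e],
)
print(toy_image)  # [11.0]
```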

Diffusion models are trained on images that have been corrupted with random noise, and they learn to restore the images to their original form. Diffusion models can produce high-quality synthetic images, especially when combined with a guidance technique that trades some diversity for fidelity to the prompt.
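Training a model to undo the corruption is commonly framed as noise prediction. Here is a minimal sketch of the loss for one training example, assuming a DDPM-style objective: pick a random step, corrupt a clean image, and score the model by the mean squared error between the noise it predicts and the noise actually added. `model` is a hypothetical stand-in for the denoising network, and `alpha_bar` is the cumulative noise schedule (a list of floats between 0 and 1).

```python
import math
import random

def noise_prediction_loss(model, x0, alpha_bar, rng):
    """Loss for one training example of a noise-prediction diffusion model.

    model(x_t, t) is a hypothetical network that guesses the noise in x_t.
    """
    t = rng.randrange(len(alpha_bar))       # pick a random corruption level
    ab = alpha_bar[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]  # the noise the model must recover
    x_t = [math.sqrt(ab) * x + math.sqrt(1.0 - ab) * e for x, e in zip(x0, eps)]
    pred = model(x_t, t)
    # Mean squared error between predicted and true noise
    return sum((p - e) ** 2 for p, e in zip(pred, eps)) / len(eps)
```

A real training loop would average this loss over many images and update the network’s weights by gradient descent; that part is omitted.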

As a result, the diffusion model starts from random pixels and, guided by the CLIP representation, gradually turns them into a new image that matches the text prompt. Thanks to the diffusion approach, DALL-E 2 can produce higher-resolution images faster than the original DALL-E.
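Generation runs the process in reverse: start from random noise and repeatedly remove the noise the model predicts. The sketch below assumes DDPM-style ancestral sampling combined with classifier-free guidance, a widely used guidance technique in which the model’s conditional and unconditional predictions are extrapolated by a `guidance_scale`; values above 1 favor fidelity to the condition over diversity. `denoiser(x, t, cond)` is a hypothetical network, and `cond` stands in for the CLIP embedding that steers the image.

```python
import math
import random

def linear_beta_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances (assumed DDPM-style linear schedule)."""
    return [beta_start + (beta_end - beta_start) * t / (T - 1) for t in range(T)]

def guided_sample(denoiser, cond, dim, betas, guidance_scale=3.0, seed=0):
    """DDPM ancestral sampling with classifier-free guidance.

    denoiser(x, t, cond) is a hypothetical noise-prediction network;
    cond=None requests its unconditional prediction.
    """
    rng = random.Random(seed)
    alpha_bar, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        alpha_bar.append(prod)
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]  # start from pure noise
    for t in reversed(range(len(betas))):
        eps_cond = denoiser(x, t, cond)
        eps_uncond = denoiser(x, t, None)
        # Extrapolate from the unconditional toward the conditional prediction
        eps = [u + guidance_scale * (c - u) for c, u in zip(eps_cond, eps_uncond)]
        # Remove the predicted noise to get the mean of x_{t-1}
        coef = betas[t] / math.sqrt(1.0 - alpha_bar[t])
        mean = [(xi - coef * e) / math.sqrt(1.0 - betas[t]) for xi, e in zip(x, eps)]
        if t > 0:
            # Add fresh noise with variance beta_t, except at the final step
            sigma = math.sqrt(betas[t])
            x = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean
    return x
```

Each step calls the denoiser twice (with and without the condition), so guidance doubles the compute per step but needs no extra model.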

DALL-E 2 use case

In the last twenty years, computer vision technology has progressed from a simple idea to a major breakthrough. Despite these advances, image and object recognition models still face significant obstacles in real-world use. One of the biggest drawbacks in image recognition and computer vision is the lack of sufficient training data. Because data is scarce, training image recognition models to deliver near-perfect accuracy is extremely difficult.

Fortunately, OpenAI’s new machine learning model can help bridge this gap. DALL-E 2 can generate striking images from text descriptions, and this synthetic image generation can supply image recognition models with the data they need.

In the digital era, data is everywhere, yet we are still looking for shortcuts to feed AI models so they produce good results. Training an image recognition model is not simple: it requires a large number of images with small variations between them, which may not be easy to collect.

So, what’s the answer? DALL-E 2. With its ability to generate images from text and edit existing ones, OpenAI’s image generator can help bridge the gap by producing additional training data while reducing the amount of human labeling required. Despite this significant benefit, you should watch out for misleading generated images and for image sets that lack diversity, since these could lead image recognition models to produce biased results.
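One practical way to obtain many images with small variations is to generate prompts systematically. The sketch below is a hypothetical helper, not part of any OpenAI API: it combines class labels with attribute variations so that each prompt carries its label automatically (no manual labeling), and it counts prompts per label as a first, simple check on balance. Sending the prompts to an image generator is left out.

```python
import itertools
from collections import Counter

def build_prompt_grid(labels, attributes):
    """Yield (prompt, label) pairs for every label x attribute combination."""
    keys = list(attributes)
    for label in labels:
        for combo in itertools.product(*(attributes[k] for k in keys)):
            yield f"a photo of a {label}, {', '.join(combo)}", label

labels = ["shiba inu", "sea otter"]
attributes = {
    "setting": ["on a beach", "in a snowy park"],
    "lighting": ["at sunset", "under studio lighting"],
    "angle": ["close-up", "wide shot"],
}
pairs = list(build_prompt_grid(labels, attributes))
print(len(pairs))  # 16
print(pairs[0])    # ('a photo of a shiba inu, on a beach, at sunset, close-up', 'shiba inu')
label_counts = Counter(label for _, label in pairs)  # equal counts per label
```

A balanced prompt grid does not guarantee balanced images, so the generated set would still need to be reviewed for the biases mentioned above.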

Limitations

According to OpenAI, DALL-E 2 could have a harmful influence if it fell into the wrong hands. In today’s world of deepfakes, the model could easily be used to spread false information or racist imagery, which is why OpenAI gives developers access to DALL-E 2 only by invitation. All prompts submitted to the model must comply with a strict content policy.

To reduce the chance of DALL-E 2 generating hostile or violent images, images of deadly weapons were excluded from the training dataset. Although OpenAI has said it plans to offer DALL-E 2 through an API in the future, it is proceeding with caution.

Conclusion

DALL-E 2 is another interesting OpenAI research discovery that opens the door to new applications.

One example is creating massive datasets to address one of computer vision’s main bottlenecks: data. While the business case for many DALL-E-based apps will depend on the pricing and policies OpenAI sets for its API users, they will all undoubtedly advance image generation.
