
You are most likely aware that a computer can describe a picture.

For example, a picture of a dog playing with your children can be described as ‘dog and children in the garden.’ But did you know that the reverse is now feasible as well? You type some words, and the machine generates a new picture.

Unlike a Google search, which retrieves existing photographs, the generated picture is entirely new. In recent years, OpenAI has been one of the leading organizations in this area, reporting impressive results.

They train their algorithms on massive text and picture databases. They published a paper on their GLIDE image model, which was trained on hundreds of millions of photos. In terms of photorealism, it outperforms their prior ‘DALL-E’ model.

In this post, we’ll look at OpenAI’s GLIDE, one of several fascinating initiatives aimed at producing and altering photorealistic pictures with text-guided diffusion models. Let’s begin.

What is OpenAI GLIDE?

While most images can be described easily in words, creating them requires specialized skills and a significant amount of time.

Allowing an AI agent to produce photorealistic pictures from natural language prompts not only lets people create rich and diverse visual material with unprecedented ease, but also makes iterative refinement simpler and gives fine-grained control over the images created.

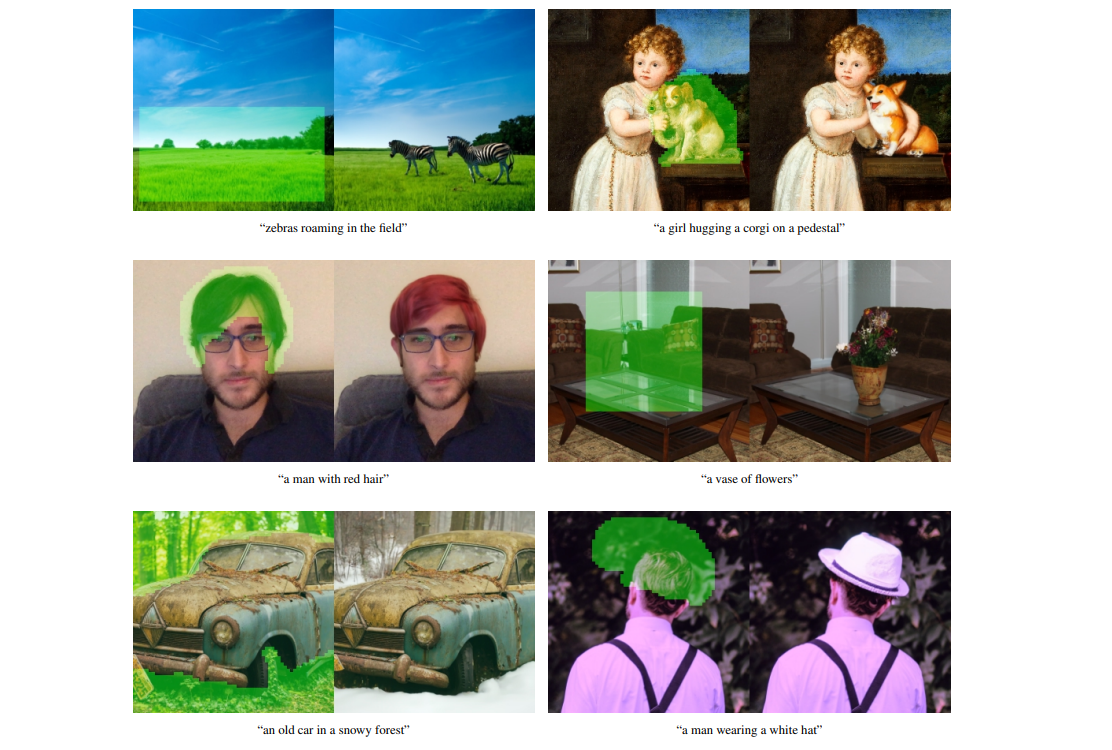

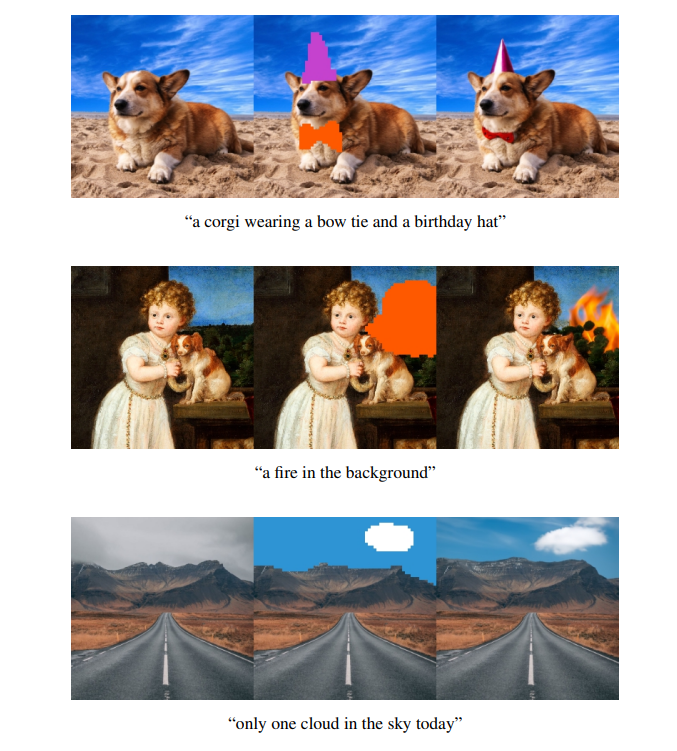

GLIDE can be used to edit existing photos by utilizing natural language text prompts to insert new objects, create shadows and reflections, perform image inpainting, and so on.

It can also turn basic line drawings into photorealistic photographs, and it has strong zero-shot generation and inpainting capabilities for complex scenes.

Recent research has demonstrated that likelihood-based diffusion models can also produce high-quality synthetic pictures, particularly when combined with a guiding approach that balances variety and fidelity.

In May 2021, OpenAI published a guided diffusion model, which allows diffusion models to be conditioned on the labels of a classifier. GLIDE builds on this success by bringing guided diffusion to the problem of text-conditional image creation.

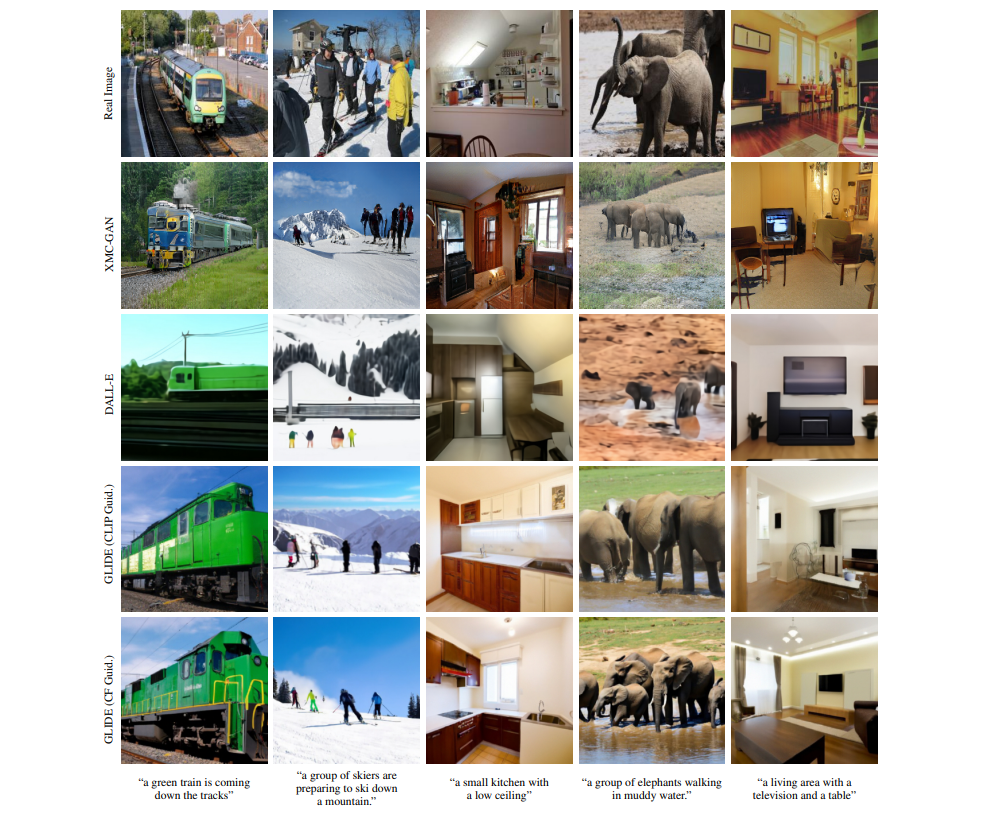

After training a 3.5 billion parameter GLIDE diffusion model using a text encoder to condition on natural language descriptions, the researchers tested two alternative guiding strategies: CLIP guidance and classifier-free guidance.

CLIP is a scalable technique for learning joint representations of text and pictures that delivers a score based on how closely an image matches a caption.

The team used this strategy in their diffusion models by replacing the classifier with a CLIP model that “guides” the models. Meanwhile, classifier-free guidance is a strategy for directing diffusion models that does not involve training a separate classifier.
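To make the scoring idea concrete, here is a minimal sketch of how a CLIP-style score could be computed once an image and a caption live in the same embedding space. The helper name `clip_style_score` and the three-dimensional embeddings are made up for illustration; a real CLIP model produces its vectors with learned image and text encoders.

```python
import math

def clip_style_score(image_embedding, text_embedding):
    """Cosine similarity between two embedding vectors (hypothetical helper)."""
    dot = sum(a * b for a, b in zip(image_embedding, text_embedding))
    norm_image = math.sqrt(sum(a * a for a in image_embedding))
    norm_text = math.sqrt(sum(b * b for b in text_embedding))
    return dot / (norm_image * norm_text)

# Toy embeddings, invented for this example only.
dog_image = [0.9, 0.1, 0.3]
dog_caption = [0.8, 0.2, 0.4]
car_caption = [-0.5, 0.9, 0.0]

score_match = clip_style_score(dog_image, dog_caption)
score_mismatch = clip_style_score(dog_image, car_caption)
```

With these toy values, the matching caption scores higher than the mismatched one, which is the signal a guidance method can push the sampler toward.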
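Classifier-free guidance can be sketched in a few lines. The same model predicts the noise twice, once with the prompt and once without it, and the final prediction is pushed further in the direction of the prompted one. The function name and the toy vectors below are assumptions for illustration; only the combining rule itself is the standard classifier-free guidance formula.

```python
def classifier_free_guidance(eps_cond, eps_uncond, guidance_scale):
    """Extrapolate from the unconditional prediction toward the conditional one.

    guidance_scale = 1 returns the conditional prediction unchanged;
    larger values trade variety for fidelity to the prompt.
    """
    return [u + guidance_scale * (c - u) for c, u in zip(eps_cond, eps_uncond)]

# Toy noise predictions, invented for this example.
eps_with_prompt = [0.5, -0.2, 0.1]
eps_without_prompt = [0.3, 0.0, 0.1]

guided = classifier_free_guidance(eps_with_prompt, eps_without_prompt, 3.0)
```

Components where the two predictions agree are left alone, while components where they differ are amplified, so no separate classifier or CLIP model is needed at sampling time.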

GLIDE Architecture

The GLIDE architecture consists of three components: an Ablated Diffusion Model (ADM) trained to generate a 64 × 64 image, a text model (transformer) that influences image generation via a text prompt, and an upsampling model that upscales the small 64 × 64 images to a more interpretable 256 × 256 pixels.

The first two components work together to control the picture generation process so that it accurately reflects the text prompt, while the third is required to make the generated images easier to comprehend. The GLIDE project was inspired by a paper published in 2021 showing that ADM techniques outperformed the popular state-of-the-art generative models of the time in terms of picture sample quality.

For the ADM, the GLIDE authors used the same architecture as Dhariwal and Nichol's ImageNet 64 × 64 model, but scaled its width to 512 channels. As a result, the visual part of the model has roughly 2.3 billion parameters.

Unlike Dhariwal and Nichol, the GLIDE team wanted more direct control over the picture generation process, so they combined the visual model with an attention-enabled transformer. By processing the text prompt, GLIDE gives you some control over what the generation process outputs.

This is accomplished by training the transformer model on a suitably big dataset of photos and captions (similar to that employed in the DALL-E project).

To condition on the text, it is first encoded into a sequence of K tokens. The tokens are then fed into a transformer model, whose output is used in two ways. First, the final token embedding is used in place of the class embedding in the ADM model.

Second, the last layer of token embeddings (a sequence of K feature vectors) is projected separately to the dimensionality of each attention layer in the ADM model and then concatenated to the attention context at each layer.

In practice, this enables the ADM model to produce a picture from new combinations of familiar text tokens in a unique and photorealistic fashion, based on its learned understanding of the input words and their related images. This text-encoding transformer contains 1.2 billion parameters and uses 24 residual blocks with a width of 2048.
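The two uses of the transformer output can be sketched with plain lists. Everything here is a toy assumption: the sizes are tiny, the projection weights are random rather than learned, and the `project` helper is hypothetical. In the real model, each attention layer would have its own learned projection.

```python
import random

def project(vectors, weight):
    """Apply a linear map to each vector; each row of `weight` yields one output dim."""
    return [[sum(w * v for w, v in zip(row, vec)) for row in weight] for vec in vectors]

rng = random.Random(0)
K, text_width, attn_width, num_positions = 4, 8, 6, 16  # toy sizes, not GLIDE's

# Output of the text transformer: K feature vectors, one per token.
token_embeddings = [[rng.gauss(0, 1) for _ in range(text_width)] for _ in range(K)]

# Use 1: the final token embedding stands in for the class embedding.
class_like_embedding = token_embeddings[-1]

# Use 2: project the tokens to this attention layer's width, then append them
# to the attention context so image positions can attend to the text.
projection = [[rng.gauss(0, 1) for _ in range(text_width)] for _ in range(attn_width)]
projected_tokens = project(token_embeddings, projection)
image_context = [[0.0] * attn_width for _ in range(num_positions)]
attention_context = image_context + projected_tokens
```

After concatenation, the context holds `num_positions + K` vectors of equal width, so the attention layer can treat text tokens and image positions uniformly.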

Finally, the upsampling diffusion model has around 1.5 billion parameters. It differs from the base model in that its text encoder is smaller, with a width of 1024 rather than 2048, and it uses 384 base channels. As the name indicates, this model upsamples the 64 × 64 output to 256 × 256, which makes the result easier to interpret for both machines and humans.
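As a quick sanity check on the figures quoted in this section, the sketch below tabulates the three components and adds up the parameter counts. The `Component` class is purely illustrative, and the numbers are the rounded ones given in the text.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Component:
    name: str
    params_billions: float
    output_resolution: Optional[int] = None  # side length in pixels, if any

components = [
    Component("ADM visual model", 2.3, 64),
    Component("text transformer", 1.2),
    Component("upsampling diffusion model", 1.5, 256),
]

# The base text-to-image model is the visual model plus the text transformer.
base_model_params = sum(c.params_billions for c in components[:2])

# The upsampler enlarges each side by this factor.
upscale_factor = components[2].output_resolution // components[0].output_resolution
```

The visual model and text transformer together account for the 3.5 billion parameters quoted for the base model, and the upsampler enlarges each side by a factor of 4, or 16 times as many pixels.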

Diffusion model

GLIDE generates images using its own version of the ADM (ADM-G for “guided”). The ADM-G model is a modification of the diffusion U-net model. A diffusion U-net model differs dramatically from more common image synthesis techniques such as VAEs, GANs, and transformers.

Diffusion models build a Markov chain of diffusion steps that gradually injects random noise into the data, and then learn to reverse the diffusion process and rebuild the desired data samples from the noise alone. The process operates in two stages: forward and reverse diffusion.

The forward diffusion process, given a data point from the true data distribution, adds a small amount of noise to the sample at each of a preset series of steps. As the number of steps grows and approaches infinity, the sample loses all recognizable characteristics and converges to an isotropic Gaussian distribution.

During the reverse diffusion phase, the diffusion model learns to undo the effect of the added noise and to steer the generated image back toward the original data distribution.

A trained model can do this starting from pure Gaussian noise and a prompt. The ADM-G method differs from the plain one in that a model, either CLIP or a customized transformer, influences the reverse diffusion phase using the tokens of the input text prompt.
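The forward process can be sketched with the standard closed-form expression for a noised sample, x_t = sqrt(ᾱ_t) · x_0 + sqrt(1 − ᾱ_t) · ε, where ᾱ_t is the running product of (1 − β) over the steps so far. The linear noise schedule and its endpoints are assumptions borrowed from the original DDPM setup rather than GLIDE's exact configuration, and the three-value "image" is a toy.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, rising linearly (assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar_t: fraction of the original signal variance left after t steps."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def forward_diffuse(x0, alpha_bar, rng):
    """Sample x_t directly from x_0 using the closed-form forward process."""
    signal = math.sqrt(alpha_bar)
    noise = math.sqrt(1.0 - alpha_bar)
    return [signal * x + noise * rng.gauss(0.0, 1.0) for x in x0]

rng = random.Random(0)
alpha_bars = cumulative_alphas(linear_beta_schedule(1000))

x0 = [1.0, -0.5, 0.25]  # toy "image"
slightly_noisy = forward_diffuse(x0, alpha_bars[0], rng)
almost_pure_noise = forward_diffuse(x0, alpha_bars[-1], rng)
```

After the first step almost all of the signal survives, while after the last step the signal coefficient is close to zero and the sample is essentially Gaussian noise.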
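The reverse (backward) phase can be sketched as a DDPM-style sampling loop that starts from Gaussian noise and repeatedly removes the predicted noise. The big assumption is the noise predictor: instead of a trained, text-conditioned network, `oracle_noise_prediction` is a hypothetical stand-in that knows the single target it should recover. The linear schedule is also assumed, not taken from GLIDE.

```python
import math
import random

def make_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linear betas and their cumulative alpha_bar products (assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    betas = [beta_start + i * step for i in range(num_steps)]
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return betas, alpha_bars

def oracle_noise_prediction(x_t, alpha_bar, target):
    """Stand-in for the trained network: exact noise if all data equals `target`."""
    scale = math.sqrt(1.0 - alpha_bar)
    return [(x - math.sqrt(alpha_bar) * t) / scale for x, t in zip(x_t, target)]

def reverse_diffuse(target, num_steps=1000, seed=0):
    rng = random.Random(seed)
    betas, alpha_bars = make_schedule(num_steps)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # start from pure Gaussian noise
    for t in reversed(range(num_steps)):
        eps = oracle_noise_prediction(x, alpha_bars[t], target)
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        mean = [(xi - coef * e) / math.sqrt(1.0 - betas[t]) for xi, e in zip(x, eps)]
        sigma = math.sqrt(betas[t]) if t > 0 else 0.0  # no noise on the last step
        x = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
    return x

target = [1.0, -2.0, 0.5]
sample = reverse_diffuse(target)
```

Because the oracle is exact, the loop lands on the target. In a guided model, the prompt would enter through the noise prediction, which is where CLIP or the text transformer steers each step.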

GLIDE capabilities

1. Image generation

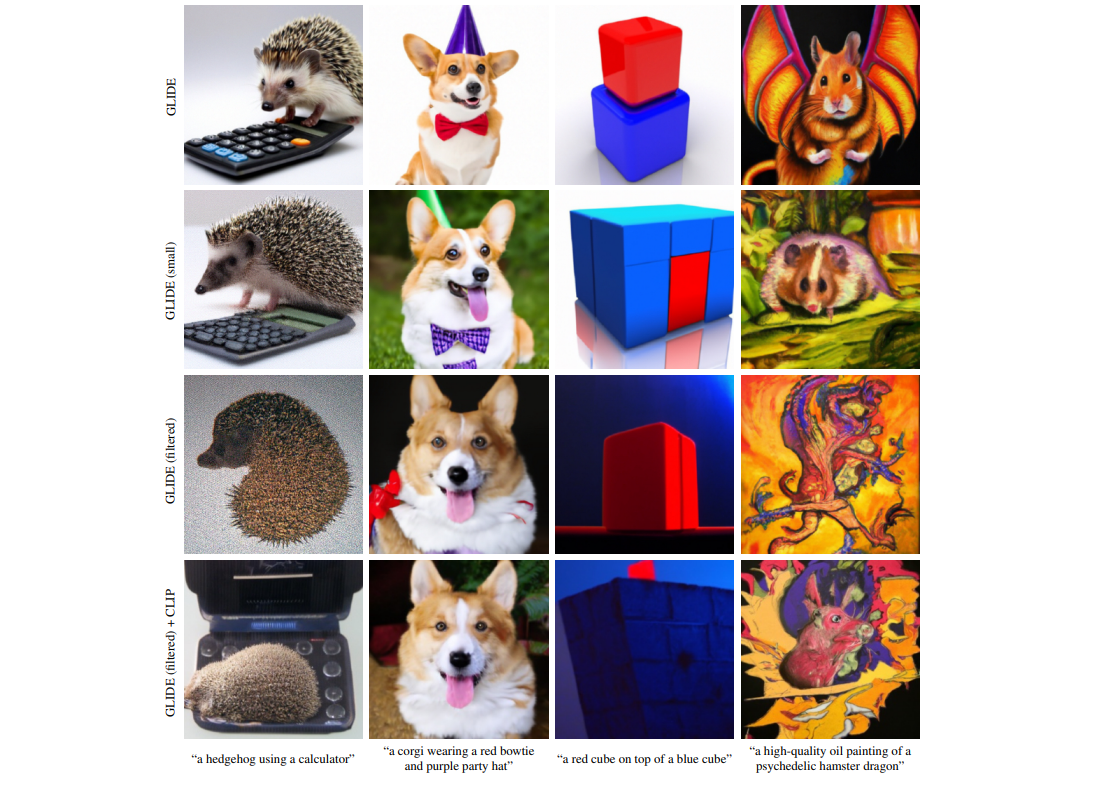

The most common use of GLIDE will probably be image synthesis. Although the pictures are small and GLIDE has difficulty with animal and human forms, the potential for one-shot image production is nearly endless.

It can create photos of animals, celebrities, landscapes, buildings, and much more, and it can do so in a variety of art styles as well as photorealistically. The authors assert that GLIDE is capable of interpreting and adapting a broad variety of textual inputs into a visual format, as the accompanying samples show.

2. GLIDE inpainting

GLIDE’s automatic photo inpainting is arguably its most fascinating use. GLIDE can take an existing picture as input, process it with the text prompt in mind for the regions that need to be altered, and then modify those regions with ease.

It can also be used in conjunction with an editing method, such as SDEdit, to produce even better results. In the future, apps that take advantage of capabilities like these might be crucial in developing code-free picture-editing approaches.
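The masking idea behind inpainting can be illustrated with a tiny compositing helper: pixels outside the edit mask are kept from the original, and pixels inside it come from the generated content. This is only a sketch of the general concept with made-up pixel values and a hypothetical function name. It does not reproduce how GLIDE itself conditions on the masked image.

```python
def composite_with_mask(original, generated, edit_mask):
    """Keep original pixels where the mask is 0, use generated pixels where it is 1."""
    return [
        [gen if m else orig for orig, gen, m in zip(orig_row, gen_row, mask_row)]
        for orig_row, gen_row, mask_row in zip(original, generated, edit_mask)
    ]

# Toy 2 x 3 grayscale "images" and a mask marking the region to repaint.
original = [[10, 10, 10], [10, 10, 10]]
generated = [[99, 98, 97], [96, 95, 94]]
edit_mask = [[0, 1, 1], [0, 0, 1]]

edited = composite_with_mask(original, generated, edit_mask)
# edited == [[10, 98, 97], [10, 10, 94]]
```

A real inpainting model also has to make the new region blend with its surroundings, for example by matching lighting and shadows, which simple compositing cannot do by itself.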

Conclusion

Now that we’ve gone through the process, you should grasp the fundamentals of how GLIDE works, as well as the breadth of its capabilities in picture creation and image editing.
