
Voice calls are being phased out in favor of text and visuals in the communication sector. According to a Facebook poll, more than half of buyers prefer to buy from a company that they can speak with. Chatting has become the new socially acceptable mode of communication.

It enables businesses to communicate with their clients at any time and from any location. Chatbots are increasingly gaining popularity among companies and customers due to their ease of use and reduced wait times.

Chatbots, or automated conversational programs, give clients a more personalized way to access services through a text-based interface. The newest AI-powered chatbots can recognize a query (a question, command, order, etc.) made by a person, or even by another bot, in a specific context and respond appropriately (with an answer, an action, etc.).

In this post, we’ll go over what chatbots are, their benefits, use cases, and how to make your own deep learning chatbot in Python, among other things.

Let’s get started.

So, what are chatbots?

A chatbot is frequently described as one of the most advanced and promising forms of human-machine interaction. These digital assistants improve customer experience by streamlining interactions between people and services.

Simultaneously, they provide businesses with new options to optimize the customer contact process for efficiency, which can cut conventional support expenses.

In a nutshell, a chatbot is AI-based software designed to communicate with humans in their natural languages. Chatbots usually interact through voice or text, and they can mimic human language well enough to hold a human-like conversation.

Chatbots learn from their interactions with users, becoming more realistic and efficient over time. They can handle a wide range of business activities, such as authorizing spending, engaging with consumers online, and generating leads.

Creating your own deep learning chatbot with Python

There are many distinct kinds of chatbots in the field of machine learning and AI. Some are virtual assistants, some exist purely for conversation, and others act as customer service agents.

You’ve probably seen some of the ones businesses use to answer inquiries. In this tutorial, we’ll make a small chatbot that answers frequently asked questions.

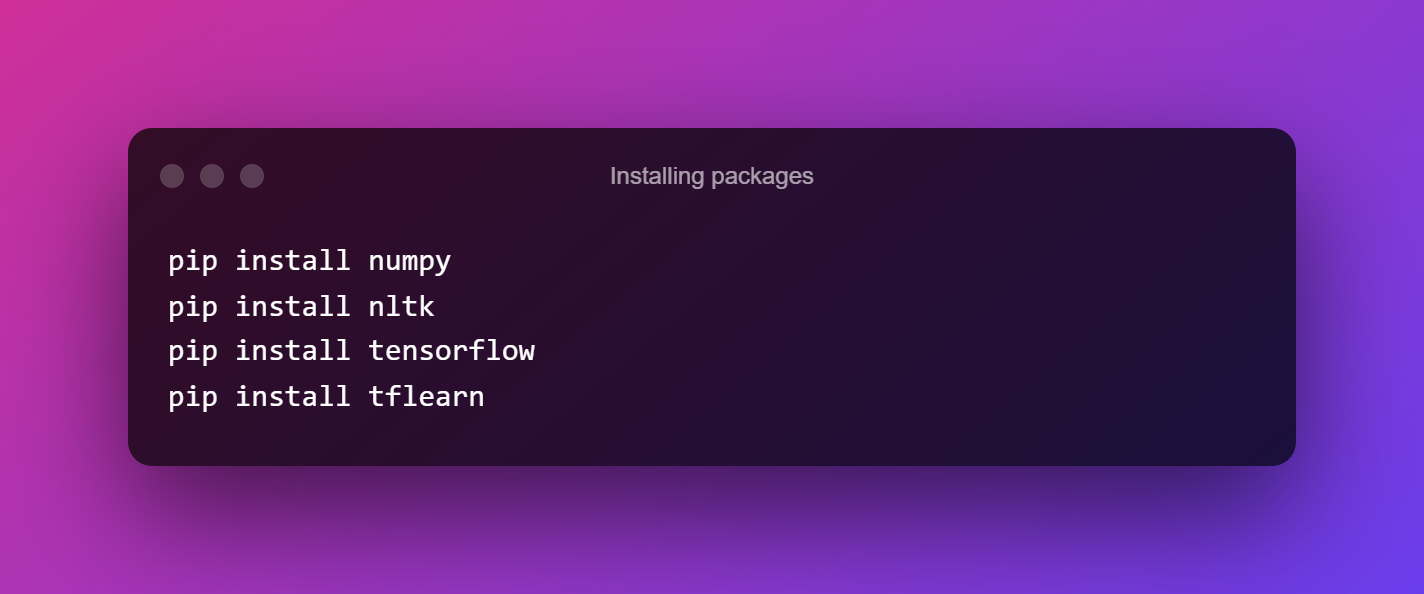

1. Installing packages

Our first step is to install the following packages.

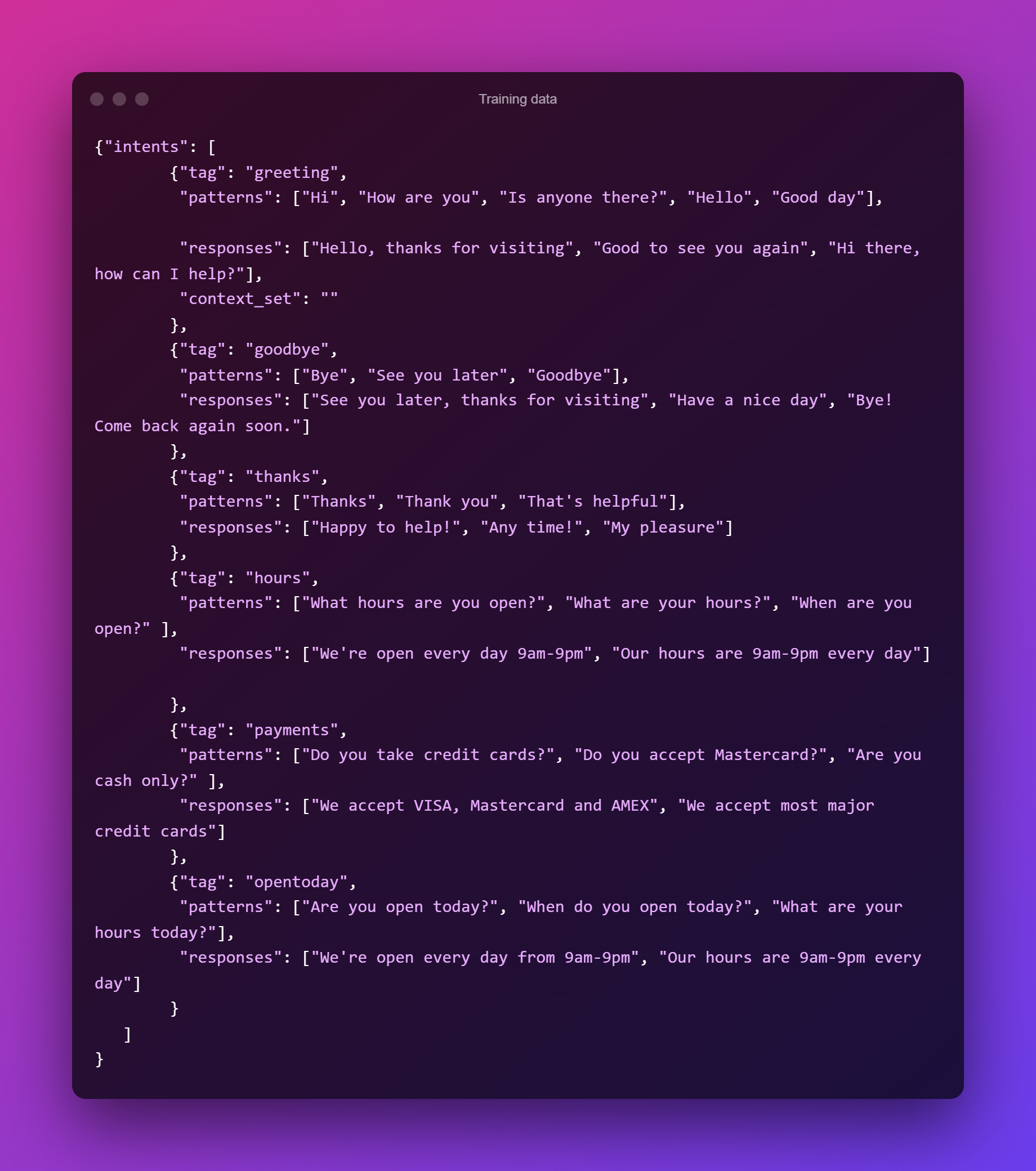

2. Training Data

Now it’s time to figure out what type of information we’ll need to give our chatbot. We don’t need to download any large datasets because this is a simple chatbot.

We’ll only use data that we’ve created ourselves. To follow along with the tutorial, you’ll need to create a .json file with the same format as the one shown below. My file is named “intents.json.”

The JSON file defines groups of messages that the user is likely to type and maps each group to a set of relevant responses. Each dictionary in the file has a tag that identifies which group its messages belong to.

We’ll use this information to train a neural network to classify a sentence as one of the tags in our file.

We can then simply pick a response from the matching group and show it to the user. The more tags, responses, and patterns you provide, the better and more sophisticated the chatbot will be.
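As a rough illustration of that layout, the sketch below builds a two-intent file from Python. The key names (`intents`, `tag`, `patterns`, `responses`) are the ones this tutorial's code reads; the tags and phrases themselves are made-up placeholders, not the author's data.

```python
import json

# Placeholder content: the tags, patterns, and responses are invented for illustration.
intents = {
    "intents": [
        {
            "tag": "greeting",
            "patterns": ["Hi", "Hello", "How are you?"],
            "responses": ["Hello!", "Hi there, how can I help?"],
        },
        {
            "tag": "hours",
            "patterns": ["When are you open?", "What are your opening hours?"],
            "responses": ["We are open from 9am to 5pm, Monday to Friday."],
        },
    ]
}

# Serialize to the text you would save as intents.json.
intents_json = json.dumps(intents, indent=2)
print(intents_json)
```

Each entry groups the phrasings a user might type under one tag, so adding a new topic only means appending another dictionary to the list.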

3. JSON data loading

We’ll start by importing some modules and loading our .json data. Make sure your .json file is in the same directory as your Python script. Our .json data will then be stored in the data variable.

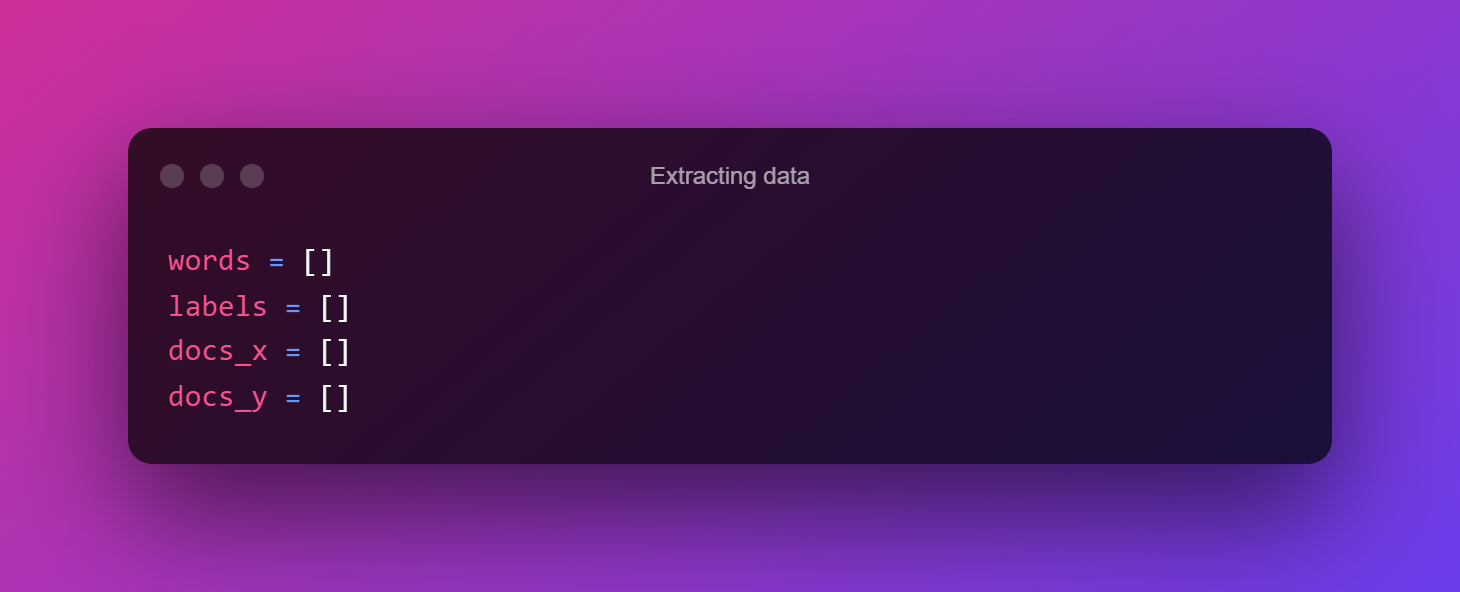
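A minimal sketch of this loading step might look like the following. The `load_intents` helper is a hypothetical name, and the demo writes a tiny placeholder file to a temporary directory only so that the snippet runs on its own; in the tutorial the file would simply sit next to the script.

```python
import json
import os
import tempfile


def load_intents(path):
    """Read the intents file and return the parsed dictionary."""
    with open(path, encoding="utf-8") as file:
        return json.load(file)


# Demo only: create a small placeholder intents.json, then load it back.
sample = {"intents": [{"tag": "greeting", "patterns": ["Hi"], "responses": ["Hello!"]}]}
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "intents.json")
    with open(path, "w", encoding="utf-8") as file:
        json.dump(sample, file)
    data = load_intents(path)

print(data["intents"][0]["tag"])
```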

4. Data Extraction

Now it’s time to extract the information we need from our JSON file. All of the patterns, as well as the class/tag to which they belong, are required.

We’ll also need a list of all the unique terms in our patterns (for reasons we’ll explain later), so let’s create some blank lists to keep track of these values.

Now we’ll loop through our JSON data and retrieve the information we need. Rather than keeping the patterns as strings, we’ll use nltk.word_tokenize to turn each pattern into a list of words.

We’ll then add each tokenized pattern to our docs_x list and its associated tag to the docs_y list.

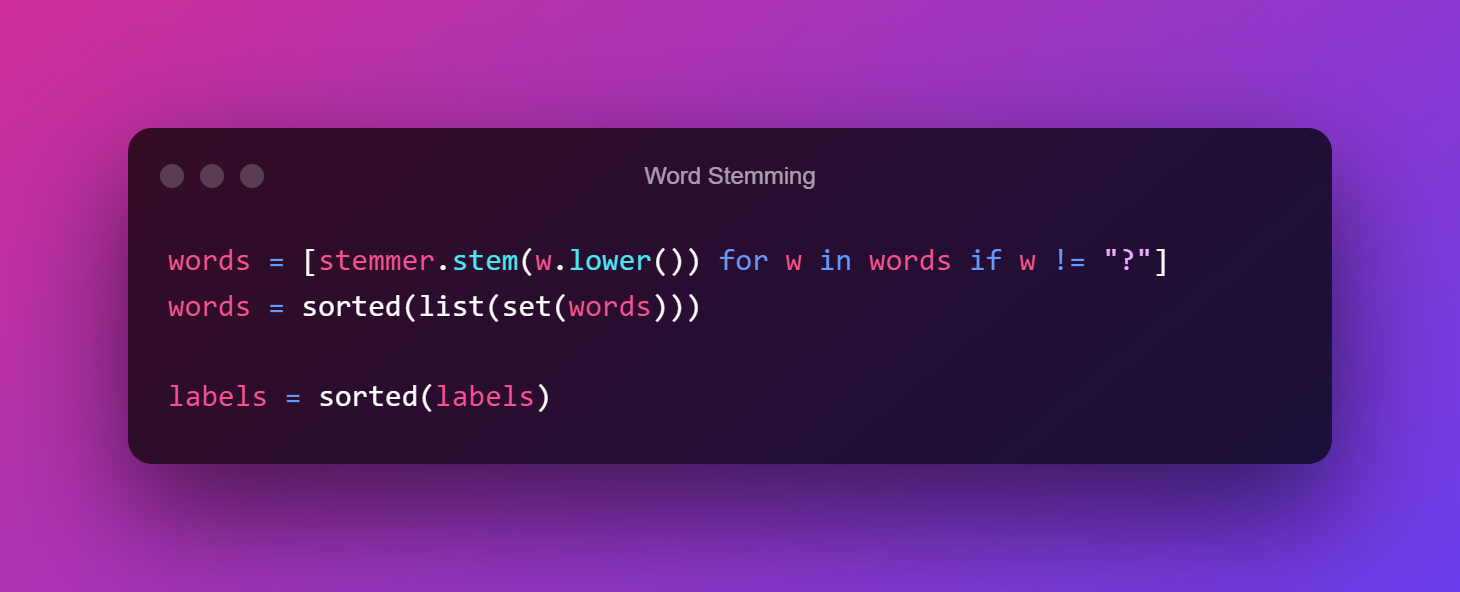
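The loop described above can be sketched roughly as follows. To keep the snippet runnable without NLTK's tokenizer data, a small regex-based `simple_tokenize` function (my own stand-in, not part of NLTK) replaces `nltk.word_tokenize`; the sample intents are placeholders.

```python
import re


def simple_tokenize(text):
    """Stand-in for nltk.word_tokenize: splits out words and punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)


# Placeholder data in the same shape as intents.json.
data = {
    "intents": [
        {"tag": "greeting", "patterns": ["Hi", "How are you?"], "responses": ["Hello!"]},
        {"tag": "hours", "patterns": ["When are you open?"], "responses": ["9am to 5pm."]},
    ]
}

words = []   # every token seen in any pattern (duplicates included for now)
labels = []  # unique tags
docs_x = []  # one token list per pattern
docs_y = []  # the tag for the pattern at the same index in docs_x

for intent in data["intents"]:
    for pattern in intent["patterns"]:
        tokens = simple_tokenize(pattern)
        words.extend(tokens)
        docs_x.append(tokens)
        docs_y.append(intent["tag"])
    if intent["tag"] not in labels:
        labels.append(intent["tag"])

print(docs_x)
print(docs_y)
```

Keeping docs_x and docs_y aligned by index is what later lets each bag of words be paired with the right output label.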

5. Word Stemming

Finding the root of a word is known as stemming. For instance, the stem of the word “thats” might be “that,” whereas the stem of the word “happening” could be “happen.”

We’ll use this stemming technique to trim down our model’s vocabulary and try to figure out what sentences imply in general. This code will simply generate a unique list of stemmed words that will be used in the next phase of our data preparation.
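To make the idea concrete, here is a small sketch of building the stemmed vocabulary. The `toy_stem` function is a deliberately crude suffix-stripper written for this example so the snippet runs without NLTK; it is not how a real stemmer works, and in practice you would pass something like the `stem` method of an NLTK stemmer instead.

```python
def toy_stem(word):
    """Toy stand-in for a real stemmer: strips a few common English suffixes."""
    for suffix in ("ing", "ed", "s"):
        if word.endswith(suffix) and len(word) > len(suffix) + 2:
            return word[: -len(suffix)]
    return word


def build_vocabulary(tokens, stem=toy_stem):
    """Lowercase and stem every token, drop '?', and return a sorted unique list."""
    stemmed = [stem(token.lower()) for token in tokens if token != "?"]
    return sorted(set(stemmed))


tokens = ["What", "is", "happening", "?", "Thats", "what", "happened"]
vocabulary = build_vocabulary(tokens)
print(vocabulary)  # ['happen', 'is', 'that', 'what']
```

Seven tokens collapse to four vocabulary entries, which is exactly the reduction stemming is meant to provide.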

6. Bag of Words

Now that we’ve loaded our data and generated a stemmed vocabulary, it’s time to talk about the bag of words. Neural networks and machine learning algorithms require numerical input, so our list of strings isn’t going to cut it. We need a way to represent our sentences as numbers, which is where a bag of words comes in.

Each sentence will be represented by a list whose length equals the number of words in our model’s vocabulary. Each position in the list corresponds to one word in the vocabulary. If the position holds a 1, the word appears in our sentence; if it holds a 0, the word does not appear.

We call it a bag of words because we don’t know the sequence in which the words appear in the phrase; all we know is that they exist in our model’s vocabulary.

In addition to structuring our input, we must also format our output so that the neural network understands it. We’ll build output lists that are the length of the number of labels/tags in our dataset, similar to a bag of words. Each place in the list represents a unique label/tag, and a 1 in any of those locations indicates which label/tag is being represented.

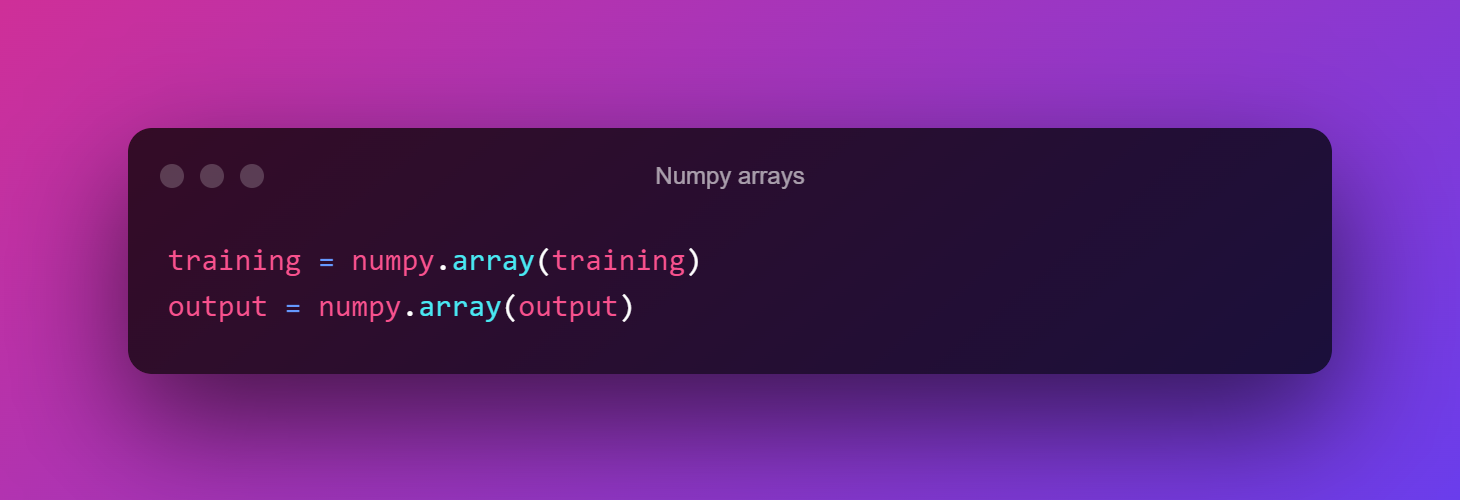

Finally, we’ll use NumPy arrays to store our training data and output.
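Both encodings can be sketched in plain Python as below. The helper names `encode_bag` and `encode_label` and the tiny vocabulary are assumptions made for this example; the token lists are taken to be already lowercased and stemmed.

```python
def encode_bag(tokens, vocabulary):
    """Return a 0/1 list marking which vocabulary words occur in tokens."""
    token_set = set(tokens)
    return [1 if word in token_set else 0 for word in vocabulary]


def encode_label(tag, labels):
    """Return a one-hot list with a 1 at the position of tag."""
    row = [0] * len(labels)
    row[labels.index(tag)] = 1
    return row


# Placeholder vocabulary, labels, and documents.
vocabulary = ["hello", "hi", "hour", "open"]
labels = ["greeting", "hours"]
docs_x = [["hi"], ["hello"], ["open", "hour"]]
docs_y = ["greeting", "greeting", "hours"]

training = [encode_bag(doc, vocabulary) for doc in docs_x]
output = [encode_label(tag, labels) for tag in docs_y]

print(training)  # [[0, 1, 0, 0], [1, 0, 0, 0], [0, 0, 1, 1]]
print(output)    # [[1, 0], [1, 0], [0, 1]]
```

Passing `training` and `output` to `numpy.array` then gives the arrays the model expects. Note that word order is lost: `["open", "hour"]` and `["hour", "open"]` produce the same bag.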

7. Model Development

We’re ready to start building and training a model now that we’ve preprocessed all of our data. We’ll utilize a very basic feed-forward neural network with two hidden layers for our objectives.

Our network’s purpose will be to look at a collection of words and assign them to a class (one of our tags from the JSON file). We’ll begin by establishing our model’s architecture. Keep in mind that you can play with some of the numbers to come up with a better model! Machine learning is mostly based on trial and error.

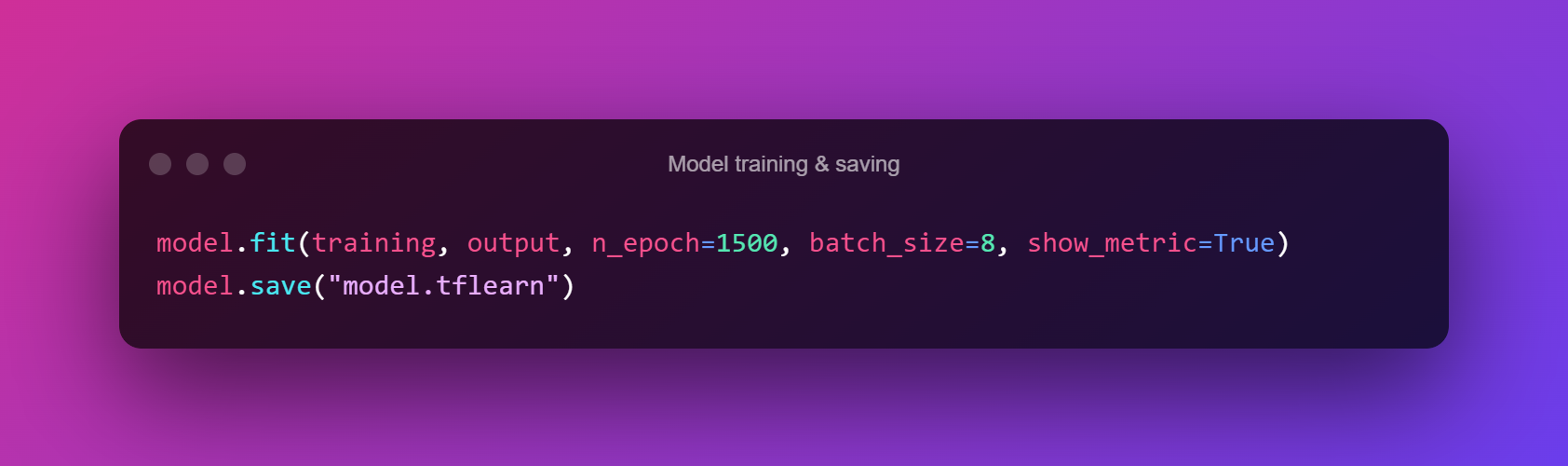
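To show the shape of such a network without depending on a deep learning library, here is a NumPy-only sketch of the forward pass: an input layer, two hidden layers, and a softmax output with one unit per tag. The hidden size of 8, the ReLU activation, and the random initial weights are illustrative choices of mine, and this is not the library code shown in the image.

```python
import numpy as np


def softmax(logits):
    """Turn each row of scores into probabilities that sum to 1."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def init_network(n_inputs, n_hidden, n_classes, seed=0):
    """Create (weights, bias) pairs for two hidden layers and an output layer."""
    rng = np.random.default_rng(seed)
    sizes = [n_inputs, n_hidden, n_hidden, n_classes]
    return [(rng.normal(0, 0.1, (a, b)), np.zeros(b)) for a, b in zip(sizes[:-1], sizes[1:])]


def forward(network, x):
    """Run a batch of bag-of-words rows through the network."""
    *hidden, (w_out, b_out) = network
    for w, b in hidden:
        x = np.maximum(0, x @ w + b)  # ReLU hidden layer (assumed activation)
    return softmax(x @ w_out + b_out)


# Input width = vocabulary size, output width = number of tags (placeholder values).
network = init_network(n_inputs=4, n_hidden=8, n_classes=2)
probs = forward(network, np.array([[0, 1, 0, 0]], dtype=float))
print(probs.shape)
```

The only sizes dictated by the data are the first and last: the input must match the vocabulary length and the output must match the number of tags. The hidden sizes are the numbers you can experiment with.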

8. Model Training & Saving

It’s time to train our model on our data now that we’ve set it up! We’ll achieve this by fitting our data to the model. The number of epochs we provide is the number of times the model will be exposed to the same data during training.

Once we’ve finished training the model, we can save it to the file model.tflearn so that it can be used in other scripts.

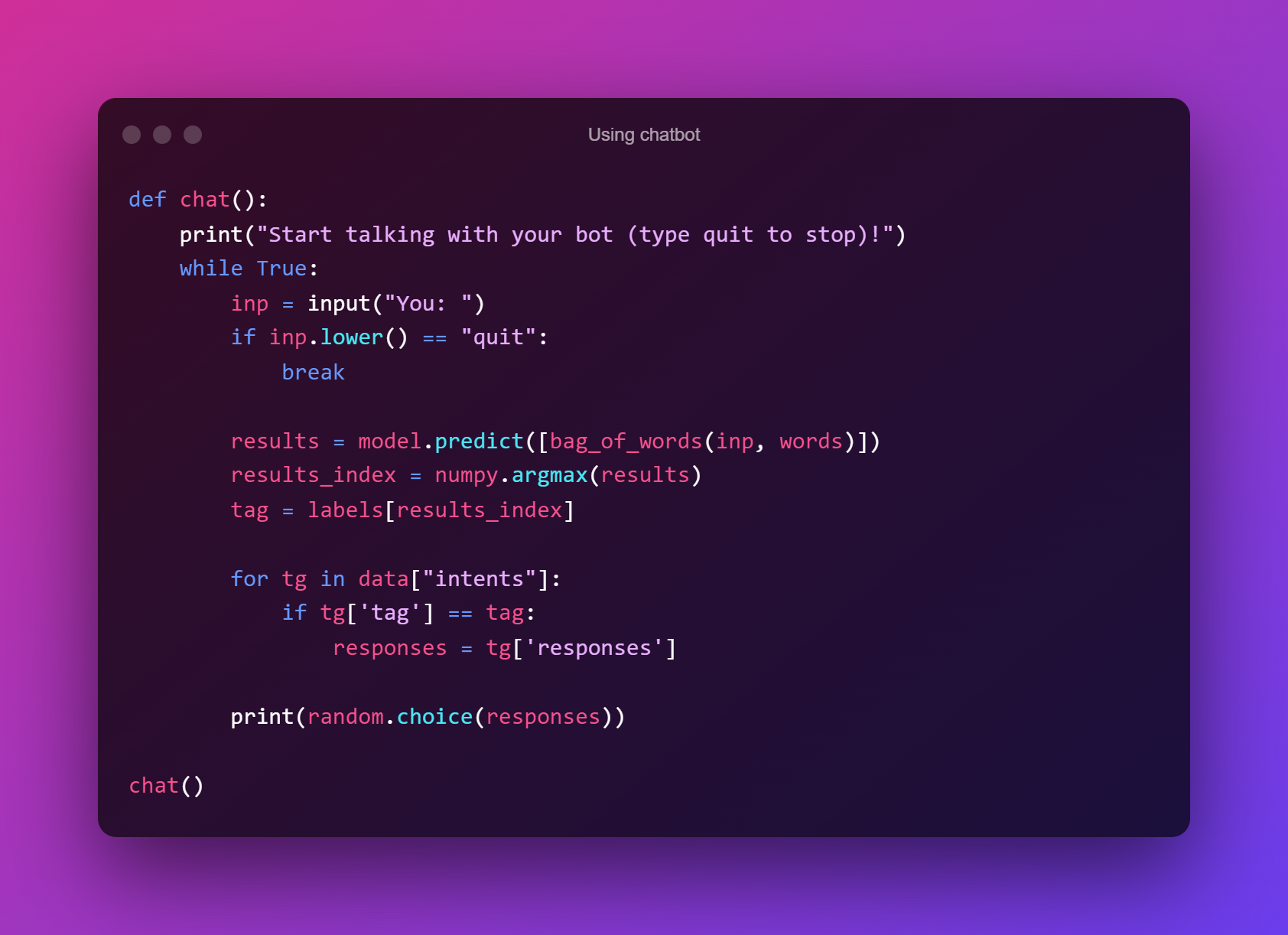
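To illustrate what an epoch means, the sketch below trains a much simpler model than the tutorial's: a single-layer softmax classifier fitted with plain gradient descent in NumPy. It is a swapped-in technique chosen so the loop is visible, not the library's training routine; the data, learning rate, and epoch count are placeholder values.

```python
import numpy as np


def train(x, y, n_epoch=200, learning_rate=0.5):
    """Fit a softmax classifier; each epoch is one full pass over the same data."""
    n_samples, n_features = x.shape
    weights = np.zeros((n_features, y.shape[1]))
    bias = np.zeros(y.shape[1])
    losses = []
    for _ in range(n_epoch):
        logits = x @ weights + bias
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        # Cross-entropy loss averaged over the samples.
        losses.append(float(-np.mean(np.sum(y * np.log(probs), axis=1))))
        grad = (probs - y) / n_samples
        weights -= learning_rate * (x.T @ grad)
        bias -= learning_rate * grad.sum(axis=0)
    return weights, bias, losses


# Placeholder bag-of-words rows and one-hot labels.
x = np.array([[0, 1, 0, 0], [1, 0, 0, 0], [0, 0, 1, 1]], dtype=float)
y = np.array([[1, 0], [1, 0], [0, 1]], dtype=float)

weights, bias, losses = train(x, y)
print(f"loss after first epoch: {losses[0]:.3f}, after last epoch: {losses[-1]:.3f}")
```

Because the model sees the same few examples on every pass, more epochs keep lowering the training loss; with a dataset this small, that mostly means memorizing the patterns, which is acceptable for a fixed FAQ bot.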

9. Using a chatbot

Now you can start chatting with your bot.
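At chat time, each user message is converted to a bag of words, passed to the model, and the tag with the highest predicted probability decides which responses to pick from. The sketch below covers only that last step, with a hard-coded probability list standing in for the model's prediction; `pick_response` and the sample intents are hypothetical.

```python
import random


def pick_response(probabilities, labels, intents, rng=random):
    """Map the most probable tag to one of its responses, chosen at random."""
    best_index = max(range(len(probabilities)), key=probabilities.__getitem__)
    tag = labels[best_index]
    for intent in intents:
        if intent["tag"] == tag:
            return tag, rng.choice(intent["responses"])
    raise ValueError(f"no intent found for tag {tag!r}")


# Placeholder labels and intents; labels must be in the order used for training.
labels = ["greeting", "hours"]
intents = [
    {"tag": "greeting", "responses": ["Hello!", "Hi there!"]},
    {"tag": "hours", "responses": ["We are open 9am to 5pm."]},
]

# Pretend the model returned these probabilities for the message "When are you open?"
tag, reply = pick_response([0.1, 0.9], labels, intents)
print(tag, "->", reply)
```

A real loop would wrap this in `while True`, read the message with `input`, and stop when the user types a quit command.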

Benefits of Chatbot

- Because bots can operate 24 hours a day, 365 days a year, without pay, they increase availability and response speed.

- These bots are well suited to tackling big data’s three key Vs: volume, velocity, and variety.

- Chatbots can be used to learn about and understand a company’s customers.

- They deliver these benefits at a low maintenance cost.

- Chatbot applications generate data that can be stored and used for analytics and forecasting.

Use cases

- Resolving customer queries

- Answering frequently asked questions

- Routing customers to the support team

- Collecting customer feedback

- Recommending new offers

- Shopping through conversational commerce

- IT Helpdesk

- Booking accommodations

- Money transfer

Conclusion

Chatbots, like other AI technologies, will be used to augment human skills and liberate humans to be more creative and imaginative by allowing them to spend more time on strategic rather than tactical tasks.

In the near future, as AI is combined with the development of 5G technology, businesses, employees, and consumers are likely to benefit from enhanced chatbot features such as faster recommendations and predictions, as well as easy access to high-definition video conferencing from within a conversation.

These and other possibilities are still being investigated, but as internet connectivity, AI, NLP, and machine learning progress, they will become more prevalent.

Hello,

Thank you for this program.

I have a question.

“bag_of_words” is not defined. I can’t understand this error.

Could you tell me how I can solve this error?

Thank you for this program!! Have a good day

Please add a function before using the chatbot section:

    def bag_of_words(s, words):
        bag = [0 for _ in range(len(words))]

        s_words = nltk.word_tokenize(s)
        s_words = [stemmer.stem(word.lower()) for word in s_words]

        for se in s_words:
            for i, w in enumerate(words):
                if w == se:
                    bag[i] = 1

        return numpy.array(bag)

It will definitely resolve your issue.

I am sharing the complete code with you, so you will get a clear picture of it.

    import nltk
    from nltk.stem.lancaster import LancasterStemmer
    stemmer = LancasterStemmer()

    import numpy
    import tflearn
    import tensorflow
    import random
    import json
    import pickle

    with open("intents.json") as file:
        data = json.load(file)

    # Reuse the preprocessed data if it was cached by an earlier run.
    try:
        with open("data.pickle", "rb") as f:
            words, labels, training, output = pickle.load(f)
    except:
        words = []
        labels = []
        docs_x = []
        docs_y = []

        # Tokenize every pattern and remember which tag it belongs to.
        for intent in data["intents"]:
            for pattern in intent["patterns"]:
                wrds = nltk.word_tokenize(pattern)
                words.extend(wrds)
                docs_x.append(wrds)
                docs_y.append(intent["tag"])

            if intent["tag"] not in labels:
                labels.append(intent["tag"])

        # Build the sorted, de-duplicated stemmed vocabulary.
        words = [stemmer.stem(w.lower()) for w in words if w != "?"]
        words = sorted(list(set(words)))

        labels = sorted(labels)

        training = []
        output = []

        out_empty = [0 for _ in range(len(labels))]

        # Encode each pattern as a bag of words and each tag as a one-hot row.
        for x, doc in enumerate(docs_x):
            bag = []

            wrds = [stemmer.stem(w.lower()) for w in doc]

            for w in words:
                if w in wrds:
                    bag.append(1)
                else:
                    bag.append(0)

            output_row = out_empty[:]
            output_row[labels.index(docs_y[x])] = 1

            training.append(bag)
            output.append(output_row)

        training = numpy.array(training)
        output = numpy.array(output)

        with open("data.pickle", "wb") as f:
            pickle.dump((words, labels, training, output), f)

    # TensorFlow 1.x API; under TensorFlow 2.x this call is
    # tensorflow.compat.v1.reset_default_graph().
    tensorflow.reset_default_graph()

    # Input layer, two hidden layers of 8 units, and a softmax output layer.
    net = tflearn.input_data(shape=[None, len(training[0])])
    net = tflearn.fully_connected(net, 8)
    net = tflearn.fully_connected(net, 8)
    net = tflearn.fully_connected(net, len(output[0]), activation="softmax")
    net = tflearn.regression(net)

    model = tflearn.DNN(net)

    # Load a saved model if one exists; otherwise train and save it.
    try:
        model.load("model.tflearn")
    except:
        model.fit(training, output, n_epoch=1500, batch_size=8, show_metric=True)
        model.save("model.tflearn")


    def bag_of_words(s, words):
        bag = [0 for _ in range(len(words))]

        s_words = nltk.word_tokenize(s)
        s_words = [stemmer.stem(word.lower()) for word in s_words]

        for se in s_words:
            for i, w in enumerate(words):
                if w == se:
                    bag[i] = 1

        return numpy.array(bag)


    def chat():
        print("Start talking with the bot (type quit to stop)!")
        while True:
            inp = input("You: ")
            if inp.lower() == "quit":
                break

            # Predict a tag for the message and reply with one of its responses.
            results = model.predict([bag_of_words(inp, words)])
            results_index = numpy.argmax(results)
            tag = labels[results_index]

            for tg in data["intents"]:
                if tg["tag"] == tag:
                    responses = tg["responses"]

            print(random.choice(responses))

    chat()

Thank you,

Happy coding!

Hello,

Could you give me an idea of the process to follow to create a chatbot in Python when the information comes from a survey in Excel? Thank you!