Did you know that NVIDIA’s Instant NeRF neural rendering model can learn a 3D scene from 2D photos in seconds, and then render images of that scene in milliseconds?

The underlying technique, known as inverse rendering, uses AI to approximate how light behaves in the real world, which makes it possible to reconstruct a digital 3D scene from a collection of still photographs.

NVIDIA’s research team developed an approach that completes this task almost instantly, making it one of the first models of its kind to combine ultra-fast neural network training with rapid rendering.

This article takes a closer look at NVIDIA’s Instant NeRF, including how it works, how fast it is, and where it could be used.

So, what is NeRF?

NeRF stands for neural radiance fields, a technique for synthesizing novel views of complex scenes by optimizing an underlying continuous volumetric scene function from a sparse set of input views.

Given a collection of 2D photos as input, NeRFs use neural networks to represent and render 3D scenes.

The neural network needs a few dozen photos taken from multiple positions around the scene, together with the camera position for each shot.

The more quickly these pictures are captured, the better, especially in scenes that include people or other moving objects.

If there is too much motion while the 2D images are being captured, the AI-generated 3D scene will come out blurry.

From there, the NeRF essentially fills in the gaps: it trains a small neural network to reconstruct the scene by predicting the color of light radiating in any direction from any point in the 3D space.
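To make the idea of predicting light at every point more concrete, here is a minimal Python sketch of the compositing step that NeRF-style renderers typically use to turn samples along one camera ray into a single pixel color. It is an illustration under stated assumptions, not NVIDIA’s implementation: the function name `composite_ray` is hypothetical, and the densities and colors are hard-coded toy values, whereas in a real NeRF a trained neural network would supply them.

```python
import math


def composite_ray(densities, colors, deltas):
    """Blend samples along one camera ray into a single RGB pixel.

    densities: volume density at each sample, ordered front to back
    colors:    (r, g, b) tuple at each sample, each channel in [0, 1]
    deltas:    distance covered by each sample along the ray
    """
    pixel = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light that still reaches the camera
    for sigma, rgb, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha          # contribution to the pixel
        for channel in range(3):
            pixel[channel] += weight * rgb[channel]
        transmittance *= 1.0 - alpha  # later samples are partly hidden
    return pixel


# Toy ray (assumed values): empty space, then a dense red surface,
# then a dense blue surface hidden behind it.
pixel = composite_ray(
    densities=[0.0, 50.0, 50.0],
    colors=[(0.0, 1.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0)],
    deltas=[0.1, 0.1, 0.1],
)
```

In this toy example the pixel comes out almost entirely red, because the first dense surface blocks nearly all the light from the blue one behind it. Rendering a full image repeats this calculation for one ray per pixel.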

According to NVIDIA, Instant NeRF needs only seconds to train on a few dozen photos and can then render the resulting 3D scene within tens of milliseconds, which made it the fastest NeRF technique at the time of its release.

Instant NeRF works so quickly that it is virtually instantaneous, hence its name. If traditional 3D representations like polygonal meshes are akin to vector images, NeRFs are like bitmap images: they densely capture the way light radiates from an object or within a scene.

In that sense, Instant NeRF could be as important to 3D as digital cameras and JPEG compression have been to 2D photography, dramatically increasing the speed, convenience, and reach of 3D capture and sharing.

Instant NeRF can be used to create avatars or even entire scenes for virtual worlds.

To pay homage to the early days of Polaroid photos, the NVIDIA Research team recreated a famous shot of Andy Warhol taking an instant photo and converted it into a 3D scene using Instant NeRF.

Is it really 1,000 times faster?

Before NeRF, creating a 3D scene with traditional methods could take hours or longer, depending on its complexity and resolution.

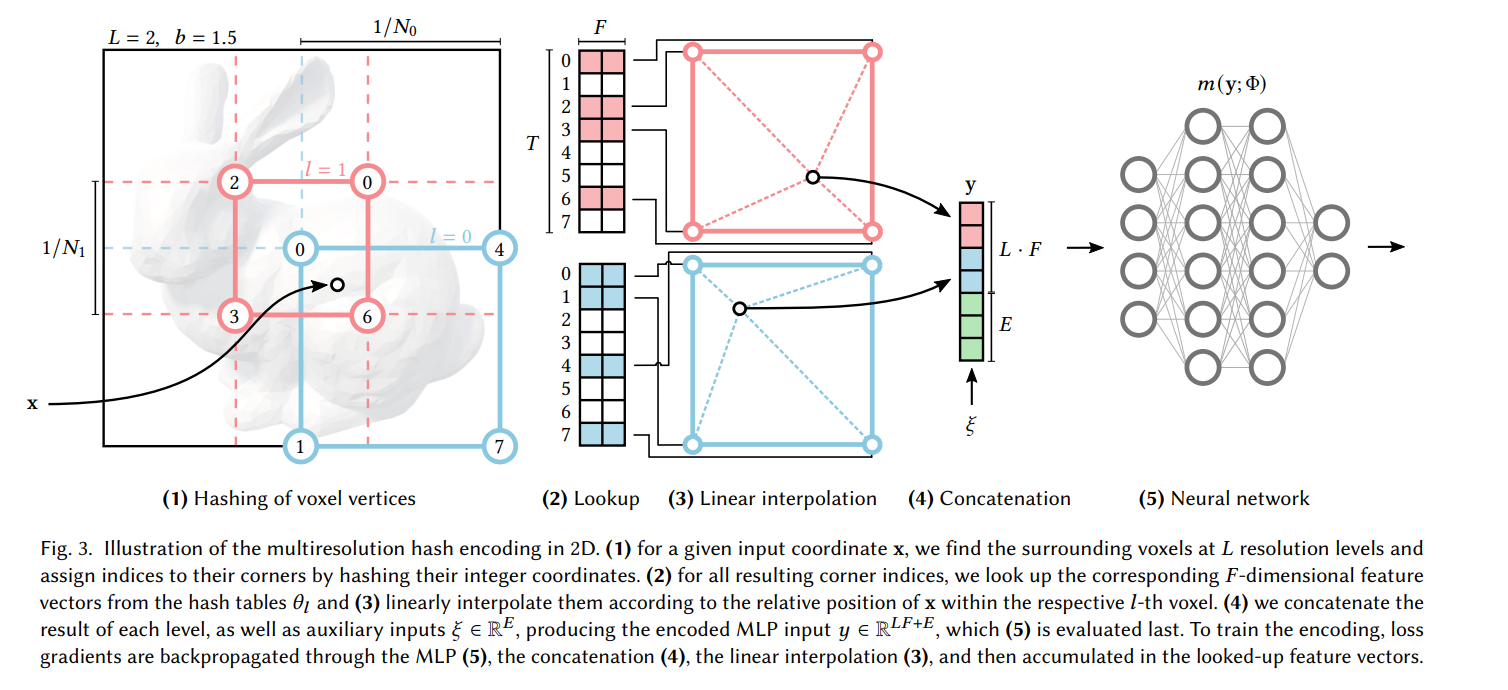

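Bringing AI into the picture sped things up, but early NeRF models could still take hours to train. Using a technique developed by NVIDIA called multi-resolution hash encoding, Instant NeRF cuts training and rendering times by several orders of magnitude, with NVIDIA reporting speedups of more than 1,000 times in some cases.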

The model was built using the NVIDIA CUDA Toolkit and the Tiny CUDA Neural Networks library. According to NVIDIA, because it is a lightweight neural network, it can be trained and run on a single NVIDIA GPU, and it runs fastest on cards with NVIDIA Tensor Cores.
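The multi-resolution hash encoding mentioned above can be sketched in a few lines of plain Python. The idea is to look up small feature vectors on several grids of increasing resolution, blend the eight surrounding grid corners at each level, and concatenate the results as input for a small neural network. This is a simplified, CPU-only illustration, not the Tiny CUDA Neural Networks code: the class name `HashEncoding` and its parameter names are hypothetical, the settings are tiny toy values, and the feature tables stay at their random initial values because no training happens here. The per-axis hash multipliers are the ones given in the Instant NGP paper.

```python
import math
import random

# Per-axis hash multipliers as given in the Instant NGP paper.
PRIMES = (1, 2654435761, 805459861)


class HashEncoding:
    """Toy multi-resolution hash encoding for points in [0, 1]^3."""

    def __init__(self, n_levels=4, n_features=2, log2_table_size=10,
                 base_resolution=4, per_level_scale=2.0, seed=0):
        rng = random.Random(seed)
        self.table_size = 2 ** log2_table_size
        self.n_features = n_features
        # Grid resolution grows geometrically from coarse to fine.
        self.resolutions = [
            int(math.floor(base_resolution * per_level_scale ** level))
            for level in range(n_levels)
        ]
        # One table of small feature vectors per level.
        # Training would update these values; here they stay random.
        self.tables = [
            [[rng.uniform(-1e-4, 1e-4) for _ in range(n_features)]
             for _ in range(self.table_size)]
            for _ in self.resolutions
        ]

    def _slot(self, corner, resolution):
        x, y, z = corner
        side = resolution + 1  # grid vertices per axis
        if side ** 3 <= self.table_size:
            # Coarse level: every vertex gets its own table slot.
            return x + side * (y + side * z)
        # Fine level: vertices are hashed and may share slots.
        hashed = (x * PRIMES[0]) ^ (y * PRIMES[1]) ^ (z * PRIMES[2])
        return hashed % self.table_size

    def encode(self, point):
        """Return the concatenated per-level features for one 3D point."""
        features = []
        for resolution, table in zip(self.resolutions, self.tables):
            scaled = [coordinate * resolution for coordinate in point]
            base = [min(int(math.floor(s)), resolution - 1) for s in scaled]
            frac = [s - b for s, b in zip(scaled, base)]
            level_features = [0.0] * self.n_features
            for dx in (0, 1):
                for dy in (0, 1):
                    for dz in (0, 1):
                        # Trilinear interpolation weight of this corner.
                        weight = ((frac[0] if dx else 1.0 - frac[0]) *
                                  (frac[1] if dy else 1.0 - frac[1]) *
                                  (frac[2] if dz else 1.0 - frac[2]))
                        corner = (base[0] + dx, base[1] + dy, base[2] + dz)
                        slot = self._slot(corner, resolution)
                        for i in range(self.n_features):
                            level_features[i] += weight * table[slot][i]
            features.extend(level_features)
        return features


encoding = HashEncoding()
encoded = encoding.encode((0.3, 0.7, 0.5))  # 4 levels x 2 features = 8 values
```

Because the fine levels share table slots through hashing, memory use stays fixed no matter how detailed the grid becomes. The network that consumes these features can therefore be small, which fits NVIDIA’s description of the model as lightweight.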

Use Cases

Self-driving cars are one of the most promising potential applications of this technology. These vehicles operate largely by building a picture of their surroundings as they move.

The problem with current approaches is that they can be cumbersome and slow.

With Instant NeRF, a self-driving car could capture still photographs, turn them into a 3D scene, and use that information to estimate the size and shape of real-world objects.

Another potential use is in the metaverse and video game production industries, because Instant NeRF makes it possible to build avatars or even entire virtual worlds quickly.

Very little manual 3D character modeling would be required, because you would only need to run the neural network and it would generate the character for you.

In addition, NVIDIA is still exploring how the underlying technique could be applied to other machine learning tasks.

For example, the researchers have suggested that the same input encoding could help speed up reinforcement learning, language translation, and general-purpose deep learning algorithms.

Conclusion

Many graphics problems rely on task-specific data structures to exploit the smoothness or sparsity of the problem at hand.

NVIDIA’s multi-resolution hash encoding offers a practical, learning-based alternative that automatically focuses on relevant detail, regardless of the task.

To learn more about how it works under the hood, check out the official GitHub repository.