Hive is a widely used big data analytics tool in the industry, and it’s a fantastic place to start if you’re new to big data. This Apache Hive tutorial goes through the fundamentals of Apache Hive, why Hive is necessary, its features, and everything else you should know.

Let’s first understand the Hadoop framework on which Apache Hive is built.

Apache Hadoop

Apache Hadoop is a free and open-source platform for storing and processing big datasets ranging in size from gigabytes to petabytes. Hadoop allows clustering numerous computers to analyze enormous datasets in parallel, rather than requiring a single large computer to store and analyze the data.

Two of its core components are MapReduce and the Hadoop Distributed File System (HDFS):

- MapReduce – MapReduce is a parallel programming model for processing huge volumes of structured, semi-structured, and unstructured data on clusters of commodity hardware.

- HDFS – HDFS (Hadoop Distributed File System) is the Hadoop component that stores the data. It’s a fault-tolerant file system that runs on standard hardware.
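To make the MapReduce idea more concrete, here is a minimal single-process sketch in Python. It is not Hadoop code, and the function names are made up for illustration. It only mimics the three stages (map, shuffle, reduce) that a real cluster would run in parallel across many machines.

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Shuffle: group all emitted values by key, as the framework would."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(lines):
    """Run map, shuffle, and reduce one after another on a list of lines."""
    pairs = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())


if __name__ == "__main__":
    print(word_count(["hive runs on hadoop", "hadoop stores data in hdfs"]))
```

On a real cluster, the map calls run on the nodes that hold the data, and the shuffle moves the grouped pairs over the network to the reducers.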

Different sub-projects (tools) in the Hadoop ecosystem, including Sqoop, Pig, and Hive, complement the core Hadoop modules.

- Hive – Hive is a framework for writing SQL-style scripts that perform MapReduce computations.

- Pig – Pig is a procedural data-flow language that may be used to write scripts for MapReduce processing.

- Sqoop – Sqoop is a tool for importing and exporting data between HDFS and relational databases (RDBMS).

What is Apache Hive?

Apache Hive is an open-source data warehouse system for reading, writing, and managing large datasets stored directly in the Apache Hadoop Distributed File System (HDFS) or in other data storage systems like Apache HBase.

SQL developers can use Hive to write Hive Query Language (HQL, also called HiveQL) statements for data query and analysis that are comparable to regular SQL statements. Hive was created to make MapReduce programming easier by eliminating the need to learn and write lengthy Java code. Instead, you write your queries in HQL, and Hive builds the map and reduce functions for you.

The SQL-like interface of Apache Hive has become a de facto standard for running ad hoc queries on, summarizing, and analyzing Hadoop data. When deployed in cloud environments, this solution is especially cost-effective and scalable, which is why many firms, including Netflix and Amazon, continue to develop and improve Apache Hive.

History

Joydeep Sen Sarma and Ashish Thusoo co-created Apache Hive during their time at Facebook. They both recognized that to get the most out of Hadoop, they’d have to write some rather complicated Java MapReduce jobs, and that they wouldn’t be able to train their rapidly expanding engineering and analytics teams in the skills needed to use Hadoop across the company. SQL, by contrast, was an interface that engineers and analysts already used widely.

While SQL could meet the majority of analytics needs, the developers also intended to incorporate Hadoop’s programmability. Apache Hive arose from these two objectives: a SQL-based declarative language that also enabled developers to bring in their own scripts and programs when SQL wasn’t enough.

It was also developed to hold centralized, Hadoop-based metadata about all the datasets in the company, which makes it easier to build a data-driven organization.

How does Apache Hive work?

In a nutshell, Apache Hive converts an input program written in HiveQL (a SQL-like language) into one or more Java MapReduce, Tez, or Spark jobs. (All of these execution engines are compatible with Hadoop YARN.) Apache Hive then organizes the data into tables stored in the Hadoop Distributed File System (HDFS) and runs the jobs on a cluster to produce an answer.

Data

Apache Hive tables are organized much like tables in a relational database, with data units ordered from larger to smaller. Databases are made up of tables, which are divided into partitions, which are further divided into buckets. The data is accessed through HiveQL (Hive Query Language) and can be overwritten or appended. Within each database, table data is serialized, and each table has its own HDFS directory.
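The database, table, partition, and bucket hierarchy maps naturally onto a directory tree. The Python sketch below builds such a path under some assumptions: the database `sales`, the table `orders`, and the partition column `dt` are hypothetical names, the warehouse root is Hive's default location (it is configurable), and the bucket rule is simplified to "integer value modulo bucket count". Treat the file name pattern as illustrative rather than a guarantee for every Hive version.

```python
from posixpath import join

# Default Hive warehouse root; real deployments often configure another location.
WAREHOUSE = "/user/hive/warehouse"


def bucket_for(value, num_buckets):
    """Simplified bucket assignment for an integer column: value mod bucket count."""
    return value % num_buckets


def data_path(database, table, partition, bucket):
    """Build the path of one bucket file inside one partition of a table.

    `partition` is a list of (column, value) pairs; each pair becomes one
    `column=value` directory level. Non-default databases get a `<name>.db`
    directory under the warehouse root.
    """
    part_dirs = [f"{col}={val}" for col, val in partition]
    return join(WAREHOUSE, f"{database}.db", table, *part_dirs, f"{bucket:06d}_0")


if __name__ == "__main__":
    bucket = bucket_for(42, 4)
    print(data_path("sales", "orders", [("dt", "2024-01-01")], bucket))
```

Because every partition is its own directory, a query that filters on the partition column only needs to read the matching directories instead of the whole table.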

Architecture

Now we’ll talk about the most important aspect of Hive Architecture. The components of Apache Hive are as follows:

Metastore – It stores metadata about each table, such as its schema and location, as well as partition metadata. This allows the driver to keep track of the different datasets spread across the cluster. The metadata is kept in a conventional RDBMS. Because this metadata is so important to the driver, a backup server replicates it on a regular basis so that it can be recovered in the event of data loss.

Driver – The driver receives HiveQL statements and functions as a controller. It starts the execution of a statement by creating a session, and it monitors the life cycle and progress of the execution. During the execution of a HiveQL statement, the driver stores the required metadata. It also serves as the collection point for the data or query result obtained after the reduce operation.

Compiler – It compiles the HiveQL query. The compiler first converts the query to an Abstract Syntax Tree (AST). After checking for compatibility and compile-time errors, it converts the AST to a Directed Acyclic Graph (DAG). The result is an execution plan that lists the tasks and the steps MapReduce must take to produce the output the query describes.

Optimizer – It optimizes the DAG by applying various transformations to the execution plan. It can combine transformations for better efficiency, such as turning a pipeline of joins into a single join. To improve speed, the optimizer can also split tasks, such as applying a transformation to data before performing a reduce operation.

Executor – The executor runs the tasks when the compilation and optimization are finished. The jobs are pipelined by the Executor.

CLI, UI, and Thrift Server – The command-line interface (CLI) and the UI allow an external user to interact with Hive. Hive’s Thrift server allows external clients to communicate with Hive over a network, much as the JDBC or ODBC protocols do.
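To illustrate the kind of plan rewrite the optimizer performs, here is a small Python sketch of one well-known optimization: applying a filter before the data reaches the reduce step (often called predicate pushdown). This is not Hive's optimizer code. The rows, the `amount > 10` condition, and the function name are invented for the example, which mimics a query that sums amounts per country for rows above a threshold.

```python
from collections import defaultdict

# Hypothetical input rows for the example.
ROWS = [
    {"country": "US", "amount": 30},
    {"country": "DE", "amount": 5},
    {"country": "US", "amount": 8},
    {"country": "FR", "amount": 50},
]


def run_plan(rows, filter_before_shuffle):
    """Return (totals per country, number of rows sent through the shuffle)."""

    def keep(row):
        return row["amount"] > 10

    # Map side: optionally apply the filter before any data is moved.
    mapped = [r for r in rows if keep(r)] if filter_before_shuffle else list(rows)
    shuffled = len(mapped)

    # Reduce side: the filter is applied here as well, which is a no-op
    # when the rows were already filtered on the map side.
    totals = defaultdict(int)
    for row in mapped:
        if keep(row):
            totals[row["country"]] += row["amount"]
    return dict(totals), shuffled


if __name__ == "__main__":
    print(run_plan(ROWS, filter_before_shuffle=False))
    print(run_plan(ROWS, filter_before_shuffle=True))
```

Both plans return the same totals, but the second one moves fewer rows between the map and reduce stages, which is where much of the cost of a distributed query tends to be.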

Security

Apache Hive is integrated with Hadoop security, which uses Kerberos for mutual authentication between client and server. HDFS dictates the permissions for newly created files in Apache Hive, allowing you to authorize access by user, group, and others.
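HDFS permissions follow a POSIX-like model with read, write, and execute bits for the owning user, the group, and everyone else. As a rough illustration, the Python sketch below splits a numeric mode into those three parts using the standard library. The mode `0o750` is just an example value, and the helper name is made up.

```python
import stat


def describe_permissions(mode):
    """Split a POSIX-style mode such as 0o750 into user, group, and others."""
    text = stat.filemode(mode)[1:]  # drop the leading file-type character
    return {"user": text[0:3], "group": text[3:6], "others": text[6:9]}


if __name__ == "__main__":
    # Owner has full access, the group can read and traverse, others have none.
    print(describe_permissions(0o750))
```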

Key features

- Hive supports external tables, which let you process data without storing it in HDFS.

- It also supports partitioning of table data to improve query speed.

- Apache Hive fits Hadoop’s low-level interface requirements well.

- Hive makes data summarization, querying, and analysis easier.

- HiveQL does not require any programming skills; a simple understanding of SQL queries is sufficient.

- We can also use Hive to conduct ad-hoc queries for data analysis.

- It’s scalable, familiar, and adaptable.

Benefits

Apache Hive allows for end-of-day reports, daily transaction evaluations, ad hoc queries, and data analysis. The comprehensive insights provided by Apache Hive offer significant competitive advantages and make it easier for you to respond to market demands.

Here are some of the benefits of having such information readily available:

- Ease of use – With its SQL-like language, querying data is simple to understand.

- Accelerated data insertion – Because Apache Hive applies the schema when data is read (schema on read) rather than checking it against the table definition on load, data does not have to be read, parsed, and serialized to disk in the database’s internal format when it is inserted. In contrast, in a conventional database, data must be validated each time it is added.

- Superior scalability, flexibility, and cost-effectiveness – Because data is stored in HDFS, Apache Hive can hold hundreds of petabytes of data, making it a far more scalable option than a typical database. When run as a cloud-based Hadoop service, Apache Hive allows customers to swiftly spin virtual servers up and down to meet changing workloads.

- Extensive working capacity – Hive can run up to 100,000 queries per hour against large datasets.
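The accelerated data insertion point rests on applying the schema at read time instead of load time. Here is a minimal Python sketch of that idea, assuming a made-up three-column schema and a tiny comma-separated file. Loading is just storing the raw text, and the types are only applied when the data is read. Values that do not fit the declared type become `None`, comparable to the NULL that Hive typically returns for such fields in text files.

```python
import csv
import io

# Raw data is stored as-is; nothing is validated when it is loaded.
RAW_FILE = "1,alice,30\n2,bob,not_a_number\n"

# Hypothetical table schema: column name and the Python type to cast to.
SCHEMA = [("id", int), ("name", str), ("age", int)]


def read_with_schema(raw_text, schema):
    """Apply the schema while reading; values that do not fit become None."""
    rows = []
    for fields in csv.reader(io.StringIO(raw_text)):
        row = {}
        for (name, cast), value in zip(schema, fields):
            try:
                row[name] = cast(value)
            except ValueError:
                row[name] = None  # unparseable value, reported as missing
        rows.append(row)
    return rows


if __name__ == "__main__":
    for row in read_with_schema(RAW_FILE, SCHEMA):
        print(row)
```

The trade-off is that bad records are only discovered at query time, whereas a conventional database would reject them on insert.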

Limitations

- In general, Apache Hive queries have very high latency.

- Subquery support is limited.

- Real-time queries are not available in Apache Hive.

- Materialized views are not supported in older Hive releases.

- Row-level UPDATE and DELETE operations are not supported on ordinary tables; since Hive 0.14 they are available only on transactional (ACID) tables.

- Not intended for OLTP (online transaction processing).

Getting started with Apache Hive

Apache Hive is a strong Hadoop companion that simplifies and streamlines your workflows. To get the most out of Apache Hive, seamless integration is essential. The first step is to go to the Apache Hive website and download a release.

1. Installing Hive from a Stable Release

Start by downloading the most recent stable release of Hive from one of the Apache download mirrors (see Hive Releases). Next, unpack the tarball, for example with `tar -xzvf hive-x.y.z.tar.gz`. This creates a subdirectory called hive-x.y.z (where x.y.z is the release number).

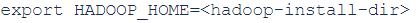

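Set the environment variable HIVE_HOME to point to the installation directory, for example with `export HIVE_HOME=/path/to/hive-x.y.z` (replace the placeholder path with your own).

Finally, add $HIVE_HOME/bin to your PATH, for example with `export PATH=$HIVE_HOME/bin:$PATH`.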

2. Running Hive

Hive uses Hadoop, so you must either have Hadoop in your path or set the HADOOP_HOME environment variable to your Hadoop installation directory.

3. DDL Operations

Creating Hive Table

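A statement such as `CREATE TABLE pokes (foo INT, bar STRING);` generates a table named pokes with two columns, the first of which is an integer and the second of which is a string. The column names foo and bar are only illustrative.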

Browsing through Tables

Listing All the Tables

`SHOW TABLES;` lists all the tables in the current database.

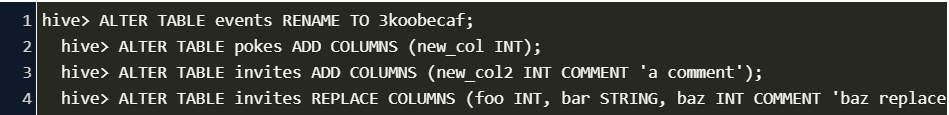

Altering and Dropping Tables

Table names can be changed and columns can be added or replaced with ALTER TABLE, using its RENAME TO, ADD COLUMNS, and REPLACE COLUMNS clauses.

It’s worth noting that REPLACE COLUMNS replaces all existing columns and changes only the table’s schema, not the data. The table must use a native SerDe. REPLACE COLUMNS can also be used to remove columns from a table’s schema by listing only the columns you want to keep.

Dropping Tables

A table is removed with DROP TABLE, for example `DROP TABLE pokes;`.
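The DDL operations in this section can be collected into one short script. The Python sketch below only assembles the HiveQL text. It hands the script to the `hive -e` command-line option when a `hive` executable is found on the PATH, and does nothing otherwise. The column names (`foo`, `bar`, `new_col`) and the table name `pokes_renamed` are illustrative assumptions, not names you have to use.

```python
import shutil
import subprocess

# HiveQL statements for the operations described in this section.
# Column names and the renamed table name are illustrative.
DDL_STATEMENTS = [
    "CREATE TABLE pokes (foo INT, bar STRING)",                 # create a table
    "SHOW TABLES",                                              # list all tables
    "DESCRIBE pokes",                                           # show the columns
    "ALTER TABLE pokes ADD COLUMNS (new_col INT)",              # add a column
    "ALTER TABLE pokes REPLACE COLUMNS (foo INT, bar STRING)",  # drops new_col from the schema
    "ALTER TABLE pokes RENAME TO pokes_renamed",                # rename the table
    "DROP TABLE pokes_renamed",                                 # drop the table
]


def build_script(statements):
    """Join statements into one HiveQL script, ending each with a semicolon."""
    return "\n".join(f"{stmt};" for stmt in statements)


def run_with_hive_cli(script):
    """Run the script with `hive -e` if the hive executable is on the PATH."""
    if shutil.which("hive") is None:
        return None  # Hive is not installed here, so nothing is executed
    return subprocess.run(["hive", "-e", script], check=True)


if __name__ == "__main__":
    print(build_script(DDL_STATEMENTS))
```

You can also paste the printed statements directly into the Hive shell instead of calling `run_with_hive_cli`.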

There are many additional operations and features in Apache Hive that you may learn about by visiting the official website.

Conclusion

Apache Hive is a data warehouse interface, built on top of Apache Hadoop, for querying and analyzing huge datasets. Professionals choose it over other programs, tools, and software because it is designed mainly for large-scale data and is simple to use.

We hope this tutorial helps you get started with Apache Hive and makes your workflows more efficient. Let us know in the comments.
