Have you ever wanted to hear your favorite character talk to you? Natural-sounding text-to-speech is slowly becoming a reality with the help of machine learning.

For example, Google’s NAT TTS model is being used to power their new Custom Voice service. This service uses neural networks to generate a voice trained from recordings. Web apps such as Uberduck provide hundreds of voices for you to choose from to create your own synthesized speech.

In this article, we’ll look over the impressive and equally enigmatic AI model known as 15.ai. Created by an anonymous developer, it may be one of the most efficient and emotive text-to-speech models so far.

What is 15.ai?

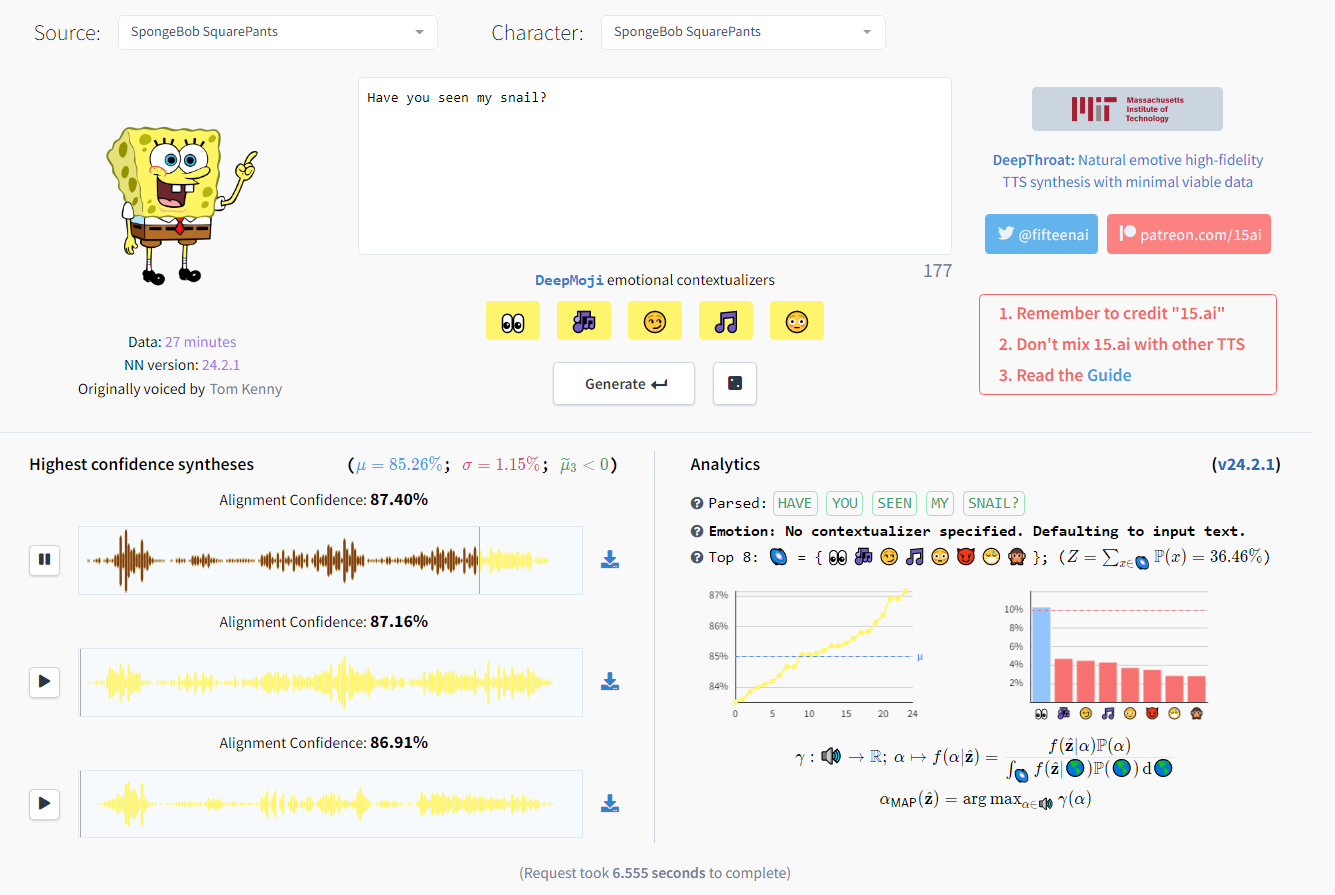

15.ai is an AI web application that generates emotive, high-fidelity text-to-speech voices. Users can choose from a variety of voices, from SpongeBob SquarePants to HAL 9000 from 2001: A Space Odyssey.

The program was developed by an anonymous former MIT researcher working under the name 15. The developer has stated that the project was initially conceived as part of the university’s Undergraduate Research Opportunities Program.

Many of the voices available in 15.ai are trained on public datasets of characters from My Little Pony: Friendship is Magic. Avid fans of the show have formed a collaborative effort to collect, transcribe, and process hours of dialog with the goal of creating accurate text-to-speech generators of their favorite characters.

What can 15.ai do?

To use the 15.ai web application, the user selects one of the dozens of fictional characters that the model has been trained on and submits input text. After clicking on Generate, the user should receive three audio clips of the fictional character speaking the given lines.

Since the deep learning model used is nondeterministic, 15.ai outputs slightly different speech every time. Similar to how an actor may require multiple takes to get the right delivery, 15.ai generates a different delivery on each run, so the user can keep generating until they find an output they like.

The project includes a unique feature that allows users to manually alter the emotion of the generated line using emotional contextualizers. These contextualizers use MIT’s DeepMoji model to deduce the sentiment of emojis entered by the user.

According to the developer, what sets 15.ai apart from other similar TTS programs is that the model relies on very little data to accurately clone voices while “keeping emotions and naturalness intact”.

How Does 15.ai Work?

Let’s look into the technology behind 15.ai.

The main developer of 15.ai says that the program uses a custom model to generate voices with varying states of emotion. Since the author has yet to publish a detailed paper on the project, we can only make broad assumptions about what’s happening behind the scenes.

Retrieving the Phonemes

First, let’s look at how the program parses the input text. Before the program can generate speech, it must convert each individual word into its respective sequence of phonemes. For example, the word “dog” is composed of three phonemes: /d/, /ɒ/, and /ɡ/.

But how does 15.ai know which phonemes to use for each word?

According to 15.ai’s About page, the program uses a dictionary lookup table. The table uses the Oxford Dictionaries API, Wiktionary, and the CMU Pronouncing Dictionary as sources. 15.ai uses other websites such as Reddit and Urban Dictionary as sources for newly coined terms and phrases.

If a word does not exist in the dictionary, its pronunciation is deduced using phonological rules the model has learned from the LibriTTS dataset. This dataset is a corpus (a dataset of written or spoken words in a native language or dialect) of roughly 585 hours of people speaking English.
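To make the lookup-then-fallback idea concrete, here is a minimal sketch in Python. It is not 15.ai’s code: the tiny `PRONUNCIATIONS` table, the `LETTER_TO_PHONEME` rules, and the function names are all hypothetical. In particular, the one-letter-to-one-sound fallback is a crude stand-in for the learned phonological rules described above.

```python
# Tiny stand-in for the dictionary lookup table (hypothetical entries).
PRONUNCIATIONS = {
    "dog": ["d", "ɒ", "ɡ"],
    "cat": ["k", "æ", "t"],
}

# Crude one-letter-to-one-sound rules, used only when a word is missing.
# This is an assumption for illustration, not the learned model 15.ai describes.
LETTER_TO_PHONEME = {
    "b": "b", "d": "d", "g": "ɡ", "i": "ɪ", "k": "k", "o": "ɒ", "t": "t",
}


def guess_phonemes(word):
    """Fallback: map each known letter to a single phoneme, skipping the rest."""
    return [LETTER_TO_PHONEME[ch] for ch in word if ch in LETTER_TO_PHONEME]


def to_phonemes(text):
    """Convert text to a flat phoneme list, preferring the dictionary."""
    phonemes = []
    for raw in text.lower().split():
        word = raw.strip(".,!?\"'")  # drop surrounding punctuation
        if not word:
            continue
        if word in PRONUNCIATIONS:
            phonemes.extend(PRONUNCIATIONS[word])
        else:
            phonemes.extend(guess_phonemes(word))
    return phonemes


print(to_phonemes("Big dog!"))
```

Here “dog” is found in the table, while “big” is not and falls through to the guesser. A real system would replace the guesser with a trained grapheme-to-phoneme model.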

Embedding Emotions

According to the developer, the model tries to guess the perceived emotion of the input text. The model accomplishes this task through the DeepMoji sentiment analysis model. DeepMoji was trained on more than a billion tweets containing emojis, with the goal of understanding how language is used to express emotions. The output of DeepMoji is embedded into the TTS model to steer the generated speech towards the desired emotion.
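Since the developer has not published the details, we can only sketch how such conditioning might look. The example below is purely illustrative: the `EMOTIONS` labels, the keyword `CUES`, and both function names are hypothetical, and the keyword counting is a toy replacement for DeepMoji, which is a neural network. The idea it shows is simply that an emotion vector is attached to every phoneme feature vector before synthesis.

```python
EMOTIONS = ("joy", "sadness", "anger")

# Hypothetical keyword cues; DeepMoji itself is a neural network, not a word list.
CUES = {
    "joy": {"love", "great", "yay"},
    "sadness": {"sorry", "miss", "alone"},
    "anger": {"hate", "stop", "never"},
}


def emotion_vector(text):
    """Return one score per emotion, normalized to sum to 1."""
    words = [w.strip(".,!?") for w in text.lower().split()]
    counts = [sum(w in CUES[e] for w in words) for e in EMOTIONS]
    total = sum(counts)
    if total == 0:
        # No cue found: fall back to a neutral, uniform vector.
        return [1 / len(EMOTIONS)] * len(EMOTIONS)
    return [c / total for c in counts]


def condition(phoneme_vectors, emotion):
    """Append the same emotion vector to every phoneme feature vector."""
    return [vec + emotion for vec in phoneme_vectors]


emotion = emotion_vector("I love this, yay!")
print(condition([[0.1, 0.2], [0.3, 0.4]], emotion))
```

Concatenation is only one of several ways to inject a conditioning signal, and we do not know which one 15.ai uses.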

Once the phonemes and sentiment have been extracted from the input text, it is now time to synthesize speech.

Voice Cloning and Synthesis

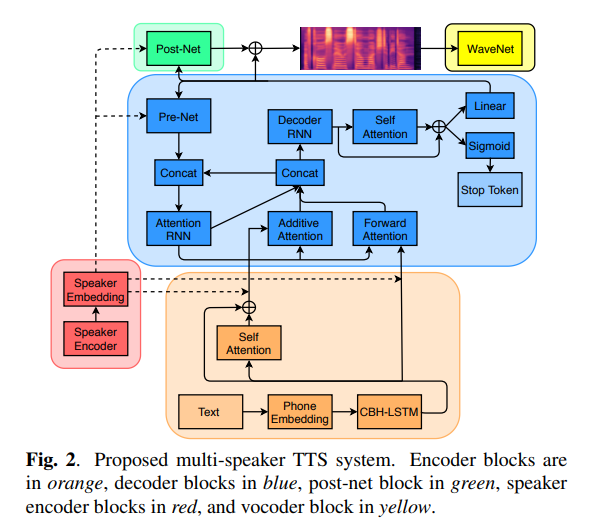

Text-to-speech models such as 15.ai are known as multi-speaker models. These models are built to learn how to speak in different voices. In order to properly train our model, we must find a way to extract each voice’s unique features and represent them in a way that a computer can understand. This process is known as speaker embedding.
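As a rough sketch of what “representing a voice as numbers” means, the example below averages per-frame audio features into one fixed-length vector and compares two such vectors with cosine similarity. This is an assumption for illustration only: real speaker encoders are trained neural networks, and the function names and toy feature values here are hypothetical.

```python
import math


def speaker_embedding(frames):
    """Average per-frame feature vectors into one fixed-length vector."""
    n = len(frames)
    return [sum(column) / n for column in zip(*frames)]


def cosine_similarity(a, b):
    """Similarity of two embeddings: 1.0 means same direction, 0.0 unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Toy feature frames for two recordings (made-up numbers).
voice_a = speaker_embedding([[1.0, 2.0], [3.0, 4.0]])
voice_b = speaker_embedding([[2.0, 3.0], [2.0, 3.0]])
print(voice_a, cosine_similarity(voice_a, voice_b))
```

Whatever the recording length, the embedding has the same size, which is what lets a single model be conditioned on many different speakers.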

Current text-to-speech models use neural networks to create the actual audio output. The neural network typically consists of two main parts: an encoder and a decoder.

The encoder tries to build a single summary vector based on various input vectors. Information about the phonemes, emotive aspects, and voice features is fed into the encoder to create a representation of what the output should be. The decoder then converts this representation into audio and outputs a confidence score.

The 15.ai web application then returns the three results with the best confidence scores.
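The final selection step can be sketched in a few lines. The `Take` class, its field names, and the sample scores below are hypothetical; the sketch only assumes that each generated clip comes with a numeric confidence score, as described above.

```python
import heapq
from dataclasses import dataclass


@dataclass
class Take:
    audio_id: str       # hypothetical identifier for a generated clip
    confidence: float   # score reported by the decoder


def best_takes(takes, k=3):
    """Return the k takes with the highest confidence, best first."""
    return heapq.nlargest(k, takes, key=lambda take: take.confidence)


candidates = [
    Take("take-1", 0.62),
    Take("take-2", 0.91),
    Take("take-3", 0.48),
    Take("take-4", 0.77),
]
for take in best_takes(candidates):
    print(take.audio_id, take.confidence)
```

Generating more candidates than are shown and keeping only the highest-scoring ones is a common way to smooth over the run-to-run variation of a nondeterministic model.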

Issues

With the rise of AI-generated content such as deepfakes, developing advanced AI that can mimic real people can be a serious ethical issue.

Currently, the voices you can choose from the 15.ai web application are all fictional characters. However, that did not stop the app from garnering some controversy online.

A few voice actors have pushed back on the use of voice cloning technology. Concerns from them include impersonation, the use of their voice in explicit content, and the possibility that the technology might render the role of the voice actor obsolete.

Another controversy occurred earlier in 2022 when a company called Voiceverse NFT was discovered to be using 15.ai to generate content for their marketing campaign.

Conclusion

Text-to-speech is already quite prevalent in daily life. Voice assistants, GPS navigators, and automated phone calls have already become commonplace. However, these applications still sound non-human enough that we can tell their speech is machine-made.

Natural-sounding and emotive TTS technology might open the door for new applications. However, the ethics of voice cloning are still questionable at best. It makes sense that researchers such as the developer of 15.ai have been reluctant to share their algorithms with the public.
