In recent years, deep-learning models have become more effective at understanding human language.

Think of projects like GPT-3, which can now write entire articles and even websites. GitHub has recently introduced GitHub Copilot, a service that suggests entire code snippets from a plain-language description of the code you need.

Researchers at OpenAI, Facebook, and Google have been working on ways to use deep learning for another task: captioning images. By training on large datasets with millions of entries, they’ve achieved some surprising results.

Lately, these researchers have tried to perform the opposite task: creating images from a caption. Is it now possible to create a completely new image out of a description?

This guide will explore two of the most advanced text-to-image models: OpenAI’s DALL-E 2 and Google’s Imagen AI. Each of these projects has introduced groundbreaking methods that may change society as we know it.

But first, let’s understand what we mean by text-to-image generation.

What is text-to-image generation?

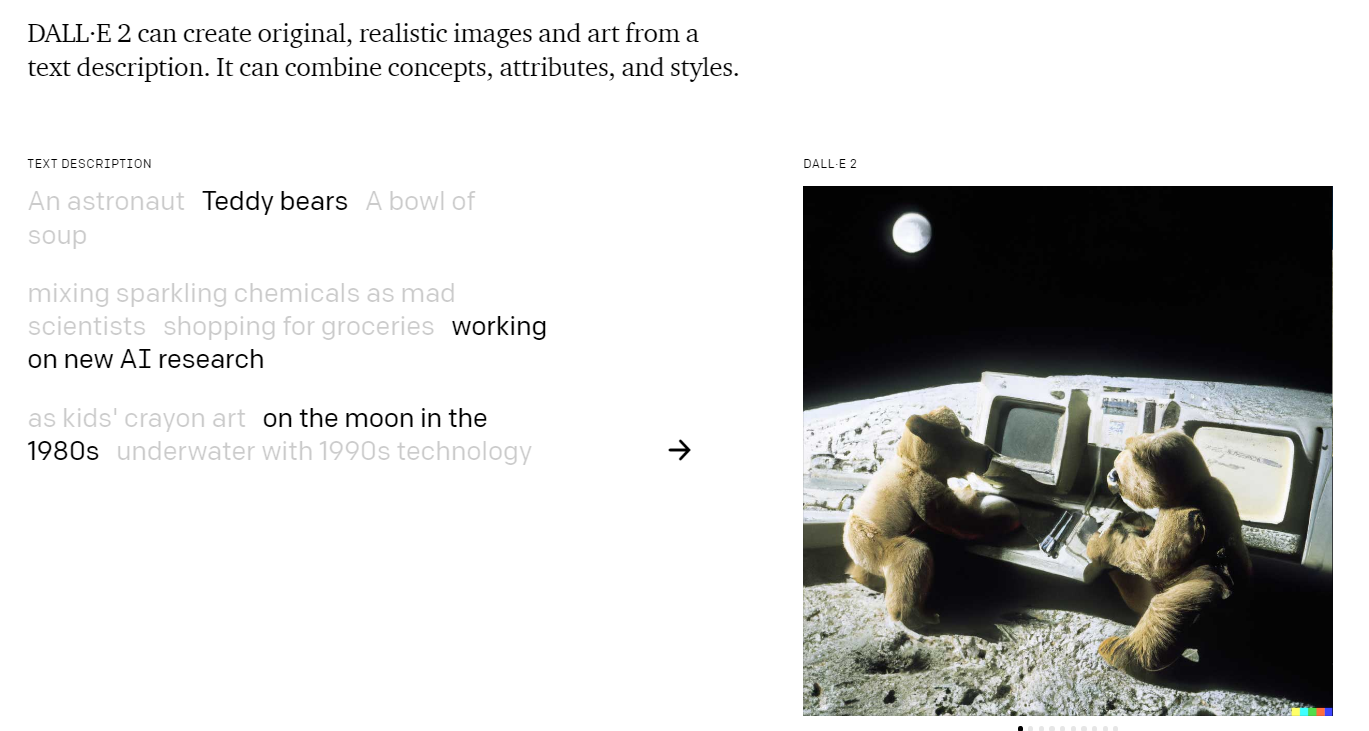

Text-to-image models allow computers to create new and unique images based on prompts. People can now provide a text description of an image they want to produce, and the model will try to create a visual that matches that description as closely as possible.

Machine learning models rely on large datasets of image-caption pairs to learn how text relates to images, and larger datasets have further improved their performance.

Most text-to-image models use a transformer language model to interpret prompts. This type of model is a neural network that tries to learn the context and semantic meaning of natural language.
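At the heart of a transformer is the attention operation, which lets each token’s representation draw on every other token in the prompt. The sketch below is a minimal, pure-Python version of scaled dot-product attention. It assumes tiny hand-made vectors in place of learned embeddings; real transformer models add learned projections, multiple attention heads, and many stacked layers.

```python
import math

def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over plain lists of vectors.

    Each output row is a weighted average of the value vectors, where the
    weights reflect how strongly the query matches each key.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Mix the value vectors according to the attention weights.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs

# Self-attention over three hypothetical 2-dimensional token embeddings.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
contextualized = attention(tokens, tokens, tokens)
```

Using the same vectors as queries, keys, and values is what makes this self-attention: each token’s output becomes a blend of the whole prompt, which is how context enters the representation.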

Next, generative models such as diffusion models and generative adversarial networks are used for image synthesis.
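Diffusion models are trained by gradually adding Gaussian noise to images and learning to undo it. Below is a minimal sketch of the forward (noising) process, assuming the standard DDPM closed form x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε with a linear noise schedule. The schedule values, the helper names, and the four-pixel “image” are illustrative assumptions, not details taken from DALL-E 2 or Imagen.

```python
import math
import random

def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    # Linearly increasing per-step noise variances (a common DDPM schedule).
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def alpha_bars(betas):
    # Cumulative product of (1 - beta_t): how much original signal survives at step t.
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out

def noisy_sample(x0, t, abar, rng):
    """Jump straight to step t: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * noise."""
    signal = math.sqrt(abar[t])
    noise_scale = math.sqrt(1.0 - abar[t])
    return [signal * x + noise_scale * rng.gauss(0.0, 1.0) for x in x0]

rng = random.Random(0)
abar = alpha_bars(linear_betas(1000))
image = [0.5, -0.2, 0.9, 0.0]  # hypothetical 4-"pixel" image scaled to [-1, 1]
slightly_noisy = noisy_sample(image, 10, abar, rng)
almost_pure_noise = noisy_sample(image, 999, abar, rng)
```

A network is then trained to predict the added noise from x_t and t. Generating an image runs the process in reverse: start from pure noise and repeatedly remove the predicted noise.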

What is DALL-E 2?

DALL-E 2 is an AI model from OpenAI, announced in April 2022. The model was trained on millions of captioned images to learn how words and phrases relate to images.

Users can type a simple phrase, such as “a cat eating lasagna”, and DALL-E 2 will generate its own interpretation of what the phrase is trying to describe.

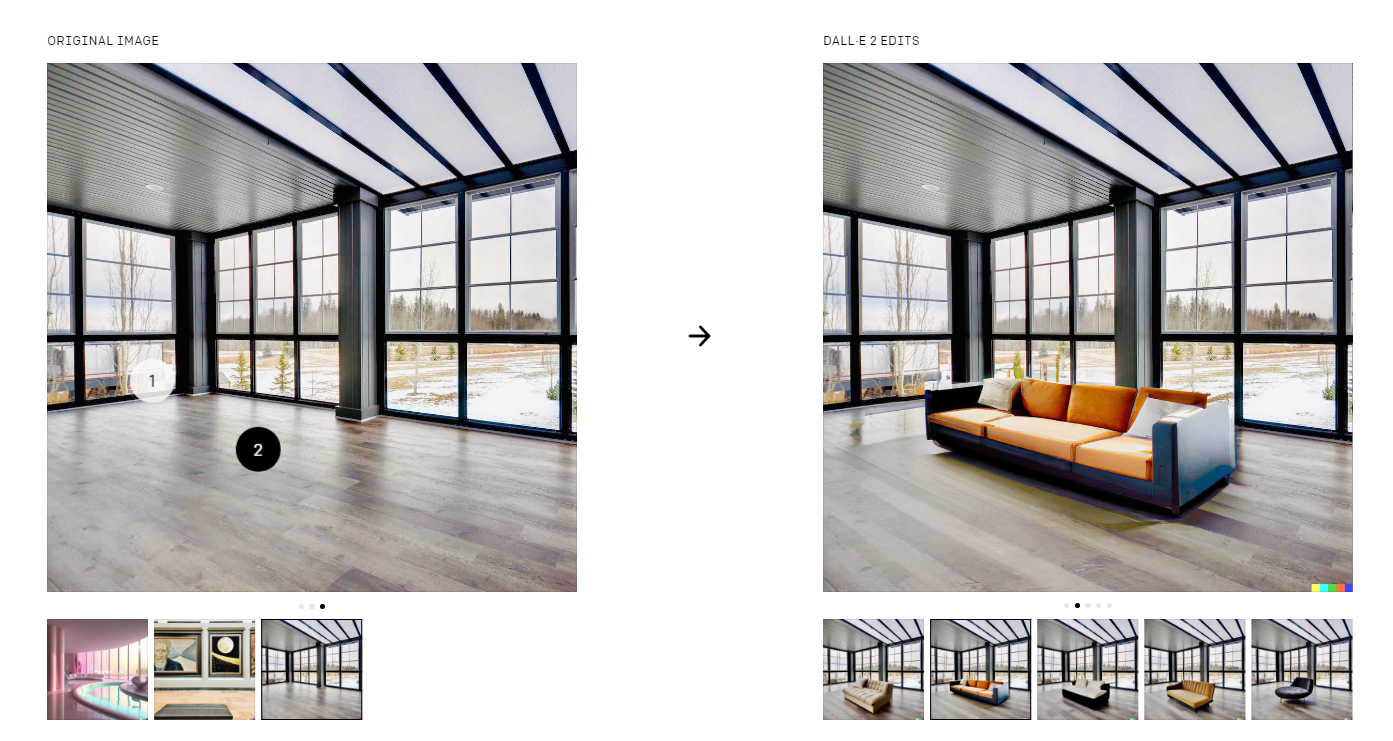

Besides creating images from scratch, DALL-E 2 can also edit existing images. For example, given a photo of a room, DALL-E 2 can generate a modified version with an added couch.

DALL-E 2 is just one of several generative models OpenAI has released in the past few years. OpenAI’s GPT-3 made headlines for its ability to generate text in a wide range of styles.

Currently, DALL-E 2 is still in beta testing. Interested users can join OpenAI’s waitlist for access.

How Does it Work?

While the results of DALL-E 2 are impressive, you might be wondering how it all works.

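DALL-E 2 builds on earlier OpenAI work. The original DALL-E was a multimodal version of OpenAI’s GPT-3, while DALL-E 2 combines OpenAI’s CLIP model with a diffusion-based image generator.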

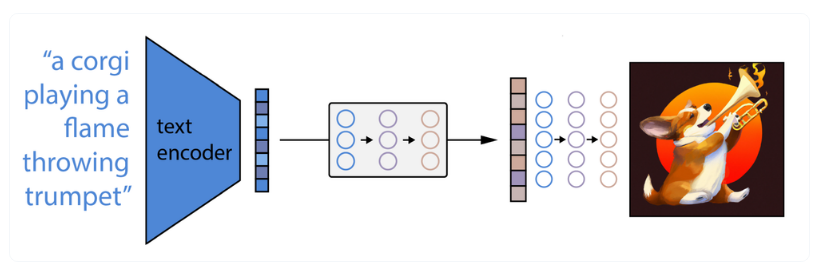

First, the user’s text prompt is passed to a text encoder that maps it into a representation space. DALL-E 2 uses another OpenAI model, CLIP (Contrastive Language-Image Pre-training), to obtain semantic information from natural language.
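CLIP learns a shared embedding space in which a caption and its matching image end up close together, measured by cosine similarity. The sketch below assumes hypothetical, hand-picked 3-dimensional embeddings (real CLIP embeddings come from trained text and image encoders and have hundreds of dimensions). It shows how similarity scores over a batch become softmax probabilities, the quantity a contrastive objective trains on. The temperature of 0.07 is an assumption borrowed from CLIP’s reported initial value.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def caption_to_image_probs(text_emb, image_embs, temperature=0.07):
    """Softmax over temperature-scaled cosine similarities between one caption and a batch of images."""
    logits = [cosine_similarity(text_emb, img) / temperature for img in image_embs]
    m = max(logits)
    exps = [math.exp(logit - m) for logit in logits]
    total = sum(exps)
    return [e / total for e in exps]

def contrastive_loss(text_emb, image_embs, match_index, temperature=0.07):
    # Cross-entropy: reward putting probability mass on the image that matches the caption.
    probs = caption_to_image_probs(text_emb, image_embs, temperature)
    return -math.log(probs[match_index])

# Hypothetical embeddings: image 0 is the one that matches the caption.
caption = [0.9, 0.1, 0.0]
images = [[0.8, 0.2, 0.1], [0.0, 1.0, 0.0], [0.1, 0.0, 1.0]]
probs = caption_to_image_probs(caption, images)
loss = contrastive_loss(caption, images, match_index=0)
```

This shows only the caption-to-image direction. CLIP’s training objective is symmetric, also scoring each image against every caption in the batch.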

Next, a model known as the prior maps the text encoding to a corresponding image encoding, which should capture the semantic information contained in the text encoding.

To create the actual image, DALL-E 2 uses an image decoder that generates a picture conditioned on the image encoding. OpenAI uses a modified version of its GLIDE model for this step; GLIDE relies on a diffusion model to create images.

Adding GLIDE to DALL-E 2 made its output more photorealistic. Because GLIDE’s sampling process is stochastic (random), DALL-E 2 can easily create variations of an image simply by running the decoder again.
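The three stages (text encoder, prior, decoder) can be pictured as a simple pipeline. In this sketch every stage is a hypothetical stand-in: `encode_text`, `prior`, and `decode` are not OpenAI APIs, and the arithmetic inside them is arbitrary. It illustrates only the data flow and the role of randomness. Because the decoder samples noise, re-running it with a different seed yields a different variation of the same prompt, while the same seed reproduces the same output.

```python
import random

def encode_text(prompt):
    # Hypothetical stand-in for CLIP's text encoder: turns a prompt into a vector.
    # A prompt-seeded RNG makes the same prompt always give the same embedding.
    rng = random.Random(prompt)
    return [rng.uniform(-1.0, 1.0) for _ in range(4)]

def prior(text_embedding):
    # Hypothetical stand-in for the prior: maps a text embedding to an image embedding.
    return [0.5 * x for x in text_embedding]

def decode(image_embedding, seed):
    # Hypothetical stand-in for the diffusion decoder: it draws random noise,
    # so its output depends on the seed as well as on the image embedding.
    rng = random.Random(seed)
    return [e + rng.gauss(0.0, 0.1) for e in image_embedding]

def generate(prompt, seed):
    return decode(prior(encode_text(prompt)), seed)

first = generate("a cat eating lasagna", seed=1)
again = generate("a cat eating lasagna", seed=1)
variation = generate("a cat eating lasagna", seed=2)
```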

Limitations

Despite the impressive results of the DALL-E 2 model, it still faces some limitations.

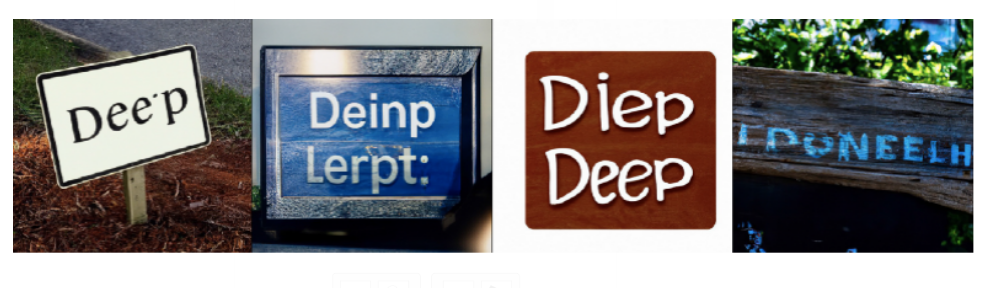

Spelling Text

Prompts that ask DALL-E 2 to generate text reveal that it has difficulty spelling words. Experts suspect this is because the model’s internal representation of a prompt does not precisely capture how its words are spelled.

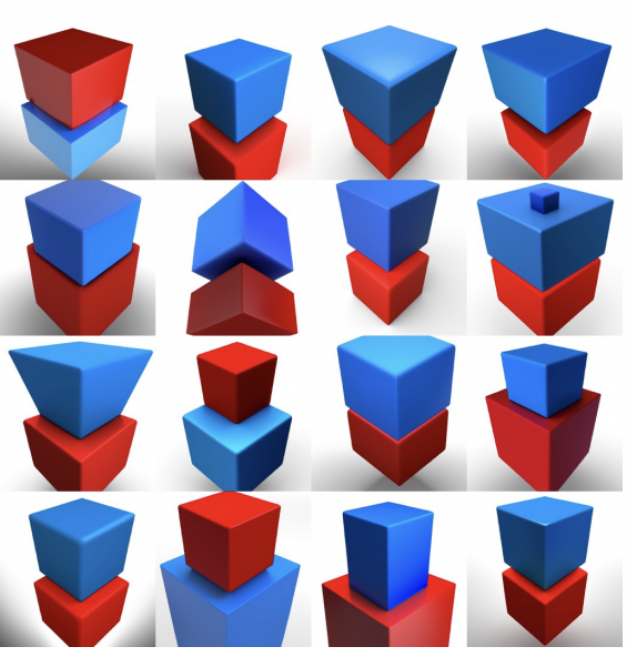

Compositional Reasoning

Researchers observe that DALL-E 2 still has some difficulty with compositional reasoning. Simply put, the model can render the individual elements of an image but has trouble with the relationships between them.

For example, given the prompt “red cube on top of a blue cube”, DALL-E 2 may render a red cube and a blue cube accurately but place them incorrectly. The model also has difficulty with prompts that require a specific number of objects to be drawn.

Bias in the dataset

When the prompt contains no other details, DALL-E 2 has been observed to depict white or Western people and environments by default. This representational bias likely stems from the abundance of Western-centric images in the training dataset.

The model has also been observed to follow gender stereotypes. For example, typing in the prompt “flight attendant” mostly generates images of women flight attendants.

What is Google Imagen AI?

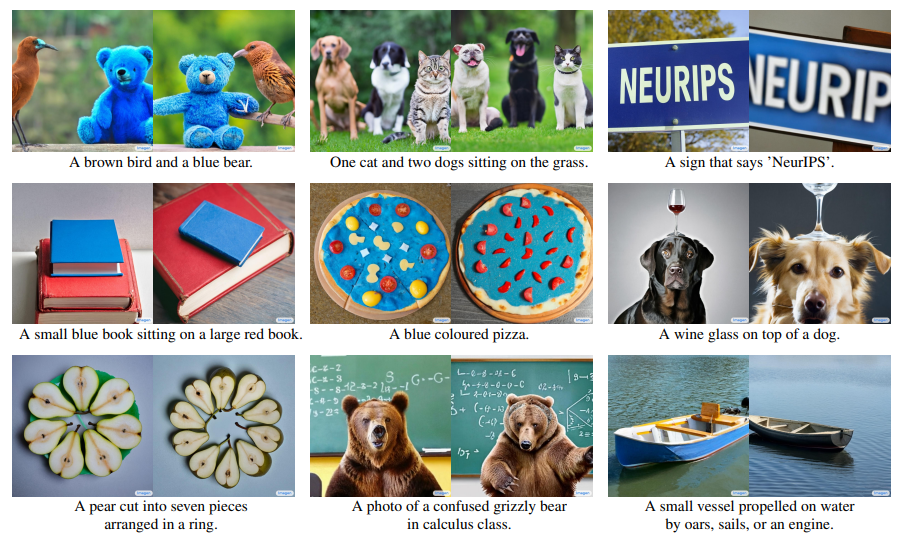

Google’s Imagen AI is a model that aims to create photorealistic images from input text. Like DALL-E 2, it uses a transformer language model to understand the text and relies on diffusion models to create high-quality images.

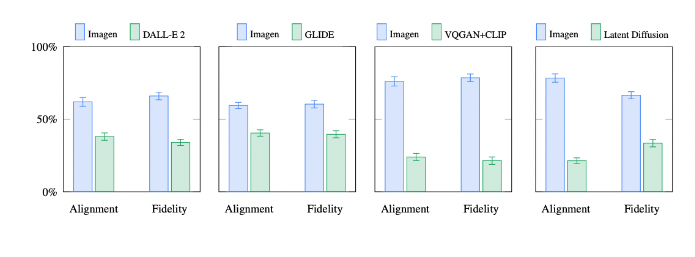

Alongside Imagen, Google also released DrawBench, a benchmark for text-to-image models. In DrawBench evaluations, human raters preferred Imagen’s output over that of other models, including DALL-E 2.

How Does it Work?

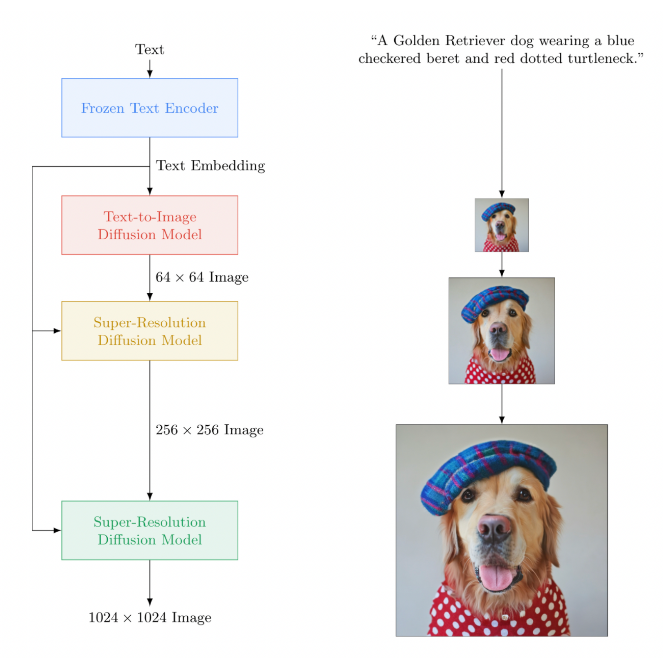

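Like DALL-E 2, Imagen first converts the user prompt into a text embedding. It does this with a frozen text encoder, meaning a pretrained language model (the encoder of Google’s T5-XXL) whose weights are not updated while Imagen is trained.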

Imagen uses a diffusion model, which learns to turn a pattern of random noise into an image. Its first diffusion model produces a low-resolution 64×64 image, which super-resolution diffusion models then upscale to a high-resolution 1024×1024 image, passing through an intermediate 256×256 stage.
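Imagen’s cascade can be sketched as a chain of stages that each increase resolution. In the paper, a base model produces 64×64 images and two super-resolution diffusion models upscale them to 256×256 and then 1024×1024. The sketch below keeps only that structure. `base_model` and `super_resolve` are hypothetical stand-ins, and plain nearest-neighbor upsampling replaces the real super-resolution diffusion models, which also synthesize new detail rather than just enlarging pixels.

```python
import random

def base_model(size, seed):
    # Hypothetical stand-in for the base diffusion model: a size x size grid
    # of random grayscale values in [-1, 1].
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(size)] for _ in range(size)]

def super_resolve(image, factor):
    # Stand-in for a super-resolution diffusion model: repeat each pixel
    # `factor` times horizontally and each row `factor` times vertically.
    return [
        [pixel for pixel in row for _ in range(factor)]
        for row in image
        for _ in range(factor)
    ]

def cascade(seed=0):
    image = base_model(64, seed)     # 64 x 64
    image = super_resolve(image, 4)  # 256 x 256
    image = super_resolve(image, 4)  # 1024 x 1024
    return image

final = cascade()
```

Splitting generation this way lets the base model focus on composition at low cost, while later stages only have to add resolution.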

Based on the Imagen team’s research, large frozen language models trained only on text data are still highly effective text encoders for text-to-image generation.

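The study also introduces dynamic thresholding, a sampling technique that lets Imagen use large guidance weights (which make images follow the prompt more closely) without the oversaturated, unnatural-looking results that such weights otherwise produce. The result is more photorealistic images that better match the text.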
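Guidance and thresholding fit together as follows. Classifier-free guidance combines a text-conditioned and an unconditioned noise prediction, and a guidance weight w > 1 amplifies the text’s influence. Large weights can push the predicted image’s pixel values outside the valid [-1, 1] range. Dynamic thresholding, as described in the Imagen paper, sets s to a high percentile of the absolute pixel values of the predicted image and, if s > 1, clips the image to [-s, s] and divides by s. Below is a minimal pure-Python sketch on flat lists of pixel values. The function names, the nearest-rank percentile, and the 99.5 default are illustrative assumptions.

```python
import math

def guided_noise(eps_cond, eps_uncond, guidance_weight):
    """Classifier-free guidance: move the prediction further toward the text-conditioned one."""
    return [u + guidance_weight * (c - u) for c, u in zip(eps_cond, eps_uncond)]

def percentile(values, p):
    # Simple nearest-rank percentile (p in [0, 100]); numerical libraries
    # usually interpolate, which gives slightly different values.
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]

def dynamic_threshold(x0_pred, p=99.5):
    """Dynamic thresholding of a predicted image whose pixels should lie in [-1, 1]."""
    # s is a high percentile of the absolute pixel values, but never below 1.
    s = max(percentile([abs(v) for v in x0_pred], p), 1.0)
    # Clip to [-s, s], then divide by s so every value lands back in [-1, 1].
    return [min(max(v, -s), s) / s for v in x0_pred]

# A hypothetical prediction that high guidance has pushed out of range.
rescaled = dynamic_threshold([0.2, -3.0, 1.5, 0.9])
```

Static clipping would cut every out-of-range value to exactly ±1, flattening those pixels. Dividing by s instead rescales the whole image, which the Imagen paper credits with avoiding saturated pixels at high guidance weights.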

Performance of DALL-E 2 vs. Imagen

Preliminary results from Google’s benchmark show that human raters prefer images generated by Imagen over those from DALL-E 2 and other text-to-image models such as Latent Diffusion and VQGAN+CLIP.

Samples published by the Imagen team also suggest that their model is better at spelling text, a known weakness of DALL-E 2.

However, since Google has not yet released the model to the public, it remains to be seen how well these benchmark results hold up.

Conclusion

The rise of photorealistic text-to-image models is controversial because these models are ripe for unethical use.

The technology could be used to create explicit content or serve as a tool for disinformation. Researchers at both Google and OpenAI are aware of this, which is partly why these technologies are not yet accessible to everyone.

Text-to-image models also have significant economic implications. Will professions such as modeling, photography, and art be affected if tools like DALL-E 2 become mainstream?

At the moment, these models still have limitations: any AI-generated image, examined closely, reveals imperfections. With OpenAI and Google competing to build the most effective models, it may only be a matter of time before a truly perfect output is generated: an image indistinguishable from the real thing.

What do you think will happen when technology goes that far?
