
We spend a lot of time communicating with people online through chat, email, websites, and social media.

We produce enormous volumes of text data every second, and most of it goes unexamined, though not all of it.

Customers’ actions and reviews provide organizations with priceless information about what customers value and disapprove of in goods and services, as well as what they want from a brand.

The majority of businesses, however, are still having difficulty determining the most effective method for data analysis.

Since much of the data is unstructured, computers have a difficult time understanding it, and manually sorting it would be extremely time-consuming.

Processing a lot of data by hand becomes laborious, monotonous, and simply unscalable as a firm expands.

Thankfully, Natural Language Processing can help you find useful information in unstructured text and solve a range of text analysis problems, including sentiment analysis, topic classification, and more.

Natural language processing (NLP) is a field of artificial intelligence that draws on linguistics and computer science to make human language understandable to machines.

NLP enables computers to automatically evaluate enormous amounts of data, making it possible for you to quickly identify relevant information.

A range of tools can process unstructured text (or other kinds of natural language) to uncover useful information and address these problems.

Although by no means comprehensive, the list of open-source tools presented below is a wonderful place to start for anybody or any organization interested in using natural language processing in their projects.

1. NLTK

The Natural Language Toolkit (NLTK) is arguably the most feature-rich tool I have looked at.

It implements almost all common NLP techniques, including classification, tokenization, stemming, tagging, parsing, and semantic reasoning.

You can select the precise algorithm or approach you want to utilize because there are frequently several implementations available for each.

Numerous languages are supported as well. However, NLTK represents all data as strings, which works for simple structures but makes some of the more sophisticated capabilities harder to use.

The library is also somewhat slow compared to other tools.

All things considered, this is an excellent toolset for experimentation, exploration, and applications that require a certain mix of algorithms.
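As a rough illustration of picking between several implementations of the same technique, the sketch below runs two of NLTK's stemmers over the same tokens. It assumes NLTK is installed; the sample sentence is made up, and the regex-based `wordpunct_tokenize` is used only because it needs no downloaded NLTK data.

```python
from nltk.stem import LancasterStemmer, PorterStemmer
from nltk.tokenize import wordpunct_tokenize

# Made-up sample text, for illustration only.
text = "The reviewers were praising the batteries but criticizing the pricing."

# wordpunct_tokenize splits on a regular expression, so no corpus download is required.
tokens = wordpunct_tokenize(text)

# Two interchangeable stemmer implementations shipped with NLTK.
porter = PorterStemmer()
lancaster = LancasterStemmer()

for token in tokens:
    if token.isalpha():  # skip punctuation tokens
        print(f"{token:12} porter={porter.stem(token):12} lancaster={lancaster.stem(token)}")
```

The two stemmers will not always agree on a stem, which is the kind of trade-off NLTK leaves up to you.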

Pros

- It is the most popular and complete NLP library, with several third-party extensions.

- It supports more languages than most other libraries.

Cons

- It is tough to learn and use.

- It is slow.

- It has no neural network models.

- It only splits text into sentences and does not analyze their semantic structure.

2. SpaCy

SpaCy is probably NLTK’s main competitor. It is generally faster, although it offers only one implementation of each NLP component.

Additionally, everything is represented as an object rather than a string, which simplifies the interface for building applications.

This richer representation gives you a deeper understanding of your text data and lets you do more with it.

It also makes it easier to integrate SpaCy with other frameworks and data science tools. Compared to NLTK, however, SpaCy doesn’t support as many languages.

It does offer several neural models for different aspects of language processing and analysis, along with a straightforward interface that has a condensed range of options.

In addition, SpaCy has been built to handle large volumes of data and is very thoroughly documented.

It also ships with many pretrained models, which makes natural language processing easier to learn, teach, and use.

Overall, this is an excellent tool for new applications that need to perform well in production and don’t require a specific algorithm.
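To give a feel for the object-based interface, here is a minimal sketch, assuming SpaCy is installed. It uses a blank English pipeline (tokenizer only) so that no trained model has to be downloaded; the sentence is made up for illustration.

```python
import spacy

# A blank pipeline contains only the tokenizer, so no model download is needed.
nlp = spacy.blank("en")

# Calling the pipeline returns a Doc object, not a list of strings.
doc = nlp("The battery lasts 10 hours.")

for token in doc:
    # Each Token object carries attributes alongside its text.
    print(token.i, token.text, token.is_alpha, token.like_num, token.is_punct)

# Slicing a Doc yields a Span object that still points into the original text.
span = doc[1:3]
print(span.text)
```

Tagging, parsing, and entity recognition would additionally require one of the pretrained pipelines, which this sketch deliberately leaves out.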

Pros

- It is fast compared to other libraries.

- It is simple to learn and use.

- Its models are trained using neural networks.

Cons

- It is less flexible than NLTK.

3. Gensim

Gensim is a specialized open-source Python framework for representing documents as semantic vectors as efficiently and easily as possible.

Its authors designed it to process raw, unstructured plain text using a range of machine learning methods, which makes it a good choice for tasks like topic modeling.

Additionally, Gensim is quite good at finding texts that are similar to one another, indexing texts, and navigating across documents.

It is a highly specialized Python library that focuses on topic modeling with Latent Dirichlet Allocation (LDA) and other methods.

This tool handles massive amounts of data efficiently and quickly.

Pros

- It has a simple interface.

- It provides efficient implementations of well-known algorithms.

- It can run latent Dirichlet allocation and latent semantic analysis on a cluster of computers.

Cons

- It’s mostly intended for unsupervised text modeling.

- It lacks a complete NLP pipeline and should be used in conjunction with other libraries like SpaCy or NLTK.

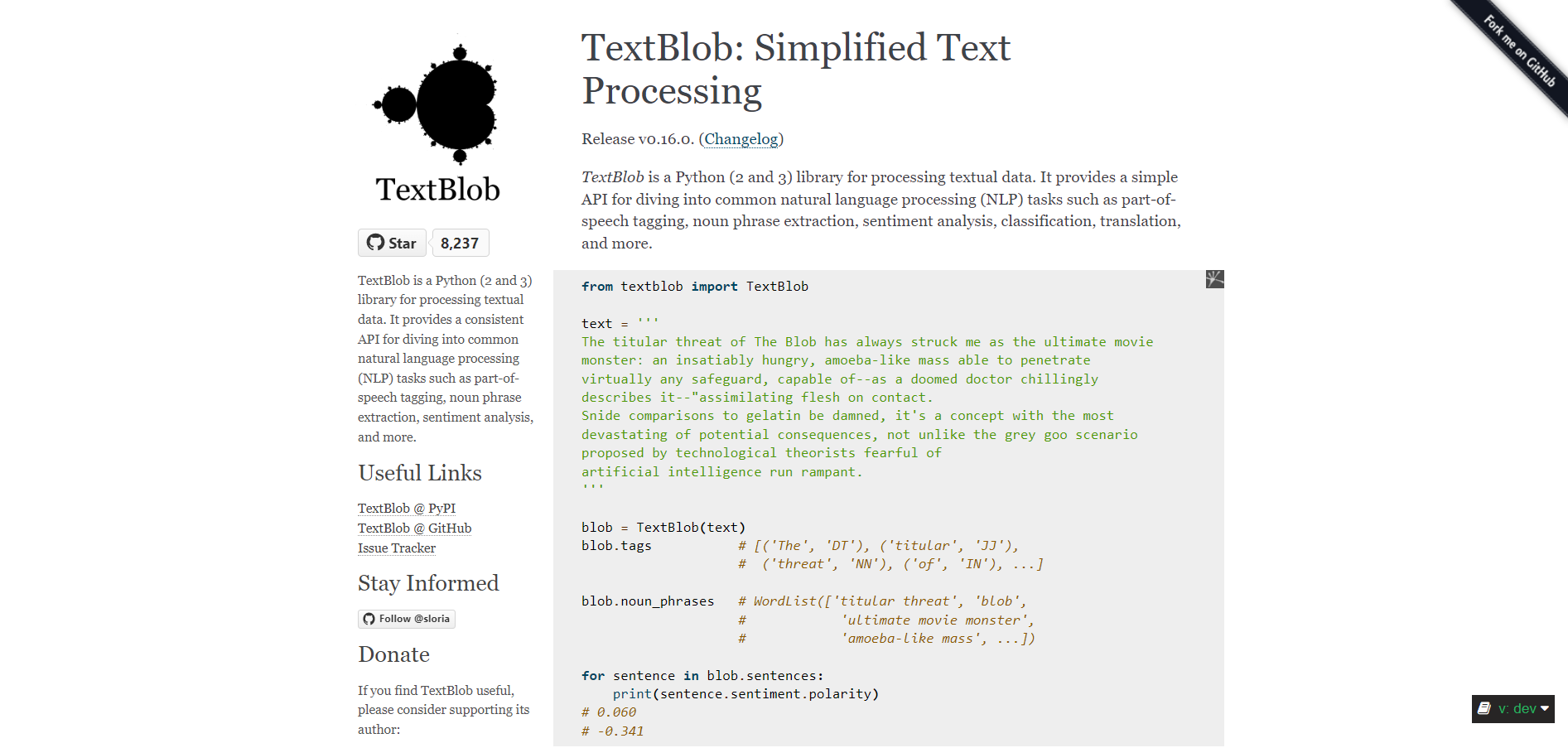

4. TextBlob

TextBlob is a sort of NLTK extension.

Through TextBlob, you can access numerous NLTK functions more easily, and TextBlob also incorporates Pattern library capabilities.

This could be a useful tool to use while learning if you’re just getting started, and it can be used in production for applications that don’t require a lot of performance.

It offers a far more user-friendly and straightforward interface for carrying out the same NLP functions.

It’s a great option for novices who wish to take on NLP tasks like sentiment analysis, text classification, and part-of-speech tagging, because its learning curve is gentler than that of other open-source tools.

TextBlob is widely used and excellent for smaller projects overall.

Pros

- The library’s user interface is simple and clear.

- Older versions offer language identification and translation through Google Translate, although these features have been deprecated and removed in recent releases.

Cons

- It is slow compared to other libraries.

- It has no neural network models.

- It has no integrated word vectors.

5. OpenNLP

Because OpenNLP is hosted by the Apache Software Foundation, it is simple to integrate with other Apache projects like Apache Flink, Apache NiFi, and Apache Spark.

It is a comprehensive NLP tool that can be used from the command line or as a library in an application.

It includes all of the common NLP processing components.

Additionally, it offers extensive language support. If you’re using Java, OpenNLP is a strong tool with a ton of capabilities, and it is ready for production workloads.

In addition to enabling the most typical NLP tasks, such as tokenization, sentence segmentation, and part-of-speech tagging, OpenNLP can be used to create more complex text processing applications.

It also includes maximum entropy and perceptron-based machine learning.

Pros

- It includes a feature-rich model training tool.

- It focuses on basic NLP tasks, including entity identification, phrase detection, and tokenization, and excels at them.

Cons

- It lacks sophisticated capabilities; if you want to stay on the JVM, moving to CoreNLP is the natural next step.

6. AllenNLP

Built on PyTorch tools and resources, AllenNLP is well suited to data analysis and commercial applications.

It serves as an all-encompassing tool for text analysis.

This makes it one of the more sophisticated natural language processing tools on this list. AllenNLP uses the free, open-source SpaCy package to preprocess data and handles the remaining tasks itself.

AllenNLP’s key selling point is how easy it is to use.

In contrast to other NLP programs that include several modules, AllenNLP streamlines the natural language processing workflow.

As a consequence, its output is easy to interpret. It is a fantastic tool for beginners.

Pros

- It is developed on top of PyTorch.

- It is excellent for exploring and experimenting with cutting-edge models.

- It can be used both commercially and academically.

Cons

- It is not appropriate for large-scale projects that are currently in production.

Conclusion

Companies are using NLP techniques to extract insights from unstructured text data such as emails, online reviews, social media postings, and more. Open-source tools are cost-free, adaptable, and give developers complete customization options.

What are you waiting for? Use them right away and create something incredible.

Happy Coding!
