
When electronic devices like cellphones, smartwatches, and other wearable technology are replaced by newer models, a sizable amount of electronic waste is produced each year.

Imagine a more sustainable future in which smartphones, smartwatches, and other wearable technology aren’t constantly replaced with newer models or left on the shelf.

Instead, they could be upgraded with the newest sensors and processors that simply snap into a device’s internal chip, like LEGO bricks added to an existing structure. Such reconfigurable chips could keep devices current while reducing our digital waste, saving both money and materials.

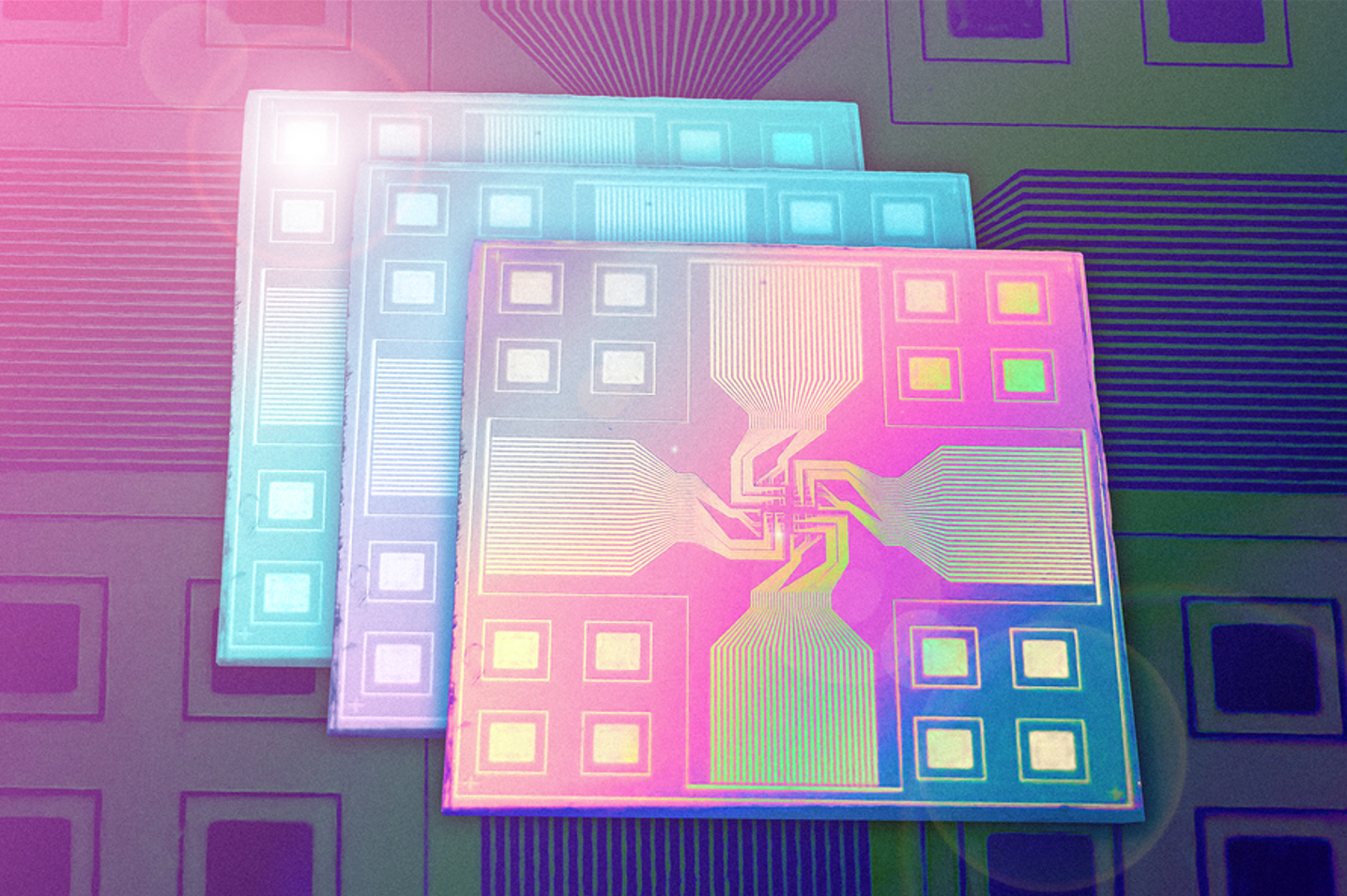

MIT engineers have now taken a step toward that modular vision with a LEGO-like design for a stackable, reconfigurable artificial intelligence chip.

This post will take a thorough look at this chip, its configurations, and its future implications.

So, what is a LEGO-like Artificial Intelligence chip?

Artificial intelligence is widely seen as one of the next major technologies to transform the world. With the goal of modular, sustainable electronics, MIT engineers have now created an AI chip that works like a set of LEGO bricks.

It is a reconfigurable chip built from multiple layers that can be stacked on top of one another or swapped out, making it simpler to add new sensors or upgrade older processors.

Because its layers can be combined in different ways, this reconfigurable AI chip can be expanded with new capabilities over time. As a result, such chips could cut down on electronic waste while keeping our devices current.

Now, let’s explore the design of this chip.

Chip design

What makes the architecture stand out is that it alternates layers of sensing and processing components with light-emitting diodes (LEDs), which let the chip’s layers communicate optically.

In other modular chip architectures, signals are relayed between layers through conventional wiring. Such intricate connections are difficult, if not impossible, to sever and rewire, so those stacked designs cannot be reconfigured. The MIT design instead uses light, rather than physical wires, to carry data through the chip.

As a result, the chip can be reconfigured: layers can be added, removed, or swapped out, for example to include new sensors or updated processors. In the current design, image sensors are paired with artificial synapse arrays, each trained to recognize one particular letter, in this case M, I, or T.

Rather than relaying sensor data to the processor through physical wires, the team built an optical system between each sensor and its artificial synapse array, so the layers can communicate without any physical connection.
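To illustrate why a contactless link between layers makes the stack reconfigurable, here is a minimal Python sketch. It models each layer as an independent stage that only receives data through a hypothetical `optical_link` function, so any layer can be added, removed, or swapped without touching the others. The names `Layer`, `ChipStack`, and `optical_link`, and the clipping behavior of the link, are illustrative assumptions rather than details of MIT’s actual design.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# A signal is just a list of intensities (e.g., one value per pixel).
Signal = List[float]


def optical_link(signal: Signal) -> Signal:
    """Hypothetical stand-in for the LED-to-photodetector hop between layers.

    Assumption: light intensity can't be negative or exceed full brightness,
    so values are clipped to [0, 1]. No wires and no shared state are involved.
    """
    return [min(max(x, 0.0), 1.0) for x in signal]


@dataclass
class Layer:
    name: str
    transform: Callable[[Signal], Signal]


@dataclass
class ChipStack:
    layers: List[Layer] = field(default_factory=list)

    def add(self, layer: Layer) -> None:
        """Stack a new layer on top (e.g., an extra sensor or processor)."""
        self.layers.append(layer)

    def remove(self, name: str) -> None:
        """Pull a layer out of the stack by name."""
        self.layers = [layer for layer in self.layers if layer.name != name]

    def swap(self, name: str, new_layer: Layer) -> None:
        """Replace one layer in place, leaving every other layer untouched."""
        for i, layer in enumerate(self.layers):
            if layer.name == name:
                self.layers[i] = new_layer
                return
        raise KeyError(f"no layer named {name!r}")

    def run(self, signal: Signal) -> Signal:
        """Pass a signal up the stack; layers talk only through the optical link."""
        for i, layer in enumerate(self.layers):
            if i > 0:
                signal = optical_link(signal)  # hop to the next layer by light
            signal = layer.transform(signal)
        return signal


if __name__ == "__main__":
    chip = ChipStack()
    chip.add(Layer("sensor", lambda px: [p * 1.2 for p in px]))  # hypothetical gain
    chip.add(Layer("processor", lambda s: [1.0 if v > 0.5 else 0.0 for v in s]))
    print(chip.run([0.2, 0.6, 0.9]))  # [0.0, 1.0, 1.0]

    # Upgrade: swap in a stricter processor without rewiring the sensor layer.
    chip.swap("processor", Layer("processor-v2", lambda s: [1.0 if v > 0.8 else 0.0 for v in s]))
    print(chip.run([0.2, 0.6, 0.9]))  # [0.0, 0.0, 1.0]
```

Because no layer holds a reference to its neighbors, upgrading the processing stage is a single `swap` call, which loosely mirrors swapping in an upgraded processor layer on the physical chip.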


The researchers hope the design will advance edge computing devices, such as self-sufficient sensors and other electronics that work independently of central or distributed resources like supercomputers or cloud-based computing.

Chip configurations

The researchers fabricated a single chip whose computing core measures about 4 square millimeters, roughly the size of a piece of confetti.

The chip stacks three image recognition “blocks,” each consisting of an image sensor, an optical communication layer, and an artificial synapse array that classifies one of three letters: M, I, or T. The researchers then projected pixelated images of random letters onto the chip and measured the electrical current that each neural network array produced in response.

The larger the current, the more likely it is that the image is the letter that particular array was trained to recognize.
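The readout step can be pictured with a toy model. Assuming each artificial synapse array behaves like a simple resistive crossbar, each synapse acts as a conductance and the array’s output current is the sum of conductance times input voltage, so the array whose high-conductance synapses line up with the lit pixels produces the largest current. The Python sketch below hard-codes one “trained” template per letter and picks the array with the largest current; the letter shapes, conductance and voltage values, and function names are all hypothetical.

```python
from typing import Dict, List

# Toy 5x5 letters ("X" = lit pixel). These shapes are illustrative
# assumptions, not the images used in the MIT experiment.
LETTERS: Dict[str, List[str]] = {
    "M": ["X...X",
          "XX.XX",
          "X.X.X",
          "X...X",
          "X...X"],
    "I": [".XXX.",
          "..X..",
          "..X..",
          "..X..",
          ".XXX."],
    "T": ["XXXXX",
          "..X..",
          "..X..",
          "..X..",
          "..X.."],
}

G_ON = 10.0   # hypothetical high ("trained") synapse conductance, microsiemens
G_OFF = 0.1   # hypothetical low conductance, microsiemens
V_READ = 1.0  # hypothetical voltage produced by a lit sensor pixel, volts


def flatten(pattern: List[str]) -> List[int]:
    """Turn a 5x5 pattern into a flat list of 0/1 pixel values."""
    return [1 if ch == "X" else 0 for row in pattern for ch in row]


def synapse_array(letter: str) -> List[float]:
    """Conductances of one array 'trained' on a single letter (a fixed template)."""
    return [G_ON if px else G_OFF for px in flatten(LETTERS[letter])]


def array_current(image: List[int], conductances: List[float]) -> float:
    """Output current in microamps: Ohm's law per synapse, summed on the output line."""
    return sum(g * V_READ * px for g, px in zip(conductances, image))


def classify(image: List[int]) -> str:
    """Return the letter whose array produces the largest current."""
    currents = {letter: array_current(image, synapse_array(letter)) for letter in LETTERS}
    return max(currents, key=currents.get)


if __name__ == "__main__":
    for letter in LETTERS:
        print(letter, "->", classify(flatten(LETTERS[letter])))  # each clean letter maps to itself
```

In this toy model the I and T templates share seven of their nine lit pixels, so their currents stay relatively close, which gives one intuition for why similar letters become harder to separate once an image is blurred.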

The researchers found that the chip correctly classified clear images of each letter but was less able to distinguish between blurry images, for instance between I and T. They then quickly swapped the chip’s processing layer for a better “denoising” processor, after which the device accurately identified the images.

The researchers believe the possible applications are wide-ranging, and they intend to expand the chip’s sensing and processing capabilities.

Future outlook

Looking ahead, the researchers are especially excited about bringing this architecture to edge computing devices, which process data locally instead of relying on supercomputers or cloud-based computing, a shift they believe could open up many new possibilities.

As the internet of things grows, the team expects demand for multifunctional edge computing devices to rise sharply, and believes its proposed design offers the flexibility such devices will need.

The researchers also plan to enhance the chip’s sensing and processing capabilities so that it can recognize more complex images or be used in wearable electronic skin and healthcare monitoring.

The researchers are also intrigued by the idea of users assembling the chip themselves from sensing and processing layers that could be sold separately.

Depending on whether they need image or video recognition, users could then choose from a variety of neural networks.

Conclusion

The team singles out edge computing as one of several possible uses. Jeehwan Kim, an associate professor of mechanical engineering at MIT, predicts that demand for multifunctional edge computing devices will increase significantly as we enter the era of the internet of things based on sensor networks.

He adds that, in the future, “our suggested hardware design will allow tremendous adaptability of edge computing.”

In conclusion, this chip could change the future of electronics and open the door to a broader range of AI applications.
