Have you ever watched a movie, played a video game, or used virtual reality and noticed anything off about how human characters moved and appeared?

Creating realistic and detailed computer-generated humans has long been an aim of computer graphics and computer vision research.

The HumanRF project is an exciting step toward that aim.

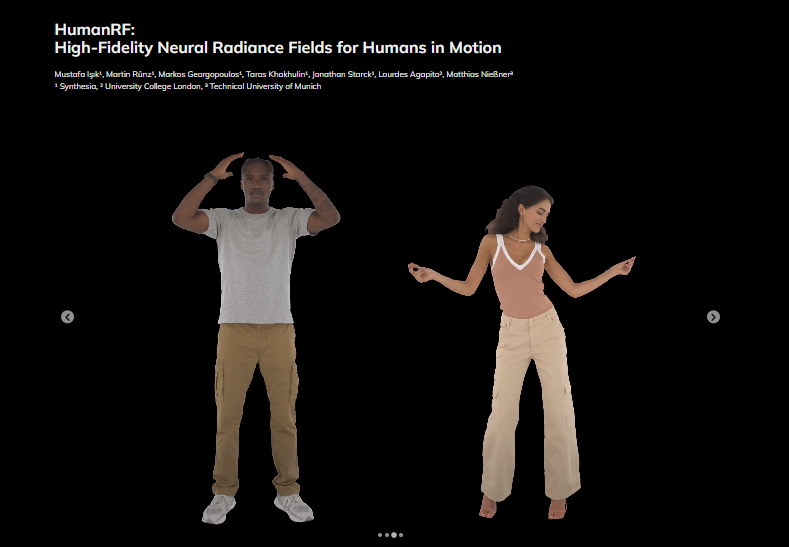

HumanRF is a dynamic neural scene representation that uses multi-view video input to capture the full-body appearance of humans in motion. Let’s look at what it is and what benefits this technology could bring.

Capturing Human Performance

Creating photorealistic representations of virtual environments has long been a challenge in computer graphics.

Traditionally, artists created 3D objects by hand. Recent research, however, has concentrated on reconstructing 3D representations from real-world data.

Capturing and synthesizing realistic human performances, in particular, has been a focus of study for applications such as film production, computer games, and telepresence.

Advances in Dynamic Neural Radiance Fields

In recent years, tremendous progress has been made on these challenges through neural radiance fields (NeRF). NeRF encodes a scene as a continuous 3D field of density and color stored in a multi-layer perceptron (MLP); rendering that field from camera poses not seen during training enables novel-view synthesis.
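The step that turns an MLP's outputs into a pixel color can be illustrated with the standard NeRF volume-rendering quadrature, C = Σ T_i (1 − exp(−σ_i δ_i)) c_i, where T_i = exp(−Σ_{j<i} σ_j δ_j) is the light remaining before sample i. In this minimal sketch the densities `sigmas`, the colors, and the sample spacings `deltas` along one camera ray are passed in directly rather than queried from a trained MLP; the function name `composite_ray` is hypothetical.

```python
import math
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]


def composite_ray(sigmas: Sequence[float], colors: Sequence[RGB],
                  deltas: Sequence[float]) -> RGB:
    """Alpha-composite samples along one ray, front to back (NeRF quadrature)."""
    transmittance = 1.0  # fraction of light not yet absorbed before this sample
    out = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha          # contribution of this sample
        for k in range(3):
            out[k] += weight * color[k]
        transmittance *= 1.0 - alpha            # equals exp(-sigma * delta)
    return (out[0], out[1], out[2])
```

For example, `composite_ray([math.log(2.0), 50.0], [(1.0, 0.0, 0.0), (0.0, 0.0, 1.0)], [1.0, 1.0])` places a half-transparent red sample in front of an almost opaque blue one and returns approximately (0.5, 0.0, 0.5).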

While NeRF was initially focused on static scenes, more recent work has addressed dynamic scenes using time conditioning or deformation fields. However, these methods continue to struggle with longer sequences with complex motion, particularly when it comes to capturing moving humans.

The ActorsHQ Dataset

To address these shortcomings, the authors propose ActorsHQ, a new high-fidelity dataset of clothed humans in motion, designed for photorealistic novel-view synthesis. The dataset contains multi-view recordings from 160 synchronized cameras, each capturing a 12-megapixel video stream.

Alongside the dataset, the authors introduce a new scene representation that extends Instant-NGP hash encodings to the temporal domain, incorporating the time dimension through a low-rank space-time tensor decomposition of the feature grid.
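The spatial hash behind Instant-NGP's encoding can be sketched as follows: the integer coordinates of each grid vertex are multiplied by per-axis primes, XORed together, and reduced modulo the table size, and features are trilinearly interpolated from the eight surrounding vertices. The primes are those given in the Instant-NGP paper; the class name `HashGridLevel`, the table size, the feature count, and the initialization range are illustrative assumptions. Nothing in the sketch depends on the axes being spatial, so the same level could index (x, y, t). Instant-NGP also stacks many such levels at different resolutions and uses direct indexing for coarse levels; both details are omitted here.

```python
import math
import random
from itertools import product
from typing import List, Sequence

# Per-axis primes for the spatial hash, as given in the Instant-NGP paper.
PRIMES = (1, 2654435761, 805459861)
MASK32 = 0xFFFFFFFF  # emulate unsigned 32-bit multiplication


def grid_hash(vertex: Sequence[int], table_size: int) -> int:
    """XOR the per-axis products coord * prime, then reduce modulo the table size."""
    h = 0
    for coord, prime in zip(vertex, PRIMES):
        h ^= (coord * prime) & MASK32
    return h % table_size


class HashGridLevel:
    """One resolution level of a hash-encoded feature grid over three axes in [0, 1]."""

    def __init__(self, resolution: int, table_size: int = 2**14,
                 n_features: int = 2, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.resolution = resolution
        self.table_size = table_size
        # Trainable feature vectors; small random values stand in for the initial state.
        self.table = [[rng.uniform(-1e-4, 1e-4) for _ in range(n_features)]
                      for _ in range(table_size)]

    def lookup(self, p: Sequence[float]) -> List[float]:
        """Trilinearly interpolate the hashed features of the 8 vertices around p."""
        scaled = [c * self.resolution for c in p]
        base = [math.floor(s) for s in scaled]
        frac = [s - b for s, b in zip(scaled, base)]
        out = [0.0] * len(self.table[0])
        for offset in product((0, 1), repeat=3):
            weight = 1.0
            for f, o in zip(frac, offset):
                weight *= f if o else 1.0 - f
            corner = [b + o for b, o in zip(base, offset)]
            feature = self.table[grid_hash(corner, self.table_size)]
            for k in range(len(out)):
                out[k] += weight * feature[k]
        return out
```

Hash collisions are tolerated by design: the table is much smaller than the number of fine-level vertices, and training resolves conflicts in favor of the vertices that matter most for the rendering loss.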

Introducing HumanRF

HumanRF is a 4D dynamic neural scene representation that captures full-body motion from multi-view video input and allows playback from previously unseen viewpoints. In effect, it stores a long multi-view recording in a compact form while preserving fine detail.

It achieves this by breaking space and time into smaller pieces, much as a Lego set can be taken apart and reassembled.

HumanRF captures people’s movements in video with high fidelity, even when those movements are difficult or complex. The authors evaluate HumanRF on the newly introduced ActorsHQ dataset and report a significant improvement over existing state-of-the-art methods.

So how does HumanRF work?

Overview of the HumanRF Method

Decomposition of 4D Feature Grid

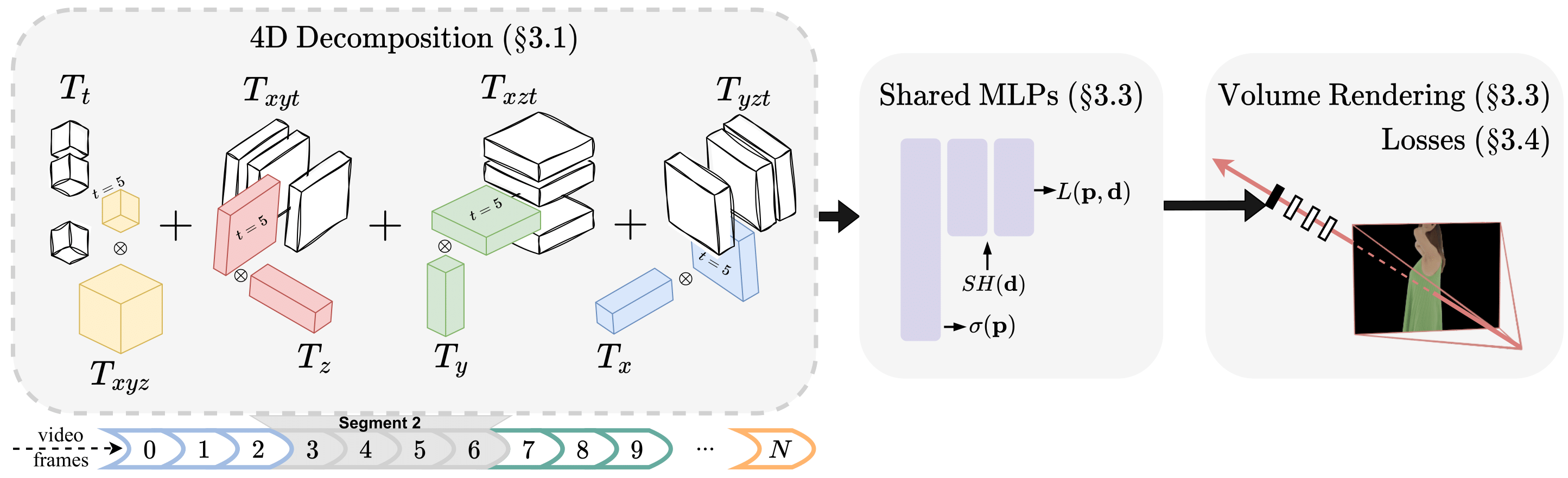

The 4D feature grid decomposition is a critical component of HumanRF. The method models a dynamic 3D scene as a set of adaptively partitioned temporal segments, and each segment has its own trainable 4D feature grid that encodes a contiguous range of frames.

To represent spatiotemporal data more compactly, each 4D feature grid is factorized into four 3D and four 1D feature grids. Storing these lower-dimensional grids instead of a full 4D grid lets the method render high-quality, detailed images while using far less memory.
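The factorization can be sketched as follows, under stated assumptions: each 3D grid covers three of the four axes (x, y, z, t) and is paired with a 1D grid over the remaining axis, and a query's feature is the sum of the elementwise products of each pair. The pairing follows a vector-matrix-style factorization; the exact combination rule, the dense (rather than hashed) grids, and the names `DenseGrid`, `Decomposed4DGrid`, and `param_counts` are illustrative assumptions, not HumanRF's code. The parameter count shows the savings: with 128 vertices per axis and one feature channel, a dense 4D grid needs 128^4 = 268,435,456 values, while the factorization needs 4 × (128^3 + 128) = 8,389,120, about 32 times fewer.

```python
import random
from itertools import product
from typing import List, Sequence, Tuple


class DenseGrid:
    """Dense feature grid over `dims` axes in [0, 1], with multilinear interpolation."""

    def __init__(self, dims: int, resolution: int, n_features: int, seed: int) -> None:
        rng = random.Random(seed)
        self.dims, self.res, self.n_features = dims, resolution, n_features
        n_vertices = (resolution + 1) ** dims
        self.data = [[rng.gauss(0.0, 0.1) for _ in range(n_features)]
                     for _ in range(n_vertices)]

    def _index(self, vertex: Sequence[int]) -> int:
        idx = 0
        for c in vertex:  # row-major flattening of the vertex coordinates
            idx = idx * (self.res + 1) + c
        return idx

    def __call__(self, p: Sequence[float]) -> List[float]:
        scaled = [min(max(c, 0.0), 1.0) * self.res for c in p]
        base = [min(int(s), self.res - 1) for s in scaled]  # keep base + 1 inside the grid
        frac = [s - b for s, b in zip(scaled, base)]
        out = [0.0] * self.n_features
        for offset in product((0, 1), repeat=self.dims):
            w = 1.0
            for f, o in zip(frac, offset):
                w *= f if o else 1.0 - f
            feat = self.data[self._index([b + o for b, o in zip(base, offset)])]
            for k in range(self.n_features):
                out[k] += w * feat[k]
        return out


# Axis indices: x=0, y=1, z=2, t=3. Each 3D grid is paired with a 1D grid over the missing axis.
PAIRS = (((0, 1, 2), 3), ((0, 1, 3), 2), ((0, 2, 3), 1), ((1, 2, 3), 0))


class Decomposed4DGrid:
    def __init__(self, resolution: int = 8, n_features: int = 4, seed: int = 0) -> None:
        self.n_features = n_features
        self.grids3d = [DenseGrid(3, resolution, n_features, seed + i) for i in range(4)]
        self.grids1d = [DenseGrid(1, resolution, n_features, seed + 4 + i) for i in range(4)]

    def __call__(self, x: float, y: float, z: float, t: float) -> List[float]:
        q = (x, y, z, t)
        out = [0.0] * self.n_features
        for (axes3, axis1), g3, g1 in zip(PAIRS, self.grids3d, self.grids1d):
            a = g3([q[i] for i in axes3])
            b = g1([q[axis1]])
            for k in range(self.n_features):
                out[k] += a[k] * b[k]  # elementwise product, summed over the four pairs
        return out


def param_counts(vertices_per_axis: int, n_features: int) -> Tuple[int, int]:
    """Values stored by a full dense 4D grid vs. the four-3D-plus-four-1D factorization."""
    n, f = vertices_per_axis, n_features
    return n**4 * f, 4 * (n**3 + n) * f
```

In a full model, the resulting feature vector would be passed to the shallow MLPs that predict density and color.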

Adaptive Temporal Partitioning

To render arbitrarily long multi-view sequences efficiently, HumanRF combines shallow multilayer perceptrons with sparse hash-encoded feature grids. The time domain is split into adaptively sized temporal segments, each represented by its own compact 4D feature grid.

Adaptive temporal partitioning chooses the segment boundaries so that the total 3D volume occupied across each segment’s frames is roughly the same. Segments with a lot of motion therefore span fewer frames, and segments with little motion span more, so representation capacity is spread evenly and the representation stays consistent no matter how long the video is.
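A greedy version of this partitioning can be sketched in a few lines. The sketch assumes each frame's occupied voxels are already known (for example, estimated from the foreground masks) and keeps adding frames to the current segment until the union of their occupied voxels would exceed a fixed budget. The set-of-voxels representation, the `budget` parameter, and the function name `partition_frames` are simplifying assumptions rather than HumanRF's exact criterion.

```python
from typing import List, Set, Tuple

Voxel = Tuple[int, int, int]


def partition_frames(occupancy: List[Set[Voxel]], budget: int) -> List[Tuple[int, int]]:
    """Greedily split frames into half-open segments [start, end) whose
    union of occupied voxels stays within `budget` (a single frame that
    exceeds the budget on its own still gets its own segment)."""
    segments: List[Tuple[int, int]] = []
    start = 0
    union: Set[Voxel] = set()
    for i, occ in enumerate(occupancy):
        merged = union | occ
        if i > start and len(merged) > budget:
            segments.append((start, i))      # close the current segment
            start, union = i, set(occ)       # frame i opens a new one
        else:
            union = merged
    if occupancy:
        segments.append((start, len(occupancy)))
    return segments
```

With four frames of a static actor followed by four frames of an actor sweeping through new voxels, the static part ends up in one long segment while the moving part is split into shorter ones, which is the behavior described above.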

Supervision with 2D-only Losses

HumanRF is supervised only with 2D losses, which measure the error between the rendered and input RGB images and between the rendered and input foreground masks.
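A minimal sketch of such 2D-only supervision over a batch of pixels is shown below. It assumes a mean L1 color loss and a binary cross-entropy mask loss on each ray's accumulated opacity (1 minus the final transmittance from volume rendering), combined with a weight `mask_weight`; the specific loss forms, the weight value, and the function names are illustrative assumptions, not HumanRF's exact objective.

```python
import math
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]


def photometric_loss(rendered: Sequence[RGB], target: Sequence[RGB]) -> float:
    """Mean absolute error over all pixels and color channels."""
    total = sum(abs(r - t) for pr, pt in zip(rendered, target) for r, t in zip(pr, pt))
    return total / (3 * len(rendered))


def mask_loss(opacity: Sequence[float], mask: Sequence[float], eps: float = 1e-6) -> float:
    """Binary cross-entropy between accumulated ray opacity and the foreground mask."""
    total = 0.0
    for o, m in zip(opacity, mask):
        o = min(max(o, eps), 1.0 - eps)  # avoid log(0)
        total -= m * math.log(o) + (1.0 - m) * math.log(1.0 - o)
    return total / len(opacity)


def total_loss(rendered: Sequence[RGB], target: Sequence[RGB],
               opacity: Sequence[float], mask: Sequence[float],
               mask_weight: float = 0.1) -> float:
    """Hypothetical weighted sum of the two 2D losses."""
    return photometric_loss(rendered, target) + mask_weight * mask_loss(opacity, mask)
```

Every term compares rendered pixels with captured pixels, so no 3D ground truth is needed at any point.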

Temporal consistency comes from MLPs shared across all segments together with the 4D decomposition, and the adaptive partitioning produces results very close to those obtained with the best fixed segment size.

Because it relies only on 2D losses, the method needs no 3D ground truth, which makes it simpler to train than methods that depend on 3D supervision.

In the authors’ experiments, the method outperforms the other tested methods, making it a promising approach for producing high-quality images of human actors in motion.

Possible Applications

Enhancing Video Games and Virtual Reality

HumanRF could be used to create virtual characters for video games and VR applications. An actor’s performance is recorded from many angles, and the footage is then processed with HumanRF.

This could let game developers create characters that move and interact with their environment more realistically, giving players a more engaging experience.

Motion Capture in Film Production

By producing high-quality renderings of actors in motion, HumanRF can enhance motion capture in the filmmaking process.

Filmmakers can record an actor’s performance with multiple cameras and use HumanRF to produce a 4D representation, yielding a realistic, dynamic performance that can be viewed and edited from different angles.

This could reduce the need for reshoots and lower production costs.

Enhancing Virtual Meetings and Teleconferencing

By producing 3D representations of remote participants, ideally in real time, HumanRF could make virtual meetings more immersive and realistic.

Capturing a remote participant from various angles and processing the footage with HumanRF could give everyone in the meeting a more engaging and interactive experience.

HumanRF could also render high-quality views of remote participants during video conferences, supporting better collaboration and communication.

Facilitating Education and Training

HumanRF can be used to build dynamic, realistic simulations for training and education.

By recording instructors or actors carrying out particular tasks and processing the footage with HumanRF, developers can create training simulations in which trainees practice in a more realistic and engaging environment.

For instance, HumanRF could support simulations for driving, flight, or medical training.

Enhancing Security and Surveillance

In surveillance and security applications, HumanRF can be used to create dynamic, realistic 3D models of individuals or groups. By capturing people from various viewpoints and processing the footage with HumanRF, security personnel can obtain a more accurate representation of a person’s motion and behavior.

This could improve the identification and tracking of potential threats. Security personnel could also use HumanRF to create simulations of emergency scenarios and prepare for different situations.

Wrap-Up: What Does the Future Hold?

HumanRF is an effective approach for generating high-quality novel views of a moving human actor. It shows promise for a variety of applications, including motion capture, virtual reality, and telepresence, and its potential is not limited to these; there are several other possible uses for this technology.

As research in this area develops, the approach is likely to become more efficient and precise.

New algorithms and architectures are likely to lead to more advanced ways of modeling and depicting human actors in motion, which could bring numerous interesting advances to the film, gaming, and communication industries.

Another potential direction for future study is combining HumanRF with other deep learning models, which might lead to more effective and efficient technologies for human motion analysis and modeling.

Combining HumanRF with other technologies, such as haptic feedback systems and augmented reality, could also give rise to new applications in medical training, education, and therapy.
