
In recent years, neural networks have grown in popularity because they have proven extremely effective at a broad range of tasks.

They have been shown to be a great choice for image and audio recognition, natural language processing, and even playing complicated games like Go and chess.

In this post, I will walk you through the whole process of training a neural network and explain each step along the way.

As I go over the steps, I will also build a simple example so there is a practical side as well.

So, come along, and let’s learn how to train neural networks.

Let’s start simple and ask what neural networks are in the first place.

What Exactly Are Neural Networks?

Neural networks are computational models loosely inspired by the way neurons in the human brain are connected. They can learn from vast amounts of data and spot patterns that people may find difficult to detect.

Neural networks have become popular because of their versatility in tasks such as image and audio recognition, natural language processing, and predictive modeling.

Overall, neural networks are a powerful tool with the potential to transform the way we approach many kinds of work.

Why Should We Know About Them?

Understanding neural networks is critical because they have led to discoveries in a variety of fields, including computer vision, speech recognition, and natural language processing.

Neural networks, for example, are at the heart of recent developments in self-driving cars, automatic translation services, and even medical diagnostics.

Understanding how neural networks work and how to design them helps us build new and inventive applications, and it may lead to even greater discoveries in the future.

A Note About the Tutorial

As I said above, I would like to explain the steps of training a neural network through an example. For this, we will use the MNIST dataset, a popular choice for beginners who want to get started with neural networks.

MNIST is an acronym that stands for Modified National Institute of Standards and Technology. It is a handwritten digit dataset that is commonly used for training and testing machine learning models, notably neural networks.

The collection contains 70,000 grayscale images of handwritten digits from 0 to 9, each 28×28 pixels.

The MNIST dataset is a popular benchmark for image classification. It is frequently used for teaching and learning because it is compact and easy to work with, while still posing a meaningful challenge for machine learning algorithms.

The MNIST dataset is supported by several machine learning frameworks and libraries, including TensorFlow, Keras, and PyTorch.

Now that we know about the MNIST dataset, let’s get started with the steps of training a neural network.

Basic Steps to Train a Neural Network

Import Necessary Libraries

When first starting to train a neural network, it is critical to have the necessary tools to design and train the model. The initial step in creating a neural network is to import required libraries such as TensorFlow, Keras, and NumPy.

These libraries provide the building blocks for development: TensorFlow and Keras supply layers, loss functions, optimizers, and training routines, while NumPy handles numerical arrays. Together, they make it possible to build sophisticated neural network designs and train them efficiently.

To begin our example, we import the required libraries: TensorFlow, Keras, and NumPy. TensorFlow is an open-source machine learning framework, Keras is a high-level neural network API, and NumPy is a Python library for numerical computing.

```python
import tensorflow as tf
from tensorflow import keras
import numpy as np
```

Load the Dataset

Next, we load the dataset. The dataset is the set of data on which the neural network will be trained. It may be any type of data, including images, audio, and text.

It is critical to divide the dataset into two parts: one for training the neural network and another for evaluating how well the trained model performs on data it has not seen. Several libraries, including TensorFlow, Keras, and PyTorch, can be used to load the dataset.

For our example, we also use Keras to load the MNIST dataset, which comes already split into 60,000 training images and 10,000 test images.

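```python
# Load MNIST, already split into training and test sets.
# train_images: (60000, 28, 28) uint8 pixels; train_labels: (60000,) digits 0-9
mnist = keras.datasets.mnist
(train_images, train_labels), (test_images, test_labels) = mnist.load_data()
```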
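MNIST arrives already divided into training and test sets, but many datasets do not. The sketch below shows one way to make that split by hand with NumPy. It assumes the samples are stored in arrays whose first dimension indexes examples. `split_dataset` is a hypothetical helper written for illustration (scikit-learn's `train_test_split` is a ready-made alternative), and the toy arrays stand in for real data.

```python
import numpy as np

def split_dataset(x, y, test_fraction=0.2, seed=0):
    """Hypothetical helper: shuffle the samples, then hold out `test_fraction` of them.

    x and y must have the same length along their first dimension.
    """
    rng = np.random.default_rng(seed)
    indices = rng.permutation(len(x))          # random order of sample indices
    n_test = int(len(x) * test_fraction)       # number of held-out samples
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    return x[train_idx], x[test_idx], y[train_idx], y[test_idx]

# Toy stand-in for an unsplit image dataset: 100 blank 28x28 images with labels 0-9
images = np.zeros((100, 28, 28), dtype=np.uint8)
labels = np.arange(100) % 10

x_train, x_test, y_train, y_test = split_dataset(images, labels)
print(x_train.shape, x_test.shape)   # (80, 28, 28) (20, 28, 28)
```

Shuffling before splitting matters when the data is ordered, for example by class, so that both parts contain a representative mix of examples.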

Preprocess the Data

Data preprocessing is an important stage in training a neural network. It entails preparing and cleaning the data before it is fed into the neural network.

Scaling pixel values, normalizing data, and converting labels to one-hot encoding are examples of preprocessing procedures. These processes assist the neural network in learning more effectively and precisely.

Good preprocessing can also help the neural network generalize better and improve its performance.

You must preprocess the data before training the neural network. This includes changing the labels to one-hot encoding and scaling the pixel values to be between 0 and 1.

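```python
# Scale pixel values from the 0-255 range to 0-1
train_images = train_images / 255.0
test_images = test_images / 255.0

# Convert integer labels (0-9) to one-hot vectors of length 10
train_labels = keras.utils.to_categorical(train_labels, 10)
test_labels = keras.utils.to_categorical(test_labels, 10)
```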
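To make the effect of these two steps concrete, here is a NumPy-only sketch of the same transformations on tiny made-up arrays. The `one_hot` function is a hypothetical helper that mimics, for integer labels, the kind of output `keras.utils.to_categorical` returns; it is not the Keras implementation.

```python
import numpy as np

def one_hot(labels, num_classes):
    """Hypothetical helper: one row per label, with 1.0 in that label's column."""
    encoded = np.zeros((len(labels), num_classes), dtype=np.float32)
    encoded[np.arange(len(labels)), labels] = 1.0   # row i gets a 1 in column labels[i]
    return encoded

# Scaling: uint8 pixel values 0-255 become floats between 0 and 1
pixels = np.array([0, 51, 255], dtype=np.uint8)
print(pixels / 255.0)             # values 0.0, 0.2 and 1.0

# One-hot encoding: digit 3 becomes a row with a single 1 in column 3
print(one_hot(np.array([3]), 10))
```

Calling `argmax` on a one-hot row recovers the original digit, which is how the labels are read back later in this post.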

Define the Model

The process of defining the neural network model involves establishing its architecture, such as the number of layers, number of neurons per layer, activation functions, and network type (feedforward, recurrent, or convolutional).

The right neural network design depends on the kind of problem you are trying to solve. A well-chosen architecture helps the network learn more efficiently and accurately.

Now it’s time to define the neural network model. For this example, we use a simple model: a Flatten layer that turns each 28×28 image into a 784-element vector, two hidden layers with 128 neurons each (ReLU activation), and a softmax output layer with 10 neurons, one per digit.

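```python
model = keras.Sequential([
    keras.layers.Flatten(input_shape=(28, 28)),    # 28x28 image -> 784-element vector
    keras.layers.Dense(128, activation='relu'),    # first hidden layer
    keras.layers.Dense(128, activation='relu'),    # second hidden layer
    keras.layers.Dense(10, activation='softmax')   # one probability per digit
])
```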
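To see what this architecture computes, the following sketch re-creates its forward pass in plain NumPy with randomly initialized weights. The random initialization is an assumption for illustration: Keras uses its own initializers and then learns the weights during training. The sketch also counts the trainable parameters. Each `Dense` layer has inputs × outputs weights plus one bias per output, so the three layers contribute 100,480, 16,512, and 1,290 parameters.

```python
import numpy as np

rng = np.random.default_rng(0)

def dense(n_in, n_out):
    """Random weights and zero biases for one fully connected layer (illustrative only)."""
    return rng.normal(0.0, 0.05, size=(n_in, n_out)), np.zeros(n_out)

def relu(z):
    return np.maximum(z, 0.0)

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)   # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

# Same layer sizes as the Keras model: 784 -> 128 -> 128 -> 10
layers = [dense(784, 128), dense(128, 128), dense(128, 10)]

def forward(images):
    x = images.reshape(len(images), -1)    # Flatten: (batch, 28, 28) -> (batch, 784)
    for W, b in layers[:-1]:
        x = relu(x @ W + b)                # hidden layers with ReLU
    W, b = layers[-1]
    return softmax(x @ W + b)              # output layer: 10 class probabilities

batch = rng.random((4, 28, 28))            # four fake images with pixels in [0, 1)
probs = forward(batch)
n_params = sum(W.size + b.size for W, b in layers)
print(probs.shape, n_params)               # (4, 10) 118282
```

The total of 118,282 parameters should agree with what `model.summary()` reports for the Keras model above. Each row of `probs` sums to 1 because of the softmax.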

Compile the Model

When compiling the neural network model, we must specify the loss function, the optimizer, and the metrics. The loss function measures how far the network’s predictions are from the correct outputs.

During training, the optimizer adjusts the network’s weights to reduce the loss and so improve accuracy. Metrics, such as accuracy, are used to monitor the network’s performance during training and evaluation. The model must be compiled before it can be trained.

In our example, we compile the model with the Adam optimizer, the categorical cross-entropy loss (which suits one-hot encoded labels), and accuracy as the metric.

```python
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
```

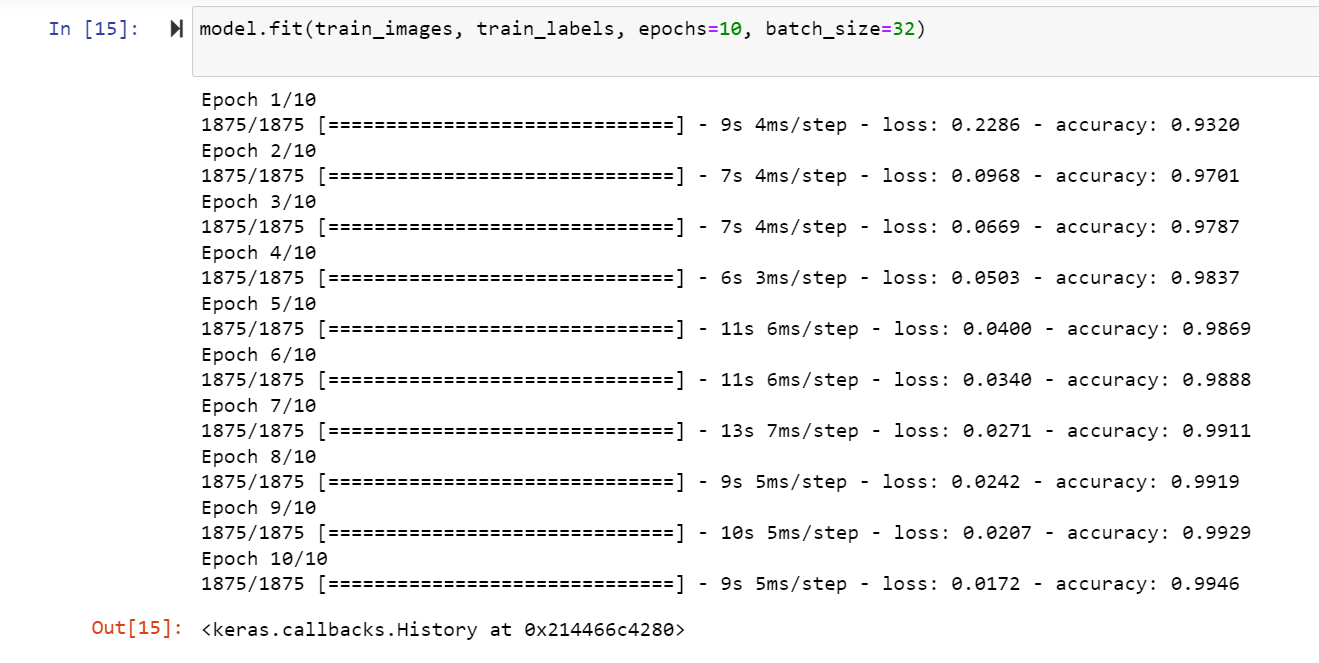
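The categorical cross-entropy loss chosen above compares predicted probabilities with one-hot labels. For a single sample it is -sum_k y_k * log(p_k), which reduces to minus the log of the probability assigned to the correct class, and the reported loss is the average over samples. The NumPy sketch below uses a hypothetical helper rather than the Keras source. It shows that confident correct predictions give a small loss and confident wrong predictions give a large one.

```python
import numpy as np

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean over samples of -sum(y_true * log(y_pred)); y_true is one-hot."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)           # avoid log(0)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=1)))

y_true = np.array([[0, 1, 0], [1, 0, 0]], dtype=float)
confident_right = np.array([[0.05, 0.90, 0.05], [0.80, 0.10, 0.10]])
confident_wrong = np.array([[0.90, 0.05, 0.05], [0.10, 0.80, 0.10]])
print(categorical_crossentropy(y_true, confident_right))  # about 0.164
print(categorical_crossentropy(y_true, confident_wrong))  # about 2.649
```

During training, the optimizer's job is to adjust the weights so that this number goes down.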

Train the Model

Passing the prepared dataset through the neural network while modifying the network’s weights to minimize the loss function is known as training the neural network.

A validation dataset, held out from the training data, is often used during training to track the network’s performance on unseen data and to detect overfitting. Training can take some time, and the network must be trained long enough to avoid underfitting.

Using the training data, we can now train the model. To do this, we must define the batch size (the number of samples processed before the model is updated) and the number of epochs (the number of repetitions across the complete dataset).

```python
model.fit(train_images, train_labels, epochs=10, batch_size=32)
```

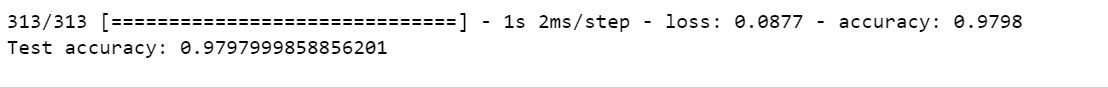
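Keras hides the training loop inside `model.fit`. The sketch below writes a comparable loop by hand to show the roles of `batch_size` and `epochs`. Each epoch the data is reshuffled and split into mini-batches of 32, and the weights are updated once per batch to reduce the cross-entropy loss. To stay short and runnable without downloading MNIST, the sketch makes several substitutions:

- synthetic clustered data instead of images,
- a single softmax layer instead of two hidden layers,
- a hand-derived gradient instead of automatic backpropagation, and
- plain stochastic gradient descent instead of Adam.

```python
import math
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for images: 3 clusters of 20-dimensional points, 200 per class
n_classes, n_features, n_per_class = 3, 20, 200
centers = rng.normal(0.0, 1.0, size=(n_classes, n_features))
x = np.concatenate([c + rng.normal(0.0, 1.0, size=(n_per_class, n_features)) for c in centers])
labels = np.repeat(np.arange(n_classes), n_per_class)
y = np.eye(n_classes)[labels]                        # one-hot targets

W = np.zeros((n_features, n_classes))                # weights of a single softmax layer
b = np.zeros(n_classes)
batch_size, epochs, learning_rate = 32, 10, 0.1

def predict(inputs):
    """Softmax probabilities for each input row."""
    z = inputs @ W + b
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def mean_cross_entropy(inputs, targets):
    p = np.clip(predict(inputs), 1e-7, 1.0)
    return float(np.mean(-np.sum(targets * np.log(p), axis=1)))

losses = []
for epoch in range(epochs):                          # one epoch = one full pass over the data
    order = rng.permutation(len(x))                  # reshuffle before every epoch
    for start in range(0, len(x), batch_size):       # one weight update per mini-batch
        idx = order[start:start + batch_size]
        # Gradient of the mean cross-entropy w.r.t. the logits (softmax + cross-entropy)
        grad_z = (predict(x[idx]) - y[idx]) / len(idx)
        W -= learning_rate * x[idx].T @ grad_z       # gradient step on the weights
        b -= learning_rate * grad_z.sum(axis=0)      # gradient step on the biases
    losses.append(mean_cross_entropy(x, y))

accuracy = float(np.mean(predict(x).argmax(axis=1) == labels))
print(f"loss after epoch 1: {losses[0]:.3f}, after epoch {epochs}: {losses[-1]:.3f}")
print(f"training accuracy: {accuracy:.2f}")
print("MNIST updates per epoch with batch_size=32:", math.ceil(60000 / 32))   # 1875
```

With 60,000 training images and `batch_size=32`, `model.fit` performs 1,875 weight updates per epoch. That is the step count Keras typically shows in its per-epoch progress bar.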

Evaluate the Model

Evaluating the neural network means measuring its performance on the test dataset. In this stage, the trained network makes predictions on the test data, and its loss and accuracy are computed.

Accuracy measures how often the network predicts the correct result on new, unseen data. Analyzing the model helps determine how well the network is working and can suggest ways to improve it.

We can finally assess the model’s performance using the test data after training.

```python
test_loss, test_acc = model.evaluate(test_images, test_labels)
print('Test accuracy:', test_acc)
```
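With one-hot labels, the `'accuracy'` metric amounts to checking whether the class with the highest predicted probability matches the true class. Here is a NumPy sketch of that check with made-up predictions. `accuracy` is a hypothetical helper for illustration.

```python
import numpy as np

def accuracy(y_true_one_hot, y_pred_probs):
    """Fraction of samples whose highest-probability class matches the true class."""
    predicted = np.argmax(y_pred_probs, axis=1)
    actual = np.argmax(y_true_one_hot, axis=1)
    return float(np.mean(predicted == actual))

y_true = np.eye(10)[[7, 2, 1, 0]]                 # one-hot labels for digits 7, 2, 1, 0
y_pred = np.full((4, 10), 0.01)
y_pred[[0, 1, 2, 3], [7, 2, 1, 5]] = 0.91         # the last prediction is wrong (5 instead of 0)
print(accuracy(y_true, y_pred))                   # 0.75
```

Applied to the trained model, `accuracy(test_labels, model.predict(test_images))` should give essentially the same value as `test_acc`.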

That’s all! We trained a neural network to recognize handwritten digits from the MNIST dataset.

Training a neural network involves several steps, from preparing the data to evaluating the trained model. By following these steps, beginners can build and train their own neural networks and apply them to a variety of problems.

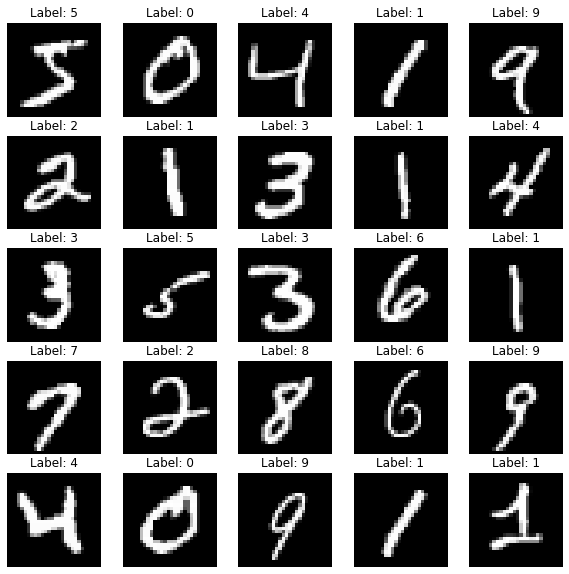

Visualizing the Example

Let’s visualize part of the data from this example to understand it better.

In this code snippet, we use the Matplotlib package to plot a sample of images from the training dataset (the first 25). First, we import Matplotlib’s “pyplot” module under the alias “plt”. Then we create a 10-by-10-inch figure with a grid of 5 rows and 5 columns of subplots.

Next, a for loop iterates over the subplots and displays one training image on each. The “imshow” function draws the image, with the “cmap” option set to ‘gray’ to render it in grayscale. Each subplot’s title is set to the image’s label; because the labels were one-hot encoded during preprocessing, “argmax” is used to recover the digit.

Finally, the “show” function displays the figure. Inspecting a sample of images this way helps us understand the data and spot possible problems.

```python
import matplotlib.pyplot as plt

# Plot the first 25 training images in a 5x5 grid
fig, axes = plt.subplots(nrows=5, ncols=5, figsize=(10, 10))

for i, ax in enumerate(axes.flat):
    ax.imshow(train_images[i], cmap='gray')
    # Labels are one-hot encoded at this point, so argmax recovers the digit
    ax.set_title(f"Label: {train_labels[i].argmax()}")
    ax.axis('off')

plt.show()
```

Important Neural Network Models

- Feedforward Neural Networks (FFNN): A simple type of neural network in which information travels in only one direction, from the input layer to the output layer via one or more hidden layers.

- Convolutional Neural Networks (CNN): A neural network that is commonly used in image detection and processing. CNNs are intended to recognize and extract features from pictures automatically.

- Recurrent Neural Networks (RNN): A neural network designed for sequential data such as text, speech, or time series. RNNs maintain a hidden state that carries information from earlier steps in a sequence to later ones.

- Long Short-Term Memory (LSTM) Networks: A form of RNN designed to overcome the vanishing gradient problem of standard RNNs. LSTMs are better at capturing long-term dependencies in sequential data.

- Autoencoders: Unsupervised neural networks trained to reproduce their input data at the output layer. Autoencoders can be used for data compression, anomaly detection, and image denoising.

- Generative Adversarial Networks (GAN): A generative model trained to produce new data similar to a training dataset. GANs are made up of two networks trained against each other: a generator network that creates new data and a discriminator network that tries to tell generated data apart from real data.
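CNNs are built around convolution. A small kernel slides over the image and computes a weighted sum at each position, which produces a feature map. The NumPy sketch below applies a hand-picked vertical-edge kernel to a toy 6×6 image. In a real CNN the kernel values are learned during training, and libraries use much faster implementations. `conv2d_valid` is a hypothetical helper for illustration.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the elementwise products.

    As in most deep learning libraries, this is technically cross-correlation:
    the kernel is not flipped.
    """
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# A 6x6 image whose right half is bright, and a kernel that responds to vertical edges
image = np.zeros((6, 6))
image[:, 3:] = 1.0
vertical_edge = np.array([[-1.0, 0.0, 1.0]] * 3)

feature_map = conv2d_valid(image, vertical_edge)
print(feature_map.shape)   # (4, 4)
print(feature_map[0])      # [0. 3. 3. 0.]
```

The feature map is large only where the kernel overlaps the dark-to-bright boundary. This is the kind of local pattern a CNN layer learns to detect.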

Wrap-Up, What Should Be Your Next Steps?

Explore several online resources and courses to learn more about training a neural network. Working on projects or examples is one method to gain a better grasp of neural networks.

Start with simple examples like binary classification or image classification tasks, and then move on to more difficult tasks like natural language processing or reinforcement learning.

Working on projects helps you to obtain real experience and improve your neural network training skills.

You may also join online machine learning and neural network groups and forums to interact with other learners and professionals, share your work, and get feedback and help.
