Data scientists and machine learning professionals deal with a large amount of data of various types in a typical data science project. They build numerous models with different configurations and features and run multiple iterations of parameter tuning to get optimal performance.

In such a scenario, every data modification and every adjustment to the model-building process must be monitored and measured to determine what worked and what did not. It’s also vital to be able to go back to a previous version and review earlier results.

Data Version Control (DVC) is one technology that lets us monitor all of this: it helps manage the data and the underlying models and makes results reproducible.

In this post, we take a close look at data version control and the best tools for it. Let’s begin.

What is Data Version Control?

Every production system needs versioning: a single point of access to the most up-to-date data. Any resource that is modified often, particularly by several users at the same time, needs an audit trail that records every change.

A version control system keeps everyone on the team on the same page. It ensures that everyone is working on the most recent version of each file and, more importantly, that the whole team can collaborate on the same project at the same time.
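To make the audit-trail and “most recent version” ideas concrete, here is a toy Python sketch. It is not how any of the tools below implement versioning, and the class and method names are hypothetical. Every change is appended to a history, and a write is rejected if it was based on an out-of-date version (optimistic concurrency), so nobody silently overwrites a teammate’s newer change.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditEntry:
    version: int
    author: str
    message: str
    timestamp: str


@dataclass
class VersionedResource:
    """Hypothetical versioned resource: every change lands in an audit trail."""
    content: str = ""
    history: list = field(default_factory=list)

    @property
    def version(self) -> int:
        # The current version is simply the number of recorded changes.
        return len(self.history)

    def update(self, new_content: str, author: str, message: str, based_on: int) -> int:
        # Optimistic concurrency: refuse writes made against a stale version.
        if based_on != self.version:
            raise ValueError(
                f"stale write: based on v{based_on}, current is v{self.version}"
            )
        self.content = new_content
        self.history.append(
            AuditEntry(
                version=self.version + 1,
                author=author,
                message=message,
                timestamp=datetime.now(timezone.utc).isoformat(),
            )
        )
        return self.version


if __name__ == "__main__":
    res = VersionedResource()
    res.update("a,b\n1,2\n", "alice", "initial data", based_on=0)
    try:
        res.update("a,b\n9,9\n", "bob", "edit from an old copy", based_on=0)
    except ValueError as err:
        print(err)
```

Bob’s write fails because he started from version 0 while Alice had already produced version 1; he has to fetch the latest version and reapply his change.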

If you have the right tools, you can accomplish this with minimal effort!

You’ll have consistent data sets and a thorough archive of all your research if you use a dependable data version management strategy. Data versioning tools are critical for your workflow if you care about reproducibility, traceability, and ML model history.

They give you a version identifier for an item, such as a hash of a dataset or model, which you can then use to identify and compare versions. This identifier is often recorded in your metadata management solution so that your model training is versioned and repeatable.
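As a minimal sketch of what such a version identifier can look like, the Python below computes a SHA-256 content hash for a single file and a combined hash for a whole dataset directory. The function names are hypothetical, and real tools differ in which hash they use and what they include in it; the point is only that identical bytes always give the same ID and any change gives a different one.

```python
import hashlib
from pathlib import Path


def file_version(path: str, chunk_size: int = 1 << 20) -> str:
    """Return a SHA-256 content hash identifying this exact file version."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        # Read in chunks so large datasets don't need to fit in memory.
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def directory_version(root: str) -> str:
    """Combine per-file hashes, in a stable order, into one dataset-level hash."""
    digest = hashlib.sha256()
    for p in sorted(Path(root).rglob("*")):
        if p.is_file():
            rel = p.relative_to(root).as_posix()
            # Separators keep "name + hash" pairs unambiguous.
            digest.update(rel.encode() + b"\0" + file_version(str(p)).encode() + b"\n")
    return digest.hexdigest()
```

Storing `directory_version(...)` next to a trained model’s metadata is one simple way to record exactly which data it was trained on.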

Best Data Version Control tools

Now it’s time to look at the best data version control solutions available, which you can use to keep track of every part of your project.

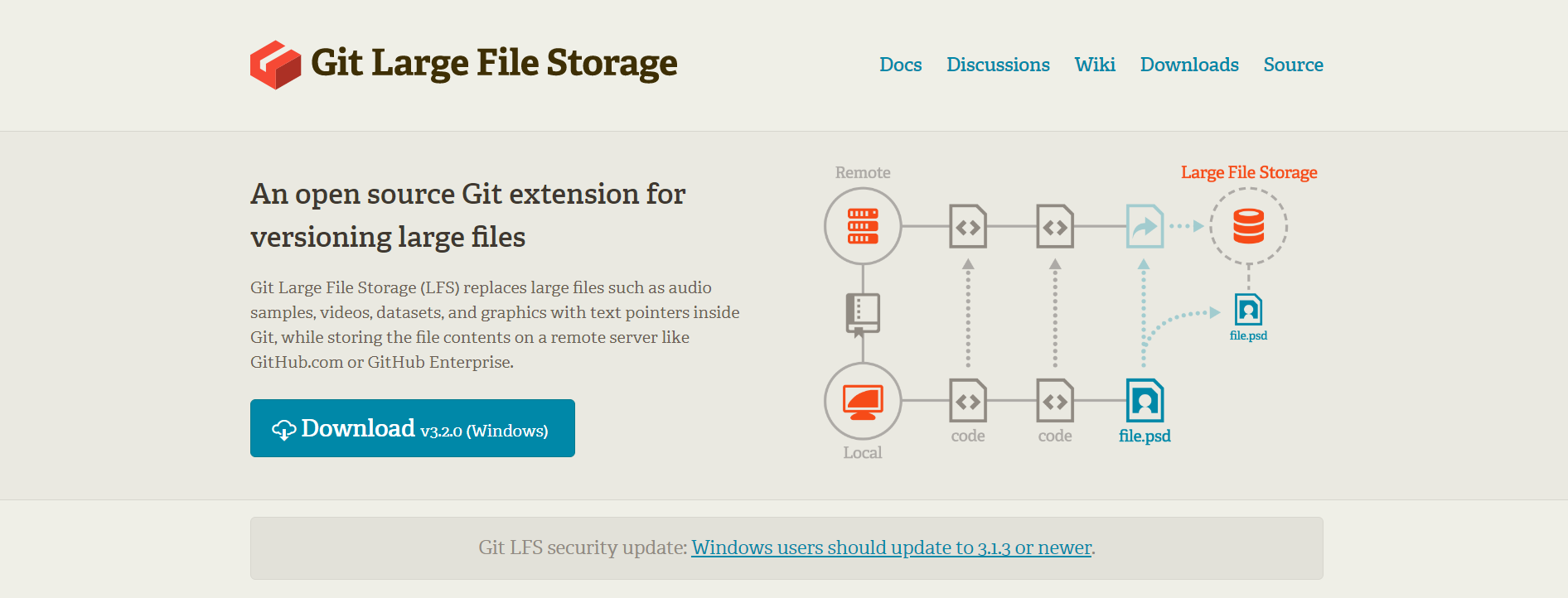

1. Git LFS

Git LFS (Large File Storage) is a free, open-source Git extension. Inside Git, it replaces large files such as audio samples, videos, databases, and photos with text pointers, while the file contents are stored on a remote server such as GitHub.com or GitHub Enterprise.

It lets you version very large files (up to several GB each) with Git, host more in your Git repositories by using external storage, and clone and fetch repositories containing large files more quickly. For data management, it is a fairly lightweight solution: you work with Git as usual, without extra commands, storage systems, or toolkits.

It limits the amount of data you download, so cloning and fetching repositories that contain large files is faster. The pointers are small text files that point to the actual content in LFS storage.

As a result, pushing to the main repository is quick, and the repository itself takes up less space.
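To show what one of these text pointers contains, here is a small Python sketch that builds and parses a pointer in the format described by the Git LFS pointer specification: a `version` line, a SHA-256 `oid`, and the file `size` in bytes. The helper names are hypothetical, and in practice the Git LFS client writes pointers for you once a file pattern is tracked; you never create them by hand.

```python
import hashlib

LFS_SPEC = "https://git-lfs.github.com/spec/v1"


def make_lfs_pointer(content: bytes) -> str:
    """Build the small text pointer that Git commits in place of a large file."""
    oid = hashlib.sha256(content).hexdigest()
    return f"version {LFS_SPEC}\noid sha256:{oid}\nsize {len(content)}\n"


def parse_lfs_pointer(text: str) -> dict:
    """Parse the pointer's 'key value' lines back into a dict."""
    fields = {}
    for line in text.splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    return fields
```

However large the original file is, the pointer stays around a hundred bytes, which is why pushes and clones stay fast while the heavy content lives on the LFS server.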

Pros

- Easily integrates into the development workflows of most businesses.

- There is no need to manage extra permissions because it uses the same permissions as the Git repository.

Cons

- Git LFS requires dedicated servers to store your data, which can lock your data science teams in and increase your engineering workload.

- It is very specialized, so you may need a variety of other tools for later phases of the data science workflow.

Pricing

It is free to use for everyone.

2. LakeFS

LakeFS is an open-source data versioning solution that stores data in S3 or GCS and has a Git-like branching and committing paradigm that scales to petabytes.

This branching strategy makes your data lake ACID compliant: changes happen in separate branches that can be created, merged, and rolled back atomically and instantly.
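The branching idea can be illustrated with a toy in-memory model in Python. This is not the lakeFS API or its internals, and every name in it is hypothetical. Commits are immutable snapshots mapping object paths to content IDs, and branches are just named pointers to commits, so creating a branch copies no data and publishing a commit is a single pointer update that readers see all at once or not at all.

```python
import itertools
from types import MappingProxyType


class ToyDataRepo:
    """Hypothetical Git-like branches over a data lake (in memory only)."""

    def __init__(self):
        self._ids = itertools.count(1)
        self.commits = {}   # commit id -> (parent id, read-only snapshot, message)
        self.staging = {}   # branch -> uncommitted {path: content_id} writes
        self.branches = {"main": self._new_commit({}, None, "init")}

    def _new_commit(self, snapshot, parent, message):
        cid = f"c{next(self._ids)}"
        self.commits[cid] = (parent, MappingProxyType(dict(snapshot)), message)
        return cid

    def snapshot(self, branch):
        return self.commits[self.branches[branch]][1]

    def create_branch(self, name, source="main"):
        # Zero-copy: the new branch points at the same commit as its source.
        self.branches[name] = self.branches[source]

    def write(self, branch, path, content_id):
        # Writes are staged and stay invisible to readers until commit.
        self.staging.setdefault(branch, {})[path] = content_id

    def commit(self, branch, message):
        head = self.branches[branch]
        new = {**self.snapshot(branch), **self.staging.pop(branch, {})}
        # Moving the branch pointer is the only mutation readers can observe.
        self.branches[branch] = self._new_commit(new, head, message)
        return self.branches[branch]

    def merge(self, source, target="main"):
        # Simplified: source entries overwrite target entries; no conflict checks.
        merged = {**self.snapshot(target), **self.snapshot(source)}
        head = self.branches[target]
        self.branches[target] = self._new_commit(merged, head, f"merge {source}")
        return self.branches[target]

    def rollback(self, branch):
        # Roll back by pointing the branch at its parent commit.
        parent = self.commits[self.branches[branch]][0]
        if parent is None:
            raise ValueError("nothing to roll back")
        self.branches[branch] = parent
```

A real system also handles deletions, conflict detection, and object storage; the sketch keeps only the pointer-swapping that makes branch operations cheap and atomic.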

LakeFS enables teams to build data lake operations that are repeatable, atomic, and versioned. It’s a newcomer to the scene, but it’s a force to be reckoned with.

Its Git-like branching and version control model for working with your data lake is designed to scale from petabytes up to exabytes of data.

Pros

- Git-like operations include branching, committing, merging, and reverting.

- Pre-commit and pre-merge hooks enable data CI/CD checks.

- Provides advanced features such as ACID transactions on top of simple cloud storage like S3 and GCS, while remaining format-agnostic.

- Instantly revert changes to data.

- Scales readily to very large data lakes. Version control can be provided for both development and production environments.
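The pre-commit and pre-merge hooks mentioned above act as gatekeepers: checks run before new data is published, and a failing check blocks the operation. The Python below is a hypothetical sketch of that idea only; lakeFS wires up its real hooks through its own configuration, not through Python functions like these, and the table metadata shape used here is an assumption.

```python
from typing import Callable, Dict, List, Tuple

# A hook receives metadata for the tables about to be merged and returns problems.
Hook = Callable[[Dict[str, dict]], List[str]]


def require_columns(required: set) -> Hook:
    """Hypothetical check: every table must contain the required columns."""
    def hook(tables: Dict[str, dict]) -> List[str]:
        return [
            f"{path}: missing {sorted(required - set(meta['columns']))}"
            for path, meta in tables.items()
            if not required <= set(meta["columns"])
        ]
    return hook


def no_empty_tables(tables: Dict[str, dict]) -> List[str]:
    """Hypothetical check: refuse to publish tables with zero rows."""
    return [f"{path}: 0 rows" for path, meta in tables.items() if meta["rows"] == 0]


def run_pre_merge_hooks(tables: Dict[str, dict], hooks: List[Hook]) -> Tuple[bool, List[str]]:
    """Run every hook; the merge may proceed only if none reports a problem."""
    problems = [msg for hook in hooks for msg in hook(tables)]
    return len(problems) == 0, problems
```

Running all hooks and collecting every problem, rather than stopping at the first, gives the author of the change a complete list to fix in one pass.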

Cons

- Because LakeFS is a new product, its functionality and documentation may change more quickly than those of more established solutions.

- Because it focuses on data versioning, you will need additional tools for other parts of the data science workflow.

Pricing

It is free to use for everyone.

3. DVC

Data Version Control (DVC) is a free data versioning solution designed for data science and machine learning projects. It lets you define your pipeline in any language.

By managing large files, datasets, machine learning models, code, and so on, the tool makes machine learning models shareable and reproducible. Following Git’s lead, it provides a simple command line that can be set up in only a few steps.
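The core trick behind this kind of large-file management is a content-addressed cache: the heavy file is stored under its hash, and only a tiny pointer file goes into Git. DVC itself writes `.dvc` files and manages its own cache under the `.dvc/` directory; the Python below is a simplified, hypothetical sketch of the same idea, and its cache layout and `.ptr.json` pointer format are assumptions, not DVC’s actual formats.

```python
import hashlib
import json
import shutil
from pathlib import Path


def cache_add(data_file: str, cache_dir: str) -> str:
    """Copy a file into a content-addressed cache and write a small pointer file."""
    src = Path(data_file)
    # For brevity the whole file is read at once; a real tool would stream it.
    md5 = hashlib.md5(src.read_bytes()).hexdigest()
    # Shard by the first two hex characters to keep directories small.
    dest = Path(cache_dir) / md5[:2] / md5[2:]
    if not dest.exists():  # identical content is stored only once
        dest.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, dest)
    pointer = src.with_name(src.name + ".ptr.json")  # this is what you would commit
    pointer.write_text(json.dumps({"md5": md5, "size": dest.stat().st_size, "path": src.name}))
    return md5


def cache_checkout(pointer_file: str, cache_dir: str) -> None:
    """Restore the data file recorded in a pointer from the cache."""
    pointer = Path(pointer_file)
    meta = json.loads(pointer.read_text())
    cached = Path(cache_dir) / meta["md5"][:2] / meta["md5"][2:]
    shutil.copy2(cached, pointer.with_name(meta["path"]))
```

Switching Git branches changes which pointer files you have, and a checkout step like `cache_checkout` then brings the matching data back from the cache.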

Despite its name, DVC isn’t only about data versioning. It also helps teams manage pipelines and machine learning models.
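Pipeline management in DVC relies on recording hashes of each stage’s inputs (DVC keeps them in a `dvc.lock` file) so that `dvc repro` can skip stages whose inputs have not changed. The Python below is a hypothetical sketch of that skip-if-unchanged logic, not DVC’s implementation: a stage fingerprints its dependencies and parameters and reruns only when the fingerprint differs from the one stored on its last run.

```python
import hashlib
import json
from typing import Callable, Dict, List


def fingerprint(deps: Dict[str, bytes], params: dict) -> str:
    """Hash a stage's inputs: dependency contents plus its parameters."""
    h = hashlib.sha256()
    for name in sorted(deps):
        h.update(name.encode() + b"\0" + hashlib.sha256(deps[name]).digest())
    h.update(json.dumps(params, sort_keys=True).encode())
    return h.hexdigest()


class Stage:
    """Hypothetical pipeline stage that reruns only when its inputs change."""

    def __init__(self, name: str, fn: Callable[[Dict[str, bytes], dict], bytes]):
        self.name = name
        self.fn = fn
        self.lock = None    # fingerprint from the last successful run (lock-file analog)
        self.output = None

    def run(self, deps: Dict[str, bytes], params: dict, log: List[str]) -> bytes:
        fp = fingerprint(deps, params)
        if fp == self.lock:
            log.append(f"{self.name}: unchanged, skipped")
            return self.output
        self.output = self.fn(deps, params)
        self.lock = fp
        log.append(f"{self.name}: ran")
        return self.output
```

Because parameters are part of the fingerprint, changing only a hyperparameter such as a learning rate is enough to trigger a rerun, while rerunning with identical data and settings costs nothing.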

Finally, DVC helps make your team’s models more consistent and reproducible. Instead of relying on complicated file suffixes and comments in code, use Git branches to try out new ideas. To navigate between experiments, use automated metric tracking instead of pen and paper.

To move consistent bundles of machine learning models, data, and code into production, onto remote machines, or to a colleague’s computer, you can use push/pull commands instead of ad-hoc scripts.

Pros

- It’s lightweight, open-source, and works with all major cloud platforms and storage types.

- Flexible, agnostic of format and framework, and simple to implement.

- Every ML model’s entire evolution can be traced back to its source code and data.

Cons

- Pipeline management and DVC version control are tightly coupled. If your team already uses another data pipeline product, there will be redundancy.

- Because DVC is lightweight, your team may need to build additional features themselves to make it more user-friendly.

Pricing

It is free to use for everyone.

4. Delta Lake

Delta Lake is an open-source storage layer that improves data lake reliability. It supports ACID transactions and scalable metadata management in addition to streaming and batch data processing.
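Delta Lake gets its ACID guarantees and versioning from a transaction log: each commit records which data files were added or removed, and a table version is whatever you get by replaying the log up to that commit. The real log is a series of JSON commit files in a `_delta_log` directory (plus checkpoints); the Python below is a heavily simplified, hypothetical model of that replay, not Delta Lake’s code or file format.

```python
from typing import Dict, List, Set


class ToyTransactionLog:
    """Hypothetical Delta-style log: an ordered list of atomic commits,
    each a batch of 'add'/'remove' data-file actions."""

    def __init__(self):
        self.commits: List[List[Dict[str, str]]] = []

    def commit(self, actions: List[Dict[str, str]]) -> int:
        # Validate everything first, then append in one step, so a commit
        # is either fully recorded or not recorded at all (atomicity).
        for a in actions:
            if a.get("op") not in {"add", "remove"} or "path" not in a:
                raise ValueError(f"bad action: {a}")
        self.commits.append(list(actions))
        return len(self.commits) - 1  # the new table version

    def files_as_of(self, version: int) -> Set[str]:
        """Replay the log up to `version` to list the live data files."""
        if not 0 <= version < len(self.commits):
            raise IndexError(version)
        live: Set[str] = set()
        for actions in self.commits[: version + 1]:
            for a in actions:
                if a["op"] == "add":
                    live.add(a["path"])
                else:
                    live.discard(a["path"])
        return live
```

Rewriting a table becomes “remove the old files, add the new ones” in a single commit, and reading an older version (time travel) is just replaying fewer commits.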

It is compatible with Apache Spark APIs and runs on top of your existing data lake. Delta Sharing, billed as the industry’s first open protocol for secure data sharing, makes it simple to share data with other organizations regardless of the computing platforms they use.

Delta Lake handles petabytes of data with ease. Metadata is stored just like data, and users can retrieve it with the DESCRIBE DETAIL command. Delta Lake has a unified architecture that can read both streaming and batch data.

Upserts are simple with Delta Lake. Upserts (merges) into a Delta table work like the SQL MERGE statement: you can merge data from another DataFrame into your table, performing updates, inserts, and deletes in one operation.
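The semantics of such a merge can be sketched in plain Python on lists of rows. This is not the Delta Lake API; it only mimics the three MERGE clauses (delete when matched and flagged, update when matched, insert when not matched), and the `_delete` flag column is a hypothetical convention for this example.

```python
from typing import Dict, List


def merge_upsert(target: List[dict], source: List[dict], key: str = "id") -> List[dict]:
    """MERGE-style upsert of `source` rows into `target`, matched on `key`.

    WHEN MATCHED AND source._delete -> DELETE
    WHEN MATCHED                    -> UPDATE (overwrite the given columns)
    WHEN NOT MATCHED                -> INSERT
    """
    rows: Dict[object, dict] = {r[key]: dict(r) for r in target}  # copies; input untouched
    for src in source:
        k = src[key]
        values = {c: v for c, v in src.items() if c != "_delete"}
        if src.get("_delete"):
            rows.pop(k, None)       # deletes for unmatched keys are ignored
        elif k in rows:
            rows[k].update(values)  # update matched row
        else:
            rows[k] = values        # insert new row
    return list(rows.values())
```

One simplification: if the source contains the same key twice, the later row silently wins here, whereas a real MERGE rejects a target row matched by multiple source rows.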

Pros

- Adds capabilities such as ACID transactions and robust metadata management to your existing data storage solution.

- Delta Lake can effortlessly manage petabyte-scale tables with billions of partitions and files.

- Reduces the need for manual data version control and other data concerns, allowing developers to concentrate on developing products on top of their data lakes.

Cons

- Because it was designed for Spark and big data, Delta Lake is often overkill for smaller tasks.

- It requires a dedicated data format, which limits its flexibility and may make it incompatible with your existing formats.

Pricing

It is free to use for everyone.

5. Dolt

Dolt is a SQL database that you can fork, clone, branch, merge, push, and pull just like a Git repository. To improve the user experience of a version-controlled database, Dolt lets data and schema change together.

It’s an excellent tool for collaborating with your coworkers. You can connect to Dolt the same way you would connect to any other MySQL database and run queries or change the data with SQL commands.

Among data versioning tools, Dolt is unique: it is a full database, whereas some of the other solutions only version data. The software is still in its early stages, and the goal is to make it fully compatible with Git and MySQL in the near future.

All of the commands you’re familiar with from Git also work with Dolt. Git versions files; Dolt versions tables. Using the command-line interface, you can import CSV files, commit your changes, push them to a remote, and merge your teammates’ changes.
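“Dolt versions tables” means that diffs and merges happen at the level of rows keyed by a primary key rather than lines of text. Dolt computes these diffs inside the database (for example with `dolt diff`); the Python below is only a hypothetical sketch of what a row-level table diff between two versions means.

```python
from typing import Dict, Tuple

Row = Tuple
Table = Dict[object, Row]  # primary key -> row values


def diff_tables(old: Table, new: Table) -> dict:
    """Row-level diff between two versions of a table, keyed by primary key."""
    added = {k: new[k] for k in new.keys() - old.keys()}
    removed = {k: old[k] for k in old.keys() - new.keys()}
    modified = {
        k: (old[k], new[k])
        for k in old.keys() & new.keys()
        if old[k] != new[k]
    }
    return {"added": added, "removed": removed, "modified": modified}
```

Keying on the primary key is what lets a merge tell “Bob changed row 2” apart from “Carol inserted row 3”, even if the rows moved around in the underlying storage.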

Pros

- Lightweight and partially open source.

- Unlike more obscure options, it has a SQL interface, which makes it more accessible to data analysts.

Cons

- In comparison to other database versioning alternatives, Dolt is still a developing product.

- Since Dolt is a database, you must transfer your data into it to get the benefits.

Pricing

The community edition is free for everyone. The platform does not publish premium pricing; instead, you must contact the vendor.

6. Pachyderm

Pachyderm is a free, feature-rich version control system for data science. Pachyderm Enterprise is a powerful data science platform designed for large-scale collaboration in highly secure environments.

Pachyderm is one of the few full data science platforms on this list. Its goal is to provide a platform that manages the complete data lifecycle and makes it simple to reproduce the results of machine learning models. In this context, Pachyderm is known as “the Docker of Data”: it packages your execution environment in Docker containers, which makes it simple to reproduce the same results.

Data scientists and DevOps teams can deploy models with confidence thanks to the combination of versioned data with Docker. Thanks to an efficient storage system, petabytes of structured and unstructured data can be maintained while storage costs are kept to a minimum.

Across all pipeline stages, file-based versioning provides a complete audit trail for all data and artifacts, including intermediate outputs. These pillars drive many of the tool’s capabilities and help teams get the most out of it.
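The audit trail rests on provenance: every output is linked to the exact input commits and the container image that produced it. The Python below is a hypothetical sketch of such a provenance record, not Pachyderm’s data model; in particular, deriving the output ID from a hash of its inputs is an illustrative choice and not how Pachyderm assigns commit IDs. The repository names and image tag are made up.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class ProvenanceRecord:
    output_commit: str
    input_commits: Dict[str, str]  # input repo -> commit the output was built from
    image: str                     # container image that ran the code
    command: str


def output_commit_id(inputs: Dict[str, str], image: str, command: str) -> str:
    """Derive a deterministic ID from everything that produced an output."""
    payload = json.dumps({"inputs": inputs, "image": image, "cmd": command}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()[:12]


def record_run(inputs: Dict[str, str], image: str, command: str) -> ProvenanceRecord:
    return ProvenanceRecord(output_commit_id(inputs, image, command), dict(inputs), image, command)
```

Given any output, such a record answers “which data, which code image, which command?”, which is exactly what you need to reproduce or audit a result.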

Pros

- Because it is container-based, your data environments are portable and easy to move between cloud providers.

- Robust, with the ability to scale from small to extremely big systems.

Cons

- Because there are so many moving parts, such as the Kubernetes cluster needed to run even Pachyderm’s free edition, the learning curve is steeper.

- Pachyderm might be challenging to incorporate into a company’s existing infrastructure because of its many technological components.

Pricing

You can start with the free community edition; for the Enterprise edition, you have to contact the vendor.

7. Neptune

An ML metadata store, an important part of the MLOps stack, manages model-building metadata. Neptune serves as a centralized metadata store for any MLOps workflow.

You can track, visualize, and compare thousands of machine learning models in one place. It offers experiment tracking, a model registry, and model monitoring, along with a collaborative interface. It integrates with over 25 tools and libraries, including several model training and hyperparameter tuning tools.

You can sign up for Neptune without a credit card; a Gmail account is enough.
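To show what “tracking and comparing models” boils down to, here is a small, generic Python sketch of an experiment tracker: each run stores its parameters and metric series, and runs are compared on a chosen metric. This is not Neptune’s client API; the `Run` class and `best_run` function are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Run:
    """Hypothetical record of one training run."""
    run_id: str
    params: Dict[str, float]
    metrics: Dict[str, List[float]] = field(default_factory=dict)

    def log(self, name: str, value: float) -> None:
        # Append to a metric series, e.g. validation loss per epoch.
        self.metrics.setdefault(name, []).append(value)

    def last(self, name: str) -> float:
        return self.metrics[name][-1]


def best_run(runs: List[Run], metric: str, minimize: bool = True) -> Run:
    """Pick the run with the best final value of `metric`."""
    ranked = sorted(runs, key=lambda r: r.last(metric), reverse=not minimize)
    return ranked[0]
```

A hosted metadata store adds persistence, visualization, and sharing on top, but the underlying record per run is much like this one.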

Pros

- Integration with any pipeline, flow, codebase, or framework is simple.

- Real-time visualizations, an easy-to-use API, and quick support.

- With Neptune, you can make a “backup” of all of your experiments’ data in one location, which you can recover later.

Cons

- It is not entirely open source. The Individual plan would probably suffice for private use, although that access is limited to one month.

- It has a few minor design flaws.

Pricing

You can start with the Individual plan, which is free for everyone. Paid plans start at $150/month.

Conclusion

In this post, we discussed the best data versioning tools. As we’ve seen, each tool has its own set of features. Some are free, while others are paid. Some are well suited to small businesses, while others are better suited to large organizations.

Therefore, you should weigh the advantages and disadvantages and select the software that best fits your needs. We encourage you to try the free version before buying a premium product.
