Autonomous vehicles combine sensors and software to navigate, steer, and operate a range of vehicles, including motorcycles, automobiles, trucks, and drones.

Depending on their design, they may or may not need assistance from a human driver.

Fully autonomous cars can operate safely without human drivers. Some, like Google’s Waymo car, might not even have a steering wheel.

A partially autonomous vehicle, such as a Tesla, can assume complete control of the vehicle but may need a human driver to take over when the system is uncertain.

These cars span different degrees of automation, from lane guidance and braking assistance to fully independent, self-driving prototypes.

The goal of driverless automobiles is to reduce traffic congestion, emissions, and accident rates.

This is possible because autonomous vehicles are more adept at adhering to traffic regulations than people.

For a smooth drive, the vehicle needs certain information: its own location and that of any nearby objects, the shortest and safest path to the destination, and the ability to operate the driving system.

It’s crucial to understand when and how to carry out necessary tasks.

This article will cover a lot of ground, including the system architecture for autonomous cars, components required, and vehicular ad hoc networks (VANETs).

Components Required for Autonomous Vehicles

Today’s autonomous vehicles employ a variety of sensors, including cameras, GPS, inertial measurement units (IMUs), light detection and ranging (lidar), radio detection and ranging (radar), and sound navigation and ranging (sonar), along with 3D maps.

Together, these sensors and technologies analyze data in real-time to control the steering, acceleration, and braking.

The radar sensors help keep track of the positions of surrounding cars. Ultrasonic sensors assist the vehicle during parking.

Lidar works on a similar ranging principle but uses light. By reflecting light pulses off the environment around the automobile, lidar sensors can detect the edges of roadways and identify lane markings.

They also warn drivers of nearby obstacles, such as other vehicles, pedestrians, and bicycles.

Lidar measures the size and distance of everything around the car and creates a 3D map that allows the vehicle to view its surroundings and identify any risks.

It records information well in different types of ambient light, whether bright or dark, regardless of the time of day.

The automobile uses cameras, radar, and GPS antennas, together with lidar, to detect its surroundings and identify its location.

Cameras check for pedestrians, cyclists, automobiles, and other obstacles while also detecting traffic signals, reading road signs and markings, and keeping track of other vehicles.

However, cameras can struggle in dim or shadowy areas. By combining lidar, radar, cameras, GPS antennas, and ultrasonic sensors, an autonomous vehicle digitally maps the road in front of it and can see where it is going.

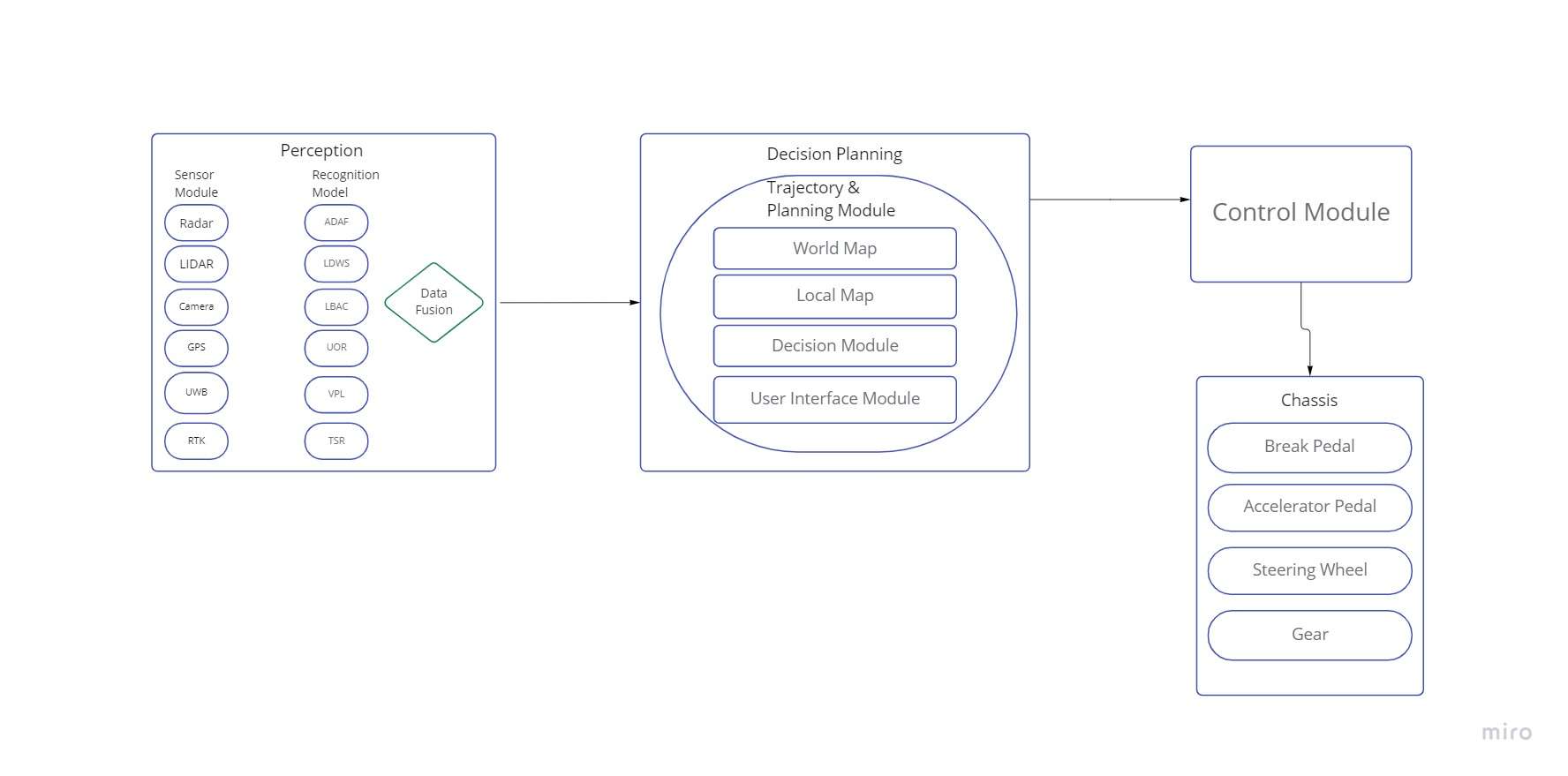

High-level System architecture

The architecture lists the essential sensors, actuators, hardware, and software, and shows the overall communication mechanism or protocol in autonomous vehicles (AVs).

Perception

This stage senses the environment around the AV with a variety of sensors and determines the AV’s location relative to that environment.

The AV uses RADAR, LIDAR, camera, real-time kinematic (RTK) positioning, and other sensors at this step. The data from these sensors is passed to the recognition modules, which process it.

In general, the AV consists of a control system, a lane departure warning system (LDWS), traffic sign recognition (TSR), unknown obstacles recognition (UOR), a vehicle positioning and localization (VPL) module, etc.

After processing, the combined information is passed to the decision and planning stage.

Decision & Planning

This stage decides, plans, and controls the movements and behavior of the AV using the information received from the perception stage.

It plays the role of the brain: this is where choices are made about path planning, action prediction, obstacle avoidance, and so on.

Each choice is based on both current and historical information, including real-time map data, traffic details, trends, and user information.

A data log module may also keep track of errors and data for later use.

Control

After receiving information from the decision and planning module, the control module executes the actions that physically control the AV, such as steering, braking, and accelerating.

Chassis

The last step involves interacting with the mechanical parts attached to the chassis, such as the gear motor, the steering wheel motor, and the pedal motors for the accelerator and brake.

The control module signals and manages all of these components.
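The four stages above can be pictured as a simple chain of functions. The following is only a minimal sketch, not a real AV stack: the class names, fields, thresholds, and the proportional gain are all hypothetical, chosen just to show how data could flow from perception through planning to the commands sent to the chassis actuators.

```python
from dataclasses import dataclass


@dataclass
class Perception:
    """Fused output of the perception stage (hypothetical fields)."""
    obstacle_distance_m: float  # distance to the nearest obstacle ahead
    lane_offset_m: float        # lateral offset from lane centre (+ = right)


@dataclass
class Plan:
    """Output of the decision and planning stage."""
    target_speed_mps: float
    steer_correction: float


@dataclass
class ActuatorCommand:
    """What the control stage sends to the chassis actuators."""
    throttle: float  # 0..1
    brake: float     # 0..1
    steering: float  # -1 (full left) .. 1 (full right)


def decide(p: Perception, cruise_speed_mps: float = 15.0,
           safe_gap_m: float = 20.0) -> Plan:
    # Stop when an obstacle is inside the (assumed) safe gap, otherwise cruise.
    speed = 0.0 if p.obstacle_distance_m < safe_gap_m else cruise_speed_mps
    # Steer back toward the lane centre.
    return Plan(target_speed_mps=speed, steer_correction=-p.lane_offset_m)


def control(plan: Plan, current_speed_mps: float) -> ActuatorCommand:
    error = plan.target_speed_mps - current_speed_mps
    gain = 0.1  # proportional gain, chosen arbitrarily for the sketch
    throttle = min(max(gain * error, 0.0), 1.0)
    brake = min(max(-gain * error, 0.0), 1.0)
    steering = min(max(plan.steer_correction, -1.0), 1.0)
    return ActuatorCommand(throttle, brake, steering)


# Perception -> decision and planning -> control -> chassis command
command = control(decide(Perception(obstacle_distance_m=10.0,
                                    lane_offset_m=0.5)),
                  current_speed_mps=10.0)
```

Keeping each stage behind its own small data type mirrors the layered architecture: each stage only needs to know the shape of the data it receives from the stage before it.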

Next, we look at the design, operation, and use of the key sensors before turning to how an AV communicates.

RADAR

In AVs, RADARs are used to scan the environment to find and locate automobiles and other objects.

RADARs operate in the millimeter-wave (mm-Wave) spectrum and are widely used in both military and civilian applications, such as airports and meteorological systems.

Contemporary automobiles use several frequency bands, including 24, 60, 77, and 79 GHz, with a measurement range of 5 to 200 m [10].

The distance between the AV and an object is determined by calculating the time of flight (ToF) between the transmitted signal and the returned echo.
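As a rough illustration of the ToF calculation: the signal travels to the object and back, so the range is half the round-trip time multiplied by the speed of light. The function name below is hypothetical, and the sketch ignores noise and all of the signal processing a real RADAR performs.

```python
SPEED_OF_LIGHT_MPS = 299_792_458.0  # radio waves travel at the speed of light


def radar_range_m(round_trip_s: float) -> float:
    """Distance to a target from the round-trip time of a radar echo."""
    # Divide by two because the signal covers the distance twice.
    return SPEED_OF_LIGHT_MPS * round_trip_s / 2.0


# An echo returning after one microsecond is roughly 150 m away.
distance = radar_range_m(1e-6)
```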

In AVs, RADARs use an array of micro-antennas that generate a set of lobes to improve range resolution and the detection of multiple targets. Thanks to its greater penetrability and wider bandwidth, mm-Wave RADAR can precisely measure close-range objects in any direction by exploiting variations in Doppler shift.

Because mm-Wave radars use a longer wavelength than optical sensors, they resist blockage and contamination, which lets them function in rain, snow, fog, and low light.

mm-Wave radars can also calculate relative velocity from the Doppler shift. These capabilities make them well suited to a wide range of AV applications, including obstacle detection and pedestrian and vehicle recognition.
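To make the Doppler relationship concrete: for a radar with carrier frequency f_c and a target moving at relative speed v (much slower than light), the echo is shifted by approximately f_d = 2 · v · f_c / c. The sketch below assumes that standard approximation and a sign convention where positive means the target is approaching; the function names are hypothetical.

```python
SPEED_OF_LIGHT_MPS = 299_792_458.0


def doppler_shift_hz(relative_speed_mps: float, carrier_hz: float) -> float:
    """Approximate Doppler shift of an echo from a moving target."""
    return 2.0 * relative_speed_mps * carrier_hz / SPEED_OF_LIGHT_MPS


def relative_speed_mps(doppler_hz: float, carrier_hz: float) -> float:
    """Invert the relation above to recover relative speed from the shift."""
    return doppler_hz * SPEED_OF_LIGHT_MPS / (2.0 * carrier_hz)


# A 77 GHz radar observing a target closing at 10 m/s sees a shift of
# a little over 5 kHz.
shift = doppler_shift_hz(10.0, 77e9)
speed = relative_speed_mps(shift, 77e9)
```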

Ultrasonic Sensors

These sensors emit ultrasonic waves in the 20–40 kHz range. The waves are produced by a magneto-resistive membrane and are used to gauge the distance to an object.

The distance is determined by calculating the time of flight (ToF) between the emitted wave and its echo. The typical range of ultrasonic sensors is less than 3 meters.
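The same echo-timing idea can be sketched for an ultrasonic sensor, this time using the speed of sound. The value of 343 m/s is an assumption (dry air at about 20 °C), the 3 m limit reflects the typical range quoted above, and the function name is hypothetical.

```python
SPEED_OF_SOUND_MPS = 343.0  # assumed: dry air at roughly 20 degrees Celsius
MAX_RANGE_M = 3.0           # typical upper range for ultrasonic sensors


def ultrasonic_range_m(round_trip_s: float):
    """Distance from echo time, or None if beyond the sensor's usable range."""
    distance = SPEED_OF_SOUND_MPS * round_trip_s / 2.0
    return distance if distance <= MAX_RANGE_M else None


near = ultrasonic_range_m(0.010)  # echo after 10 ms, within range
far = ultrasonic_range_m(0.020)   # echo after 20 ms, beyond 3 m
```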

The sensor output is refreshed every 20 ms, which is too slow to meet the strict quality-of-service (QoS) requirements of intelligent transportation systems (ITS). These sensors are directional and have a relatively narrow beam detection range.

Therefore, numerous sensors are required to obtain a full field of view. However, multiple sensors interfere with one another, which can result in significant ranging errors.

LiDAR

LiDAR operates at wavelengths of 905 nm and 1550 nm. Because the human eye is susceptible to retinal damage at 905 nm, current LiDAR operates in the 1550 nm band to reduce that risk.

LiDAR has a maximum working range of up to 200 meters. It is divided into three subcategories: 2D, 3D, and solid-state LiDAR.

In a 2D LiDAR, a single laser beam is swept by a rapidly spinning mirror. A 3D LiDAR places several lasers on the pod to acquire a 3D picture of the surroundings.
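A 2D LiDAR sweep can be thought of as a list of ranges taken at evenly spaced angles. As a minimal sketch, assuming that representation (the function and parameter names are hypothetical), the readings can be converted into x/y points in the sensor’s own frame, discarding anything beyond the 200 m working range:

```python
import math


def scan_to_points(ranges_m, angle_min_rad, angle_step_rad,
                   max_range_m=200.0):
    """Convert one 2D LiDAR sweep (polar readings) into Cartesian points."""
    points = []
    for i, r in enumerate(ranges_m):
        # Skip invalid or out-of-range returns.
        if not (0.0 < r <= max_range_m):
            continue
        theta = angle_min_rad + i * angle_step_rad
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


# Three beams at 0, 90, and 180 degrees; the last return is out of range.
points = scan_to_points([1.0, 2.0, 300.0], 0.0, math.pi / 2)
```

A 3D LiDAR adds an elevation angle per laser, so each return would become an (x, y, z) point instead.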

A roadside LiDAR system has been shown to lower the number of vehicle-to-pedestrian (V2P) collisions in both intersection and non-intersection zones.

That system employs a 16-line, real-time, computationally efficient LiDAR.

It uses a deep auto-encoder artificial neural network (DA-ANN), which achieves an accuracy of 95% within a 30 m range.

It has also been demonstrated that a support vector machine (SVM)-based algorithm combined with a 64-line 3D LiDAR can improve pedestrian recognition.

Although LiDAR offers better measurement precision and 3D vision than mm-Wave radar, it performs worse in adverse weather such as fog, snow, and rain.

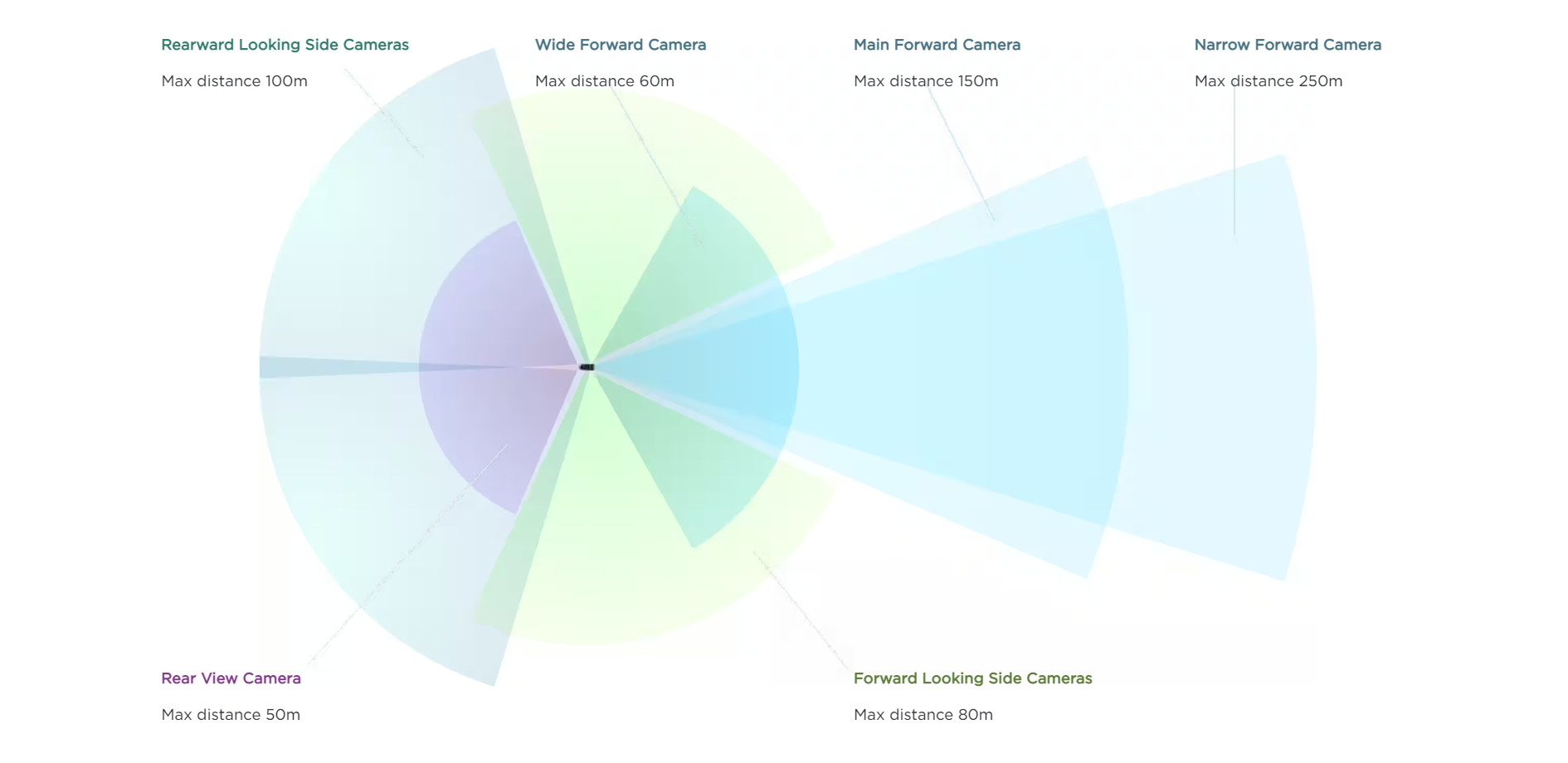

Cameras

Depending on the wavelength they sense, cameras in AVs are either infrared or visible-light based.

The camera uses charge-coupled device (CCD) or complementary metal-oxide-semiconductor (CMOS) image sensors.

Depending on the lens quality, the camera’s maximum range is around 250 m. Visible (VIS) cameras cover the same wavelength range as the human eye, 400–780 nm, divided into three bands: red, green, and blue (RGB).

To obtain stereoscopic vision, two VIS cameras with known focal lengths are paired to create a new channel that contains depth (D) information.

This capability gives the camera (RGB-D) a 3D view of the area surrounding the vehicle.
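The depth channel of a stereo pair is commonly derived from disparity, that is, how far a feature shifts between the left and right images. As a hedged sketch, assuming an idealized, rectified pair of identical pinhole cameras, depth is Z = f · B / d, where f is the focal length in pixels, B is the baseline between the cameras, and d is the disparity in pixels. The function name and example numbers are hypothetical.

```python
def depth_from_disparity(focal_px: float, baseline_m: float,
                         disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair (pinhole model)."""
    if disparity_px <= 0.0:
        # Zero disparity means the point is effectively at infinity.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Assumed example: 700 px focal length, 12 cm baseline, 8.4 px disparity.
depth_m = depth_from_disparity(700.0, 0.12, 8.4)
```

Because depth is inversely proportional to disparity, distant objects produce very small disparities, which is why stereo depth accuracy falls off with range.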

The infrared (IR) camera uses passive sensors operating at wavelengths between 780 nm and 1 mm. The IR sensors in AVs provide vision control in peak illumination.

This camera helps AVs with object recognition, side view control, accident recording, and blind spot detection (BSD). However, the camera’s performance varies in adverse weather, such as snow and fog, and under changing light conditions.

A camera’s primary benefit is its ability to precisely gather and record the texture, color distribution, and shape of the environment.

Global Navigation Satellite System and Global Positioning System, Inertial Measurement Unit

This technology helps the AV navigate by pinpointing its precise location. GNSS localizes the vehicle using a constellation of satellites orbiting the Earth.

The system maintains data on the AV’s location, speed, and precise time.

It works by calculating the ToF between a satellite’s emission of a signal and its reception. The AV’s location is usually obtained as Global Positioning System (GPS) coordinates.

The GPS-extracted coordinates are not always precise, and they typically carry a positional error with a mean value of 3 m and a standard deviation of 1 m.

In urban environments, performance degrades further, with position errors of up to 20 m, and in some extreme cases the GPS position error is approximately 100 m.

Additionally, AVs can employ the RTK system to precisely determine the position of the vehicle.

In AVs, the position and orientation of the vehicle can also be estimated using dead reckoning (DR) and inertial measurements.
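Dead reckoning advances the last known pose using the vehicle’s own motion measurements rather than an external fix. A minimal sketch, assuming a flat 2D world, a speed reading (for example from wheel odometry), and a yaw rate (for example from the IMU); the function name and the simple Euler integration are illustrative choices:

```python
import math


def dead_reckon(x_m, y_m, heading_rad, speed_mps, yaw_rate_rps, dt_s):
    """Advance a 2D pose by one time step using speed and yaw rate."""
    # Move along the current heading, then apply the heading change.
    x_new = x_m + speed_mps * math.cos(heading_rad) * dt_s
    y_new = y_m + speed_mps * math.sin(heading_rad) * dt_s
    heading_new = heading_rad + yaw_rate_rps * dt_s
    return x_new, y_new, heading_new


# Drive straight along the x axis at 10 m/s for one second.
pose = dead_reckon(0.0, 0.0, 0.0, 10.0, 0.0, 1.0)
```

Because every step adds a little measurement error, the estimate drifts over time, which is one reason DR is combined with GNSS rather than used alone.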

Sensor Fusion

For proper vehicle management and safety, AVs need precise, real-time knowledge of their location, status, and other vehicle factors such as weight, stability, and velocity.

The AVs must gather this information using a variety of sensors.

Sensor fusion merges the data acquired from several sensors to produce coherent information.

The technique allows raw data acquired from complementary sources to be combined.

As a result, sensor fusion enables the AV to accurately comprehend its surroundings by merging all the useful data gathered from various sensors.

Different types of algorithms, including Kalman filters and Bayesian filters, are used to carry out the fusion process in AVs.

The Kalman filter is considered crucial for a vehicle to operate autonomously because it is used in several applications, including RADAR tracking, satellite navigation systems, and visual odometry.
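To give a feel for how a Kalman filter fuses two sources, here is a deliberately simplified one-dimensional sketch. It predicts the vehicle’s position along the road from a motion estimate (such as a dead-reckoning displacement) and then corrects it with a noisy position measurement (such as GPS). The function names and noise values are assumptions for illustration; real AV filters track many more state variables.

```python
def kalman_step(x, p, u, z, q, r):
    """One predict/update cycle of a scalar Kalman filter.

    x, p: previous position estimate and its variance
    u:    predicted displacement since the last step (motion estimate)
    z:    new position measurement
    q, r: process and measurement noise variances
    """
    # Predict: move the estimate and grow its uncertainty.
    x_pred = x + u
    p_pred = p + q
    # Update: weight the measurement by the Kalman gain.
    k = p_pred / (p_pred + r)
    x_new = x_pred + k * (z - x_pred)
    p_new = (1.0 - k) * p_pred
    return x_new, p_new


def fuse_track(measurements, displacements, x0, p0, q=0.1, r=1.0):
    """Run the filter over a sequence and return the position estimates."""
    x, p = x0, p0
    estimates = []
    for u, z in zip(displacements, measurements):
        x, p = kalman_step(x, p, u, z, q, r)
        estimates.append(x)
    return estimates


# Assumed data: the vehicle moves about 1 m per step; GPS readings are noisy.
estimates = fuse_track([1.2, 1.9, 3.3], [1.0, 1.0, 1.0], x0=0.0, p0=1.0)
```

The gain `k` is what does the fusing: when the measurement noise `r` is large relative to the predicted uncertainty, the filter trusts the motion model more, and vice versa.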

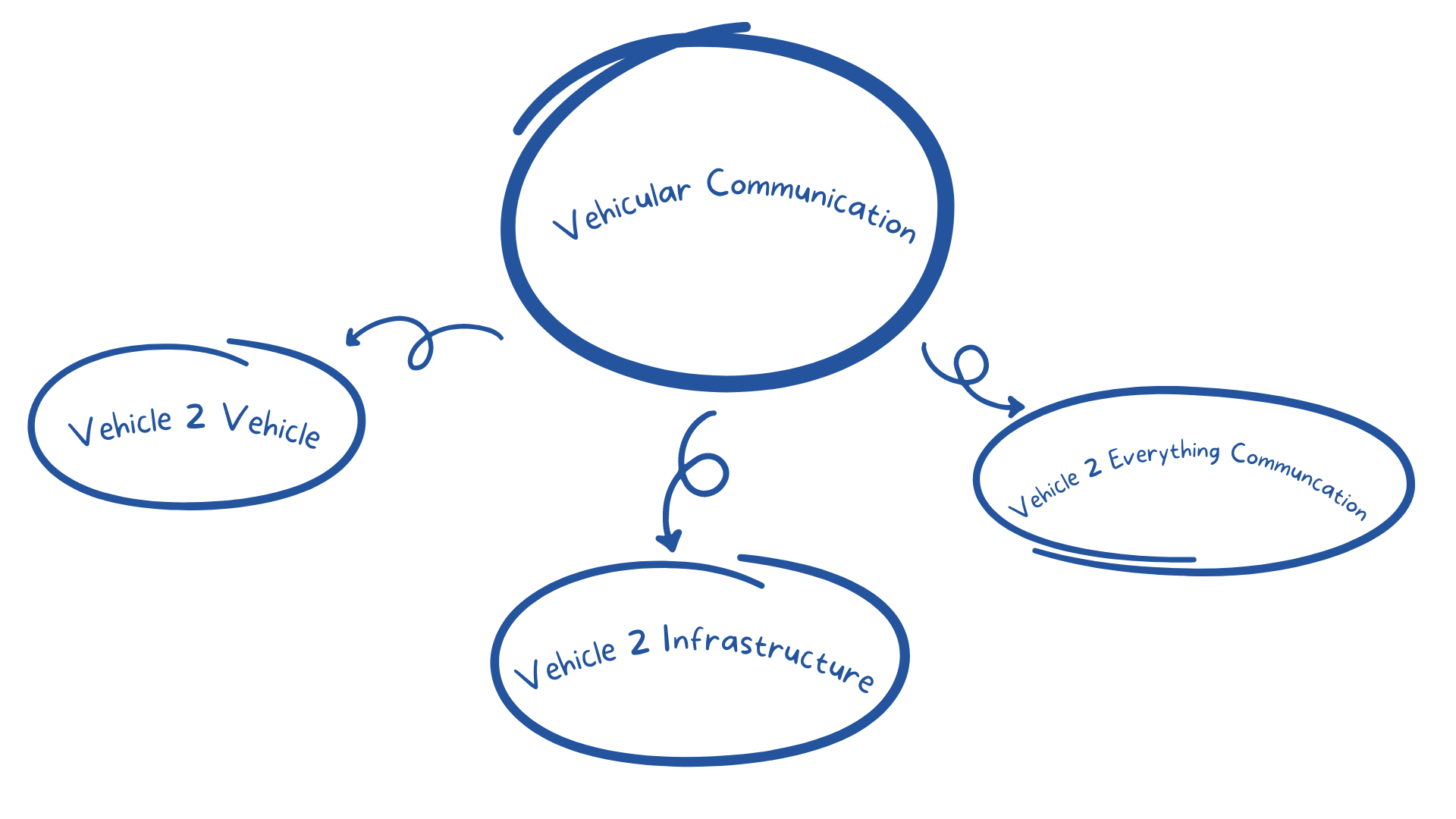

Vehicular Ad-Hoc Networks (VANETs)

VANETs are a new subclass of mobile ad hoc networks that can spontaneously create a network of mobile devices/vehicles. Vehicle-to-vehicle (V2V) and vehicle-to-infrastructure (V2I) communication are possible with VANETs.

The primary goal of such technology is to increase road safety; for example, in dangerous situations such as accidents and traffic jams, cars can interact with each other and the network to relay crucial information.

The following are the primary components of VANET technology:

- On-Board Unit (OBU): A GPS-based tracking device installed in each vehicle that allows vehicles to communicate with one another and with roadside units (RSUs). To obtain essential information, the OBU is equipped with several electronic components, including a resource command processor (RCP), sensor devices, and user interfaces. Its primary purpose is to communicate with RSUs and other OBUs over a wireless network.

- Roadside Unit (RSU): RSUs are fixed computing units positioned at specific points on streets, in parking lots, and at junctions. Their main purpose is to link autonomous vehicles to the infrastructure, and they also help with vehicle localization. An RSU can also link a vehicle to other RSUs using various network topologies. RSUs have also been powered by ambient energy sources such as solar power.

- Trusted Authority (TA): The body that controls every step of the VANET process, ensuring that only legitimate RSUs and vehicle OBUs can register and communicate. It provides security by verifying the OBU ID and authenticating the vehicle. It also detects malicious messages and suspicious behavior.

VANETs are used for vehicular communication, which includes V2V, V2I, and V2X communication.

Vehicle-to-Vehicle Communication

The ability for automobiles to talk with one another and exchange crucial information concerning traffic congestion, accidents, and speed restrictions is known as inter-vehicle communication (IVC).

V2V communication forms a network by connecting nodes (vehicles) in a partial or full mesh topology.

These systems are categorized as single-hop (SIVC) or multi-hop (MIVC) depending on how many hops are used for inter-vehicle communication.

SIVC suits short-range applications such as lane merging and adaptive cruise control (ACC), while MIVC can be used for long-range communication such as traffic monitoring.
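The single-hop versus multi-hop distinction can be illustrated by counting hops between two vehicles in a mesh. This is only a sketch: the mesh is modelled as a plain dictionary of direct radio links, the vehicle names are made up, and a breadth-first search stands in for whatever routing a real VANET would use.

```python
from collections import deque


def hop_count(links, src, dst):
    """Fewest hops from src to dst over direct links, or None if unreachable."""
    if src == dst:
        return 0
    seen = {src}
    queue = deque([(src, 0)])
    while queue:
        node, hops = queue.popleft()
        for neighbour in links.get(node, ()):
            if neighbour == dst:
                return hops + 1
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append((neighbour, hops + 1))
    return None


def classify(links, src, dst):
    """Label a route as SIVC (one hop at most) or MIVC (several hops)."""
    hops = hop_count(links, src, dst)
    if hops is None:
        return "unreachable"
    return "SIVC" if hops <= 1 else "MIVC"


# Partial mesh: A and C are out of direct range and must relay through B.
mesh = {"A": ["B"], "B": ["A", "C"], "C": ["B"]}
route_ab = classify(mesh, "A", "B")
route_ac = classify(mesh, "A", "C")
```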

V2V communication supports numerous safety features, including BSD, forward collision warning systems (FCWS), automated emergency braking (AEB), and LDWS.

Vehicle-to-Infrastructure Communication

The automobiles can communicate with the RSUs through a process known as roadside-to-vehicle communication (RVC). It aids in the detection of parking meters, cameras, lane markers, and traffic signals.

The connection between the cars and the infrastructure is ad hoc, wireless, and bidirectional.

The infrastructure’s data is used for traffic management and supervision. It is used to adjust speed parameters that allow the cars to maximize fuel economy and to manage traffic flow.

Depending on the infrastructure, the RVC system can be divided into Sparse RVC (SRVC) and Ubiquitous RVC (URVC).

The SRVC system only offers communication services at hotspots, such as to locate open parking spaces or petrol stations, whereas the URVC system offers coverage along the whole route, even at high speeds.

In order to guarantee network coverage, the URVC system necessitates a large investment.

Vehicle-to-Everything Communication

Through V2X, the car can connect with other entities, including pedestrians (V2P), roadside objects (V2R), devices (V2D), and the grid (V2G).

Using this kind of communication, drivers can avoid hitting vulnerable road users such as pedestrians, cyclists, and motorcycle riders.

Thanks to V2X communication, the Pedestrian Collision Warning (PCW) system can warn the driver of a roadside pedestrian before a catastrophic collision occurs.

To send the pedestrian important messages, the PCW can take advantage of the smartphone’s Bluetooth or Near Field Communication (NFC).

Conclusion

The many technologies utilized to construct autonomous cars can have a big impact on how they operate.

At its most basic, the car develops a map of its surroundings using an array of sensors that provide information about the route around it and other vehicles in its path.

This data is then analyzed by a complex machine-learning system, which generates a set of actions for the car to execute. These actions are continually revised and updated as the system learns more about the vehicle’s surroundings.

I have tried to give you an overview of the autonomous vehicle system architecture, but there is a lot more going on behind the scenes.

I genuinely hope you will find this knowledge valuable and make use of it.
