
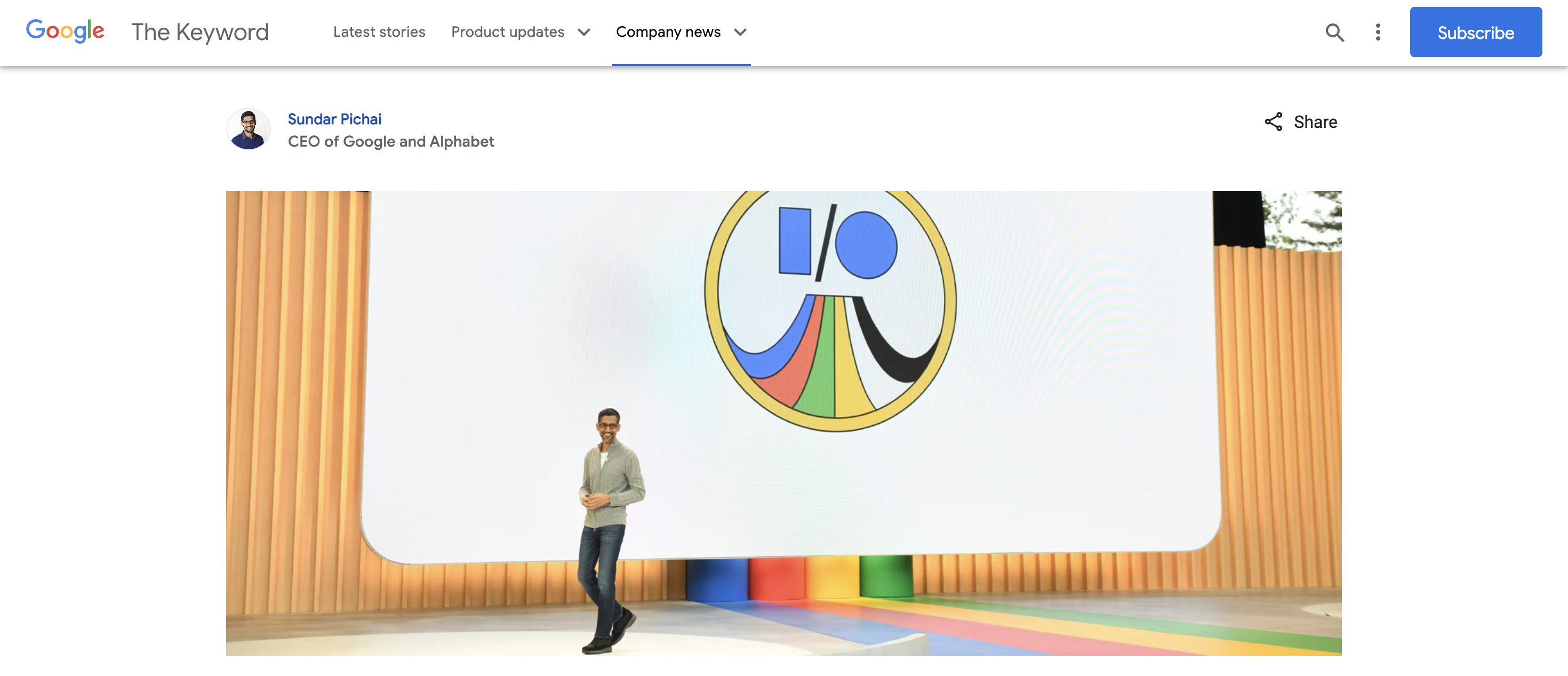

Google I/O 2023 was quite thrilling! Google presented several important advances in artificial intelligence.

One of the most significant reveals was Gemini, Google’s answer to OpenAI’s GPT-4. Gemini is Google’s next-generation large language model (LLM), the successor to its existing PaLM 2 system.

Google has also made its machine-learning models more sophisticated. To compete with Microsoft’s improvements to Bing, the company is adding generative AI capabilities to Android and boosting Google Search with AI.

To make Bard more competitive with chatbots such as ChatGPT, Google announced a significant upgrade for it during the event. Bard will eventually support 40 more languages, including Korean and Japanese. With this expansion, Bard will be able to support developers around the world.

Additionally, to improve its replies to user requests, Bard will now include pictures, maps, and other graphics in its responses, which should be a real help to developers. Here, we’ll look at what is known so far about Gemini.

What is Gemini?

According to Google, Gemini was built from the ground up to be multimodal, highly efficient at integrating with tools and APIs, and ready for future advancements such as memory and planning. Google stated in its blog post that Gemini already shows multimodal capabilities that prior models did not have.

“Once we fine-tune and thoroughly test Gemini for safety, we will offer it in various sizes and capabilities, similar to PaLM 2,” Google said. “As a result, it can be implemented across multiple products, apps, and devices to benefit everyone.”

At the conference, Google also presented PaLM 2, a cutting-edge language model with expanded multilingual, reasoning, and coding capabilities. It was trained extensively on multilingual text from over 100 languages.

PaLM 2 can produce and translate subtle content such as idioms, poetry, and riddles in a variety of languages.

Gemini is likely to boost Google’s AI efforts and challenge the pioneer, OpenAI’s ChatGPT. While ChatGPT is mostly used for text-based conversations, Gemini is multimodal, meaning it can respond to both text and visuals. Once integrated with Google Search, it has the potential to transform how consumers interact with the popular search engine.

Although further details about Gemini are not yet available, it could outperform ChatGPT and Bing AI, potentially propelling Google to the head of the AI field.

Sundar Pichai, CEO of Google, remarked during the conference, “After seven years of being an AI-first company, we find ourselves at an exciting turning point.”

According to Pichai, Gemini is still in training and is being built with a multimodal approach, with the goal of being highly efficient and opening the door to future advances like memory and planning. Although it is still in its early phases, he said, Gemini is already showing multimodal capabilities that were absent from earlier models.

Google is training Gemini on its Tensor Processing Unit (TPU) chips. Pichai stated that once Gemini has been fine-tuned and has passed safety testing, it will be available in a range of sizes and capabilities, though he gave no specific release date.

Pichai also made it clear that all of Google’s AI models will incorporate watermarking and metadata in outputs such as images to help prevent the spread of misinformation.

What makes Gemini superior to ChatGPT and BingAI?

Gemini has several intriguing “multimodal” characteristics. ChatGPT can only read and produce text, whereas Gemini is built on a multimodal approach and is meant to comprehend and produce text, code, and images.

This wider range of skills opens up numerous opportunities. Gemini could, for instance, be used to create a new class of AI chatbots that understand and react to both text and visuals.

By contrast, both ChatGPT and Bing offer only text-based conversation. Bing provides a separate link for creating images but lacks in-chat image support.

Compared with ChatGPT, Gemini is intended to serve a wider variety of products and applications. It could be used, for example, to upgrade Google Search or to create a cutting-edge AI-powered virtual assistant. Bing AI and ChatGPT lack this kind of product integration, although ChatGPT does offer plugins that enhance its results.

Gemini is also being designed with future capabilities like memory and planning in mind, which could allow the development of AI-powered apps that go beyond what ChatGPT is capable of.

Exciting possibilities arise when you consider having a personal assistant powered by Gemini that keeps track of your preferences and helps with daily planning. To see Gemini’s full potential and explore the opportunities it opens up, however, we must first wait for its public release.

Conclusion

Gemini, Google’s next-generation language model, has shown outstanding multimodal features, making it more adaptable than ChatGPT, its text-only rival.

By enabling chatbots and AI apps to read and produce text, code, and images, Gemini could let them handle a larger range of tasks. ChatGPT and Bing AI, by contrast, are limited to text-based interactions.

Although more specific information regarding Gemini has not yet been made public, it is clear that Google is committed to advancing AI technology and maintaining its lead in the field.

We anticipate seeing Gemini’s full potential and the creative possibilities it opens up as we excitedly await its formal launch.
