Weighing all of the available services and architectural options for a data platform can be difficult.

An enterprise data platform often consists of data warehouses, data models, data lakes, and reports, each with its own purpose and required skill set. Over the last few years, however, a new design has emerged: the data lakehouse.

A “data lakehouse” is a data storage architecture that combines the versatility of a data lake with the data management capabilities of a data warehouse.

This post examines the data lakehouse in depth, including its components, features, architecture, and other aspects.

What is a Data Lakehouse?

As the name implies, a data lakehouse is a type of data architecture that combines a data lake with a data warehouse to address the shortcomings each has on its own.

In essence, the lakehouse system uses inexpensive storage to keep massive amounts of data in their original formats, much like a data lake. A metadata layer on top of that storage gives the data structure and enables data management tools similar to those found in data warehouses.

It stores the enormous volumes of structured, semi-structured, and unstructured data that an organization collects from the different business applications, systems, and devices it uses.

In most cases, data lakes use low-cost storage infrastructure with a file application programming interface (API) to store data in open, generic file formats.

This makes it possible for many teams to access all of the company data through a single system for a variety of initiatives, such as data science, machine learning, and business intelligence.

Features

- Low-cost storage: A data lakehouse must be able to store data in inexpensive object storage, such as Amazon Simple Storage Service (S3), Azure Blob Storage, or Google Cloud Storage, using open file formats such as ORC or Parquet.

- Capability for data optimization: A data lakehouse must be able to optimize data, for example through data layout optimization, caching, and indexing, while keeping the data in its original format.

- A layer of transactional metadata: Sitting on top of the underlying low-cost storage, this layer enables the data management capabilities that are crucial for data warehouse performance.

- Support for the declarative DataFrame API: The majority of AI tools can use DataFrames to retrieve raw data from the object store. Support for a declarative DataFrame API makes it possible to dynamically optimize the data’s presentation and structure for a particular data science or AI task.

- Support for ACID transactions: ACID stands for atomicity, consistency, isolation, and durability, the properties that define a transaction and ensure the consistency and reliability of data. Such transactions were previously only possible in data warehouses, but the lakehouse makes them available on data lakes as well. When several data pipelines read and write data concurrently, this addresses the data quality problems that data lakes otherwise suffer from.
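To make the data optimization feature in the list above more concrete, here is a minimal sketch of one common idea, data skipping: the metadata layer keeps minimum and maximum values per file so that a query can ignore files that cannot contain matching rows. The `FILE_STATS` entries, the file names, and the `files_to_scan` helper are invented for illustration and do not reflect any particular product's metadata format.

```python
# Hypothetical per-file statistics that a metadata layer might keep.
# ISO-formatted dates compare correctly as plain strings.
FILE_STATS = [
    {"path": "part-0.parquet", "min_date": "2023-01-01", "max_date": "2023-01-31"},
    {"path": "part-1.parquet", "min_date": "2023-02-01", "max_date": "2023-02-28"},
    {"path": "part-2.parquet", "min_date": "2023-03-01", "max_date": "2023-03-31"},
]


def files_to_scan(stats, date):
    """Return only the files whose [min, max] range could contain `date`."""
    return [s["path"] for s in stats if s["min_date"] <= date <= s["max_date"]]


# A query for a single day only needs to open one of the three files.
needed = files_to_scan(FILE_STATS, "2023-02-15")
```

The data files themselves are never rewritten into a proprietary format here; only the small statistics table is consulted, which is the point of optimizing "while keeping the original format".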

Elements of Data Lakehouse

At a high level, the architecture of the data lakehouse is divided into two main tiers. The lakehouse platform manages the ingestion of data into the storage layer (i.e., the data lake).

Without needing to load the data into a data warehouse or convert it into a proprietary format, the processing layer is then able to query the data in the storage layer directly using a range of tools.

BI apps, as well as AI and ML technologies, can then use the data. This design provides the economics of a data lake, and because any processing engine can read the data, businesses are free to make the prepared data available for analysis by a range of systems. Processing and analyzing data this way can improve both performance and cost.

The architecture also allows many parties to read and write data within the system at the same time, because it supports database transactions that adhere to the ACID (atomicity, consistency, isolation, and durability) principles:

- Atomicity means that a transaction either succeeds in full or not at all. If a process is interrupted, this helps avoid data loss or corruption.

- Consistency guarantees that transactions occur in a predictable, consistent manner. It maintains the integrity of the data by ensuring that all data is valid according to predefined rules.

- Isolation ensures that no transaction can be affected by any other transaction in the system until it has completed. This allows numerous parties to read from and write to the same system simultaneously without interfering with each other.

- Durability guarantees that changes made to the data persist once a transaction is complete, even in the event of a system failure. Any changes resulting from a transaction are stored permanently.
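One way to picture how ACID behavior can be layered on top of plain files is a commit log in which each table version is a numbered file. The sketch below assumes a hypothetical `TinyTable` class and `_log` directory layout; it is not the format of Delta Lake or any other real system, and it relies on exclusive file creation on a local filesystem as a stand-in for an atomic "put if absent" operation.

```python
import json
import os
import tempfile


class TinyTable:
    """Hypothetical table whose state is defined by numbered commit files."""

    def __init__(self, root):
        self.log_dir = os.path.join(root, "_log")
        os.makedirs(self.log_dir, exist_ok=True)

    def latest_version(self):
        versions = [int(name.split(".")[0]) for name in os.listdir(self.log_dir)]
        return max(versions, default=-1)

    def commit(self, read_version, added_files):
        """Publish version read_version + 1, or return False if it already exists."""
        path = os.path.join(self.log_dir, f"{read_version + 1:08d}.json")
        try:
            # Mode "x" fails if the file exists, so only one of two writers
            # that started from the same version can win (isolation).
            # A production system would also have to make sure readers
            # never observe a half-written commit file.
            with open(path, "x") as f:
                json.dump({"add": added_files}, f)
        except FileExistsError:
            return False
        return True

    def snapshot(self, version=None):
        """List the data files visible at a given version (default: latest)."""
        if version is None:
            version = self.latest_version()
        files = []
        for v in range(version + 1):
            with open(os.path.join(self.log_dir, f"{v:08d}.json")) as f:
                files.extend(json.load(f)["add"])
        return files


table = TinyTable(tempfile.mkdtemp())
first = table.commit(-1, ["a.parquet"])     # succeeds: creates version 0
conflict = table.commit(-1, ["b.parquet"])  # loses the race for version 0
retry = table.commit(0, ["b.parquet"])      # retried on top of version 0
```

A writer whose commit is rejected re-reads the latest version and tries again, which is a simple form of optimistic concurrency control. Readers only ever see files that a completed commit has listed.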

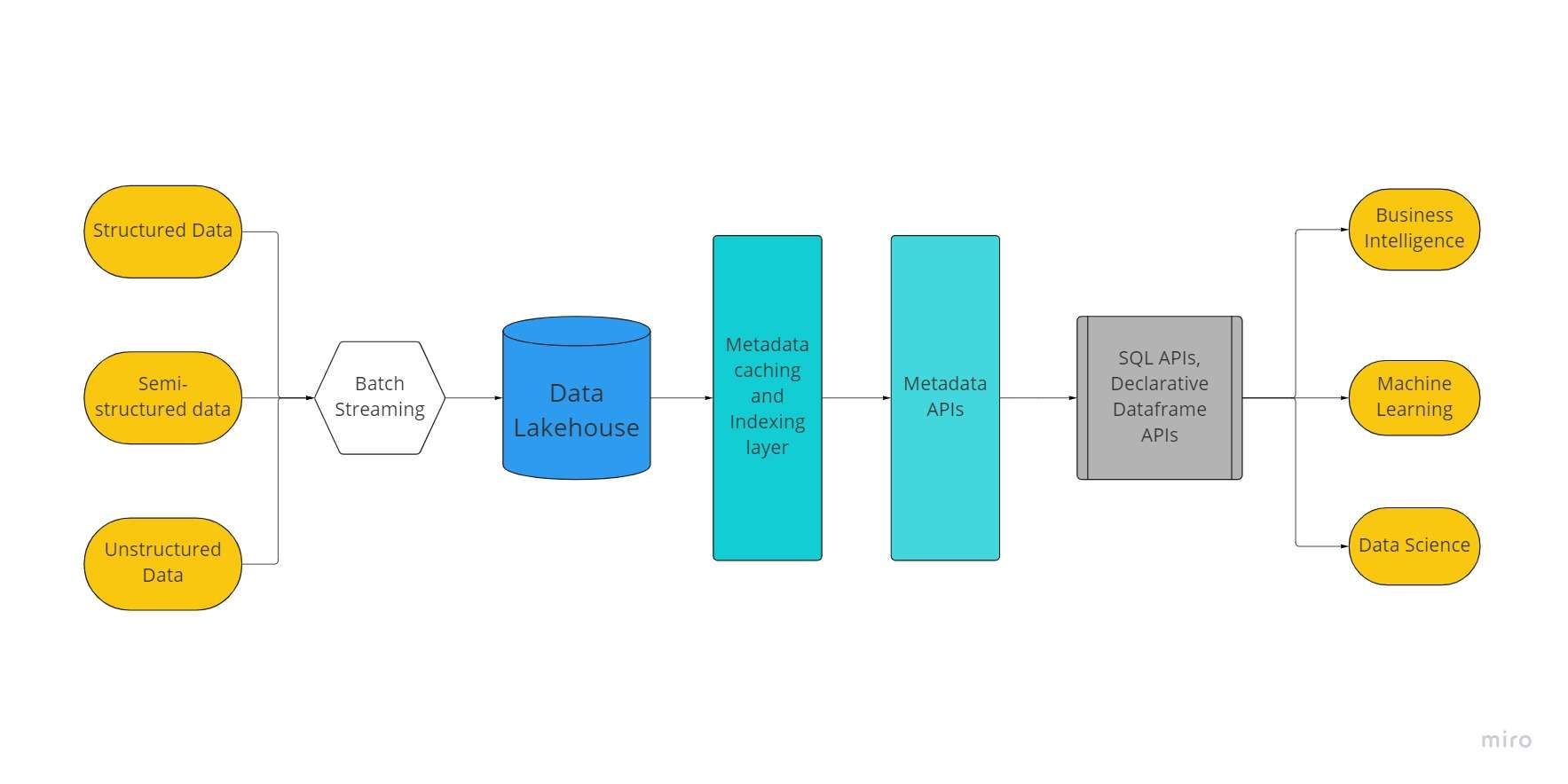

Data Lakehouse Architecture

Databricks (the creator of Delta Lake) and AWS are two of the main advocates of the data lakehouse concept. We will therefore rely on their knowledge and insight to describe the architectural layout of lakehouses.

A data lakehouse system will typically have five layers:

- Ingestion layer

- Storage layer

- Metadata layer

- API layer

- Consumption layer

Ingestion layer

The system’s first layer is in charge of collecting data from various sources and delivering it to the storage layer. The layer can use several protocols, and combine batch and streaming data processing capabilities, to connect to numerous internal and external sources, such as

- NoSQL databases,

- file shares,

- CRM applications,

- websites,

- IoT sensors,

- social media,

- Software as a Service (SaaS) applications, and

- relational database management systems.

At this point, components like Apache Kafka for data streaming and AWS Database Migration Service (AWS DMS) for importing data from RDBMSs and NoSQL databases can be employed.
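As a rough illustration of how one ingestion layer can serve both batch and streaming sources, the sketch below lands records as JSON Lines files under a per-source prefix. The `land` and `ingest_stream` functions, the `raw/<source>/` layout, and the local temporary directory standing in for an object store are all assumptions made for this example. It does not use the Kafka or AWS DMS APIs.

```python
import json
import os
import tempfile
import uuid


def land(root, source, records):
    """Write one batch of records as a JSON Lines file under the source's prefix."""
    prefix = os.path.join(root, "raw", source)
    os.makedirs(prefix, exist_ok=True)
    path = os.path.join(prefix, f"{uuid.uuid4().hex}.jsonl")
    with open(path, "w") as f:
        for record in records:
            f.write(json.dumps(record) + "\n")
    return path


def ingest_stream(root, source, events, batch_size=2):
    """Group an iterator of events into micro-batches and land each one."""
    paths, buffer = [], []
    for event in events:
        buffer.append(event)
        if len(buffer) >= batch_size:
            paths.append(land(root, source, buffer))
            buffer = []
    if buffer:  # flush the final, partially filled batch
        paths.append(land(root, source, buffer))
    return paths


lake_root = tempfile.mkdtemp()  # stand-in for an object store bucket
batch_path = land(lake_root, "crm", [{"id": 1}, {"id": 2}])
stream_paths = ingest_stream(lake_root, "iot", iter([{"t": 1}, {"t": 2}, {"t": 3}]))
```

Both paths end in the same `land` call, so downstream layers see batch and streaming data in one consistent layout.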

Storage layer

The lakehouse architecture is meant to store various types of data as objects in inexpensive object stores, such as Amazon S3. Client tools can then read these objects directly from the store using open file formats.

This makes it possible for many APIs and consumption layer components to access and utilize the same data. The metadata layer stores the schemas for structured and semi-structured datasets so that the components can apply them to the data as they read it.
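The idea that a reader applies a schema from the metadata layer while reading can be sketched in a few lines. The `CATALOG` dictionary, the `orders` table, and the `read_with_schema` function are hypothetical, and CSV text stands in for a stored object to keep the example dependency-free.

```python
import csv
import io

# Hypothetical catalog entry: column name -> Python type used for casting.
CATALOG = {"orders": {"order_id": int, "amount": float, "country": str}}

# Stand-in for the contents of one object in the storage layer.
RAW_OBJECT = "order_id,amount,country\n1,19.99,DE\n2,5.00,US\n"


def read_with_schema(table, raw_text):
    """Parse raw text and cast each column using the catalog's schema."""
    schema = CATALOG[table]
    rows = []
    for row in csv.DictReader(io.StringIO(raw_text)):
        rows.append({col: cast(row[col]) for col, cast in schema.items()})
    return rows


orders = read_with_schema("orders", RAW_OBJECT)
```

The stored object stays untyped text; the types live only in the catalog, so different tools reading the same object agree on what each column means.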

A lakehouse is best suited to cloud repository services that separate compute from storage. It can also be built on-premises, for example on the Hadoop Distributed File System (HDFS) platform.

Metadata layer

The metadata layer is the fundamental component that sets the data lakehouse apart from other designs. It is a single catalog that provides metadata (information about other data) for all objects stored in the lake and gives users access to management capabilities such as:

- ACID transactions, so that concurrent transactions see a consistent version of the database;

- caching of files from the cloud object store;

- indexing, which adds data structure indexes to speed up query processing;

- zero-copy cloning to duplicate data objects; and

- data versioning to store specific versions of the data.
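Data versioning and zero-copy cloning from the list above both follow from treating a table as a list of pointers to immutable files. The `TableMeta` class below is a hypothetical, in-memory sketch of that idea, not the metadata model of any real lakehouse product.

```python
from dataclasses import dataclass, field


@dataclass
class TableMeta:
    """Hypothetical metadata entry: each version is a tuple of data file paths."""

    versions: list = field(default_factory=lambda: [()])

    def append(self, *files):
        # A new version is the previous file list plus the newly added files.
        self.versions.append(self.versions[-1] + files)

    def files(self, version=-1):
        # Reading an older version needs no data movement, only a lookup.
        return self.versions[version]

    def clone(self):
        # Copies only the file pointers; the files themselves are shared.
        return TableMeta(versions=[self.versions[-1]])


sales = TableMeta()
sales.append("a.parquet")
sales.append("b.parquet")

sandbox = sales.clone()
sandbox.append("c.parquet")  # does not affect the original table
```

Because data files are never modified in place, a clone and its source can diverge safely while still sharing every file they have in common.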

Additionally, the metadata layer makes it possible to implement schema management, to use data warehouse schema designs such as star and snowflake schemas, and to provide data governance and auditing capabilities directly on the data lake, which improves the integrity of the entire data pipeline.

Schema management includes features for schema enforcement and schema evolution. By rejecting any writes that don’t match the table’s schema, schema enforcement lets users maintain data integrity and quality.

Schema evolution allows the table’s current schema to be modified to accommodate changing data. Because a single management interface sits on top of the data lake, access control and auditing are also possible.
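The difference between schema enforcement and schema evolution is easier to see in code. In this minimal sketch, the `Table` class, the `SchemaError` exception, and the `evolve` flag are invented names; rows that omit a known column are simply accepted, which is a simplifying assumption.

```python
class SchemaError(ValueError):
    """Raised when a write does not match the table's schema."""


class Table:
    def __init__(self, schema):
        self.schema = dict(schema)  # column name -> Python type
        self.rows = []

    def write(self, row, evolve=False):
        # Enforcement: values in known columns must have the declared type.
        for col, value in row.items():
            if col in self.schema and not isinstance(value, self.schema[col]):
                raise SchemaError(f"column {col!r} expects {self.schema[col].__name__}")
        # Unknown columns are rejected unless the caller opts in to evolution.
        new_cols = [col for col in row if col not in self.schema]
        if new_cols and not evolve:
            raise SchemaError(f"unknown columns: {sorted(new_cols)}")
        # Evolution: extend the schema with the new columns, then store the row.
        for col in new_cols:
            self.schema[col] = type(row[col])
        self.rows.append(dict(row))


users = Table({"id": int})
users.write({"id": 1})
users.write({"id": 2, "email": "a@example.com"}, evolve=True)
```

Type checks run before the schema is touched, so a rejected write never leaves a half-evolved schema behind.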

API layer

The API layer is another crucial part of the architecture. It hosts a number of APIs that end users can use to process tasks faster and run more advanced analytics.

The use of metadata APIs makes it easier to identify and access the data items needed for a given application.

Some machine learning libraries, such as TensorFlow and Spark MLlib, can read open file formats like Parquet and access the metadata layer directly.

At the same time, DataFrame APIs offer more opportunities for optimization, enabling developers to structure and transform distributed data.
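Why does a declarative DataFrame API open the door to optimization? Because the caller only describes the result, the engine sees the whole query before running anything. The `LazyFrame` class below is a toy written for this post, not the API of Spark, pandas, or any other library. It records operations and only executes them on `collect`.

```python
class LazyFrame:
    """Hypothetical declarative frame: operations are recorded, not executed."""

    def __init__(self, rows, predicates=(), columns=None):
        self._rows = rows
        self._predicates = tuple(predicates)
        self._columns = columns

    def filter(self, column, value):
        return LazyFrame(self._rows, self._predicates + ((column, value),), self._columns)

    def select(self, *columns):
        return LazyFrame(self._rows, self._predicates, columns)

    def explain(self):
        """Show the recorded plan without touching the data."""
        return {"filters": list(self._predicates), "project": self._columns}

    def collect(self):
        # Filters always run before projection, whatever order they were
        # declared in, so rows are discarded before any columns are copied.
        out = []
        for row in self._rows:
            if all(row[c] == v for c, v in self._predicates):
                cols = self._columns or tuple(row)
                out.append({c: row[c] for c in cols})
        return out


data = [
    {"id": 1, "country": "DE", "amount": 10.0},
    {"id": 2, "country": "US", "amount": 5.0},
]
query = LazyFrame(data).select("id").filter("country", "DE")
result = query.collect()
```

Note that the query filters on `country` after selecting only `id`. An eager API would fail or need the column kept around; here the plan is simply reordered at execution time.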

Consumption layer

The consumption layer hosts tools and apps such as Power BI and Tableau. With the lakehouse design, client apps have access to all of the data stored in the lake and to all of its metadata.

The lakehouse can be used by all users within a company to perform all kinds of analytics operations, including creating business intelligence dashboards and running SQL queries and machine learning tasks.

Advantages of Data Lakehouse

Organizations can create a data lakehouse to unify their current data platform and optimize their whole data management process. By breaking down the silos between various sources, a data lakehouse can replace the need for separate solutions.

Compared to working with separately curated data sources, this integration produces a significantly more efficient end-to-end process. This has several advantages:

- Less administration: Rather than extracting data from raw sources and preparing it for use within a data warehouse, a data lakehouse makes the data from any connected source available and organized for use.

- Increased cost-effectiveness: Data lakehouses are built on modern infrastructure that separates compute from storage, making it simple to expand storage without adding compute power. Scaling on inexpensive data storage alone keeps growth cost-effective.

- Better data governance: Data lakehouses are constructed with standardized open architecture, allowing for more control over security, metrics, role-based access, and other important management components. By unifying resources and data sources, they simplify and enhance governance.

- Simplified standards: Because connectivity was very limited in the 1980s, when data warehouses were first developed, localized schema standards were frequently developed within individual businesses or even departments. Many types of data now have open schema standards, and data lakehouses take advantage of this by ingesting numerous data sources with overlapping, standardized schemas to streamline processes.

Disadvantages of Data Lakehouse

Despite all the hype surrounding data lakehouses, it’s important to keep in mind that the idea is still very new. Be sure to weigh the disadvantages before committing fully to this new design.

- Monolithic structure: A lakehouse’s all-inclusive design offers several advantages, but it also raises some problems. A monolithic architecture can be rigid and difficult to maintain, and it often ends up serving all users poorly. Architects and designers typically prefer a more modular architecture that they can customize for various use cases.

- The technology isn’t quite there yet: The end goal relies on a significant amount of machine learning and artificial intelligence. Before lakehouses can perform as envisioned, these technologies must develop further.

- Not a significant advancement over existing structures: There is still considerable skepticism about how much additional value lakehouses will actually deliver. Some detractors contend that a combined lake-and-warehouse design paired with the right automation tools can achieve comparable efficiency.

Challenges of Data Lakehouse

Adopting the data lakehouse approach can be difficult. For one thing, given the complexity of its component parts, it is a mistake to view the data lakehouse as an all-encompassing ideal structure or “one platform for everything.”

Additionally, as data lakes become more widely adopted, businesses will have to migrate their current data warehouses to them, relying only on a promise of success rather than on a demonstrated economic benefit.

If there are any latency problems or outages during the migration, it can end up being expensive, time-consuming, and possibly risky.

Some vendors that explicitly or implicitly market their solutions as data lakehouses require business users to adopt highly specialized tools. These tools might not always work with other tools connected to the data lake at the center of the system, which adds to the problems.

Finally, it can be difficult to provide 24/7 analytics while running business-critical workloads, which calls for infrastructure that scales cost-effectively.

Conclusion

The data lakehouse is one of the newest types of data architecture to emerge in recent years. It brings together a variety of fields, such as information technology, open-source software, cloud computing, and distributed storage protocols.

It enables businesses to centrally store all kinds of data from any location, simplifying management and analysis. The data lakehouse is a pretty intriguing concept.

Any firm would have a significant competitive edge if it had access to an all-in-one data platform that was as quick and efficient as a data warehouse while also being as flexible as a data lake.

The idea is still developing and remains relatively new. As a result, it could take some time to determine whether it can become widespread.

We all ought to be curious about the direction in which lakehouse architecture is heading.
