LangChain is an open-source framework for building applications that harness the power of Large Language Models (LLMs).

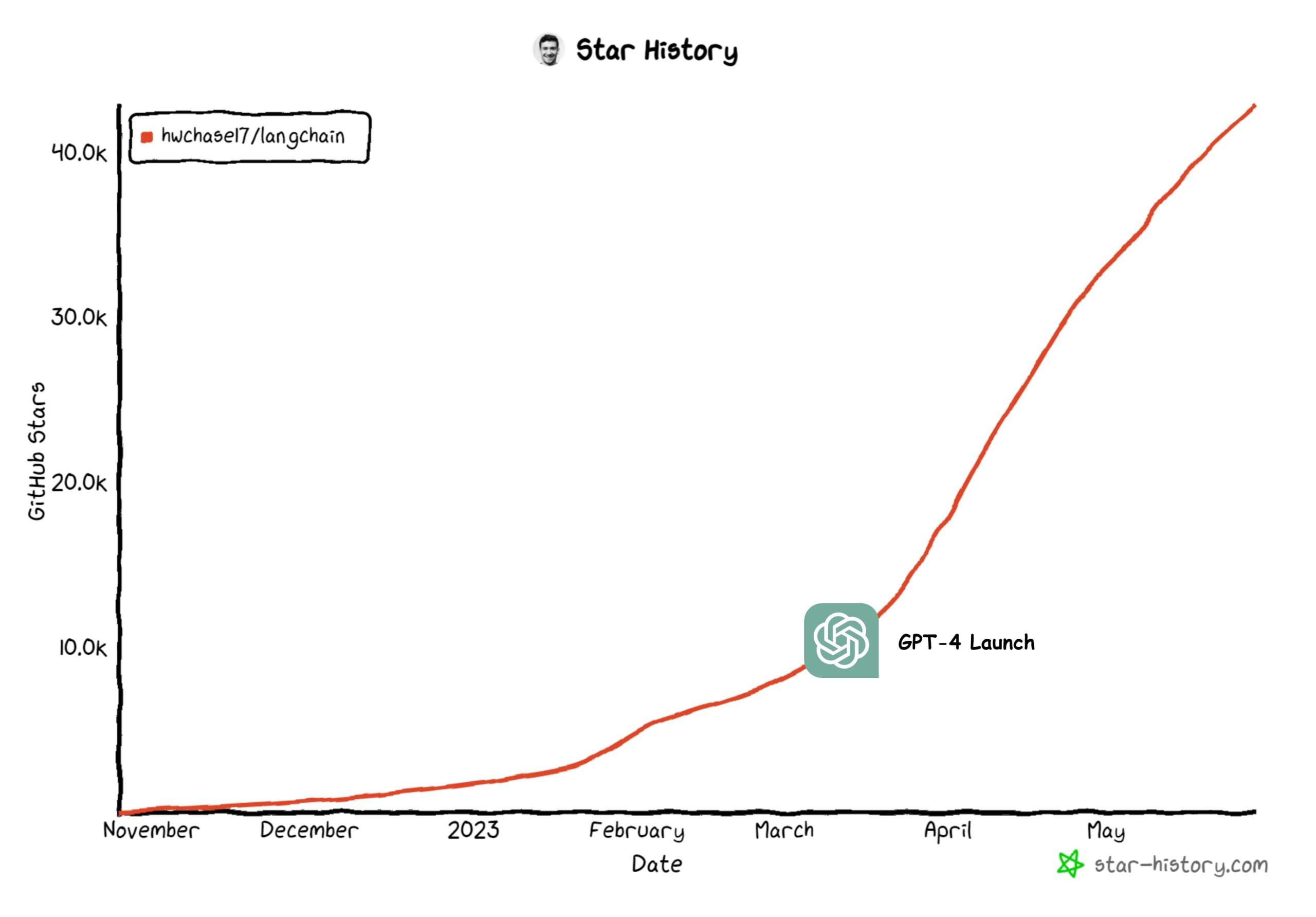

These LLMs possess remarkable capabilities and can efficiently tackle a wide array of tasks. However, it is important to note that their strength lies in their general nature rather than in deep domain expertise. LangChain’s popularity has grown rapidly since the introduction of GPT-4.

While LLMs excel at a wide variety of tasks, they may struggle to give specific answers or to handle tasks that require deep domain knowledge. Consider, for instance, using an LLM to answer questions or perform tasks in specialized fields such as medicine or law.

While the LLM can certainly respond to general inquiries about these fields, it may struggle to offer more detailed or nuanced answers that necessitate specialized knowledge or expertise.

This is because LLMs are trained on vast amounts of text data from diverse sources, which lets them learn patterns, understand context, and generate coherent responses. However, their training does not typically give them the depth of domain-specific knowledge that human experts acquire in those fields.

Therefore, while LangChain, in conjunction with LLMs, can be an invaluable tool for a broad range of tasks, it’s important to recognize that deep domain expertise may still be necessary in certain situations. Human experts with specialized knowledge can provide the necessary depth, nuanced understanding, and context-specific insights that might be beyond the capabilities of LLMs alone.

For a more thorough understanding of its typical use cases and the bigger picture, we recommend reading LangChain’s documentation and GitHub repository.

How Does It Work?

To understand what LangChain does and how it works, let’s consider a practical example. GPT-4 has impressive general knowledge and can provide reliable answers to a wide range of questions.

However, what if we want specific information from our own data, such as a personal document, book, PDF file, or proprietary database?

LangChain allows us to connect a large language model like GPT-4 to our own sources of data. It goes beyond simply pasting a snippet of text into a chat interface. Instead, we can reference an entire database filled with our own data.

Once we obtain the desired information, LangChain can assist us in taking specific actions. For instance, we can instruct it to send an email containing certain details.
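The idea of letting the model trigger an action can be sketched in plain Python. This is not LangChain’s agent API: it assumes the model has been prompted to reply with a JSON object naming a tool and its arguments, and send_email is a hypothetical stand-in that only records the message instead of sending mail.

```python
import json

# Hypothetical log of "sent" emails; nothing is actually sent
SENT = []

def send_email(to: str, body: str) -> str:
    """Hypothetical tool: record the email instead of delivering it."""
    SENT.append({"to": to, "body": body})
    return f"Email queued for {to}"

# Registry of actions the application allows the model to trigger
TOOLS = {"send_email": send_email}

def run_action(model_output: str) -> str:
    """Parse a JSON action emitted by the model and call the matching tool."""
    action = json.loads(model_output)
    tool = TOOLS.get(action["tool"])
    if tool is None:
        return f"Unknown tool: {action['tool']}"
    return tool(**action["args"])

# Pretend the LLM answered with this structured action
reply = '{"tool": "send_email", "args": {"to": "alice@example.com", "body": "Q3 figures attached"}}'
print(run_action(reply))
```

Keeping an explicit registry means the model can only trigger actions the developer has chosen to expose.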

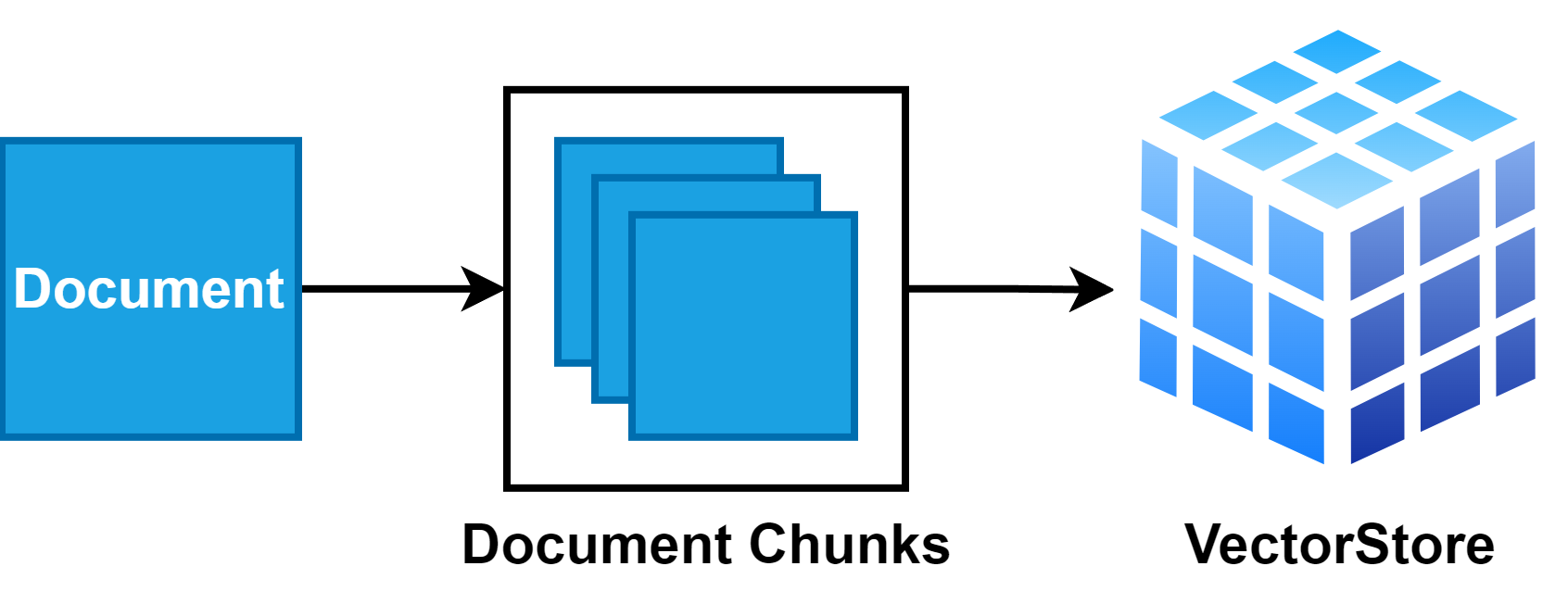

To achieve this, we follow a pipeline approach using LangChain. First, we take the document we want the language model to reference and divide it into smaller chunks. These chunks are then stored as embeddings, which are vector representations of the text, in a Vector Database.
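The chunk-and-store step can be sketched in a few lines of plain Python. This is a toy illustration, not LangChain’s API: the fixed-size character chunker, the bag-of-words embed function (a stand-in for a real embedding model), and the in-memory vector_store list (a stand-in for a vector database) are all assumptions made for the example.

```python
import re
from collections import Counter

def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character chunks; neighbours share `overlap` chars."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap
    stop = max(len(text) - overlap, 1)
    return [text[i:i + chunk_size] for i in range(0, stop, step)]

def embed(text: str) -> Counter:
    """Toy 'embedding': a sparse bag-of-words vector (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

# Hypothetical in-memory "vector database": (vector, chunk) pairs
vector_store: list[tuple[Counter, str]] = []

def index_document(text: str) -> None:
    """Chunk a document, embed each chunk, and store both."""
    for chunk in chunk_text(text):
        vector_store.append((embed(chunk), chunk))

index_document("LangChain connects large language models to your own data. " * 10)
print(len(vector_store), "chunks indexed")
```

The overlap keeps a sentence that straddles a chunk boundary retrievable from at least one chunk.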

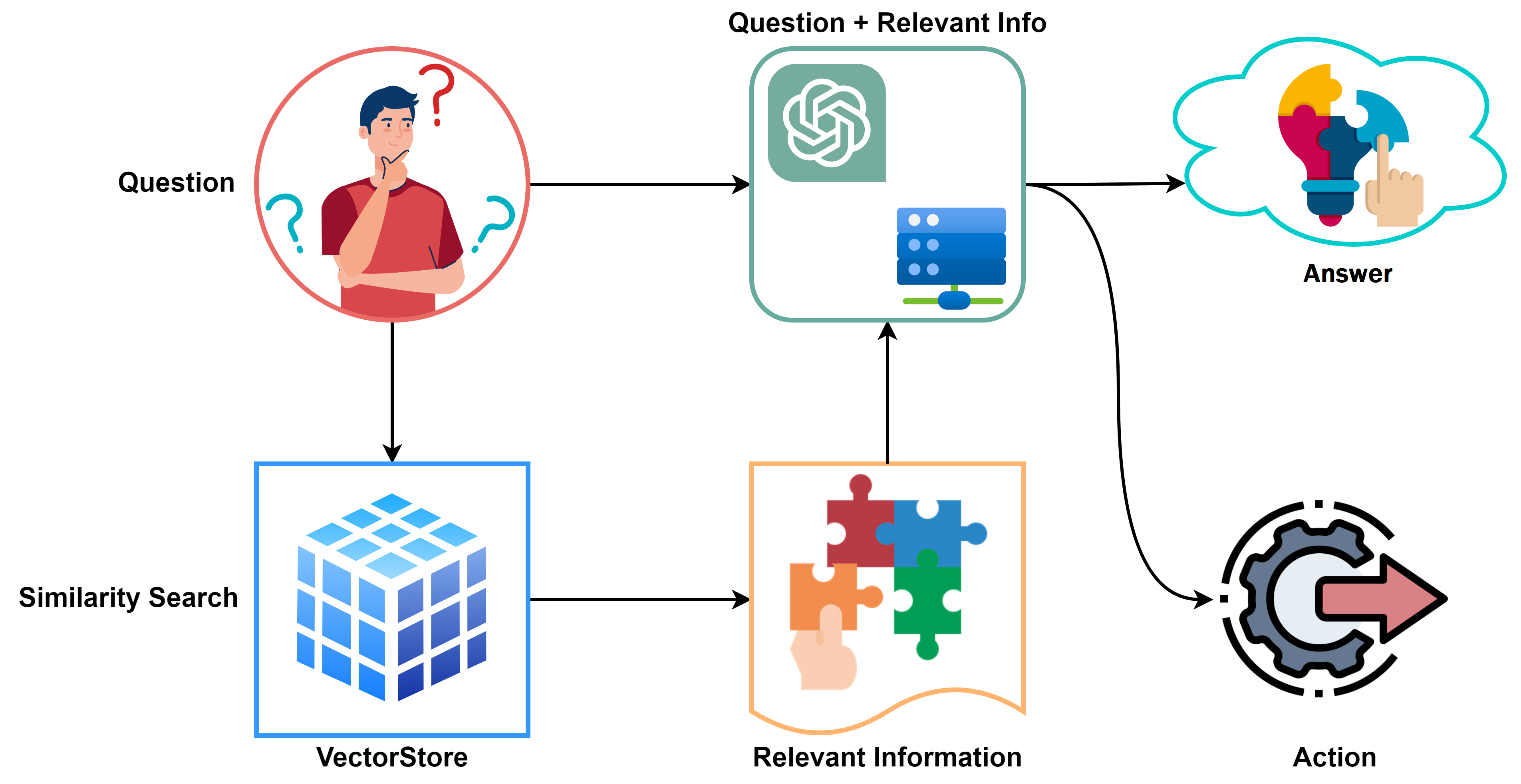

With this setup, we can build language model applications that follow a standard pipeline: a user asks a question, the question is converted into a vector representation, and that vector is used to perform a similarity search in the vector database, retrieving the most relevant chunks of information.

These chunks are then passed to the language model along with the question, enabling it to provide an answer or take the desired action.
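The query side of the pipeline can be sketched the same way. Again this is a toy, not LangChain’s API: embed is a bag-of-words stand-in for an embedding model, the store is a plain list standing in for a vector database, and the prompt wording in build_prompt is an assumption. In a real application the returned prompt would be sent to the LLM.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding' (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(question: str, store: list[tuple[Counter, str]], k: int = 2) -> list[str]:
    """Return the k chunks whose vectors are most similar to the question's."""
    q = embed(question)
    ranked = sorted(store, key=lambda item: cosine(q, item[0]), reverse=True)
    return [chunk for _, chunk in ranked[:k]]

def build_prompt(question: str, chunks: list[str]) -> str:
    """Combine the retrieved chunks and the question into one prompt for the LLM."""
    context = "\n".join(chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

docs = [
    "Refunds are processed within 14 days.",
    "Our office is closed on public holidays.",
    "Shipping is free for orders over 50 euros.",
]
store = [(embed(d), d) for d in docs]
top = retrieve("How many days do refunds take?", store, k=1)
print(build_prompt("How many days do refunds take?", top))
```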

LangChain facilitates the development of applications that are data-aware, since they can reference our own data in a vector store, and agentic, since they can take actions beyond answering questions.

This opens up a multitude of practical use cases, particularly in personal assistance, where a large language model can handle tasks like booking flights, transferring money, or assisting with tax-related matters.

Additionally, the implications for studying and learning new subjects are significant, as a language model can reference an entire syllabus and expedite the learning process. Coding, data analysis, and data science are also expected to be greatly influenced by these advancements.

One of the most exciting prospects is connecting large language models to existing company data, such as customer information or marketing data. Combined with APIs from providers such as Meta or Google, this integration could substantially accelerate progress in data analytics and data science.

How to Build a Web App (Demo)

LangChain is currently available as Python and JavaScript packages.

To put the LangChain concept into practice, we can build a demo web app using Streamlit, LangChain, and OpenAI’s GPT-3 model.

But first, we must install a few dependencies, including Streamlit, LangChain, and OpenAI.

Prerequisites

Streamlit: a popular Python package for building data science web applications.

LangChain: the framework we use to define and run the chains.

OpenAI: the OpenAI Python package, plus an OpenAI API key to access the GPT-3 language model.

To install these dependencies, run the following commands in a terminal:

```bash
pip install streamlit
pip install langchain
pip install openai
```

Import Packages

We start by importing Streamlit and the LangChain classes we need: the OpenAI LLM wrapper, plus the three classes used to define and run our chains: LLMChain, SimpleSequentialChain, and PromptTemplate.

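```python
import streamlit as st

# Classic LangChain import paths; newer releases ship the OpenAI wrapper
# in the separate langchain-openai package.
from langchain.chains import LLMChain, SimpleSequentialChain
from langchain.llms import OpenAI
from langchain.prompts import PromptTemplate
```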

Basic Setup

Next, we set up the basic structure of the app with Streamlit. We gave the app the title “What’s TRUE: Using Simple Sequential Chain” and included a markdown link to the GitHub repository that served as the app’s inspiration.

```python
# App title shown at the top of the page
st.title("What's TRUE: Using Simple Sequential Chain")
```

Front-End Widgets

Next, we ask the user for their OpenAI API key and create the language model only once a key has been provided:

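```python
# Text box for the user's OpenAI API key. The original snippet uses API
# without defining it; this sidebar password field is an assumed way to set it.
API = st.sidebar.text_input("Enter Your OpenAI API-KEY : ", type="password")

if API:
    # If an API key has been provided, create an OpenAI language model instance
    llm = OpenAI(temperature=0.7, openai_api_key=API)
else:
    # If an API key hasn't been provided, display a warning message
    st.warning("Enter your OPENAI API-KEY. Get your OpenAI API key from [here](https://platform.openai.com/account/api-keys).\n")
```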

Further, we need an input widget that lets users enter their question:

```python
# Add a text input box for the user's question
user_question = st.text_input(
    "Enter Your Question : ",
    placeholder="Cyanobacteria can perform photosynthesis, are they considered as plants?",
)
```

Building and Running the Chains

To answer the user’s question, we combine several LLMChain steps with SimpleSequentialChain. When the user clicks the "Tell me about it" button, the chains run in the following sequence:

```python
# Run the chains when the button is clicked; the API check ensures llm exists
if st.button("Tell me about it", type="primary") and API:
    # Chain 1: send the user's question to the model; its answer becomes the "statement"
    template = """{question}\n\n"""
    prompt_template = PromptTemplate(input_variables=["question"], template=template)
    question_chain = LLMChain(llm=llm, prompt=prompt_template)

    # Chain 2: Generating assumptions made in the statement
    template = """Here is a statement:
{statement}
Make a bullet point list of the assumptions you made when producing the above statement.\n\n"""
    prompt_template = PromptTemplate(input_variables=["statement"], template=template)
    assumptions_chain = LLMChain(llm=llm, prompt=prompt_template)
    # Partial pipeline (question -> assumptions); not run below
    assumptions_chain_seq = SimpleSequentialChain(
        chains=[question_chain, assumptions_chain], verbose=True
    )

    # Chain 3: Fact checking the assumptions
    template = """Here is a bullet point list of assertions:
{assertions}
For each assertion, determine whether it is true or false. If it is false, explain why.\n\n"""
    prompt_template = PromptTemplate(input_variables=["assertions"], template=template)
    fact_checker_chain = LLMChain(llm=llm, prompt=prompt_template)
    # Partial pipeline (question -> assumptions -> fact check); not run below
    fact_checker_chain_seq = SimpleSequentialChain(
        chains=[question_chain, assumptions_chain, fact_checker_chain], verbose=True
    )

    # Final Chain: answer the user's question based on the checked facts.
    # The question is baked into the template because each chain in a
    # SimpleSequentialChain receives only the previous chain's output.
    template = """In light of the above facts, how would you answer the question '{}'""".format(
        user_question
    )
    template = """{facts}\n""" + template
    prompt_template = PromptTemplate(input_variables=["facts"], template=template)
    answer_chain = LLMChain(llm=llm, prompt=prompt_template)

    overall_chain = SimpleSequentialChain(
        chains=[question_chain, assumptions_chain, fact_checker_chain, answer_chain],
        verbose=True,
    )

    # Running all the chains on the user's question and displaying the final answer
    st.success(overall_chain.run(user_question))
```

- question_chain is the first step in our pipeline. Its template is simply the user’s question, so it passes the question to the model and outputs the model’s answer, which serves as the “statement” for the next step.
- assumptions_chain takes the output of question_chain as input. Built with LangChain’s LLMChain and OpenAI model, its template asks the model to produce a bullet-point list of the assumptions it made when producing that statement.
- fact_checker_chain takes the bullet-point list of assertions from assumptions_chain and asks the model to determine whether each one is true or false, explaining why for any that are false.
- answer_chain receives the fact-checked list and asks the model how it would answer the user’s original question in light of those facts.
- Finally, we combine these chains into overall_chain, a SimpleSequentialChain in which each chain’s output becomes the next chain’s input. After the chains complete, we use st.success() to show the user the answer.
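The data flow that SimpleSequentialChain provides can be mimicked in plain Python to make the hand-off between steps explicit. This is a sketch of the idea, not LangChain’s implementation: simple_sequential and the four step functions are hypothetical stand-ins that return formatted strings instead of calling a model.

```python
from typing import Callable

Step = Callable[[str], str]

def simple_sequential(steps: list[Step]) -> Step:
    """Chain single-input/single-output steps: each output is the next step's input."""
    def run(text: str) -> str:
        for step in steps:
            text = step(text)
        return text
    return run

# Hypothetical stand-ins for the four LLM calls; no model is involved
def question_step(question: str) -> str:
    return f"statement about: {question}"

def assumptions_step(statement: str) -> str:
    return f"- assumption behind '{statement}'"

def fact_check_step(assertions: str) -> str:
    return f"{assertions} -> true"

def answer_step(facts: str) -> str:
    return f"answer based on [{facts}]"

pipeline = simple_sequential([question_step, assumptions_step, fact_check_step, answer_step])
print(pipeline("Are cyanobacteria plants?"))
```

Because each step sees only the previous step’s output, the final step cannot read the original question from its input; this is why the demo formats user_question directly into the answer chain’s template.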

Conclusion

By using LangChain’s SimpleSequentialChain module, we can easily chain together different language model calls to build more complex pipelines. This can be quite helpful for a wide variety of NLP applications, including chatbots, question-and-answer systems, and language translation tools.

LangChain’s main strength lies in its abstractions, which let developers focus on the problem at hand rather than on the specifics of working with language models.

LangChain makes building sophisticated language model applications more user-friendly by offering integrations with many model providers and a selection of prompt templates.

It also lets you connect language models to your own data, making it simple to tailor them to a specific domain. This enables more precise, domain-specific applications that, for a given task, can outperform a general-purpose model used on its own.

The SimpleSequentialChain module and other features of LangChain make it an effective tool for quickly developing and deploying sophisticated NLP systems.
