
Object detection is one of the simplest yet most intriguing ideas in deep learning. The fundamental idea is to locate each object in an image, draw a bounding box around it, and assign it to a class of objects that share comparable traits.

These distinguishing traits can be as simple as shape or color, which help us categorize the objects.

Thanks to substantial improvements in computer vision and image processing, object detection is now widely used in the medical sciences, autonomous driving, defense and military, public administration, and many other fields.

This is where MMDetection comes in: an open-source object detection toolbox built on PyTorch. In this article, we’ll examine MMDetection in detail, go hands-on with it, and discuss its features.

What is MMDetection?

MMDetection is a Python toolbox created specifically for object detection and instance segmentation.

It is implemented in PyTorch and built in a modular fashion. It collects a wide range of effective models and methods for object detection and instance segmentation.

It supports efficient inference and fast training. The toolbox also includes weights for over 200 pre-trained networks, making it a quick way to get started in object detection.

Because you can adapt existing methods or build a new detector from the available modules, MMDetection also serves as a benchmarking platform.

The toolbox’s key feature is that it provides the straightforward, modular parts of a typical object detection framework, which can be combined into custom pipelines or custom models.

The benchmarking capabilities of this toolkit make it simple to build a new detector framework on top of an existing framework and compare its performance.

Features

- Popular and modern detection frameworks, such as Faster RCNN, Mask RCNN, RetinaNet, etc., are directly supported by the toolkit.

- 360+ pre-trained models are available for fine-tuning (or you can train from scratch).

- Support for well-known vision datasets, including COCO, Cityscapes, LVIS, and PASCAL VOC.

- All fundamental bbox and mask operations run on GPUs. Training speed is faster than or on par with other codebases, such as Detectron2, maskrcnn-benchmark, and SimpleDet.

- The object detection framework is broken down into several modules, which can be combined to create a custom object detection system.
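To make the modular idea in the last bullet concrete, here is a toy registry that builds components from config dictionaries keyed by `type`, which is the general pattern MMDetection configs follow. This is a simplified stand-in written for illustration, not the toolbox's actual implementation; `Registry`, `ToyBackbone`, and `ToyNeck` are hypothetical names.

```python
class Registry:
    """Maps a string name to a class so components can be built from config dicts."""

    def __init__(self, name):
        self.name = name
        self._modules = {}

    def register(self, cls):
        # Used as a class decorator; stores the class under its own name.
        self._modules[cls.__name__] = cls
        return cls

    def build(self, cfg):
        # Copy first so the caller's config dict is not mutated.
        args = dict(cfg)
        cls = self._modules[args.pop("type")]
        return cls(**args)


BACKBONES = Registry("backbone")
NECKS = Registry("neck")


@BACKBONES.register
class ToyBackbone:  # hypothetical component
    def __init__(self, depth):
        self.depth = depth


@NECKS.register
class ToyNeck:  # hypothetical component
    def __init__(self, out_channels):
        self.out_channels = out_channels


# Swapping a component means changing only the config, not the calling code.
backbone = BACKBONES.build(dict(type="ToyBackbone", depth=50))
neck = NECKS.build(dict(type="ToyNeck", out_channels=256))
print(type(backbone).__name__, backbone.depth, neck.out_channels)
```

The point of the design is that a new module only has to be registered once; after that, any config can refer to it by name.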

MMDetection Architecture

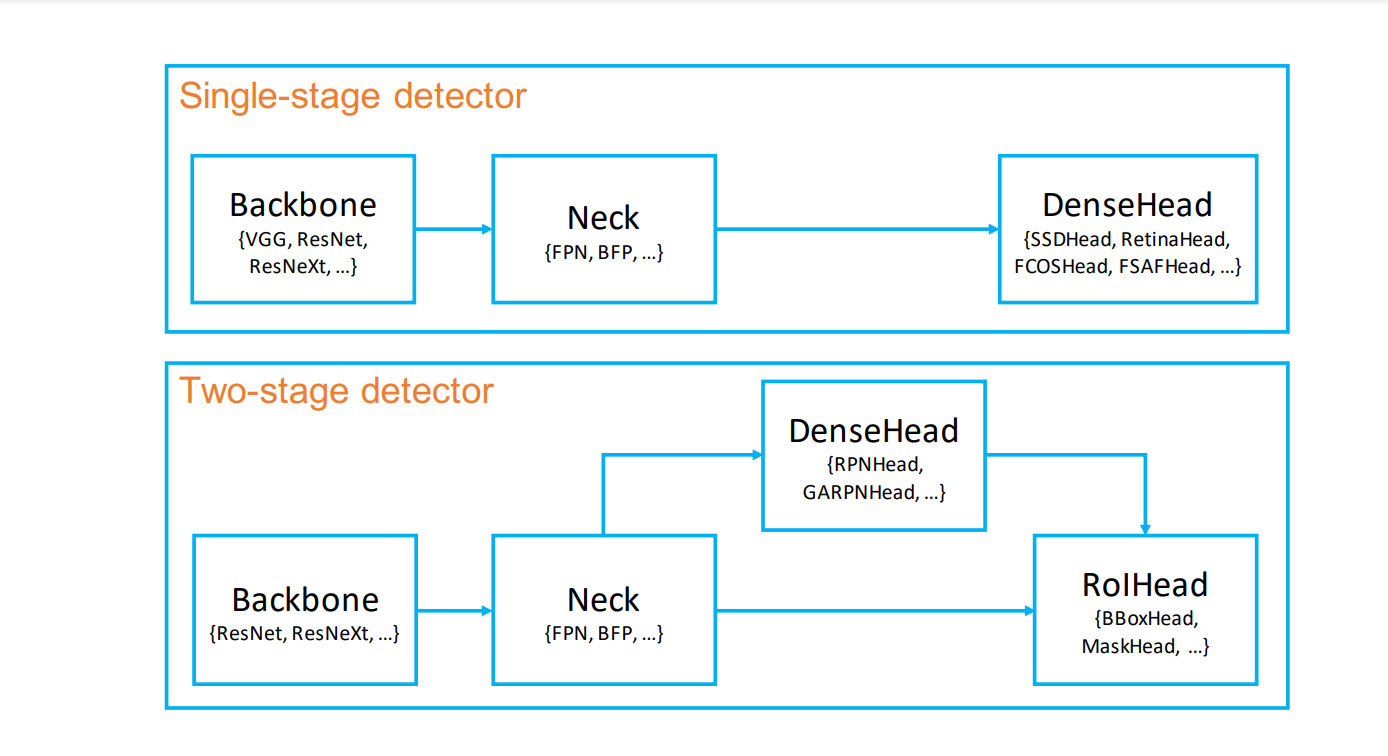

Because MMDetection is a toolbox with many pre-built models, each with its own architecture, it defines a generic design that can be applied to any model. This overall architecture is made up of the following components:

- Backbone: The backbone is the component that converts an image to feature maps, such as a ResNet-50 without the final fully connected layer.

- Neck: The neck connects the backbone to the heads. It refines or reconfigures the raw feature maps produced by the backbone. Feature Pyramid Network (FPN) is one example.

- DenseHead (AnchorHead/AnchorFreeHead): The component that operates on dense locations of the feature maps. It includes AnchorHead and AnchorFreeHead, for example RPNHead, RetinaHead, and FCOSHead.

- RoIExtractor: The component that extracts RoI-wise features from a single feature map or a set of feature maps using RoIPooling-like operators. For example, SingleRoIExtractor extracts RoI features from the corresponding level of the feature pyramid.

- RoIHead (BBoxHead/MaskHead): The component that takes RoI features as input and makes RoI-wise task-specific predictions, such as bounding box classification/regression and mask prediction.

Both single-stage and two-stage detectors can be described with these components. We can develop our own methods simply by writing a few new components and combining them with existing ones.
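As a rough illustration of how these components appear in practice, the sketch below shows an abridged model config in the style of the Faster R-CNN R50-FPN config from MMDetection 2.x. It is reconstructed from memory and deliberately incomplete (anchor generators, losses, and train/test settings are omitted), so treat the field values as assumptions and consult the config files in the repository for the real thing.

```python
# Abridged, assumed config in MMDetection 2.x style; not sufficient to build a model.
model = dict(
    type="FasterRCNN",
    backbone=dict(type="ResNet", depth=50, num_stages=4, out_indices=(0, 1, 2, 3)),
    neck=dict(type="FPN", in_channels=[256, 512, 1024, 2048], out_channels=256, num_outs=5),
    rpn_head=dict(type="RPNHead", in_channels=256, feat_channels=256),
    roi_head=dict(
        type="StandardRoIHead",
        bbox_roi_extractor=dict(
            type="SingleRoIExtractor",
            roi_layer=dict(type="RoIAlign", output_size=7, sampling_ratio=0),
            out_channels=256,
            featmap_strides=[4, 8, 16, 32],
        ),
        bbox_head=dict(type="Shared2FCBBoxHead", in_channels=256, num_classes=80),
    ),
)

# Map each architectural role from the list above to the component chosen for it.
roles = {
    "Backbone": model["backbone"]["type"],
    "Neck": model["neck"]["type"],
    "DenseHead": model["rpn_head"]["type"],
    "RoIExtractor": model["roi_head"]["bbox_roi_extractor"]["type"],
    "RoIHead": model["roi_head"]["type"],
}
for role, name in roles.items():
    print(f"{role}: {name}")
```

A single-stage detector such as RetinaNet would keep the backbone and neck but replace the RPN and RoI parts with one dense head.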

List of models included in MMDetection

MMDetection provides high-quality implementations of several well-known models and task-oriented modules. The models and general-purpose methods already available in the toolbox are listed below. The list keeps growing as more are added.

- Fast R-CNN

- Faster R-CNN

- Mask R-CNN

- RetinaNet

- DCN

- DCNv2

- Cascade R-CNN

- M2Det

- GHM

- ScratchDet

- Double-Head R-CNN

- Grid R-CNN

- FSAF

- Libra R-CNN

- GCNet

- HRNet

- Mask Scoring R-CNN

- FCOS

- SSD

- R-FCN

- Mixed Precision Training

- Weight Standardization

- Hybrid Task Cascade

- Guided Anchoring

- Generalized Attention

Building an object detection model using MMDetection

In this tutorial, we will use a Google Colab notebook because it is easy to set up and use.

Installation

First, we install the necessary libraries and clone the MMDetection GitHub repository.
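The original commands for this step are not shown, so the following is only a sketch of what the installation could look like in a notebook. It assumes an MMDetection 2.x setup (installing `mmcv-full` through `openmim`, then an editable install of the cloned repository); package names and versions differ between releases, so check them against the official installation guide. `INSTALL_STEPS` and `run_steps` are hypothetical helper names.

```python
import subprocess

# Assumed steps for MMDetection 2.x; adjust to the release you actually use.
INSTALL_STEPS = [
    ["pip", "install", "-U", "openmim"],
    ["mim", "install", "mmcv-full"],
    ["git", "clone", "https://github.com/open-mmlab/mmdetection.git"],
    ["pip", "install", "-e", "mmdetection"],
]


def run_steps(steps, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for cmd in steps:
        print("$", " ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop at the first failing step
    return steps


run_steps(INSTALL_STEPS)  # dry run: prints the commands without running them
```

In the notebook itself you would call `run_steps(INSTALL_STEPS, dry_run=False)`, or simply run the same commands in a shell cell.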

Importing env

The environment for our project will now be imported from the repository.
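The code for this step is missing from the article. One plausible reading is that it reports the environment the notebook is running in, so the sketch below does that in plain Python. `collect_versions` is a hypothetical helper, not an MMDetection function, and it reports missing packages instead of failing.

```python
import importlib
import platform


def collect_versions(packages=("torch", "torchvision", "mmcv", "mmdet")):
    """Return {package: version}, using 'not installed' for missing packages."""
    versions = {"python": platform.python_version()}
    for name in packages:
        try:
            module = importlib.import_module(name)
        except ImportError:
            versions[name] = "not installed"
        else:
            versions[name] = getattr(module, "__version__", "unknown")
    return versions


for name, version in collect_versions().items():
    print(f"{name}: {version}")
```

Checking versions right after installation is useful because MMDetection releases are tied to specific ranges of MMCV and PyTorch versions.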

Importing libraries and MMdetection

We will now import the required libraries, along with MMDetection itself.

Download the pre-trained checkpoints

Next, download the pre-trained model checkpoints provided by MMDetection; we will use them for inference and further fine-tuning.

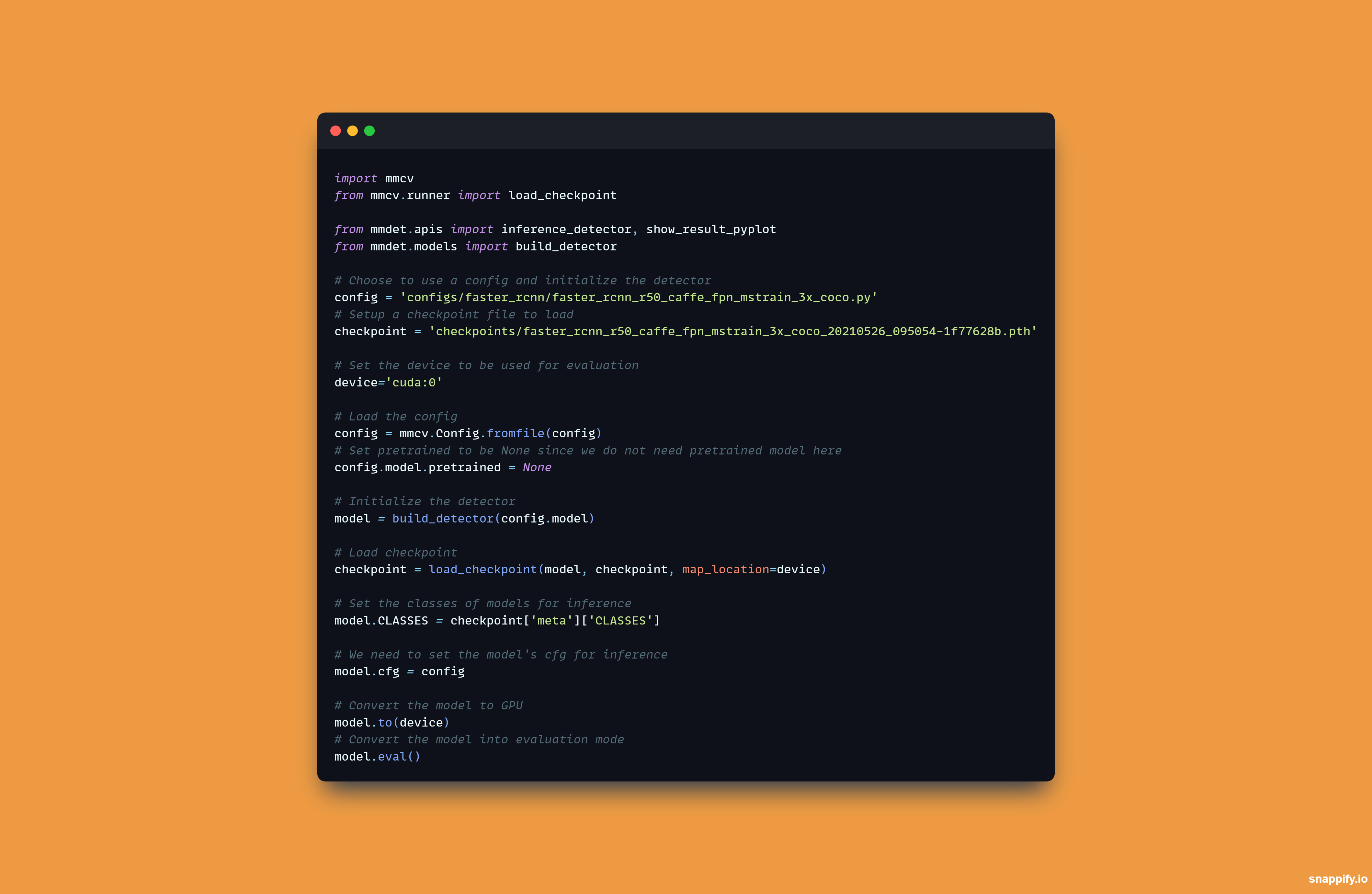
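A minimal sketch of the download step, using only the standard library. The article does not say which checkpoint it uses, so the URL below is an assumed example (the Faster R-CNN R50-FPN 1x COCO checkpoint from the MMDetection 2.x model zoo); verify it against the model zoo page before relying on it. The helper names are hypothetical.

```python
import os
import urllib.request

# Assumed example checkpoint; the article's actual choice is not shown.
CHECKPOINT_URL = (
    "https://download.openmmlab.com/mmdetection/v2.0/faster_rcnn/"
    "faster_rcnn_r50_fpn_1x_coco/"
    "faster_rcnn_r50_fpn_1x_coco_20200130-047c8118.pth"
)


def local_checkpoint_path(url, dest_dir="checkpoints"):
    """Return where the file named in `url` would be stored under dest_dir."""
    return os.path.join(dest_dir, os.path.basename(url))


def download_checkpoint(url, dest_dir="checkpoints"):
    """Download the checkpoint once and return its local path."""
    path = local_checkpoint_path(url, dest_dir)
    if not os.path.exists(path):
        os.makedirs(dest_dir, exist_ok=True)
        urllib.request.urlretrieve(url, path)
    return path


# Only the target path is computed here; call download_checkpoint() to fetch the file.
print(local_checkpoint_path(CHECKPOINT_URL))
```

Skipping the download when the file already exists keeps repeated notebook runs fast.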

Building model

We will now construct the model and load the checkpoint weights into it.

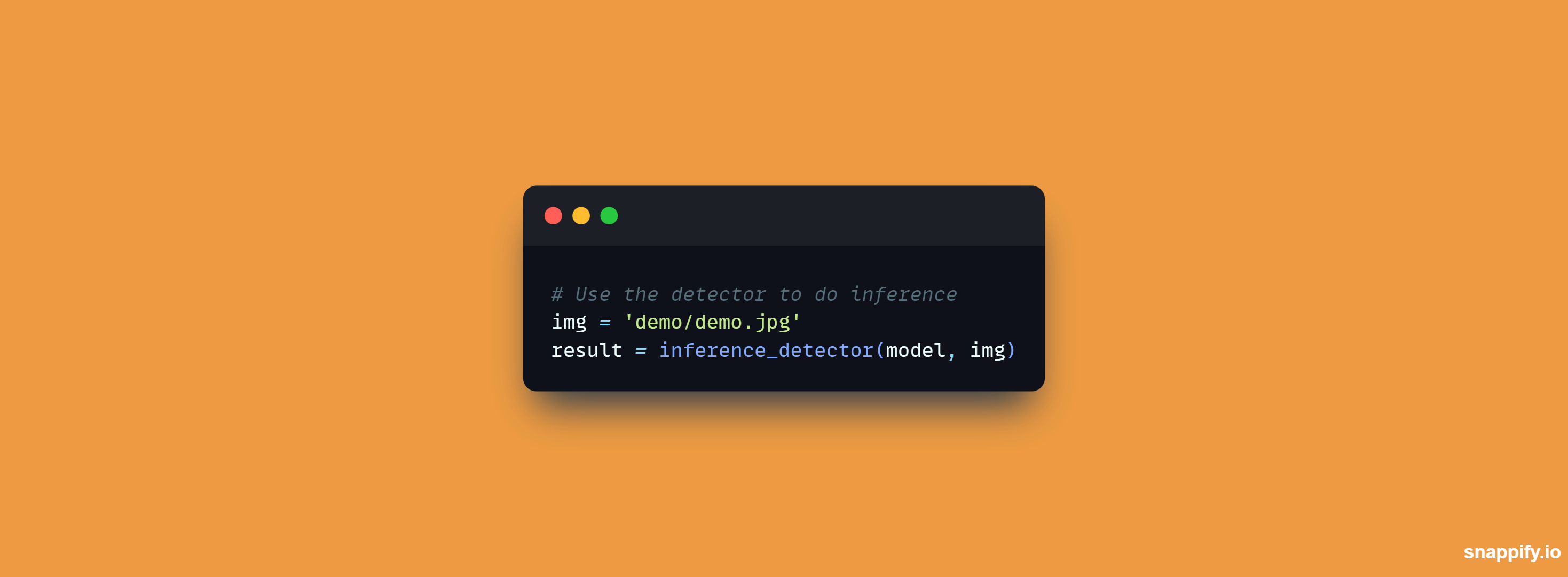
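The step above might look like the following sketch, which assumes the MMDetection 2.x high-level API (`init_detector` from `mmdet.apis`). The config and checkpoint paths are assumptions chosen for illustration, and `pick_device` and `load_detector` are hypothetical wrapper names.

```python
def pick_device():
    """Use the first GPU when PyTorch can see one, otherwise fall back to CPU."""
    try:
        import torch
    except ImportError:
        return "cpu"
    return "cuda:0" if torch.cuda.is_available() else "cpu"


def load_detector(config_path, checkpoint_path, device=None):
    """Build the model described by the config and load the checkpoint weights."""
    # Imported lazily so the sketch can be read and run without MMDetection installed.
    from mmdet.apis import init_detector

    return init_detector(config_path, checkpoint_path, device=device or pick_device())


# Assumed paths, relative to the notebook's working directory.
CONFIG = "mmdetection/configs/faster_rcnn/faster_rcnn_r50_fpn_1x_coco.py"
CHECKPOINT = "checkpoints/faster_rcnn_r50_fpn_1x_coco_20200130-047c8118.pth"

print("device:", pick_device())
# model = load_detector(CONFIG, CHECKPOINT)  # uncomment once the files exist
```

The config file and the checkpoint must describe the same model; a mismatch typically shows up as missing or unexpected weight keys when loading.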

Running inference with the detector

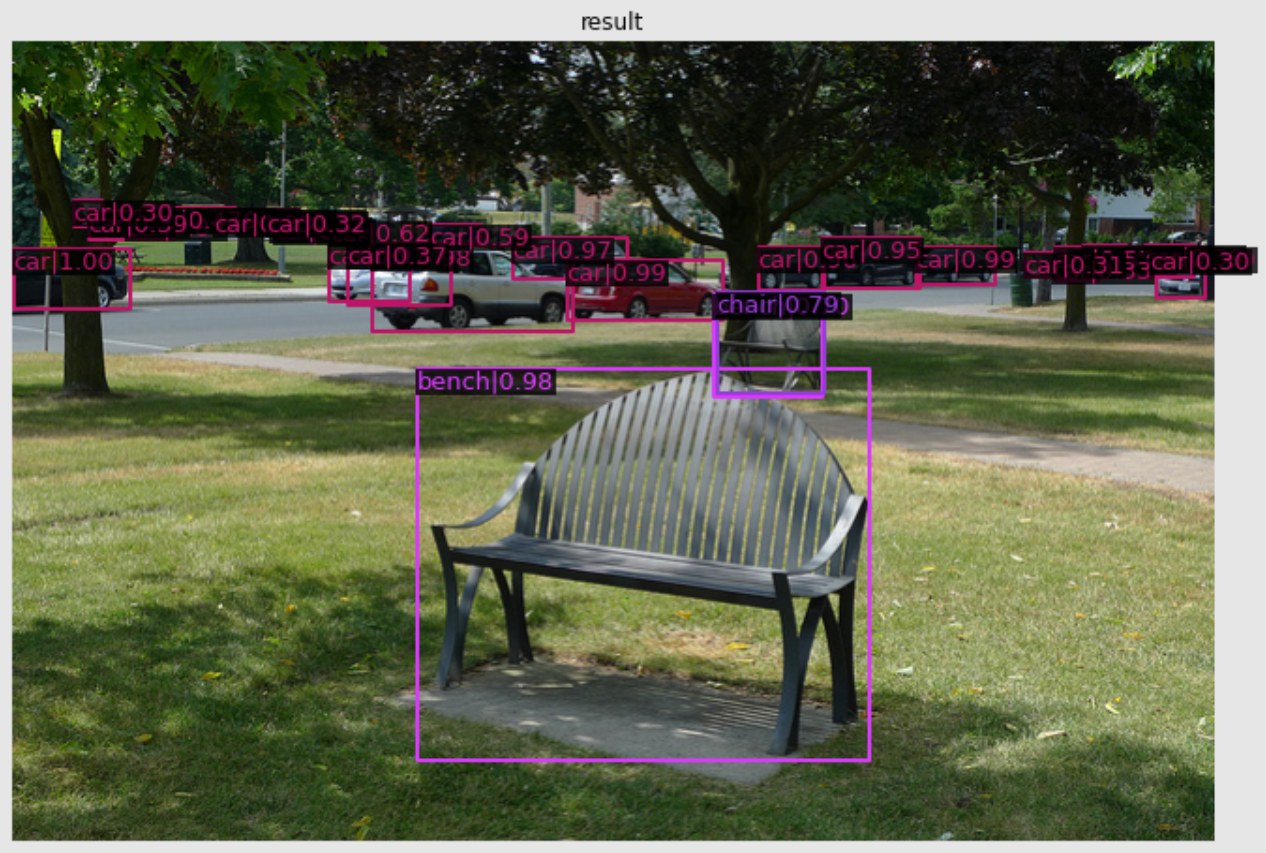

Now that the model has been constructed and loaded, let’s check how well it performs. We use MMDetection’s high-level inference_detector API, which was designed to make the inference process easier.

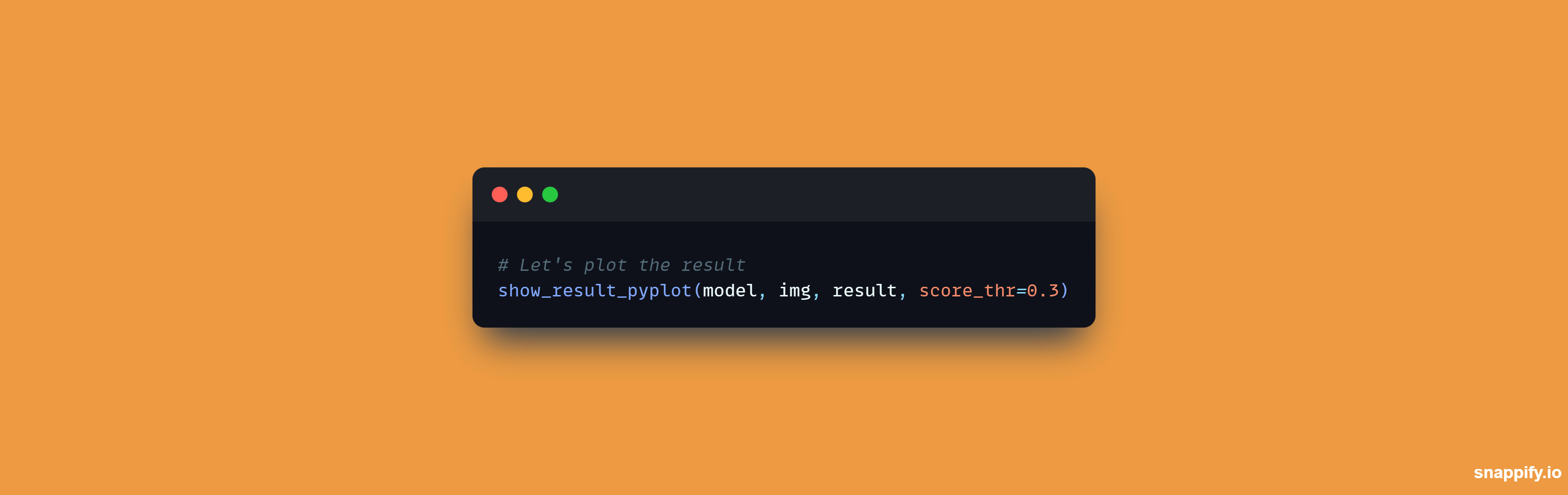
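As a sketch of this step, the code below wraps `inference_detector` and then post-processes its output. It assumes the MMDetection 2.x result format for box-only models: one entry per class, each holding rows of `[x1, y1, x2, y2, score]`. `run_inference` and `filter_detections` are hypothetical helpers, and the result used here is synthetic so the filtering logic can be tried without a model.

```python
def run_inference(model, image_path):
    """Run the detector on one image (requires MMDetection 2.x)."""
    from mmdet.apis import inference_detector  # lazy import

    return inference_detector(model, image_path)


def filter_detections(bbox_result, class_names, score_thr=0.3):
    """Flatten per-class rows of [x1, y1, x2, y2, score] and keep confident ones."""
    kept = []
    for class_id, rows in enumerate(bbox_result):
        for row in rows:
            x1, y1, x2, y2, score = (float(v) for v in row)
            if score >= score_thr:
                kept.append((class_names[class_id], score, (x1, y1, x2, y2)))
    # Highest-scoring detections first.
    return sorted(kept, key=lambda det: det[1], reverse=True)


# Synthetic result in the assumed format: one list of rows per class.
fake_result = [
    [[10, 20, 110, 220, 0.92], [15, 25, 100, 200, 0.12]],  # class 0
    [],                                                     # class 1: nothing found
    [[200, 50, 320, 180, 0.55]],                            # class 2
]
for det in filter_detections(fake_result, ["person", "bicycle", "car"]):
    print(det)
```

For models that also predict masks, the 2.x API returns a tuple of box results and mask results, so the box part would need to be unpacked first. In the same API version, `show_result_pyplot(model, img, result, score_thr=0.3)` from `mmdet.apis` draws the detections on the image.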

Result

Let’s have a look at the results.

Conclusion

In conclusion, the MMDetection toolbox compares favorably with other codebases such as SimpleDet, Detectron, and maskrcnn-benchmark in terms of efficiency and performance. With its large model collection, MMDetection is a state-of-the-art toolbox for object detection.

One of the nicest things about MMDetection is that, if you wish to change models, you can just point to a different configuration file, download a different checkpoint, and run the same code.

I recommend consulting the official MMDetection documentation if you run into problems with any of the steps or wish to carry out some of them differently.
