Several global sectors are starting to invest more substantially in machine learning (ML).

ML models can be initially launched and operated by teams of specialists, but one of the biggest obstacles is transferring the knowledge gained to the next model so that processes can be expanded.

To improve and standardize the processes involved in model lifecycle management, MLOps techniques are increasingly being used by the teams that create machine learning models.

Continue reading to find out more about some of the best MLOps tools and platforms available today and how they can make machine learning easier from a tool, developer, and procedural standpoint.

What is MLOps?

“Machine learning operations,” or “MLOps,” is a set of techniques for creating policies, norms, and best practices for machine learning models.

MLOps aims to ensure that the whole lifecycle of ML development, from conception to deployment, is carefully documented and managed, rather than having teams invest a lot of time and resources in it without a strategy.

The goal of MLOps is to codify best practices in a way that makes machine learning development more scalable for ML operators and developers, as well as to enhance the quality and security of ML models.

Some refer to MLOps as “DevOps for machine learning” since it successfully applies DevOps principles to a more specialized field of technological development.

This is a useful way to think about MLOps because, like DevOps, it emphasizes knowledge sharing, collaboration, and best practices among teams and tools.

MLOps provides developers, data scientists, and operations teams with a framework for cooperating and, as a result, producing the most powerful ML models.

Why Use MLOps Tools?

MLOps tools can perform a wide range of duties for an ML team; however, they are often split into two groups: platform administration and individual component management.

While some MLOps products focus only on a single core function, such as data or metadata management, other tools adopt a more all-encompassing strategy and provide an MLOps platform to control several aspects of the ML lifecycle.

Whether you’re looking for a specialized tool or a broader one, look for MLOps solutions that help your team manage these areas of ML development:

- Handling of data

- Design and modeling

- Management of projects and workplace

- ML model deployment and continuous upkeep

- Lifecycle management from beginning to end, which is typically offered by full-service MLOps platforms.

MLOps Tools

1. MLflow

MLflow is an open-source platform for managing the machine learning lifecycle, including experimentation, deployment, and a central model registry.

MLflow can be used by individuals and by teams of any size. The tool is library-agnostic.

It can be used with any machine learning library and any programming language.

To make it simpler to train, deploy, and manage machine learning applications, MLflow integrates with a number of machine learning frameworks, including TensorFlow and PyTorch.

Additionally, MLflow provides easy-to-use APIs that can be included in any existing machine learning programs or libraries.

MLflow has four key components that facilitate tracking and organizing experiments:

- MLflow Tracking – an API and UI for logging parameters, code versions, metrics, and artifacts when running machine learning code, and for later visualizing and comparing the results

- MLflow Projects – packaging machine learning code in a reusable, reproducible format for transfer to production or sharing with other data scientists

- MLflow Models – managing and deploying models from various ML libraries to a range of model serving and inference systems

- MLflow Model Registry – a central model store that enables cooperative management of an MLflow model’s whole lifespan, including model versioning, stage transitions, and annotations.
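To make the tracking idea concrete, here is a minimal sketch of logging parameters and metrics per run and then comparing the results. It uses only the Python standard library; the `Run` and `Tracker` classes are hypothetical names invented for this illustration and are not MLflow's API.

```python
import time
import uuid
from dataclasses import dataclass, field


@dataclass
class Run:
    """One training run: its parameters and the metrics it produced."""
    name: str
    params: dict = field(default_factory=dict)
    metrics: dict = field(default_factory=dict)
    run_id: str = field(default_factory=lambda: uuid.uuid4().hex[:8])
    started_at: float = field(default_factory=time.time)


class Tracker:
    """In-memory stand-in for a tracking store (hypothetical, not MLflow's API)."""

    def __init__(self):
        self.runs = []

    def log_run(self, name, params, metrics):
        """Record a finished run and return it."""
        run = Run(name=name, params=dict(params), metrics=dict(metrics))
        self.runs.append(run)
        return run

    def best(self, metric, higher_is_better=True):
        """Return the run with the best value for the given metric."""
        candidates = [r for r in self.runs if metric in r.metrics]
        pick = max if higher_is_better else min
        return pick(candidates, key=lambda r: r.metrics[metric])


tracker = Tracker()
tracker.log_run("baseline", {"lr": 0.1, "depth": 3}, {"accuracy": 0.81})
tracker.log_run("deeper", {"lr": 0.1, "depth": 6}, {"accuracy": 0.86})
tracker.log_run("slow-lr", {"lr": 0.01, "depth": 6}, {"accuracy": 0.84})

best_run = tracker.best("accuracy")
print(best_run.name, best_run.params, best_run.metrics)
```

A real tracking tool adds persistent storage, artifact handling, and a UI on top of this, but the core loop of logging each run and then comparing runs is the same.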

2. Kubeflow

Kubeflow is the ML toolkit for Kubernetes. By packaging and managing Docker containers, it aids in the maintenance of machine learning systems.

By simplifying run orchestration and deployments of machine learning workflows, it promotes the scalability of machine learning models.

It is an open-source project that includes a carefully chosen group of complementary tools and frameworks tailored to different ML needs.

Long ML training tasks, manual experimentation, repeatability, and DevOps challenges can be handled with Kubeflow Pipelines.

For several stages of machine learning, including training, pipeline development, and maintenance of Jupyter notebooks, Kubeflow offers specialized services and integration.

It makes it simple to manage and track the lifetime of your AI workloads as well as to deploy machine learning (ML) models and data pipelines to Kubernetes clusters.

It offers:

- Notebooks for interacting with the system through the SDK

- A user interface (UI) for controlling and monitoring runs, jobs, and experiments

- Reusable components and pipelines, so that end-to-end solutions can be designed quickly without rebuilding each time

- Kubeflow Pipelines, offered either as a key component of Kubeflow or as a standalone installation

3. Data Version Control

DVC, or Data Version Control, is an open-source version control system for machine learning projects.

It is an experimentation tool that helps you define your pipeline regardless of the language you use.

DVC utilizes code, data versioning, and reproducibility to help you save time when you discover an issue with an earlier version of your ML model.

Additionally, you can use DVC pipelines to train your model and share it with your team members. DVC can handle the organization and versioning of large amounts of data and store that data in an easily accessible manner.

Although it includes some (limited) experiment tracking features, it mostly focuses on data and pipeline versioning and management.

It offers:

- Storage-agnostic design, so a variety of storage types can be used

- Metric tracking

- A built-in way of joining ML stages into a DAG and running the entire pipeline from beginning to end

- Full code and data provenance, so the entire development of each ML model can be followed

- Reproducibility, by faithfully preserving the initial configuration, input data, and program code for an experiment
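The idea behind data versioning and reproducibility can be illustrated with content hashing: if the input data and parameters hash to the same fingerprint, the earlier result can be reused, and any change forces a re-run. The sketch below uses only the standard library. `fingerprint`, `StageCache`, and `count_rows` are hypothetical names for this example, and this is not how DVC itself is implemented or invoked.

```python
import hashlib
import json


def fingerprint(data: bytes, params: dict) -> str:
    """Hash the input bytes together with the stage parameters."""
    digest = hashlib.sha256()
    digest.update(data)
    digest.update(json.dumps(params, sort_keys=True).encode("utf-8"))
    return digest.hexdigest()


class StageCache:
    """Hypothetical cache: re-run a stage only when its fingerprint changes."""

    def __init__(self):
        self.results = {}    # fingerprint -> stored output
        self.executions = 0  # how many times the stage really ran

    def run(self, stage, data: bytes, params: dict):
        key = fingerprint(data, params)
        if key not in self.results:
            self.results[key] = stage(data, params)
            self.executions += 1
        return self.results[key]


def count_rows(data: bytes, params: dict) -> int:
    """Toy stage: count non-empty lines, skipping a header row if asked."""
    rows = [line for line in data.decode("utf-8").splitlines() if line.strip()]
    return len(rows) - (1 if params.get("has_header") else 0)


cache = StageCache()
v1 = b"id,label\n1,cat\n2,dog\n"
v2 = b"id,label\n1,cat\n2,dog\n3,bird\n"

first = cache.run(count_rows, v1, {"has_header": True})
again = cache.run(count_rows, v1, {"has_header": True})    # unchanged: reused
changed = cache.run(count_rows, v2, {"has_header": True})  # new data: re-run

print(first, again, changed, cache.executions)
```

Because the fingerprint covers both data and parameters, an old result can always be traced back to the exact inputs that produced it.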

4. Pachyderm

Pachyderm is a version-control program for machine learning and data science, similar to DVC.

Additionally, because it was built on Docker and Kubernetes, it can run and deploy machine learning applications on any cloud platform.

Pachyderm ensures that each piece of data ingested into a machine learning model is versioned and can be traced back.

It is used to create, distribute, manage, and keep an eye on machine learning models. A model registry, a model management system, and a CLI toolbox are all included.

Developers can automate and expand their machine learning lifecycle using Pachyderm’s data foundation, which also ensures repeatability.

It supports stringent data governance standards, lowers data processing and storage costs, and aids businesses in bringing their data science initiatives to market more quickly.

5. Polyaxon

Using the Polyaxon platform, machine learning projects and deep learning applications can be reproduced and managed over their entire life cycle.

The tool can be deployed into any data center or cloud provider, or it can be hosted and managed by Polyaxon. It supports all of the most popular deep learning frameworks, such as Torch, TensorFlow, and MXNet.

When it comes to orchestration, Polyaxon enables you to make the most of your cluster by scheduling jobs and experiments via its CLI, dashboard, SDKs, or REST API.

It offers:

- An open-source version that you can use right away, along with options for enterprises

- Coverage of the complete lifecycle, including run orchestration, and much more besides

- Thorough documentation, with technical reference documents, getting-started guides, learning materials, manuals, tutorials, changelogs, and more

- An experiment insights dashboard for monitoring, tracking, and evaluating each optimization experiment

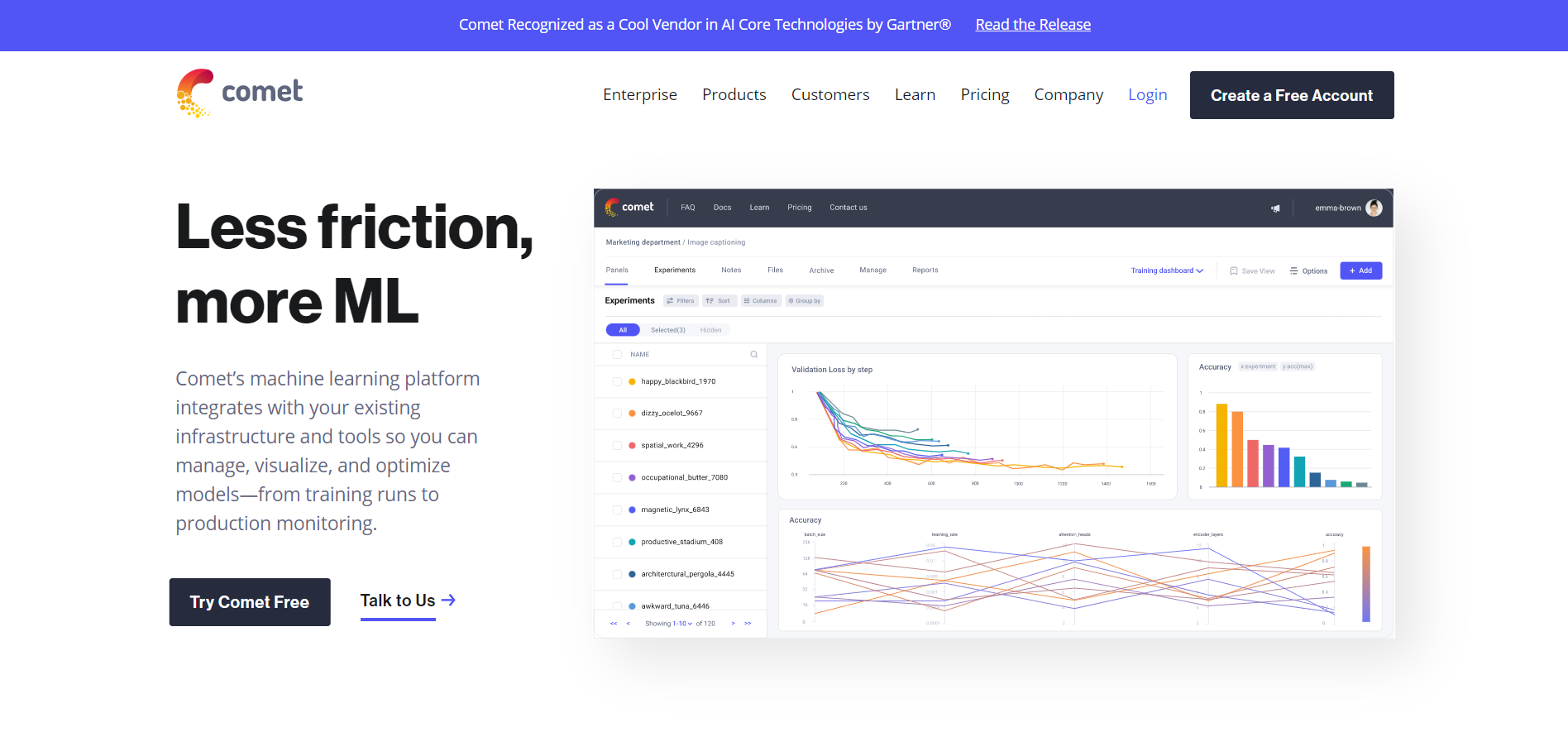

6. Comet

Comet is a meta machine learning platform for tracking, comparing, explaining, and optimizing experiments and models.

All of your experiments can be seen and compared in one location.

It works for any machine learning task, wherever your code runs, and with any machine learning library.

Comet is suitable for teams, individuals, academic institutions, businesses, and anyone else who wants to quickly visualize experiments, streamline work, and run experiments.

Comet is available as both a self-hosted and a cloud-based platform, so data scientists and teams can track, explain, optimize, and compare experiments and models in either setting.

It offers:

- Many features for sharing work among team members

- Several integrations that make it simple to connect to other tools

- Good compatibility with existing ML libraries

- User management

- Experiment comparison, covering code, hyperparameters, metrics, predictions, dependencies, and system metrics

- Dedicated modules for vision, audio, text, and tabular data that let you visualize samples

7. Optuna

Optuna is a framework for automatic hyperparameter optimization that can be applied to machine learning and deep learning as well as to other fields.

It offers a variety of state-of-the-art algorithms that you can select from (or connect to), makes it simple to distribute training across multiple machines, and provides clear visualizations of results.

It integrates with popular machine learning libraries such as PyTorch, TensorFlow, Keras, FastAI, scikit-learn, LightGBM, and XGBoost.

Its algorithms help users get results more quickly by pruning trials that do not look promising.

Written in Python, it automatically searches for the best hyperparameters. Optuna supports parallelized hyperparameter searches across multiple threads or processes without changes to the original code.

It offers:

- Distributed training on a single machine (multi-process) as well as on a cluster (multi-node)

- Several pruning strategies to speed up convergence (and use less compute)

- A variety of powerful visualizations, such as slice plots, contour plots, and parallel coordinate plots
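Stopping unpromising trials early (often called pruning) can be sketched with a simple median rule: a trial is abandoned as soon as its intermediate loss is worse than the median reported by earlier trials at the same step. This is a standard-library illustration under assumptions, not Optuna's API. The toy loss curve `loss_at`, the function `search_with_pruning`, and the fixed list of candidate learning rates are all made up for the example.

```python
import statistics


def loss_at(lr: float, step: int) -> float:
    """Toy loss curve: learning rates near 0.1 end up with lower loss."""
    return abs(lr - 0.1) + 1.0 / (step + 1)


def search_with_pruning(candidates, n_steps=5, warmup_trials=2):
    """Evaluate each candidate, stopping early when it trails the median."""
    history = {}   # step -> losses reported at that step by earlier trials
    best = None    # (final_loss, lr) of the best completed trial
    pruned = []    # learning rates whose trials were stopped early

    for lr in candidates:
        completed = True
        for step in range(n_steps):
            loss = loss_at(lr, step)
            seen = history.setdefault(step, [])
            # Compare against earlier trials only, then record this value.
            worse_than_median = (
                len(seen) >= warmup_trials and loss > statistics.median(seen)
            )
            seen.append(loss)
            if worse_than_median:
                pruned.append(lr)
                completed = False
                break
        if completed and (best is None or loss < best[0]):
            best = (loss, lr)
    return best, pruned


best, pruned = search_with_pruning([0.5, 0.01, 0.2, 0.9, 0.1, 0.05, 0.7])
print("best (loss, lr):", best)
print("pruned learning rates:", pruned)
```

In this run the two worst candidates are cut off after a single step, which is where the compute savings come from. A real optimizer would also sample new candidates adaptively rather than walking a fixed list.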

8. Kedro

Kedro is a free Python framework for writing data science code that is easy to update and maintain.

It brings ideas from best practices in software engineering to machine learning code. Python is the foundation of this workflow orchestration tool.

To make your ML processes simpler and more precise, you can develop reproducible, maintainable, and modular workflows.

Kedro incorporates software engineering principles like modularity, separation of concerns, and versioning into a machine learning environment.

It provides a standard, adaptable project template based on Cookiecutter Data Science.

The data catalog manages a set of lightweight data connectors used to save and load data across several file systems and file formats. It makes machine learning projects more efficient and makes it simpler to build a data pipeline.

It offers:

- Deployment on either a single machine or a distributed setup

- A pipeline abstraction that automatically resolves dependencies between Python functions and supports workflow visualization

- Modular, reusable code that facilitates team collaboration on a variety of levels and improves productivity in the coding environment

- Maintainable data science code, with the primary goal of overcoming the drawbacks of Jupyter notebooks, one-off scripts, and glue code
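The pipeline abstraction can be sketched as follows: each node declares the datasets it reads and the dataset it writes, and the execution order is derived from those declarations rather than written by hand. This example uses only the standard library (`graphlib`, Python 3.9+) and a plain dict as a stand-in for a data catalog. `NODES` and `run_pipeline` are hypothetical names, not Kedro's API.

```python
from graphlib import TopologicalSorter


def clean(raw):
    """Drop missing values."""
    return [x for x in raw if x is not None]


def scale(cleaned):
    """Scale values into the range 0..1 by dividing by the maximum."""
    top = max(cleaned)
    return [x / top for x in cleaned]


def summarize(scaled):
    """Return the mean of the scaled values."""
    return sum(scaled) / len(scaled)


# Each node: (function, input dataset names, output dataset name).
# Deliberately listed out of order to show that the order is derived.
NODES = [
    (summarize, ["scaled"], "mean"),
    (clean, ["raw"], "cleaned"),
    (scale, ["cleaned"], "scaled"),
]


def run_pipeline(nodes, catalog):
    """Run nodes in dependency order, reading and writing a dict 'catalog'."""
    producers = {output: (func, inputs) for func, inputs, output in nodes}
    # Map every output to the datasets it depends on, then sort topologically.
    graph = {output: set(inputs) for output, (_, inputs) in producers.items()}
    order = []
    for name in TopologicalSorter(graph).static_order():
        if name in producers:  # raw inputs have no producer and are skipped
            func, inputs = producers[name]
            catalog[name] = func(*(catalog[i] for i in inputs))
            order.append(func.__name__)
    return order


catalog = {"raw": [4, None, 2, 8]}
order = run_pipeline(NODES, catalog)
print(order, catalog["mean"])
```

Because nodes only communicate through named datasets, each function stays small and testable on its own, which is the kind of modularity the list above describes.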

9. BentoML

Building machine learning API endpoints is made easier with BentoML.

It provides a standard yet simplified architecture for moving trained machine learning models into production.

It enables you to package trained models, built with any ML framework, for use in a production setting. Both offline batch serving and online API serving are supported.

A high-performance model server and a flexible workflow are features of BentoML.

Additionally, the server offers adaptive micro-batching. A unified approach for organizing models and keeping track of deployment procedures is provided by the UI dashboard.

Its operating mechanism is modular and its configuration is reusable, which is intended to avoid server downtime. It is a flexible platform for serving, organizing, and deploying ML models.

It offers:

- A modular, adaptable design

- Deployment across several platforms

- A unified model format, model management, model packaging, and high-performance model serving

- One limitation to note: it cannot automatically handle horizontal scaling
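The micro-batching mentioned above can be sketched in a simplified, non-adaptive form: incoming requests are grouped into small batches so that the model is called once per batch instead of once per request. This is a standard-library illustration only. `predict_batch` and `serve_in_micro_batches` are hypothetical names, and a real adaptive implementation would also adjust batch size and wait time based on observed load.

```python
from typing import Callable, Iterable, List


def predict_batch(batch: List[float]) -> List[float]:
    """Toy model: one call handles a whole batch of inputs."""
    return [2.0 * x + 1.0 for x in batch]


def serve_in_micro_batches(
    requests: Iterable[float],
    model: Callable[[List[float]], List[float]],
    max_batch_size: int = 4,
):
    """Group requests into small batches and return (predictions, model_calls)."""
    predictions: List[float] = []
    model_calls = 0
    batch: List[float] = []
    for item in requests:
        batch.append(item)
        if len(batch) == max_batch_size:
            predictions.extend(model(batch))
            model_calls += 1
            batch = []
    if batch:  # flush the final, partially filled batch
        predictions.extend(model(batch))
        model_calls += 1
    return predictions, model_calls


preds, calls = serve_in_micro_batches(range(10), predict_batch, max_batch_size=4)
print(preds, calls)
```

Ten requests are served with three model calls instead of ten, which is the throughput benefit batching aims for.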

10. Seldon

Data scientists can create, deploy, and manage machine learning models and experiments at scale on Kubernetes using the open-source Seldon Core framework.

It supports TensorFlow, scikit-learn, Spark, R, Java, and H2O, to name just a few toolkits.

It also integrates with Kubeflow and Red Hat’s OpenShift. Seldon Core converts machine learning models or language wrappers (for languages such as Python and Java) into production REST/gRPC microservices.

It is one of the best MLOps tools for improving machine learning processes.

It is simple to containerize ML models and test for usability and security using Seldon Core.

It offers:

- Simpler model deployment with several options, such as canary deployment

- Model explainers for understanding why specific predictions were made

- An alerting system for monitoring production models and flagging issues when they arise
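A canary deployment sends a small, stable share of traffic to a new model version while the rest continues to hit the current one. The sketch below shows one common way to split traffic, by hashing a request ID into one of 100 buckets. It uses only the standard library; `route` and the `req-N` IDs are hypothetical, and this is not Seldon Core's configuration or API.

```python
import hashlib


def route(request_id: str, canary_percent: int) -> str:
    """Send a stable slice of traffic to the canary, the rest to the stable model."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # bucket in 0..99
    return "canary" if bucket < canary_percent else "stable"


request_ids = [f"req-{i}" for i in range(1000)]
counts = {"stable": 0, "canary": 0}
for rid in request_ids:
    counts[route(rid, canary_percent=10)] += 1
print(counts)
```

Hashing rather than picking at random means the same request ID always lands on the same version, so a user's experience stays consistent while the canary share is gradually raised.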

Conclusion

MLOps can speed up deployment, make data collection and debugging simpler, and improve collaboration between engineers and data scientists.

To help you choose the MLOps tool that best suits your needs, this post examined 10 popular MLOps solutions, most of which are open-source.
